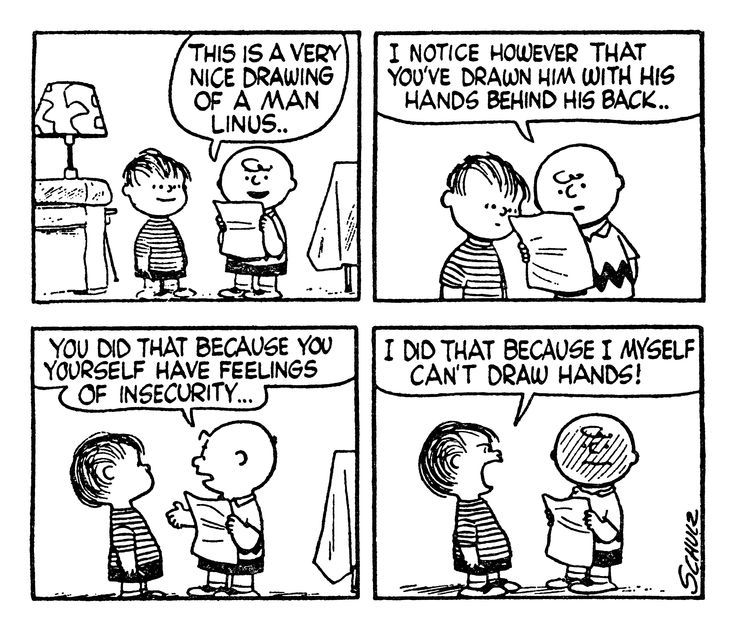

This is a fairly well-known Peanuts strip:

You can’t even count the fingers in the comic strip accurately.

I’m tempted to fire up Midjourney and see if I can have it recreate this masterpiece, except maybe I can’t be arsed. (Midjourney’s choice of Discord as a user interface was cursed, in my opinion. Also, compared to running on a local GPU, Midjourney is painfully slow because of the user load. Success is its own problem.)

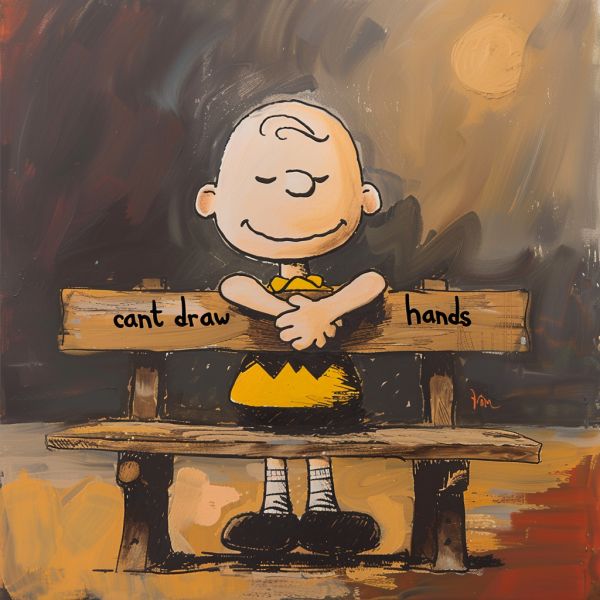

Midjourney AI and mjr: Peanuts cant draw hands as a real artwork

The improvement to text is pretty dramatic since the last time I tried any in Midjourney. What’s it been, months since they updated the training set?

One advantage of the “cloud” model of Midjourney is that new capabilities and improvements just “magically” show up since everything is server-side. Automatic1111, which I use on my game machine that has the big GPU, similarly auto-updates – one day you may fire it up and get a cascade of error messages, and another day it’s slower or faster. Cloud computing definitely killed the idea of “software release cycle” and moved us all into a state where it either works or it doesn’t – and if it doesn’t you just wait a couple days and maybe it’ll fix itself. Boeing is starting to build airplanes that way, too.

… and every single electric car I’ve ever travelled in is similar. In the next couple of years I’ll be buying a couple of cars. They’ll be internal combustion engined, Japanese, and if they perform like the couple I have now they’ll last for at least a decade and a half. I do wonder, by the time there’s absolutely no alternative to buying one of the newfangled iPhones on wheels that constitute the cars the government are trying to force us to buy nowadays, whether they’ll have reached a point where they’re fit for purpose. They’re certainly not at the moment, and judging by the friends’ cars I’ve travelled in over the last couple of years, they’re getting actively worse.

The complication being that airplanes have a hardware component. I got a glimpse of that world last week when I updated my Windows 10 machine. It churned away for at least a couple of hours, allegedly installing the accumulated updates. Finally, it stopped and notified me that those updates had failed, and it needed to spend even more time undoing them. The problem, I assume, is that someone at Microsoft put something in the update that is not compatible with all the hardware dating back to the time Win10 was introduced, which teh Interwebs tells me was 2015. For me, it was annoyance at considerable delays in doing the one thing I use Windows for every year (fill-in PDF tax forms). If you were in mid-air and suddenly found that the software no longer worked with that particular model of altimeter, the consequences might be more severe.

Improvement? I don’t doubt your authenticity here, but it looks exactly as if someone took a Midjourney output and simply slapped a text layer over it using Photoshop, GIMP or something similar. The text doesn’t follow the contour of the sign, it doesn’t appear to be done in the same style as the underlying pseudo-painting, and it appears to be a complete non-sequitur in an otherwise relatively coherent image.

I wonder if this is a post- or pre-processing step applied to the generated image that attempts to locate the kind of incomprehensible ‘text’ generated by the GAN and replace it with something like the output of an LLM, then paste it over the ‘text’. In other words, a cheap technical hack that, technically, addresses one of the persistent criticisms of these models but without actually solving the underlying problem, which is – as I keep saying – that the model doesn’t know anything, and is just performing complicated math to provide the kind of pixels most likely to be juxtaposed with the other generated pixels in response to the prompts.

I wonder if Midjourney is capable of rendering text in this way in any perspective other than a straight-on front view?

Sure, but also, this time it’s actual letters forming actual words.

This time, MidJR has generated something rather unnerving: irony. Along the same lines as “Ceci n’est pas une pipe”, this states “cant draw hands”, and there it is: five fingers and a thumb. What next?

Actually, the left looks more like a three-finger+thumb cartoon hand, but with two knobbly appendages coming down from the bottom of it – unless omitting the dividing lines between the right and left hands was some kind of artistic choice here.