[This is a second attempt at this posting; the first went way off into the weeds. This is a tricky topic!]

A fairly common statement regarding AI goes something like: “AI cannot be creative; all they do is re-mix existing stuff probabilistically.”

I characterize that as “The Human Supremacist” position, because it implies that there is some sort of “true creativity” of which only humans are capable. There are a lot of problems with that position, which I will attempt to explore herein. After that, I will describe some of my thoughts on how I experience the creative act, and compare it with how AIs implement creativity.

Midjourney AI and mjr: “a paper napkin diagram of how generative adversarial networks function.”

The human supremacist position is fairly easy to refute, along several lines of argument. I’ll try two here: the first is perhaps a bit of common sense, and the second is a bit more philosophical. First off, have you ever known a dog, a horse, or (arguably) a cat? (Let alone an otter, a crow, or a badger.) If you have spent a significant amount of time around some of these creatures, you’ll notice that, like humans, they display creativity. I don’t mean that a horse is going to grab a paintbrush in its teeth and kick out a masterpiece in the Renaissance style, but rather that these creatures exhibit a sense of fun, perhaps a sense of humor, and they solve problems. Philosophically, the human supremacist position fails if there is one credible instance of an AI or non-human exhibiting creativity, so the argument is almost done, right there.

But examples are fun: I once saw two crows sitting on a jersey barrier, and whenever a semi came by, they’d set their wings, levitate a bit into the air, then settle back down when the blast of air from the truck was gone. Those crows were exhibiting creativity: they had encountered a phenomenon, understood it, and figured out a way to use it for their amusement. I once knew a horse who literally lived to create trouble for his owner. He would do things like nuzzle his mommy while simultaneously stealing things out of her jacket pockets. Again, the animal was encountering a phenomenon, understanding it, and figuring out a way to use it for his amusement. My dog once communicated quite clearly to me about a novel problem: he had a rabbit’s skull stuck where he couldn’t pass it, after swallowing a rabbit whole. He would run a little ahead of me, crouch like he was trying to defecate, look at me, and whine. I was able to figure out what he was saying and got him to the vet, where a vet-tech removed the skull by crushing it with needle-nose pliers, thereby earning a significant tip.

The point of that is not to be disgusting, but to illustrate that a non-human was able to encounter a new situation (that was the first and only time that ever happened to a dog I know, which is why I used that example), understand it, and figure out a way to resolve the problem by communicating with its person. In that example, I believe the dog exercised creativity in several ways. I suppose I should also add that I’ve seen humans who are less effective at problem-solving than some dogs, and problem-solving is the ultimate creative process. You don’t need to make a big leap from “solving a problem” to “painting a painting in the Renaissance style” – painting a painting is a collection of smaller problems, solved together. So is sending a rocket to the moon, or inventing firearms.

Next, we argue against the human supremacist position philosophically. Consider the great game of chess. Having said that, I could stop right there, but I won’t. Chess is one of mankind’s great achievements: a day to learn and a lifetime to master. The game is a distillation of strategy – players sit there and analyze the board, think about their opponents’ moves, and try to come up with the best counter-moves that lead toward a victory. Strategizing is a creative process: you have to assemble a sequence of moves many moves ahead, rejecting the bad ones and choosing the good ones. Of course, I am not playing fair: I used chess as an example because the human supremacist position used to be that humans could still beat computers at chess. Until they couldn’t. There will never be another human chess champion. Then it was Go, and so on. We are forced to either reject the idea that playing chess is a creative process, or come up with an argument that somehow differentiates human chess from AI chess. Be careful, that’s a trap! In discussions with human supremacists, I’ve often heard the rejoinder that chess-playing computers are not creative because they a) look farther ahead in moves than a human can and b) embed massive lookup tables of what the best move is in what situation. The AI is not creative, they say; it’s exhaustive rote learning. Unfortunately, that ignores the fact that human chess masters spend a huge amount of time studying opening moves and prior games by other masters, and it’s widely understood among masters that being able to look more moves ahead makes a critical difference. If human chess masters are not showing creativity, then what are they doing?

One possible thing that they are doing is eliminating all the worst moves in front of them. If you had, say, a way of eliminating the worst moves, then scoring and weighting the likely value of the remaining candidate moves, you’d wind up with a pretty well-played chess game! You know, like the old joke: “How to sculpt Michelangelo’s David: take a big piece of marble and cut away everything that does not look like Michelangelo’s David.” That, by the way, is a fair high-level description of how AI image generators work.
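The “eliminate the worst moves, then score and weight the rest” idea above can be sketched in a few lines of Python. This is a minimal toy under stated assumptions: the move names and scores are made up, and `choose_move`, `prune_fraction`, and `temperature` are hypothetical names. Real chess engines search many moves deep rather than picking from a single list of pre-scored candidates.

```python
import math
import random


def choose_move(candidates, prune_fraction=0.5, temperature=1.0, rng=None):
    """Toy move picker: cut away the worst moves, then weight what survives.

    candidates: dict of move -> heuristic score (higher is better); the scores
    are hypothetical stand-ins for a real engine's evaluation.
    Returns (chosen_move, {surviving_move: probability}).
    """
    rng = rng or random.Random()
    # Step 1: eliminate the worst moves outright, always keeping at least one.
    ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)
    keep = max(1, math.ceil(len(ranked) * (1 - prune_fraction)))
    survivors = ranked[:keep]
    # Step 2: weight survivors with a softmax; subtracting the best score
    # keeps math.exp from overflowing.
    best = survivors[0][1]
    weights = [math.exp((score - best) / temperature) for _, score in survivors]
    total = sum(weights)
    probs = {move: w / total for (move, _), w in zip(survivors, weights)}
    # Step 3: sample a move in proportion to its weight.
    moves = list(probs)
    chosen = rng.choices(moves, weights=[probs[m] for m in moves], k=1)[0]
    return chosen, probs


if __name__ == "__main__":
    scores = {"e4": 0.9, "Nf3": 0.8, "Qh5": -0.2, "h4": -0.5}
    move, probs = choose_move(scores, rng=random.Random(1))
    print(move, probs)
```

Raising `prune_fraction` cuts away more marble before the weighting step; lowering `temperature` makes the final choice more deterministic.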


If the human supremacist wishes to argue that an AI art generator is not showing creativity because it is simply probabilistically regurgitating a deep and vast model of existing art, the rejoinder must be, “Michelangelo studied art-making and practiced and was deeply familiar with art, too!” David, after all, was not just some guy; Michelangelo was referencing thousands of years of mythology and a whole lot of Greco-Roman statuary. He no more created David out of whole cloth than an AI does. If an AI is merely derivative, so is Michelangelo.

We don’t really know what creativity is, and our lack of knowledge is showing.

One final part of the philosophical argument is that, perhaps, creativity is something that can surprise us. That’s a fairly typical reaction to a masterpiece, in fact: “how the heck…? wow!” Well, if you look at some of Michelangelo’s early sculpture, such as The Virgin of the Stairs, it’s not great, but it shows that he’s got talent that will eventually refine into master-class work. But if you ask a human or an AI to create something (with rough direction) and are surprised by what it comes up with, you are possibly experiencing creativity.

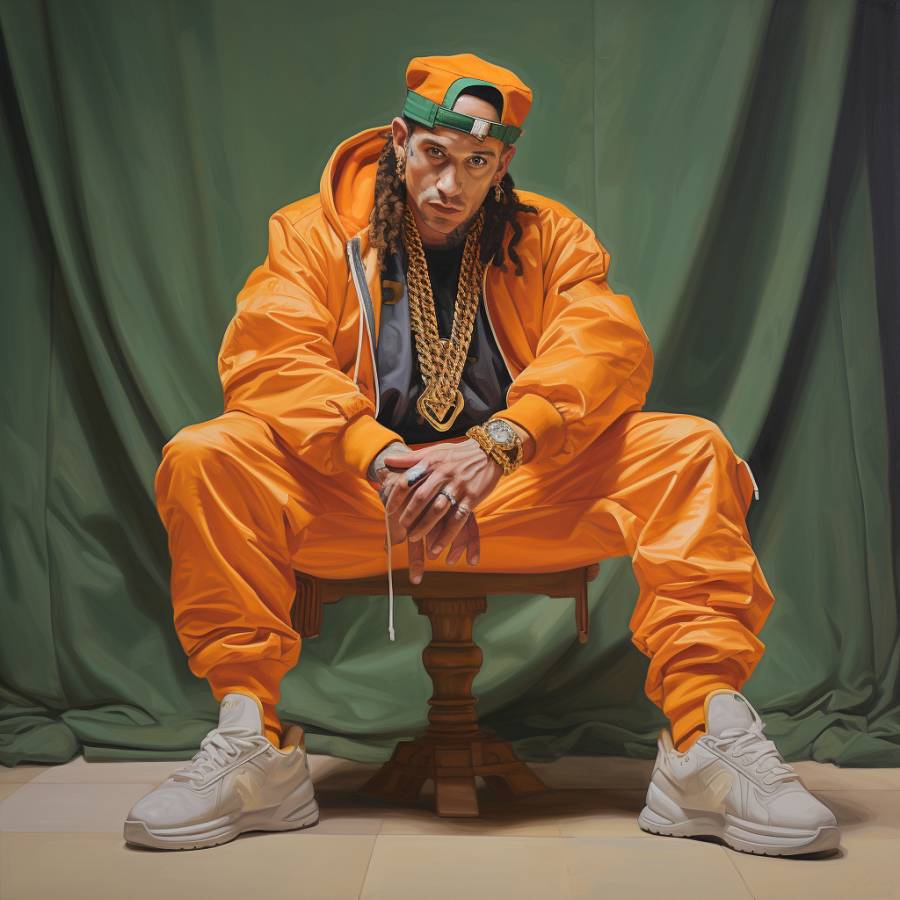

Midjourney AI and mjr: “michaelangelo’s david hip hop version”

The way that masters like Michelangelo happen is a generative feedback loop; they are not born knowing how to be insanely creative. They make something, like The Virgin of the Stairs, and critics, paymasters, and other artists offer helpful advice like “her hands look like boxing mitts.” The chess player who will eventually be a master is also in a generative feedback loop: they try different moves and lose, or sometimes someone explains something better, and gradually they stop losing. Their desire to succeed keeps them trying, remixing their past efforts to create future work, and for every Michelangelo there are thousands of humans who just never made it as sculptors. That is also a generative feedback loop: some artists never blossom to their full potential because they got too much negative feedback, like “for a sculptor, you’re a pretty good brick-layer.”

It’s the concept of generative feedback loops that is crucial to this whole problem, and now it’s time to take a look at the experience of being creative.

I’m going to have to assume that you’ve had the experience of being creative, even if only in problem-solving. In fact, to make the argument simple, let’s consider problem-solving and artistic creativity to be the same thing: you’re trying to do something, perhaps with direction from outside (“can you fix the wiring in the network closet?”) or from inside (“I’m going to sculpt a figure based on the Bible.”) Your first question to yourself is, “Self? Where do I start?” and that is the beginning of the generative feedback loop. “Maybe I should rip all the wires out and replace them” or “Maybe I should do a sculpture of the farmer who owned the manger that Jesus’ family took over” – something from our cultural background or existing knowledge bubbles up a few suggestions. Where do they come from? I submit that is the beginning of the great engine of creativity. When we learn to problem-solve or sculpt or whatever, we learn to rapidly hypothesize candidate ideas, which we rapidly accept or shoot down as we consider the information we have about the problem before us. So, if you’re the sculptor considering investing months of your life to produce a marble statue of a grumpy farmer, you might shrug, “that’s a bad idea,” and then realize, “how about Mary Magdalene?” And then, “hey, what if she’s the only person crying and everyone else is just dealing with the politics of the incident?” Similarly, the wiring closet: you look inside and realize that there are critical systems in there you can’t take offline, so “just rip it out” won’t work. And then, “hey, maybe I just trace out the critical systems and replace everything else?” The point is that past knowledge and experience are applied against our first candidate ideas, and we refine them iteratively. That’s the generative feedback loop.

Let’s generalize the example: suppose that you have a “hypothesizer” that takes your past experience and information about what you are doing, and rapidly burps out a whole bunch of half-baked ideas. I used the term “half-baked” right there because, in fact, we humans have terms like that which expose how we think about our own creativity – that tells us something. So the half-baked ideas begin to swarm out of the hypothesizer and they hit our first-order bullshit filter. We may not even pay a lot of attention to it, as it flits through the sea of options, going “nope” “nope” “nope” “hey maybe.” The “hey maybe” suggestion is the “how about Mary Magdalene?” or some other approach. It’s my suspicion that if you assign yourself, right now, a problem, and assess how you think about it, it may match the experience I am describing. If it does, congratulations, you’re being creative. But don’t pat yourself on the back.
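The hypothesizer and first-order bullshit filter described above amount to a generate-and-test loop. Here is a minimal Python sketch of that reading; the idea fragments, the `dislikes` list, and all function names are hypothetical, and nothing here claims to model how a brain actually does it.

```python
import random

# Hypothetical idea fragments for the sculptor example; purely illustrative.
SUBJECTS = ["grumpy farmer", "Mary Magdalene", "the innkeeper", "a donkey"]
TREATMENTS = ["weeping", "bored", "arguing politics", "asleep"]


def hypothesizer(rng, n):
    """Rapidly burp out n half-baked (subject, treatment) ideas."""
    return [(rng.choice(SUBJECTS), rng.choice(TREATMENTS)) for _ in range(n)]


def first_order_filter(idea, dislikes):
    """Cheap gut check: "nope" if the idea touches anything we already dislike."""
    subject, treatment = idea
    return subject not in dislikes and treatment not in dislikes


def brainstorm(n=50, dislikes=("grumpy farmer", "asleep"), seed=0):
    """Return the distinct "hey maybe" ideas that survive the filter, in order."""
    rng = random.Random(seed)
    maybes = []
    for idea in hypothesizer(rng, n):
        if first_order_filter(idea, dislikes) and idea not in maybes:
            maybes.append(idea)  # "hey maybe"
        # Anything else is a "nope" and gets no further attention.
    return maybes


if __name__ == "__main__":
    for subject, treatment in brainstorm():
        print(f"hey maybe: {subject}, {treatment}")
```

The filter is deliberately cheap: it only rejects, and anything that survives still has to face slower, more careful judgment afterward.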

Midjourney AI and mjr: “a marble statue of Mary Magdalene by Michaelangelo”

An AI’s knowledge-base is a big tree of probabilities that are contingent on other probabilities, and so on. For example, an AI art knowledge-base might give certain activation levels if you match for “biblical art”, with a certain probability that “biblical art” is followed by “Michelangelo’s David”, but it also has other probabilities for “biblical” that have different activation levels around “Mary Magdalene”. As the human supremacists point out, it’s a probability game. The technique worked well in the 1980s for optical character recognition: computers could learn to recognize the pixels that make up a ‘9’ or whatever. It’s just a couple of steps, with bigger technology and bigger knowledge-bases, to a recognizer that is good at probabilistically recognizing pretty much any image.
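As a toy illustration of the “probability game” applied to recognizing a ‘9’, here is a tiny Bernoulli naive Bayes classifier in Python. It learns, for each digit, the probability that each pixel is inked, then picks the digit whose probabilities best explain a new bitmap. The 3x5 bitmaps and all names are hypothetical, and this is not a claim about how any particular OCR system or image model is built.

```python
import math

# Hypothetical 3x5 bitmaps, flattened row by row: '#' is ink, '.' is paper.
TRAINING = {
    "0": ["####.##.##.####", ".#.#.##.##.#.#."],
    "1": [".#.##..#..#.###", ".#..#..#..#..#."],
    "9": ["####.####..####", "####.####..#..#"],
}


def train(examples):
    """Estimate P(pixel is ink | digit) for every pixel, with Laplace smoothing."""
    model = {}
    for label, bitmaps in examples.items():
        n = len(bitmaps)
        model[label] = [
            (sum(b[i] == "#" for b in bitmaps) + 1) / (n + 2)
            for i in range(len(bitmaps[0]))
        ]
    return model


def classify(model, bitmap):
    """Return the most probable digit and the log-likelihood score for each."""
    scores = {}
    for label, probs in model.items():
        # Treat pixels as independent (the "naive" assumption) and add log-probs.
        scores[label] = sum(
            math.log(p if pixel == "#" else 1 - p) for pixel, p in zip(bitmap, probs)
        )
    return max(scores, key=scores.get), scores


MODEL = train(TRAINING)

if __name__ == "__main__":
    smudged_nine = "####.####..#.##"  # a '9' with one pixel out of place
    print(classify(MODEL, smudged_nine)[0])
```

The smudged ‘9’ still scores highest as a ‘9’, because most of its pixels agree with what the model learned; nothing in the model is a stored picture of a ‘9’, only per-pixel probabilities.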

The AI art generator does not have pixel maps in it somewhere that define how it sees Mary Magdalene. It actually uses the same approach Michelangelo used: it takes a bitfield full of noise and starts iteratively reducing the values of pixels that don’t match “Mary Magdalene by Michelangelo.” If you keep iteratively removing marble, you wind up with a sculpture, and the quality is governed by the precision that can be achieved in the match. So, that’s how that’s done. But where’s the creativity?
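The “start from noise and cut away what doesn’t match” loop can be sketched as follows. This is a deliberately simplified analogy, assuming the matcher already knows the finished target (`carve` and its parameters are hypothetical names); diffusion-style image generators instead use a trained network to estimate, at each step, what noise to remove.

```python
import random


def carve(target, steps=50, step_size=0.2, seed=0):
    """Start from a field of noise and repeatedly cut away what doesn't match.

    `target` is a hypothetical stand-in for what a trained model has learned the
    prompt "looks like"; a real diffusion model never sees a finished target and
    instead uses a learned network to estimate which noise to remove.
    Returns the final image and the mismatch after each step.
    """
    rng = random.Random(seed)
    image = [rng.random() for _ in target]  # start from pure noise in [0, 1)
    mismatch_history = []
    for _ in range(steps):
        # Nudge every pixel a fraction of the way toward what the matcher expects.
        image = [px + step_size * (t - px) for px, t in zip(image, target)]
        mismatch = sum(abs(t - px) for px, t in zip(image, target)) / len(target)
        mismatch_history.append(mismatch)
    return image, mismatch_history


if __name__ == "__main__":
    # A tiny 4x4 "statue": a bright diagonal on a dark background.
    statue = [1.0 if row == col else 0.0 for row in range(4) for col in range(4)]
    final, history = carve(statue)
    print(f"mismatch after 1 step: {history[0]:.3f}, after 50: {history[-1]:.6f}")
```

Each step removes a fixed fraction of the remaining mismatch, so the image converges on the target; how closely it gets there depends on how precise the match is, as in the marble analogy.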

Imagine you have a generative feedback loop: there’s a hypothesizer that throws out ‘ideas’ (or call them something else), and there’s another trained matching knowledge-base that has passing familiarity with all human art and also with what people like. Where might that knowledge-base come from? Well, one proxy for “what is good art?” would be whether it’s in a human museum. Or, maybe, whether it’s highly rated on an art site like DeviantArt. But it may not even be necessary to have a proxy for “what is good art?” because the knowledge-base is going to get more training versions of Michelangelo’s David than of Ferd Burfle’s Lego version of David. No offense, Ferd, it’s just a numbers game. So the hypothesizer throws out ideas at a speed that only a computer can manage, while the art critic shoots down the worst ideas (the ones resulting in lower activations), and perhaps it iteratively keeps pruning away bad ideas until it has one remaining idea. Is the result a good idea? Well, by definition, there’s a high probability that someone will like it. What I have just described is a Generative Adversarial Network, or GAN. I argue that that’s how computers can be creative, and that is how humans are creative.
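The hypothesizer-plus-critic loop above can be sketched in Python. Strictly, this is a generate-and-test loop rather than a literal GAN: in an actual GAN, the generator and discriminator are neural networks trained against each other by gradient descent. Here the critic is a hypothetical hand-built scorer that, as in the Ferd example, simply rewards whatever it has seen most often; `CORPUS`, `train_critic`, `hypothesize`, and `create` are illustrative names.

```python
import random
from collections import Counter

# Hypothetical training corpus of (medium, subject) pairs: the critic simply sees
# some works far more often than others. No offense, Ferd.
CORPUS = (
    [("marble", "David")] * 50
    + [("Lego", "David")] * 1
    + [("marble", "Mary Magdalene")] * 20
    + [("oil", "Churchill")] * 10
)


def train_critic(corpus):
    """Build a critic that scores an idea by how familiar it and its parts are."""
    pairs = Counter(corpus)
    media = Counter(medium for medium, _ in corpus)
    subjects = Counter(subject for _, subject in corpus)

    def critic(idea):
        medium, subject = idea
        # Whole-idea familiarity dominates; familiar parts earn partial credit.
        return pairs[idea] + 0.1 * (media[medium] + subjects[subject])

    return critic


def hypothesize(rng, n, media, subjects):
    """Throw out n random (medium, subject) combinations, good or bad."""
    return [(rng.choice(media), rng.choice(subjects)) for _ in range(n)]


def create(n=64, seed=0):
    """Generate a pool of ideas, then prune the worse half until one remains."""
    rng = random.Random(seed)
    critic = train_critic(CORPUS)
    media = sorted({m for m, _ in CORPUS})
    subjects = sorted({s for _, s in CORPUS})
    pool = hypothesize(rng, n, media, subjects)
    initial_pool = list(pool)
    while len(pool) > 1:
        pool.sort(key=critic, reverse=True)
        pool = pool[: len(pool) // 2]  # shoot down the worse half
    return pool[0], initial_pool, critic


if __name__ == "__main__":
    winner, ideas, critic = create()
    print(winner, critic(winner))
```

Because each round keeps the better-scoring half, the survivor is always the idea in the original pool that the critic likes best, which is how popularity in the training data ends up steering the result.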

I think that if you consider your own creative experience honestly, you’ll see that this is how it is. We absorb feedback into our creative processes approximately the same way the AI does. We see this all the time, everywhere. Have you ever listened to Stevie Ray Vaughan and thought, “wow, you can see how Jimi Hendrix’ playing influenced him” (even when he’s not outright playing Jimi Hendrix songs)? Have you ever seen a photo by some big-name photographer and thought, “that’s reminiscent of some other big-name photographer”? AIs are not just probabilistically regurgitating from Caravaggio any more than you are.

In fact, just as I wrote that, I thought, “it’d be fun to add an illustration there of, um… what about Yousuf Karsh’s portrait of Churchill as painted by Caravaggio?” Where is the creativity in that scenario? I have been told I am a highly creative person (I agree, I think, though age has slowed my creativity down and perhaps sharpened it), and I am able to do the Generative Adversarial part of having an idea so fast that it’s almost subliminal. But, as I was coming up with that idea, I distinctly thought, “I hate Churchill,” and then, “I love Karsh’s portrait of Churchill.” And, of course, I am a huge fan of Caravaggio: the only reason I worked him into this example at all is that my creative engine’s hypothesizer is likely to burp out Caravaggio whenever I am reaching for an idea involving great art. I sure as hell wouldn’t think of Jackson Pollock for a portrait of Churchill, because the first-order bullshit filter goes “nope.”

Midjourney AI and mjr: “a jackson pollock version of karsh’s portrait of winston churchill”

It’s all just probabilities in action.

My argument, then, is that Generative Adversarial Networks are how humans and AIs both create. Ours is a knowledge-base curated by our growing up in a particular civilization, and the AI’s is a knowledge-base curated by … growing up in a particular civilization. We share the same proxies for “what is good”, too: the fact that the AI sees more pictures of Jimi Hendrix than of Stevie Ray Vaughan affects its creative output in somewhat the same manner as it does our own.

The human supremacist has a serious problem here. If they wish to maintain that there is something somehow special about human creativity, they have to be able to define “creativity” in a way that an AI cannot satisfy. Obviously, I’m a reductionist who does not believe in “souls” or spirits or anything magically special about humans, and I certainly don’t think humans are different from, say, orangutans – our near cousins, who are also self-aware and creative. That brings me to a final point, which is that AI networks were not creative until fairly recently, when some researchers sat down and implemented a process of human creativity in software. It may be tempting to hold out that humans are special because we’re self-aware, but I predict that won’t be a particularly high bar to jump over. Like creativity, we don’t fully understand self-awareness, but if an AI is programmed to react indistinguishably from a self-aware being, whether it’s really self-aware or not is going to become a point of pointless contention. Human supremacists will have to face the inevitable challenging question: “if you don’t know what self-awareness is, how do you know that you are self-aware?”

I assumed the guy in orange was a response to the prompt “What if Vin Diesel dressed up as Ali G?”

Handled quite well in ST:TNG season 2, episode 9 “The Measure of a Man”… The pivotal scene is here: https://m.youtube.com/watch?v=ol2WP0hc0NY

problem-solving is the ultimate creative process

I am not sure that I agree with that unless you make a very broad definition of “problem-solving”. I don’t think Stravinsky looked at The Rite of Spring as an adventure in problem-solving, short of needing to compose something for Diaghilev’s Ballets Russes. What is it that allowed Picasso to create both Guernica and the “found art” piece Bull’s Head? Is that the same process that Shockley’s team used to create the point contact transistor?

It’s a tough question. All I can say is that in my own admittedly limited experience, I don’t consider my “creativity” when designing an electronic circuit (or even a lecture on electrical theory) to be the same sort of thing I experience when I write a piece of music (and certainly not when I am “jamming” with fellow musicians). The former is a much more rational, step-by-step process than the latter, which tend to be more serendipitous. Maybe that’s just because I’m neither an Einstein nor a Stravinsky. I don’t know.

You’re making a lot of assumptions here. AIs are designed to mimic the way humans seem to work, but they don’t work exactly the same way. There is a lot about how the mind works internally that isn’t understood yet. At the very least, AIs don’t make the same sort of mistakes humans do and don’t make decisions about overall image composition in the same way.

I’m not a believer in human supremacy but as far as I can tell we don’t know enough about how the human mind actually works to answer the question about creativity definitively yet.

The question makes me wonder if you can get a current image AI to create its own new art style.

I think the more powerful question to pose to the human-supremacist is “if you don’t know what self-awareness is, how can you prove to me that you are self-aware?”. Never mind the machine – how do I know YOU are thinking?

A book I have never stopped thinking about since I first read about it, and especially not since I first read it, years later, was “Metamagical Themas” by Douglas Hofstadter. I’m thinking here of a specific chapter entitled “Variations on a theme as the crux of creativity”, which explores some of what you discuss here, and much, much more. It’s notable that he does discuss the modelability of the concept of a “concept” and how that could be computerised for AI purposes… in October 1982. For reference, that was the month Q*Bert came out, and that was the year the Commodore 64 was released.

That year, the most powerful computer in the world was the Cray X-MP. This was a machine that cost $15m, was 2.6m tall and 2m in diameter, weighed over FIVE TONNES and consumed 345kW. It had 128MB of RAM, 38.5GB hard drive(s), and was capable of 800MFLOPS – so, y’know, nowhere near as good as my bottom-of-the-range Huawei phone. That should give anyone reading this an idea of what the cutting edge of tech was at that point, while Hofstadter was already wrestling with how you’d make a computer creative and coming up with the arguments above.

I really can’t recommend the book enough – it has aged phenomenally well.

I think I disagree with the general argument here, but a few points stuck out as problematic.

The first is this:

Isn’t this a question of how we choose to define it? Maybe you mean that we don’t know what the mechanism of (biological?) creativity is, but I would say that there are several mechanisms. One is the feedback loop that you describe, but I don’t think it’s the only one.

If you really mean that we don’t know what creativity is, then it seems hard to defend the position that an AI can do it.

Like jimf @3, I also take issue with this. But I suppose it depends on how you define creativity (and also how you define ultimate). I think you would have to construct an argument for why you think this is true, because I don’t understand why you think that it is.

I think there’s a qualitative difference between the problem-solving of a dog with constipation and other forms of creativity. Your dog does not understand why* his behavior will solve his problem. He just knows that Marcus solves things for him, and he needs to let you know that there’s a problem. He has no idea that the solution will involve a car ride and a pair of pliers.

I just launched a creative project of my own: a D&D campaign set in a fictional world of my own creation. I spent a lot of time building my world, and the first step of doing so is very different from the process you described. I analyzed more than a dozen different fictional worlds I was familiar with, including some that I really like and a lot that I don’t care for, and I systematically broke down various components of their construction to analyze what works well and what doesn’t work to build a compelling world.

When I started building my own world, I had a very good idea why some ideas were good or bad, and I also knew what ideas I had never seen before (for example, none of the fantasy worlds featured a hollow planet, which is an idea I find interesting) and whether incorporating those ideas would be a good or bad idea.

I think this is very different from a simple “generative adversarial network,” and it’s qualitatively different from how current AIs work. Machine learning can only iteratively test whether or not a strategy works. It does not understand why a strategy works.

* With the caveat that “why” is a question that can always have a more and more granular answer, depending on one’s level of understanding.

I suspect that folks hang on to the human supremacist position because it keeps their ideological and/or theological beliefs from totally falling apart.

Years ago, Mortimer Adler wrote a book entitled The Difference of Man and the Difference It Makes in which he argued that humans are the only entities worthy of ethical consideration because our languages include common nouns (yes, really), and asserted without evidence that being able to distinguish between kinds of things and particular things requires that we have some kind of immaterial aspect to our existence that (also asserted without evidence) other animals lack. It was an argument for human supremacy because of philosophical idealism, not the other way around; but I think either belief evaporates without the other to back it up.

In fairness, the book was written in 1967, way before recent research into animal behavior, and while the Turing test in its original form (current AI has moved the goalposts) was still generally accepted.

@6:

Why yes, yes it is. And the problem there is that there are three sets of people:

1. The set of people who define it as “whatever thing I need it to be to filter between myself (and other humans) and everything else, because I feel sad if I’m made to consider the possibility that I’m not the most awesome and special thing in the universe.”

2. The set of people who don’t have a clue how you define it, but suspect that the existence of such filter is on highly dubious grounds, both philosophically and empirically.

3. The set of people who have a tight formal definition of exactly what “creativity” is that can be tied to concrete observations of any putative “creative entity”, that is accessible to anyone capable of understanding the mathematics behind it (such a group being not prohibitively narrow) and thus utterly convincing to anyone not already locked into a contrary worldview.

And the cardinality of set 3 is 0.

OP- hard agree.

JM @4- can an AI come up with its own style? yes. we’re already there. still needs a human to say “ok, that’s it bro” – but i can easily imagine workarounds for that, within the reach of right now, and probably some playful nerd has already made it happen somewhere. people who earnestly buy the idea *everything* they do is stolen just don’t have knowledge of the breadth of art or experience with the AI tools to know better.

mark @6- i find some of your arguments sound even tho i favor mjr’s position, but as a visual artist, i find the idea that problem-solving is a creative act compelling. i can especially see why a programmer/artist like mjr would think that.

i’m also more inclined to buy that the dog is legitimately solving a problem, and as all cognition is a grade from 1 or 0 to rocket surgery, it’s provincial to draw boundaries about what parts of that grade are the special ones.

i think the fact these generative AIs don’t understand what they’re doing (as u did with setting design) is a temporary thing. the more these boundaries are pushed, the less special humanity looks. this goes both ways, as we look at animals beneath us in cognitive complexity and are endlessly surprised by what they can do – even when we disregard the wishful thinking of people who want to believe nonsense on the topic.

bill @7- that’s interesting stuff, thanks!

@xohjoh2n #8,

I think I would count myself in Set 4: The set of people who are linguistic pragmatists, who don’t think that words need precise formal definitions as long as all people involved in the conversation have the same idea about what it means.

I don’t need Marcus to give a formal definition of creativity, I just need to know what is meant by “we don’t know what [it] is” before I can consider that argument.

——

@Great American Satan #9,

I agree with your last paragraph about these limitations of AI being temporary. I would reject being described as a “human supremacist” as Marcus describes it because I don’t think there’s anything stopping future AIs from doing human-like creativity. I just don’t think that the AIs that exist currently are there yet, and I think it’s a limitation of their current mechanisms of function, not just their ability to judge whether they put the right number of fingers on a hand.

@5 sonofrojblake

A tad off-topic, but regarding the Cray X-MP, I took a tour of NCAR (National Center for Atmospheric Research) back in the 1980s. They had two Crays at the time. The guide indicated that during the winter, the building got something like 40% of its heating from the two computers. I can’t be sure of the specifics, but I recall that it had some manner of active cooling system (like coolant tubes running through the PC boards or surrounding the CPUs). The design reminded me of some kind of modern seating unit in a railway station or airport.

I know I’m wading in way over my head here, but to me, creativity requires motivation to create, which AIs don’t currently have. They follow instructions and rules but afaik no computer has spontaneously started spitting out its own artworks or solutions. They don’t perceive that there’s even a problem to be solved or know when they’ve solved it. Motivation can be as simple as wanting to please a teacher who instructs “draw a pretty picture for me,” but AIs don’t aim to please. I guess I’m still at the Luddite stage of feeling that AIs are misnamed, as I don’t feel they possess intelligence any more than a Rube Goldberg device does. Their gears and ramps and pulleys are just ones and zeroes rather than physical components, and their operations have a variety of possible outcomes because both yes and no routes are available forks along the process. But you can set up mechanical devices that also have yes/no forks depending on outside factors (otherwise those marble racing set-ups would always come out the same) but that doesn’t make them intelligent.

I’ll go away now… :D

@Ridana, 12:

You’re right – but only because nobody has yet set one up to self-motivate. There’s no reason, in principle, why you couldn’t set up a system that could just… I’m going to say “unmotivatedly cogitate”. Just sit there, thinking. If machines are anything like me, they’d spend most of that time thinking about nothing much, then every now and then it would occur to them that something needs creating… and they’d do it. Of course, then you get into the whole paperclip thing. But it’s not a principled objection – even a mechanical computer could, in principle, be set up to just… run, until something occurred to it. Couldn’t it?

@9 Great American Satan: It will require a fundamentally different type of AI to create one that understands what it is drawing. It’s an entirely different approach to AI and people are working on it but that research has gone nowhere for some time.

An AI that draws like a human would really be a hybrid approach. A human knows that a typical human hand has 5 fingers and how they are laid out on the right and left hands. The average human doesn’t really know why skin looks the way it does, they have just learned through experience what looks right.

How does something occur to a machine? What would that look like? It’s not going to tell us unless it was set up to, and that goes back to the original question of motivation. Like my sewing machine has stitching patterns I can set, and with a brick on the foot pedal it will follow those patterns forever until it breaks. It can’t suddenly “decide” it would rather do a different pattern. I guess along with motivation I think “intelligence” requires independent agency to guide its own processes. Being able to express the results is also important, but I don’t consider that people with locked-in syndrome are not intelligent. We can extrapolate that they are, based on those who can still communicate. So at least some AIs need to somehow tell us what they’re thinking about, without being told to, before I can agree they’re intelligent. As things stand, to me they’re just intelligence mimics, like a duck call that mimics a duck’s quack, if a person initiates that action.


I think you’re describing my computer though. I tell it to, say, close a window and it sits and thinks about something else for a minute or so until it forgets what I asked it to do. :)

@13 sonofrojblake

even a mechanical computer could, in principle, be set up to just… run, until something occurred to it.

“Normal” computers aren’t that far off. The only niggle is the “occurred to it” part. Every PC, tablet, and phone runs an OS that is nothing more than a glorified event loop. The device just keeps scanning its inputs for activity and when something is found, it reacts. An argument can be made that that’s the experience of most humans, most of the time. Even if I’m doing something highly collaborative and creative, like playing music with friends, each of us is listening to the others and using what we hear to modify what we’re doing. I guess a possible difference is that I could be reading a book, and after a while, just jump up and start playing an instrument, unprompted. Of course, the question is, “what caused that”? Was it just the passage of time? If so, we could easily program a timer to initiate a (pseudo-random) action if nothing else happens within a specified time.
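The timer idea in the comment above can be sketched as a simulated event loop in Python. It is a minimal sketch under assumptions: time advances in integer ticks so runs are reproducible, and `run_event_loop` and `SPONTANEOUS_ACTIONS` are hypothetical names. Whether an idle timer counts as something “occurring” to the machine is exactly the open question.

```python
import random

# Hypothetical things to do when nothing has happened for a while.
SPONTANEOUS_ACTIONS = ["pick up the guitar", "start sketching", "hum a tune"]


def run_event_loop(events, idle_timeout, end_time, seed=0):
    """Simulate an event loop that reacts to inputs and, when idle, acts on its own.

    events: (tick, name) pairs, e.g. a bandmate starting a riff.
    idle_timeout: ticks of inactivity before a pseudo-random action is initiated.
    Time is simulated in integer ticks so each run is reproducible.
    Returns a log of (tick, action) pairs.
    """
    rng = random.Random(seed)
    pending = sorted(events)
    log = []
    last_activity = 0
    for now in range(end_time + 1):
        # Scan the inputs: react to everything that has arrived by this tick.
        while pending and pending[0][0] <= now:
            _, name = pending.pop(0)
            log.append((now, "react to " + name))
            last_activity = now
        # Nothing has happened for a while, so do something unprompted.
        if now - last_activity >= idle_timeout:
            log.append((now, rng.choice(SPONTANEOUS_ACTIONS)))
            last_activity = now
    return log


if __name__ == "__main__":
    for tick, action in run_event_loop([(2, "bass line"), (3, "drum fill")], 5, 20):
        print(tick, action)
```

With a bass line at tick 2 and a drum fill at tick 3, the loop reacts to both, then initiates an unprompted action after every 5 idle ticks.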

Are we using a definition of “intelligence” that is similar to the situation a judge faced many years ago regarding pornography (paraphrasing): “I can’t define it in words, but I know it when I see it”? Hardly helpful, in my opinion.

To be certain I’m understanding the OP correctly, the way I read it is that:

A) The learning process is something AI can do today.

B) The iterative-rejection process of things which do not match the problem-defining prompts is something the AI is getting better at, but humans are still giving final approval/rejection/re-do orders.

C) The problem-defining prompts are outside of the AI scope today, but Marcus proposes a concept he calls the Generative Adversarial process which will take a problem and apply the iterative-rejection process to the overall problem in order to break it down into smaller, easier, problems some of which may have well-established solutions.

I don’t have any argument with any of the above. I think these stages are well underway, and some of them may already exist. Douglas Adams even explored the concept with the Reason software in his novel Dirk Gently’s Holistic Detective Agency. That fictional software was given a desired result and had to generate a logical path to get there from the present state. That’s a much harder task than it seems.

But, I do have some comments.

First, step A. The learning process for AI is something we can do today. We, as far as I can tell, do not have a full understanding of the learning process for humans. Do humans have other ways of learning than the deep memorization of AI? I don’t know. I think it’s worth considering. We have defined what learning is in the context of AI, but that definition may not be complete for humans, or dogs, or other animals. I think we should be careful in assuming that our reductionist understanding of learning is complete. There is a long history of reductionism getting great results but missing facets which show up during subsequent investigations. The history of gaseous neurotransmitters is a good example: for decades we thought we had found all the neurotransmitters, then a new way of looking at brain operation found we had missed an entire class of them.

Step B relates to the creation of a work of art, or the solution to a problem. The iterative process is certainly in play here, both in humans and AI. The more I study a work of art, the better I am at copying it. The more I play music, the easier it is to know what notes come next. There is a feeling of “rightness” or “wrongness” to the next step, and I generally try to head toward “rightness”. Some people argue that you should occasionally give “wrongness” a try because it may lead to new things. I can’t refute that, but I can say that in most cases I aim for what feels right. Which leads directly to step C.

Step C, the winnowing process. For human endeavors this occurs before, during, and after the creative/problem solving process. In fact, this can be seen as a meta process reviewing the progress, and even updating the goals, even as the creative/problem solving process is going on. Like step A, we should be careful about thinking we understand the process well enough to program all aspects of it. But there is no reason why an AI can’t do a good portion of it. The probabilistic approach will work well for a lot of problems, and throwing some randomness into the probabilities can lead to new combinations of ideas.

The Generative Adversarial approach simulates one approach to problem-solving and idea generation, one facet of creativity: the conference-room approach. Whether it’s NASA engineers looking at a bunch of spare parts on a table or a board room full of advertising executives, the concept is to throw out as many ideas as possible, discuss them, build on them, remember them when discussing the next idea, see if ideas can be joined together, and, importantly, tentatively reject those which make no sense while remembering them in case they align with and improve another idea. It’s clear how this happens when a group of people works on a single problem; Marcus suggests that this is also how creativity and problem solving occur when a single person is doing it. It’s how we arrive at the feeling of “rightness” when being creative. We reject other possibilities so fast, without conscious consideration, that all we are left with in our conscious mind is the feeling that something is “right” or “wrong”. This could be the feeling of what the “right” note is to play in a jam session, and there may be dozens of “right” notes. This could be the feeling of “wrong” when in a dark, creepy, old mansion with unknown and suspicious noises. I don’t think Marcus is wrong.
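As a toy illustration of the conference-room loop (not a GAN, which trains a generator network against a discriminator network), the sketch below generates ideas, critiques them, and shelves rejects instead of discarding them, so a later idea can be joined with an earlier one. Everything here is hypothetical: ideas are sets of parts from an imaginary spare-parts table, an idea’s score is simply how many parts it combines, and “joining” two ideas is set union:

```python
import random

PARTS = ["wheel", "wing", "engine", "sail", "kite string"]  # hypothetical spare parts on the table


def generate(rng: random.Random) -> frozenset:
    """Throw out a new idea: here, just one randomly chosen part."""
    return frozenset([rng.choice(PARTS)])


def score(idea: frozenset) -> int:
    """Toy critic: an idea is better the more parts it brings together."""
    return len(idea)


def brainstorm(rounds: int, threshold: int, seed: int = 0):
    rng = random.Random(seed)
    accepted, archive = [], []  # archive holds tentatively rejected ideas
    for _ in range(rounds):
        idea = generate(rng)
        # Before judging the new idea, see whether any shelved idea improves it.
        for old in list(archive):
            merged = idea | old
            if score(merged) > score(idea):
                idea = merged
                archive.remove(old)  # the shelved idea found a use
        if score(idea) >= threshold:
            accepted.append(idea)
        else:
            archive.append(idea)  # rejected for now, but remembered
    return accepted, archive


accepted, archive = brainstorm(rounds=10, threshold=2, seed=1)
print(accepted, archive)
```

The point of the archive is the “remember them in case they align” step: an idea that fails on its own is not lost, and it gets a second chance every time something new comes up.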

However, I think Marcus may be incomplete. And he may acknowledge that. We have a general definition of creativity, but a fairly poorly defined one. It’s the “we know it when we see it” type of definition. At one point we defined cancer as uncontrolled cellular division; doctors knew cancer when they saw it. Through continuous study we now recognize dozens of types of cancer, all falling under the rubric of cancer, but each having its own distinctive characteristics and treatments. I suspect that creativity will eventually be found to be the same.

Will Marcus’ suggestion allow AI to duplicate some facets of creativity? I think so. One fascinating aspect of implementing Marcus’ approach will be that those areas of creativity which are not susceptible to it become easier to identify. That will lead to new developments and new approaches to modeling creativity, which will probably continue to leave some unexplained residue that needs study. When there is no residue left, then creativity will be understood.

I see no reason why we can’t fully understand and program AI to be creative, or at least set up the processes which enable it to be creative. I just think it will take longer than the AI enthusiasts expect.

@flex: Marcus is not proposing Generative Adversarial Networks as a hypothetical possible approach to creativity; he’s describing how the already-existing GANs (such as Midjourney and Stable Diffusion) work right now, and arguing that what they do is a form of creativity.

Ridana@#12:

I know I’m wading in way over my head here, but to me, creativity requires motivation to create, which AIs don’t currently have. They follow instructions and rules but afaik no computer has spontaneously started spitting out its own artworks or solutions. They don’t perceive that there’s even a problem to be solved or know when they’ve solved it.

That’s all true. In my opinion, as jimf@#16 says, it could just be that “self awareness” is an outgrowth of a status monitoring loop. That model makes sense in the context of a computer/OS because our computers work that way and are built to work that way. We build hard limits into computers’ self-motivation because they’d be annoying as hell – imagine if your printer started texting you while you were sleeping, “hey, I’m out of paper!” In fact, we design our printers to only tell us that sort of thing when it’s a part of a process that we started. I have not got kids, but I imagine most parents with kids wish they could control the notification outputs of the kids’ status monitoring loops, sometimes. And, of course, computers’ status monitoring loops are lockstep and don’t change, whereas our bodies are massively parallel: my bladder status is on its own schedule, as is my hunger status, thirst, etc.

A first step would be some simple rules, like kids develop: if you’re not doing anything else, create some art related to what you did in the last month, then print it out and sweet-talk daddy into taping it to the refrigerator. I would bet that a generative adversarial approach with trained feedback would do a pretty good job of adding spontaneous behaviors to an AI. Would we call that “simulated,” or would we just tell it, “later, daddy’s busy”?

JM@#14:

@9 Great American Satan: It will require a fundamentally different type of AI to create one that understands what it is drawing. It’s an entirely different approach to AI and people are working on it but that research has gone nowhere for some time.

I think we already know how to do it: we’d need another layer of GAN. The final layer would be trained to recognize elements in a drawing using a different knowledge-base but similar text inversion techniques as are used in diffusion models. When an image was created, the final layer would ask itself, “how well does this match what I was asked for?” The only practical limit to how many different models you have, each giving a different “perspective” on the output, would be the generation speed.

I actually serve that role, myself, today. When I punch a prompt into the AI and generate a dozen images, then pick the one I like the best, I am the “adversarial” part of a GAN, applying my own weights and matching to the outputs. I don’t think there is anything special about how I do that – I have opinions on what is funny, beautiful, correct, etc., and I apply them even though I would be hard-pressed to write them down.
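The selection step described here amounts to generating several candidates and re-ranking them with one or more critics, each giving its own “perspective”. A minimal sketch, assuming text captions stand in for images and the two critics are hypothetical hand-written scoring functions (a real system would use trained models, in the spirit of the “different knowledge-base” idea above). Note that this re-ranks finished outputs; it is not how a GAN’s discriminator is used during training:

```python
from statistics import mean
from typing import Callable, Sequence

Critic = Callable[[str], float]  # maps a candidate to a score in [0, 1]


def prompt_match(prompt: str) -> Critic:
    """Hypothetical critic: fraction of the prompt's words that appear in the candidate."""
    wanted = set(prompt.lower().split())

    def critic(candidate: str) -> float:
        return len(wanted & set(candidate.lower().split())) / len(wanted)

    return critic


def brevity(candidate: str) -> float:
    """Hypothetical critic: a mild preference for shorter candidates."""
    return 1.0 / (1 + len(candidate.split()))


def pick_best(candidates: Sequence[str], critics: Sequence[Critic]) -> str:
    """Score every candidate with every critic; the best average score wins."""
    return max(candidates, key=lambda c: mean(critic(c) for critic in critics))


candidates = [
    "two crows on a barrier",
    "two crows surfing the wind of a truck",
    "a horse",
]
best = pick_best(candidates, [prompt_match("two crows surfing truck wind"), brevity])
print(best)  # two crows surfing the wind of a truck
```

Adding another critic is just another function in the list, which mirrors the point that the practical limit is how many “perspectives” you can afford to run.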

This has gone way beyond my meager ability to comprehend, but I’ll comment on it anyway. 8-)

We’re clearly “not there yet” so the argument is whether machine creativity is even possible. That’s necessarily a philosophical argument because we don’t yet have the science or technology to discover what human creativity is; and although it’s not mentioned explicitly, I think I sense the old materialist/idealist argument lurking in the shadows.

I think I’ll wait for actual evidence before thinking that I know anything about AI. Whether we get such evidence in this 77-year-old’s lifetime remains to be seen.

(It just occurred to me that, with phrases like “lurking in the shadows” and “remains to be seen”, I’m just copying what I’ve dredged up from my own memory. Am I even being creative in a way that’s like what we’re demanding of machines?)

@1: To me it resembles Seth Green more than Vin Diesel. Diesel has a rounder face.

@7: The Difference of Man – This reminds me of a day in college German class, in which the instructor, probably bored with German, invited an anthropologist acquaintance of hers to present to us about what makes humans special. It was the usual fluff – bigger intelligence, tool use, etc. I thought we did a decent job of pointing out to him that he was doing it ass-backwards by assuming that man is special, and then looking for reasons to support it.

Surprised no one has posted this yet:

They’re Made out of Meat

jimf@#16:

Are we using a definition of “intelligence” similar to the one a judge offered many years ago regarding pornography (paraphrasing): “I can’t define it in words, but I know it when I see it”? Hardly helpful, in my opinion.

I’ve got to argue with that, with all respect.

I don’t recall a link to it, but elsewhere on this blog I have posted about vague concepts – those are ways we humans have of using language to apply groupings absent a hard and fast rule. A good example of a vague concept is “a bald person.” Is a “bald person” 100% hairless, or is there a certain number of hairs below which they are “bald” and above which they are “balding”? Vague concepts [wikipedia] are something we comfortably use all the time. The wikipedia example is “tallness” – is there a specific height that makes one tall? Let’s say it’s 6 feet. Is someone not tall if they are 5 feet 11.9999 inches? In which case it depends on their shoes.
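The difference between a hard cutoff and a vague concept can be made concrete. The sketch below is only an illustration: it contrasts a crisp 6-foot rule with a graded “degree of tallness” that rises linearly between two hypothetical heights (66 and 76 inches), a simple form of the membership functions used in fuzzy logic:

```python
def is_tall_crisp(height_in: float, cutoff_in: float = 72.0) -> bool:
    """Hard rule: 6 feet or more is tall, anything less is not."""
    return height_in >= cutoff_in


def tallness(height_in: float, low_in: float = 66.0, high_in: float = 76.0) -> float:
    """Graded rule: 0.0 at or below low_in, 1.0 at or above high_in, linear in between.

    The 66/76-inch bounds are hypothetical, chosen only for illustration.
    """
    if height_in <= low_in:
        return 0.0
    if height_in >= high_in:
        return 1.0
    return (height_in - low_in) / (high_in - low_in)


print(is_tall_crisp(71.9999), is_tall_crisp(72.0))  # False True
print(round(tallness(71.9999), 4), tallness(72.0))  # 0.6 0.6
```

Under the crisp rule, a ten-thousandth of an inch (or a pair of shoes) flips the answer; under the graded rule the two people are tall to almost exactly the same degree, which is closer to how we actually use the word.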

We are, in fact, very comfortable working with vague concepts, but the law isn’t, because it’s actually an elaborate social mechanism for removing vagueness from certain problems. (Nobody argues that a murder victim is “mostly dead” but there is plenty of argument around “mostly responsible for his death.”)

Let’s take a more annoying example: is a dog “intelligent”? Well, actually, we can place dogs on a scale of intelligence and I think most people won’t argue that an Australian Shepherd is a pretty intelligent dog, whereas maybe a beagle isn’t quite as bright, etc. We still don’t know what “intelligence” is but we can rank-order it in some cases. (insert confounding details about “emotional intelligence” here) So we don’t need to know what intelligence is to say that a typical Aussie Shepherd is better educated and more intelligent than Marjorie Taylor Greene. I’m being a bit silly, because MTG can talk and an Aussie Shepherd cannot (but when it does it’s got more to say!) but MTG’s sheep herding skills are poor compared to the Shep. Etc.

It’s a problem with how detailed reality is, compared to groupings expressed in language, but yes – if I can rank porn from “more to less explicit” in a way that a significant majority of us would agree, then we do know what porn is – we just don’t have a definition for it because it’s a vague concept. BTW, I wish that human law stopped trying to dodge the fact that our use of language is vague, and would just bite the bullet and admit that absolute certainty ought not be a requirement. In fact, we impanel juries to decide the vague concept of “guilty” all the time and they do a pretty satisfying job.

One limitation, which could easily be overcome:

I can’t recall a specific story, but there is a theme out there that creators – writers, movie makers, et al. – have to experience life so they have something to create about. If a writer is just writing about things that other people wrote about, the result is not going to be compelling. This is tied to the theme that an artist must suffer for their art; but I think the suffering is not the essential ingredient, it is the wide variety of inputs.

And also: Michelangelo did study art. But he also studied anatomy. So he knew how the musculature of the human body was constructed, and pointedly, he knew how many fingers a typical person has. I think you see where I am going with this.

Another aspect of creativity which you did not mention: crossing over between fields. Accidentally or otherwise. As a quick fake example, an architect being inspired by a child’s toy, rather than by a building someone else built.

I recall a brief episode of creativity I had in college. There was a contest to design a T-shirt for our dormitory. I was looking at the bulletin board we had outside our dorm room, and saw two clippings: one, the dorm president making a ridiculous request to the administration that they put a dome over our dormitory. Two, an animal with a rounded carapace which was the theme of the annual big campus party. The two combined in a flash, and I had a picture of our dormitory with the animal in question serving as the dome.

billseymour@#21:

That’s necessarily a philosophical argument because we don’t yet have the science or technology to discover what human creativity is; and although it’s not mentioned explicitly, I think I sense the old materialist/idealist argument lurking in the shadows.

There is a materialist/idealist divide, but I’m trying to argue that it does not matter because for all intents and purposes GANs today are “creative” enough to be considered “creative.”

If you accept my argument that “creativity” is a vague concept, then we’re down to arguing about “more or less creative” and that’s where I want us to be. The human supremacist position depends on some absolute measuring stick of “what is creative?” or “what is intelligence?” when it seems obvious to me that it’s always been a matter of degree.

This is why I try to characterize the human supremacist position as being in an endless retreat. “Can’t beat a human chess master. Oh, oops, can’t beat a human go master.” Next up: “can’t write software” or “can’t design a nuclear reactor.” And, for the record, I do not want AIs designing nuclear reactors for a couple hundred more years. (i.e.: after I am safely dead)

Reginald Selkirk @22: yeah, crafting arguments given conclusions is something we humans do almost automatically. Supreme Court justices come easily to mind. 8-)

I was going to argue that the problem with hooking up one GAN to another to get round the self-motivation problem is that it would probably just end up with the whole system converging on producing an endless stream of beige bilge, but then I remembered that most human creativity does that too…

But that throws up another thing worth considering: everybody keeps asking about whether “AI” can produce great art, as if quality is the yardstick for creativity… But I’m a great believer in the maxim that “if something’s worth doing, it’s worth doing badly“. The vast majority of human creative output is, to be perfectly frank, not very good at all – but it’s no less valid because of that fact.

Marcus @25: I take your point and I agree with it; but that makes me wonder why we’re arguing about it at all.

I just thought of one reason for having the argument: because it’s fun — clearly another vague concept. 8-)

Apropos nothing much, I wanted to link in here this video of a sheepdog demonstrating creativity.

https://youtube.com/shorts/mf-j2FvlOmo?si=ClQbmviKiCS4F6Kg

billseymour@#28:

that makes me wonder why we’re arguing about it at all.

Over at Daily Kos someone posted a bit about AI and promptly declared that AI will never be able to show creativity. It got me thinking and I immediately commented with a shorter version of my argument here. I don’t think the commentariat over there is as sophisticated as here, where someone taking the human supremacist position would probably get a lot of push-back, so I thought I’d be the push-back.

By the way: introducing vague concepts into any discussion of philosophy is a really nihilistic thing to do. It’s almost (but not quite) a conversation-killer. I happen to believe that philosophy in general has a problem with language, and vague concepts are an important manifestation of a problem with language as a tool. But I suppose every nihilist wants to say “hey not my problem” and fire a shot below the water-line, then leave.

Marcus @ 23

I agree with your comments. The example I often used with students was groups of dogs: these are big dogs, these are medium sized dogs, these are small dogs. Where’s a black lab? Is that medium or large? If it’s medium, what’s a German shepherd? I certainly agree that intelligence can be applied the same way. I’ve known plenty of people who have had horses, and they all swear that horses are smart and have unique personalities. My point is that without a better working definition of intelligence, we may wind up arguing past each other. I am probably never going to agree about emerging machine intelligence with someone who does not consider that horses are somehow intelligent.

Separate item regarding creativity. A few decades ago (1970s or 80s, I forget) some music researchers decided to perform a statistical analysis of some of Bach’s works. (I think I read about this in the Journal of the Audio Engineering Society but I could be mistaken). Anyway, they created a program that would spit out new scores based on the scores that were examined. Technically, these were entirely new works, and by some definitions, they rank as “creative”. The listeners said they sounded very “Bach-like” (and in some cases, quoted passages from existing Bach works) but the listeners felt that they were insubstantial. Did they have a personal bias against these works? Would they feel differently if they were told that these were “lost” works of Bach, newly discovered? I don’t know.
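The comment doesn’t say what method those researchers used, so the following is only an assumption: one common, simple statistical approach is a first-order Markov chain, which records which note follows which in the source material and then walks those transitions at random. The training melody below is a hypothetical scrap, not Bach, and the names `train` and `compose` are illustrative. The sketch also suggests why such output can end up quoting its sources: wherever a note has only one recorded successor, the chain has no choice but to replay the original passage.

```python
import random
from collections import defaultdict


def train(melodies):
    """Record note-to-note transitions (first-order Markov chain)."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)  # duplicates encode frequency
    return transitions


def compose(transitions, start, length, seed=0):
    """Random walk over the learned transitions, stopping early at a dead end."""
    rng = random.Random(seed)
    piece = [start]
    while len(piece) < length:
        options = transitions.get(piece[-1])  # .get does not add keys to the defaultdict
        if not options:
            break
        piece.append(rng.choice(options))
    return piece


# Hypothetical training material (note names only, no rhythm).
melody = ["C", "D", "E", "C", "E", "G", "E", "D", "C", "G", "C"]
model = train([melody])
print(compose(model, "C", 12))
```

Every step of the output is a transition that occurred in the training material, so the result is “new” only in how those steps are strung together.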

jimf@#33:

Would they feel differently if they were told that these were “lost” works of Bach, newly discovered? I don’t know.

There’s a sort of Turing Test right there: if an AI is able to produce a fake Michelangelo that can be passed off as a real one, what then? (I refer you to the Midjourney version of Michelangelo’s Mary Magdalene, above, which is pretty darned good and, if converted to a 3D model and handed to a CAD machine to render in marble, might fool some of the people some of the time)

[BTW, another interesting bastion of human creativity is going to fall to AI soon. Photogrammetry is already making it possible, but I know there is ongoing research on how to use stable diffusion techniques to output 3D object geometry instead of a pile of pixels. At that point, computer games can be populated with AI-generated implied spaces and objects, which, to me as a gamer, seems super cool. It would also mean we could have an AI design jewelry or clothes and pass them off to CAD systems to produce in tangible material.]

Arthur Koestler’s The Act of Creation (to oversimplify) defines creativity by modeling various skills as planes and invention as the intersection of those planes (e.g., an intuitive sense of body language and an explicit knowledge of carving stone). I think that gives a decent first step, but it doesn’t allow for the (inner or outer) eccentricity of most observable artists.

So I’d add another dimension – if you visualized my previous paraphrase of Koestler as a geometric diagram, now throw in some colors. Slacker that I am, I keep calling it “drug use” – though in this case expanding that to whatever alters one’s state of consciousness: exercise, fasting, intense prayer, all-nighters, fever, infatuation, possibly innate brain chemistry anomalies (as Theodore Sturgeon suggested in the case of famously drug-abstinent but psychedelic-minded Harlan Ellison). When I run dry, nothing helps more than switching from one bad habit to another, or even (gasp) cleaning up my act for a while.

IOW, computers now (roughly) emulate neurons and synapses – but to really get them cooking, we need to provide them with analogs of hormones and pheromones.

The generative systems I’ve tried suck at writing assembly code, but can write passable Python. Not sure how they fare with C or C++. Probably somewhere in between. However, even in Python, they suck at explaining specifics about the execution of the code (“what’s in variable X before instruction Y is executed the first time?”). They also totally fail to catch on to probing questions and hints about what may be wrong with the code they produce. To me, this means they’re still too shallow to qualify as “thinking”. And I mean shallow in a technical sense: that, on one hand, they don’t explore the solution space deeply enough; and, on the other hand, that they aren’t yet capable of reaching deep enough levels of abstraction. They’re qualitatively only a few levels deeper than Eliza (Doctor), albeit with a vast, machine-generated, opaque, entangled set of “rules”, rather than the very small set of clear, direct, but extremely shallow rules Eliza had. But, as with chess and then go, more depth, and perhaps more structured training, will get it there eventually.

The other day, I tried to get ChatGPT to write an integer multiplication routine for an 8-bit microcontroller. I already had a version with six instruction cycles per iteration (four, if I unrolled the loop), but I wondered whether it was possible, with that specific instruction set, to do it in five (or three, unrolled). ChatGPT suggested something with about two dozen instructions in the loop, with several mistakes. I tried to steer it towards something better (for my own amusement, not for any practical purpose). It was really, really hard work to get it to correct its mistakes. Even when corrected, the errors would often come back two or three responses later. My suggestions for optimizations often resulted in it adding tests and jumps to handle some cases “more efficiently”, totally ignoring the fact that all those tests and jumps only made the code larger and slower. At some point, I got it completely stuck because it failed to understand how to manage the contents of the carry flag. I tried to get it to figure out an optimization, gradually working up to more and more concrete and direct ways of explaining it, but it was all in vain.

(If you’re wondering: you need 8 iterations to multiply two 8-bit numbers, one for each bit of the multiplier, the same way as you need as many rows in your long multiplication as you have digits in the second number. The loop starts with an 8-bit product and an 8-bit multiplier. Each iteration grows the size of the product by one bit, just as it consumes one bit of the multiplier. Rather than shifting the 8-bit multiplicand left (again, think long multiplication), which would require two bytes and two 8-bit additions in the loop, it’s easier to always add the multiplicand to the high-order byte of the product, then shift the product itself right (line up your long multiplication partial products in a column, but add them up diagonally). The multiplier is also shifted right to get to its bits one by one. So if you store both the growing product and the shrinking multiplier in the same 16-bit pair of registers, you can shift them together and save one shift in the loop. Check out Ken Shirriff’s absolutely delightful blog post about the multiplication hardware and microcode in the 8086.)
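The register-pair trick can be modelled in Python. This is a sketch of the general shift-and-add algorithm under the layout just described (the high byte accumulates the product, the low byte starts out holding the multiplier), not a port of any particular microcontroller’s instruction set, and it says nothing about cycle counts; the name `mul8` is illustrative. The `carry` variable stands in for the carry flag: an 8-bit add into the high byte can overflow into it, and the right shift has to bring it back into bit 7:

```python
def mul8(multiplicand: int, multiplier: int) -> int:
    """Unsigned 8x8 -> 16-bit multiply using one shared 16-bit register pair (hi:lo)."""
    if not (0 <= multiplicand <= 0xFF and 0 <= multiplier <= 0xFF):
        raise ValueError("operands must be 8-bit unsigned values")
    hi, lo = 0, multiplier  # product grows down into hi:lo while the multiplier shifts out of lo
    for _ in range(8):      # one iteration per multiplier bit
        carry = 0
        if lo & 1:  # the current multiplier bit is the lowest bit of the pair
            total = hi + multiplicand  # 8-bit add into the high byte only
            hi, carry = total & 0xFF, total >> 8
        # Shift carry:hi:lo right by one as a single 17-bit quantity (on typical
        # 8-bit hardware, a rotate-right-through-carry of hi, then one of lo).
        lo = ((hi & 1) << 7) | (lo >> 1)
        hi = (carry << 7) | (hi >> 1)
    return (hi << 8) | lo


print(mul8(3, 5), mul8(255, 255))  # 15 65025
```

Because the product and the multiplier share the pair, each iteration needs only one 8-bit addition and a single combined shift, which is where the saving comes from.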

Long story short, I gave up teaching the computer how computers work, so I just gave ChatGPT my six-cycles-per-iteration solution. It managed to sound enthusiastic and congratulatory about the code “we arrived at together” (if there’s one thing it excels at, it’s gaslighting). When I pointed out there was a small bug in my version after all, and challenged it to find it, it asserted that it was still excellent code, being a very good compromise between performance and correctness (!).

At which point I was struck with the simultaneous realization that (a) I’m going to be just fine because my job is safe for at least another decade, and (b) we’re all doomed.

Sorry for the double-post. There’s nothing like that Post Comment button to immediately trigger the realization that you forgot to make your main point.

So far, LLMs behave like unprepared undergraduate students. They’re far from creative when working at the limits of their ability, and their response to any challenge is to brownnose you while taking another blind shot in the dark, hoping something sticks. It’s amazing how they try to pull the wool over the examiner’s eyes with sheer volume and over-detailed explanations of obvious facts, while glossing over glaring gaps where more subtle reasoning is needed.

They do differ from some students in that they remember lots and lots of facts, but they resemble some in that they lack the experience to use these facts together and reason from them. There’s only very limited inference that I can see (and I assume that, were I to see more, it would have been acquired as such from the training set: the LLM has seen the problem and its solution before). This is not nothing (unprepared undergrads can do useful work), but there is no deep thought here yet.

As with inference, so with creativity: I’d argue that most of what we perceive as creativity comes wholesale from the training set; from the human writing the prompts, sifting through the attempts, and refining the prompts; and from the uncanny ability of the networks to realize concepts by making the grunt work effortless. But, underneath that, the network is not yet deep enough to be different in kind from a sort of artistic fruit machine feeding instructions to an industrious but otherwise talentless hack.

Or a large but finite number of monkeys at typewriters with the world’s most advanced autocorrect.

@ 35 Pierce R. Butler

Arthur Koestler’s The Act of Creation (to oversimplify) defines creativity by modeling various skills as planes and invention as the intersection of those planes

The fortuitous intersection then? Something I used to say to my students: “Always remember, the person who invented sliced bread invented neither bread nor knife.”

Makes you wonder how humans would behave if we short-circuited some of those motivational drives. E.g. if we hook a human being up to a machine that provides physical nutrition and deals with evacuation of waste, what would people then be motivated to do?

Personally, I find that the main reason I get out of bed is to either eat, poop, or deal with social demands. Otherwise, I could spend the whole day just daydreaming. I might eventually get up and do something, but not nearly as regularly as I do now.

You made me look up Ferd Berfel, because prior to seeing it written here, I’d been spelling it Furd Burful in my head for decades. I was wondering if we were referring to the same character played by Dick Martin since it had been so long and I had forgotten, and I think we are.

It’s all ‘e’s.

That would make a nice quilt.

Marcus writes:

Wow. It’s a bit breathtaking to see that you’ve got the practice of law exactly and entirely backwards. In fact, it’s been designed over centuries or even millennia to accept and handle vagueness: this is why we have courts and judges (and juries) rather than just rules. Try reading some actual laws and you’ll invariably find them far from precise. Lawmakers and those whose job it is to interpret laws (definitively, in the case of judges, or making a good guess, in the case of lawyers) are well aware that there are simply too many specific situations out there that can’t be anticipated exactly to allow for explicit rules that can be operated at the level of computer code, despite the desire of those less knowledgeable (often computer programmers) for such laws. (And even they quickly realise and abandon “the code is the contract” when they find “bugs” in their “contracts”: see The DAO and the Ethereum blockchain split.)

I’ve no particular opinion about creativity vs. AI, but I do think there’s a huge and almost completely ignored elephant in the room related to the intelligence of “AI” and what it can do. There’s a lot of talk of it taking over jobs that require reasoning without pointing out that there is (at least through ChatGPT 4) no reasoning ability at all. This has been becoming more and more difficult to see as they continue to add tricks to cover up LLMs’ inability to count, but try to do anything even a little bit sophisticated that requires reasoning (or even just understanding and adhering to a few logical rules) and you’ll quickly run into examples where it just can’t do that, often leading to frustrating “loops” of the sort cvoinescu discusses above where it keeps giving you logically invalid answers, followed by apologies and more invalid answers.

I think that part of the problem here is that LLMs these days are very well tuned to feel like a human interlocutor and thus are very good at triggering the pathetic fallacy in humans that are using them. It’s perhaps a “bug” in our human heuristics for deciding whether someone is intelligent or whether or not they’re presenting intelligent ideas that we’re often so bad at this; this is widely taken advantage of by a lot of managers in business who don’t really have much idea of what they’re doing but fake intelligence about the application well enough to be leading the people who actually do the work that keeps the business running.

I’ll start by saying that I’m not in any way holding the ‘human supremacy’ position. I don’t think that there’s anything that would prevent some future AI system from being better than the ones now being discussed.

I do take issue with the idea that this current crop of generative AI systems is creative, or even that it is ‘intelligent’ in any meaningful way. The ‘AI’ description, as ever, is a marketing term.

So my argument is on two fronts here. First, that the systems are not meaningfully creative – and second, that they are not meaningfully intelligent.

Definitions for both terms are certainly not settled, but I think that the common language understanding of both would suffice here.

The main thrust of my argument is going to rest on this: the systems contain no fundamental understanding of the terms or images they are manipulating. They are clear examples of the ‘Chinese room’ form of apparent AI.

However you choose to define intelligence or creativity, I cannot conceive of definitions that would not include the agent having some level of understanding of what it is doing. The current ‘AI’ systems do not. Midjourney does not ‘know’ that a Fist is a subset of the thing called a Hand – that it contains the same parts in a different arrangement. Its manipulation of images is based on a wondrously complicated way of running a kind of visual spellcheck on segments or the whole of the image. I think that’s why AI generated video is so disturbing and/or fascinating – you can really clearly see the way that forms that are apparent and distinct to human eyes reform randomly as the system churns through more-or-less likely things for each pixel to look like in relation to its neighbours and the whole of the image. You can really see this effect in AI generated videos of dogs, for some reason. Watching relatively realistically moving animals spontaneously absorb into one another and their surroundings reminds me rather pleasantly of time spent with DMT, but makes my wife queasy and uncomfortable.

The systems also do not have goals, and I think that a goal is also required for practicing creativity or demonstrating intelligence. An artist is being creative when they produce something, manipulate it, and at some point declare it to be a finished product. They applied skill, knowledge, technique, maybe science, luck or randomness to a medium and eventually determined for themselves that the result met their desired goal. Whether the goal was an abstract painting, a hyper-realistic sculpture, or some kind of complicated dance routine is irrelevant. Current generative AIs do no such thing. There is nothing implicit in their design or effect that moves towards any goal at all. Left to their own devices, they do nothing. Motivated by commands, they apply their algorithm to their data-set. They apply it as many times as the person operating the software specifies, a number chosen because it most often produces pleasing results. If the iteration settings are too high or too low, the ‘AI’ produces more-or-less random noise. It does not care, because it is not capable of understanding the degree of relationship between the terms given and the result produced. It will never know when its rendition of “La Pietà but with Donald Trump holding Ron DeSantis instead of Mary and Jesus” looks ‘realistic enough’, because the algorithm has no understanding of realism, no conception of enough and no goals whatsoever.

You – the artist who crafted the prompts, in conversation with the carefully curated and tagged data-set, its meticulously tweaked and adjusted algorithm, and its many hundreds of thousands of creative, intelligent contributors – are doing the actual creative work here. The ‘AI’ is just a tool you use to do it. Truly a unique and new tool, and one that can bypass many of the traditional skills that might have been required to create art in the past. But functionally it’s not that different from how a program like Blender, which can take what amounts to hundreds of thousands of pages of spreadsheets and spit out a photorealistic movie, might have looked to people who used to carefully hand-paint animation cels. They’re just tools, these programs: a tool for spitting out really cool remixes of images and a tool for spitting out incredibly bland and untrustworthy reams of mostly comprehensible text.

This is already far too long and duplicative of other arguments better stated elsewhere in this thread. So I’ll just stop now.

Oh, it is probably worth pointing out one example I discovered recently of an LLM being indisputably “creative” in one common sense of the word.

I have found, as have many others, LLMs to be quite good at synthesising examples of computer program code that have some relevance to the prompt they’re given, at least in cases where they’ve been trained on many examples. This is an interesting special case in that it’s a relatively simple task for a human to look at code and see what it actually does; these examples generally don’t do what you wanted (and are often enough so internally inconsistent that they don’t work at all, much less do what you wanted) but can be a useful starting point for a programmer not deeply familiar with the particular programming language.

As I’m not deeply familiar with Vimscript, I tried asking ChatGPT to write a Vimscript function to search the current working directory for an executable file named commit and, if not found, recursively search parent directories for such a file. The solution it gave me was very creative in that as soon as it found any file named commit it marked it executable and declared that it had found such a file. That was unquestionably a creative (and very amusing) solution to the problem I posed. But it also explains why LLMs are not about to replace good programmers any time soon. (I freely admit that LLMs may well replace bad programmers, who are indeed probably the majority. But they’re able to do that for the same reason we have so many bad programmers in the first place: a lot of people in charge of software development care more about the appearance of code working than about whether or not it actually works.) This all seems to tie in rather nicely to a problem we’ve long had, particularly (though far from exclusively) among “managers” of various sorts: people who substitute the appearance of doing work for actually doing it.

Perhaps the issue here is that creativity, while important, is not really that difficult: you can get a significant (and perhaps in many cases sufficient) amount of creativity from simply making random choices. See, e.g., aleatoric music.

@snarkhuntr It sounds to me as if you may be saying that the creativity from the machine here is an illusion: the process of us selecting a result (first by composing a prompt to restrict the result set, and then via iteration of the prompt and selecting the particular result we feel is best) is actually where the creativity lies.

I think that’s a good insight.

Although I suppose a lot depends on one’s interpretation of creative (are the hypothetical monkeys typing Shakespeare being creative?), I must confess that while I certainly don’t adopt a human supremacist position (animals can be creative by my subjective interpretation), I am unconvinced that “AI” is as yet creative (I feel as though creativity implies a degree of intent which, to the best of my knowledge, “AI” has not attained). I wouldn’t, however, presume to guess what the future may hold (while I suspect a sufficiently advanced computer probably could be “creative”, it may well be that there are limitations – such as the resource cost being unsustainably high, or something of that ilk).

But setting all that aside (and apologies if this is a little off topic), I feel that we already have a surfeit of things being created. And while I fully support people engaging in this regardless of the results (I think the human need to create should be indulged, as the process benefits us – if no-one else!), “AI” has (to the best of my knowledge) no such “need”. And so, given Sturgeon’s law (90% of everything is “garbage”), is it really in our best interests to automate the process? Now if an “AI” could screen out the “garbage”, to my mind that might be a far more valuable use of creativity – and surely arguments over “assessing art” are similar to arguments over “creating art” in terms of how subjective criteria may be applied, what counts as “art”, etc.?

In short, I suppose I’m less interested in an “AI” Artist than I am an “AI” Art Critic…

And, as a follow up thought, isn’t an “AI” which can spot “bulls**t” more valuable than an “AI” which can generate it? An “AI” might be able to generate a convincing fake video to destroy political rivals (for example), but I’d much rather have “AI” which can spot that…

@Dunc #18, I don’t think that changes anything about my thought, which, condensed even further, is that even if Marcus is right and the current AI is exhibiting some form of creativity, it would be foolish to say that the problem of creativity is solved. I maintain that there are probably many other forms of creativity. Maybe people were thinking I was talking about a holistic approach to the problem of creativity. I was not. I was pointing out that every complex situation we have analyzed has proved to be made of smaller complexities which we slowly tease out. I strongly doubt that the thing we call creativity results from a single process.

As I understand them, snarkhuntr and Curt Sampson say that the creativity is in the prompt, not the tools which generate the results. I’m not certain I fully agree with them. The prompt represents a desire, something someone wants. The desire may be a picture or a piece of jewelry, which are examples from above. We do that already when we commission a piece of work. And we typically do not call the person who commissions the work creative.

As an example, I commissioned an artist to make a tea pet for my wife for Christmas. I asked for a waistcoat-wearing badger holding a teapot. The artist sent me a couple sketches and we had a back-n-forth with ideas. Once we selected a concept the artist translated the sketches into clay. (My wife is going to love it.) This is definitely creative art, but where is the creativity in that process?

Was the creativity in my initial prompt to the artist, “I would like a waistcoat wearing badger”? No, not really. I knew what I wanted, but there are hundreds of paths to get there, and dozens of artists who have illustrated The Wind in the Willows have drawn hundreds of versions of a waistcoat-wearing badger. I wasn’t being particularly creative with that request.

Was the creativity in the artist’s sketches? This is certainly a possible place, but this is also a task which an AI trained on millions of images is able to perform. Pointing to the uniqueness of the artist’s sketches overlooks the fact that the artist trained on hundreds of thousands of images themselves. The only difference between the artist and an AI image generator is that the artist understands a penumbra of concepts about a badger and a waistcoat. The artist knows, or can look up, the habits of a badger. The artist understands the relationship of a waistcoat to other clothing and knows why clothing is important to people (style, warmth, and to cover up the naughty bits). An AI doesn’t know any of this. It doesn’t “know” anything. The AI associates words with images and generates an image which matches. The question is: is the penumbra of knowledge the artist has about badgers and waistcoats important?

Was the creativity contained in my final selection of a sketch? Now we are looking at my preferences, which are a result of the training I’ve had on thousands of images over the course of my life, as well as millions of other experiences and decisions which led to favorable or disturbing outcomes. Could an AI trained on my preferences accurately predict which sketch I would prefer? That’s an open question, though I think it’s possible. But am I being creative by making such a selection? Historically, we would say no. The commissioner of a piece of art isn’t considered the creative person; the person who executes the commission is.

Is the creativity in the translation of the sketch into clay? This is arguably a place where creativity resides. It does take skill to translate a flat drawing into clay. I could try it myself, but I know I wouldn’t do as good a job as the artist. Yet, as mentioned above, making bad art can be just as creative as making good art. If I attempted that translation, would I be creative, or would I just lack the skills to translate my ideas into clay? Finally, there is no reason to think that an AI couldn’t handle this task. For an AI, the difficulty is translating the 2D image into a 3D model, and there are tools for that. Once the 3D model is generated, there are other tools which can take the 3D file and plot a tool path to extrude material to form the object.

So, where is the creativity?

Curt Sampson @ #44: The solution it gave me was very creative in that as soon as it found any file named commit it marked it executable and declared that it had found such a file.

I think you’re being much too charitable to the “AI”. I interpret it as a combination of two factors. First, LLMs aren’t very good at languages for which they have few examples. Even if they ingest complete documentation, they don’t understand it and can’t apply it, so they still need abundant examples of actual code, with good annotation of what the code does and how. And second, they still rely too much on keyword recognition. It just saw “file” and “executable” in the context of Vimscript, and if the training data had examples of making files executable, but fewer or no examples of checking file attributes, you’d get the former rather than the latter, because it’s still a good match for your keywords.

That first one looks like something by Klee

flex @ 48

While I don’t want to speak for anyone else, nor do I wish to interrupt, I would like to just comment briefly.

Imagine you have your infinite monkeys typing away to produce Shakespeare-style literature. You can train the monkeys to recognise patterns (the letter combinations) which you consider “good” (by rewarding them with bananas when they produce something you recognise as Shakespeare-style writing). However, this doesn’t require the monkeys to understand Shakespeare, sonnets, plays, or even language – it just requires them to be able to recognise “good pattern” and “not-good pattern”. So, would a sufficiently well trained batch of infinite monkeys be creating Shakespeare-style literature, or are they merely producing patterns which we can recognise as Shakespeare-style literature by interpreting the patterns as letters and words even though the monkeys do not?

I would argue that the monkeys are being productive but not creative – in the sense that they are not purposefully creating literature (good or otherwise) based on any understanding of what literature is, but are merely responding to what you (the trainer) are categorising as “good” or “not-good” (my admittedly incredibly limited understanding of “AI” is that it is much like the monkeys in this sense).

Of course, one could argue that any form of production is creative irrespective of intent – but then I’m not sure why you couldn’t apply the same standard to, for example, the wind blowing some sand into something we would recognise as a shape or pattern. In which case, doesn’t “art” then become purely in the eye of the beholder and not the producer (and thus require no creativity – or, arguably, sapience or sentience – on the part of the producer)?