New databases are being assembled for ‘tone’ surveillance. What’s that? It’s not Miss Manners.

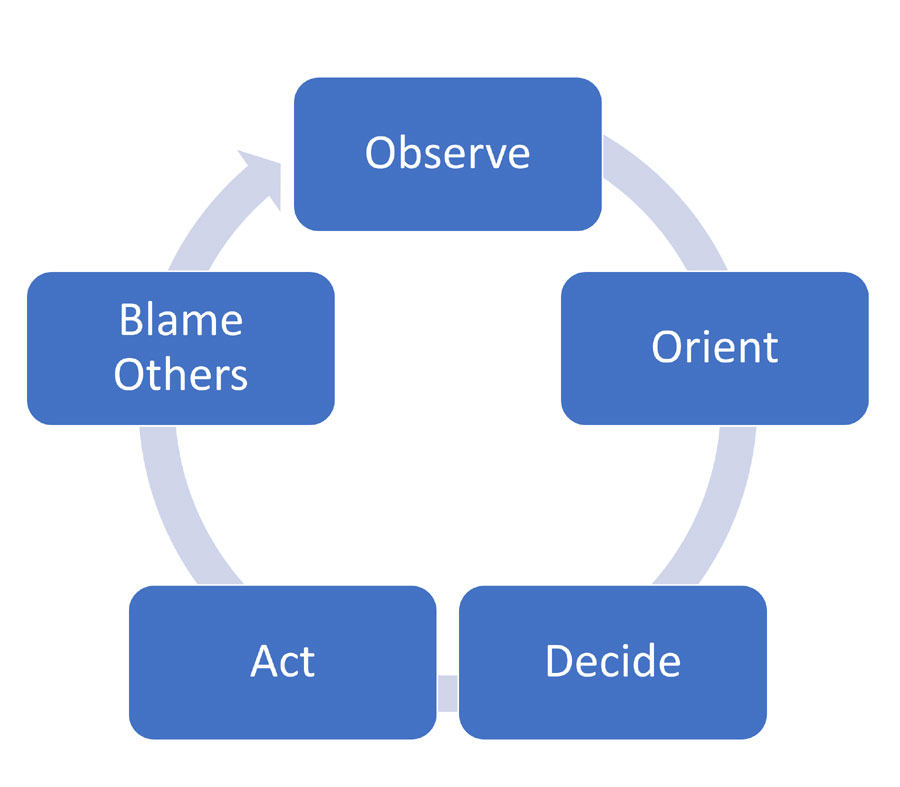

The surveillance/intelligence state has a huge problem: it’s collecting too much about too many people, so it cannot use the information strategically, it can only use it reactively. The entire great machinery of “big data” for intelligence becomes what I call the retro-scope: useful for figuring out what happened after you already know what happened.

If it’s too labor-intensive to move the analysis earlier in the decision-making process, the thinking goes, we’ll just drop in some AI.

My beliefs about AI are still somewhat muddled, but for the purposes of this discussion I’ll readily grant that AIs are really good at classifying things. The basic form of classification has been in use for decades, most popularly for spam-blocking: you build codexes of phrases that appear in different types of documents, then you analyze a document by building a temporary codex from it and measuring the total difference between it and each of the precomputed codices. Let’s say we have one codex built from Facebook pages labeled “Anti-Government” and another labeled “Pro-Military.” Now take a given posting: if it contains more phrases from the “Pro-Military” codex, then that’s what it is. You can wave Bayes’ theorem or neural networks or Markov chains at it, if it makes you feel better, but basically it’s a scoring system. It works really fast, too, which is nice; you’re just doing a bunch of Bloom filter checks. I implemented something much like that in the email ingestion loop for whitehouse.gov, using a simple phrase list I came up with over a cup of coffee, and it detected threat emails with about 99% accuracy.
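Here is a minimal sketch of that kind of phrase-list scoring, in Python. The category names and phrases are hypothetical placeholders, not the whitehouse.gov list, and it uses ordinary hash-set lookups where a production filter might use Bloom filters. The point is only to show that the “classification” is counting matches and picking the biggest total.

```python
import re
from collections import Counter

# Hypothetical phrase lists ("codexes"). These placeholder phrases are
# illustrative only; they are not the author's actual list.
CODEXES = {
    "anti_government": {"tyranny", "jackboots", "overreach", "police state"},
    "pro_military": {"support our troops", "thank you for your service", "veterans"},
}

# Longest phrase length in words, so we generate n-grams long enough to match it.
MAX_N = max(len(p.split()) for codex in CODEXES.values() for p in codex)


def ngrams(text, max_n=MAX_N):
    """Yield every 1- to max_n-word phrase in the text, lowercased."""
    words = re.findall(r"[a-z']+", text.lower())
    for n in range(1, max_n + 1):
        for i in range(len(words) - n + 1):
            yield " ".join(words[i:i + n])


def score(text):
    """Count how many of the text's phrases appear in each codex."""
    counts = Counter({label: 0 for label in CODEXES})
    for phrase in ngrams(text):
        for label, codex in CODEXES.items():
            if phrase in codex:  # hash-set membership test, O(1) on average
                counts[label] += 1
    return counts


def classify(text):
    """Return the label with the highest phrase count, or None if nothing matched."""
    label, best = score(text).most_common(1)[0]
    return label if best > 0 else None


if __name__ == "__main__":
    print(classify("We must support our troops and thank our veterans"))
    print(classify("Stop the police state and its overreach"))
```

Swapping each set for a Bloom filter would save memory on very large phrase lists at the cost of occasional false-positive matches; the scoring logic stays the same.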

So the forces of “We’re From The Government and We’re Here To Help” are stampeding toward adding automated classification to everything they collect. It’s a great gold rush for the people who build the collection and analysis architectures, and we’ll have to see whether it pans out in the end. My bet is that it’s mostly wasted, but they’re going to try anyway, because if they don’t, they have to admit that they wasted our money building a retro-scope.

From The Nation: [nat]

Following President Trump’s calls for “extreme vetting” of immigrants from seven Muslim majority countries, then-Department of Homeland Security Secretary John F. Kelly hinted that he wanted full access to visa applicants’ social-media profiles. “We may want to get on their social media, with passwords. It’s very hard to truly vet these people in these countries, the seven countries,” Kelly said to the House’s Homeland Security Committee, adding, “If they don’t cooperate, they can go back.”

Such a proposal, if implemented, would expand the department’s secretive social media monitoring capacities. And as the Department of Homeland Security moves toward grabbing more social media data from foreigners, such information may be increasingly interpreted and emotionally characterized by sophisticated data-mining programs. What should be constitutionally protected speech could now hinder the mobility of travelers because of a secretive regime that subjects a person’s online words to experimental “emotion analysis.” According to audio leaked to The Nation, the Department of Homeland Security is currently building up datasets with social media–profile information that are searchable by “tone.”

What does “tone” mean? It’s the AI that recognizes aggressive jihadi-sounding talk, or antifa talk, or Black Lives Matter talk, or “Trump is a complete dipshit” talk. DHS was already asking for people’s Facebook and other social media information; then they collected way too much of it, and now they’ve got to figure out what to do with it. “Emotion analysis” is just ignorance-speak for training sets. No doubt someone is going to sell DHS some very fancy AI stuff, but they could probably use POPFile. [wik]
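To make “training sets” concrete: instead of hand-writing the phrase lists, a naive Bayes filter (the family of technique POPFile uses) learns per-word weights from labeled examples. The sketch below is a minimal multinomial naive Bayes with add-one smoothing, trained on four made-up postings. It is not DHS’s system, whose internals the source does not describe, and a real “tone” classifier would need vastly more data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()              # label -> number of training docs
        self.vocab = set()

    def train(self, text, label):
        words = tokenize(text)
        self.word_counts[label].update(words)
        self.doc_counts[label] += 1
        self.vocab.update(words)

    def log_scores(self, text):
        """Log prior plus summed log likelihoods: still basically a scoring system."""
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            s = math.log(self.doc_counts[label] / total_docs)
            for w in tokenize(text):
                s += math.log((counts[w] + 1) / (total_words + len(self.vocab)))
            scores[label] = s
        return scores

    def classify(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical, made-up training set for illustration only.
model = NaiveBayes()
for text, label in [
    ("the troops deserve our support and thanks", "pro_military"),
    ("proud of every soldier and veteran", "pro_military"),
    ("the government is a tyranny", "anti_government"),
    ("resist this government overreach", "anti_government"),
]:
    model.train(text, label)

if __name__ == "__main__":
    print(model.classify("support the troops"))
    print(model.classify("stop government tyranny"))
```

The “intelligence” lives entirely in which postings got which labels; change the training set and the same code produces a different notion of “tone.”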

At an industry conference in January, Michael Potts, then-deputy under secretary for enterprise and mission support at the DHS’s Office of Intelligence and Analysis, told audience members that the DHS’s unclassified-data environment today has four datasets that are “searchable by tone,” and plans to have 20 additional such datasets before the end of this year. This data environment, known as Neptune, includes data from US Customs and Border Protection’s Electronic System for Travel Authorization database, which currently retains publicly available social media account data from immigrants and travelers participating in the Visa Waiver Program.

[source]

(Patrick Gray from Risky Business [rb] interviews Marcus Ranum on “Big Data” at RSA Conference 2014)

It’s going to work, but not well enough to help anyone. It’s also very easy to defeat: you should be building your Facebook profile now, with your neatly sanitized “share” stream and your public identity. Of course the NSA will be able to tell that you log in to that account and your real account from the same DHCP cloud, with the same tracking cookies in your browser. But that linkage won’t be available interagency for some time to come, and if it does become available it’ll skew all the probabilities in the AIs’ training sets: suddenly you will have accounts that are 50% anti-government and 50% pro-government, and someone will have to sort them out manually (which means it won’t happen). This is what is called a Disambiguation Cost Attack. [cyberinsurgency]
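The disambiguation cost comes down to a few lines of arithmetic. This hypothetical sketch assumes a triage rule that auto-labels an account only when one category’s share of matched phrases beats the runner-up by some margin (0.2 here, an arbitrary assumption), and otherwise sends it to manual review. Linking a sanitized account to a real one drags the combined shares toward 50/50.

```python
def shares(counts):
    """Convert per-category phrase counts into fractions of the total."""
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}


def merge(a, b):
    """Combine phrase counts from two accounts believed to belong to one person."""
    return {k: a.get(k, 0) + b.get(k, 0) for k in set(a) | set(b)}


def triage(counts, margin=0.2):
    """Hypothetical rule: label only when the top category clearly dominates."""
    ranked = sorted(shares(counts).items(), key=lambda kv: kv[1], reverse=True)
    if not ranked:
        return "unknown"
    if len(ranked) == 1 or ranked[0][1] - ranked[1][1] >= margin:
        return ranked[0][0]
    return "manual review"


# Made-up counts for illustration.
real = {"anti_government": 18, "pro_government": 2}
sanitized = {"anti_government": 0, "pro_government": 16}

if __name__ == "__main__":
    print(triage(real), triage(sanitized), triage(merge(real, sanitized)))
```

With these made-up counts, each account on its own gets a confident label, while the merged profile lands exactly at 50/50 and goes to the manual-review pile.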

The department reviewed hundreds of tools pertinent to vetting foreigners and conducting criminal investigations, and by September it was using social media for “30 different operational and investigative purposes within the department,”

All these “Big Data” systems will keep getting embiggened, with more layers of AI bolted on because they’re already too big. But they are definitely a threat. Mistakes will kill: I was already pulled over by threatening, gun-toting goons because of a mistake in Pennsylvania’s license plate scanners. If I were black, I’d have been in mortal danger. [stderr]

When you have a “Big Data” fire, the best way to put it out is to add more data, naturally: [beast]

U.S. airlines use cameras to capture the faces of fliers and are giving the images to the government.

JetBlue started using customers’ biometric data, unique physical traits, in June to let them get on flights from Boston to Aruba without a boarding pass. At the same time, JetBlue started sending the data to Customs and Border Protection so the government could vet travelers. Delta is currently in discussions with CBP to do the same.

Even Homeland Security concedes airlines may use this for purposes other than ID checks.

“There is a risk that approved partners will use biometric images collected under the [service] for a purpose other than identity verification,” CBP said in a June privacy impact assessment (PDF).

I wonder what’s next? Perhaps the AIs will be asked to classify travelers based on whether they look “grumpy” or not, and merge that assessment against “tone” to get a full personality profile. In which case they will have to figure out how to tell someone who merely hates United Airlines’ lack of leg-room from someone who hates America for its Freedoms. Maybe they will use Faception – remember that one? [stderr]

The agency admits there are many privacy issues surrounding this “partner process” that need some resolving.

That’s government code for “we’re doing whatever we want and we’ll sort out whether it’s the right thing or not, if we wake up some day and discover that we give a shit.”

[Parts of this happy little ray of sunshine were forwarded to me by Shiv via the Hive Mind Interconnect]

It is worth noting that the Facebook mining of visa applications was instituted under President Barack Obama, in 2015.

Facial recognition systems don’t work as well on black people because the cameras are tuned for higher contrast ratios (e.g., a fish-pale white guy with dark eyebrows!)

Facial recognition/expression recognition is based almost entirely on the ‘science’ of Paul Ekman, a psychologist (I’d say “pseudo-scientist”) who developed a system of expression components. It turns out, naturally, that Ekman used himself and his white collegiate friends and students, and produced yet another branch of psychology that appears to be objective but is, in fact, bullshit. What’s really annoying about this is, if you build an AI that recognizes expressions based on Ekman, you have an AI that is as wrong as a psychologist. Way to go!

Technically the plural of “codex” should be “codices”.

I wonder if there’s an AI out there scanning the internet for Latin-based grammatical pedantry? If there is, then I’m no doubt on several government offenders’ indexes already. Or, rather, indices, as we offenders call them.

Still, on the up side I’m probably fast tracked for travel to the Vatican now.

So it probably doesn’t make much difference in the end, but I do not have any social media accounts (except for linkedin, and I don’t do anything with that), I haven’t flown anywhere in many years, and I probably won’t be flying very much (or any at all) in the future. Will I just be slower to be categorized?

AI just needs to look for multiple exclamation marks and caps lock and they’ll get a ~~terrorist~~ freedom fighter every time.

BUT LOFTY, SOME OF US USE THAT METHOD FOR SNARKING!!!!111!1!1

cartomancer@#1:

I wonder if there’s an AI out there scanning the internet for Latin-based grammatical pedantry?

```
10 BEGIN { Subject: "cartomancer", Module: TuringTest1.0 }
20 ? Hello $Subject, have you stopped beating your significant other?
```

[REDACTED Classification level insufficient]

johnson catman@#4:

SOME OF US USE THAT METHOD FOR SNARKING!!!!111!1!1

How long will it take the snark-bots to learn that trick, I wonder?

My question is how long will it be before this sort of thing, and all the other sorts of things like this, actually start to become a serious impediment to travel and trade?

Virtually all tourists and all business travelers but the top echelon of company executives are proles like us. Will this have a significant economic impact (yes, it already does) … but one big enough to be noticed and cared about by the gubmint?

It feels like a repeat of profiling.

If Joe Bloe the guardsman is on the lookout for a bearded dude in a turban*, terrorists will recruit John Smith the Bank Clerk to do the deed.

*I’m aware this is usually indicative of Sikhism. Try telling that to the security theatre.

Well, what happens if you do not have these social accounts?

I am sure Marcus and his readership could work out ways to defeat the program. I think it would only catch amateurs.

@Cartomancer, #1: It depends.

Loan words which have acquired new meanings retain the pluralisation rules of the donor language in their original meaning, but follow English pluralisation rules when used in the new sense (because they are now English words in their own right). So beetles have antennae, but radios have antennas.

If we were talking about physical books bound at one edge, they certainly would be codices; the question is whether the meaning has changed significantly enough. Actually, since edge-binding is all about finding any given page quickly, maybe random-access databases are still codices…

@Cartomancer, #1:

Don’t be ridiculous. If the plural of codex is codices, then the plural of rocks must be roces. And everyone knows the Roces were a tri-femalular singing group until Maggie died earlier this year.

The singular of lynx is link.