Warning: Sexual practices, bodily fluids

There’s an old USENET dictum: “never underestimate the bandwidth of a station wagon full of magnetic tapes.” (corollary: yeah, but the latency’s killer)

What do you do when you need to move a whole lot of data into the cloud? Let’s say a petabyte (a million gigabytes)? Assuming you can get 50% saturation out of a gigabit link, that’s about 185 days of transfer time. And, if you’re like me and you live in Verizon’s metered LTE cloud, where every gigabyte over 30 GB costs $15, it’d cost a bit less than $15 million. This is a real question, though, for organizations that are moving video archives (think: all the cop-camera footage in a major city) (heh, I’m kidding, major cities don’t keep that stuff!) or libraries, or genomic databases; what do they do?
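For anyone who wants to check the back-of-the-envelope numbers, here is a minimal sketch. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), a flat $15 per gigabyte beyond the first 30 GB, and no protocol overhead; the function and constant names are made up for illustration.

```python
# Back-of-the-envelope estimate; all names here are illustrative assumptions.
PETABYTE_BYTES = 10**15          # decimal petabyte (assumption)
GIGABYTE_BYTES = 10**9           # decimal gigabyte (assumption)
LINK_BITS_PER_SECOND = 10**9     # gigabit link
UTILIZATION = 0.5                # 50% saturation, as in the text
FREE_GB = 30                     # gigabytes included before overage kicks in
OVERAGE_USD_PER_GB = 15          # overage price per gigabyte


def transfer_days(size_bytes: int, link_bps: int, utilization: float) -> float:
    """Days needed to push size_bytes through the link at the given utilization."""
    seconds = size_bytes * 8 / (link_bps * utilization)
    return seconds / 86_400


def overage_cost_usd(size_bytes: int, free_gb: int, usd_per_gb: int) -> float:
    """Cost of every gigabyte beyond the free allowance."""
    size_gb = size_bytes / GIGABYTE_BYTES
    return max(size_gb - free_gb, 0) * usd_per_gb


if __name__ == "__main__":
    days = transfer_days(PETABYTE_BYTES, LINK_BITS_PER_SECOND, UTILIZATION)
    cost = overage_cost_usd(PETABYTE_BYTES, FREE_GB, OVERAGE_USD_PER_GB)
    print(f"transfer time: {days:.0f} days")
    print(f"overage cost:  ${cost:,.0f}")
```

Change `UTILIZATION` or the link speed to see how quickly the truck starts to look attractive.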

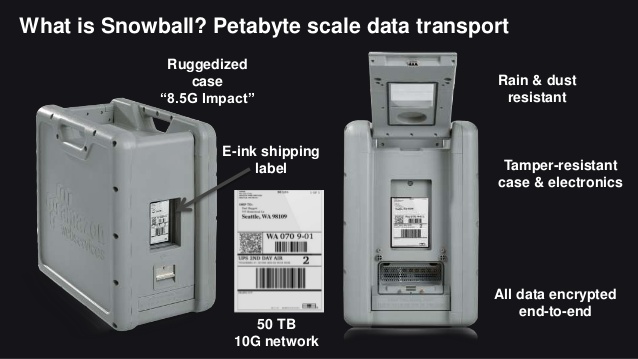

Amazon has an answer! It’s a truckload of tapes! Things start small with the Snowball appliance – an armored box for data. It’s pretty cool: you set it up on your network, copy your data to it, ship it to Amazon, they offload it into S3, and your data is in the cloud!

In the Snowball appliance, the truck is FedEx or UPS, or maybe your system administrator has to drive it somewhere in the back of their car. I have scary memories of when I was a young pup at Digital and our RA-81s moved from one facility to another in the back of my little Honda CRX. Nowadays most people don’t even understand “I put a VAX 11/750 rack in the back of a Honda CRX” and recognize that for the herculean feat it was. I also dropped a VR/219 monitor down a flight of stairs in the process; it survived.

If the armored box of hard drives doesn’t work for you, there’s the Snowmobile:

Amazon snowmobile – 40,000lbs of “lock in”

The Snowmobile is what you’d use if you had an Exabyte of data: it’s a small convoy of semitrailers full of hard drives. I wonder whether the NSA had an armed “midnight run” from Ft Meade, MD to the new data center in Utah, or were they just cross-syncing the data all along? There’s a cool plot in there for a cyberpunk adventure: someone thinks they’re about to knock over a truck full of iPhones but accidentally they get a petabyte of CIA data; hilarity and body-count ensue.

But, none of that was what I wanted to write about today. I wanted to talk about marketing. In well-run businesses, there’s a role called a “product manager” who interfaces with the engineering team and marketing team, and makes sure that things like the software licensing model, documentation, product collateral, and product name are in line with corporate road-maps. A product manager would be the person who would have flagged some Amazon engineer’s play when they tried to name a cloud computing data transfer after the sexual practice of spitting semen into one’s partner’s mouth.

Hey, I don’t care. I’m about as open-minded as you can get about this stuff. Have fun, y’all!

The data goes from your network, to the cloud, in one easy quick transaction

I wonder if they’re planning on offering an audit/log-data retention service and if they’ll call it “felching” [wikipedia] Those witty coder-bros! C’mon, there’s NO WAY that was accidental.

Ah, you’re making me nostalgic. One of the jobs I had in college was as “Data Aid” for a SCADA system (electrical grid control) destined for the PASNY Niagara Project. IIRC, it was dual PDP machines (my memory says 11/780, but I thought this was pre-VAX) with custom hardware to fail over between the hot and standby systems. That’s the summer I spent some fraction of my time using some very expensive hardware to play Adventure (aka Colossal Cave). This was before the RA-81; we were using removable disk packs (Google Image shows an RP04 that looks familiar)–I remember we had to park those suckers in the drive for some time before spinning them up so we didn’t get a thermal mismatch etching the platters.

Speaking of product managers missing stuff, the scuttlebutt (urban legend?) around the industry is that the Intel crew snuck some opcodes into the macro assembler allowing Logical OR and Logical AND into the SX register, giving us ORL SX and ANL SX.

@SOP

There’s a Microsoft protocol called MMS for delivering multimedia streams. The binary protocol involves several 32-bit constants that have to be *just so* otherwise the other end won’t talk to you (and given already fielded software, these pretty much can’t be changed unless you’re prepared to break them.) They published the protocol specification in 2008, and almost all of those constants are given in hex, apart from one. For some reason they specify 2953575118 in decimal…
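If you want to see why that one constant might have been left in decimal, convert it yourself. A minimal sketch; the helper name `as_hex32` is mine, not anything from the protocol specification:

```python
MAGIC_DECIMAL = 2953575118  # the constant as quoted above


def as_hex32(value: int) -> str:
    """Format an unsigned 32-bit value as eight upper-case hex digits."""
    if not 0 <= value <= 0xFFFFFFFF:
        raise ValueError("value does not fit in 32 bits")
    return f"0x{value:08X}"


if __name__ == "__main__":
    print(as_hex32(MAGIC_DECIMAL))
```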

Some Old Programmer@#1:

Google Image shows an RP04 that looks familiar

There were a bunch – RP04, RP06, RP07. Damn those things were heavy; they made disk drives like bulldozers in those days.

Yes, you had to let the packs come up to temperature; everyone learned that the hard way. Same with RA-81s, too. It’s weird that those things were so big and solid, yet they had such fine tolerances – and those tolerances were nothing compared to where hard drives run nowadays. RA-81s were so cool – if you resected the top of the unit there was a serial port you could plug a VT-52 into, and talk directly to the drive. Like, seriously, “how are you, hard drive? Oh yea? Well, could you position your head over at block #27? And what’s your bad block map look like these days?” I know because I saw an old school systems wizard reconstruct a munged bad block table by hand.

That failover stuff later became part of Digital’s advanced drive controller software and was used in VAX clusters and some databases. When General Robotics made an IPI dual-hosted controller for Sun SMD disks, I coded a hot/cold transfer daemon for them; those were fun times.

the scuttlebutt (urban legend?) around the industry is that the Intel crew snuck some opcodes into the macro assembler allowing Logical OR and Logical AND into the SX register, giving us ORL SX and ANL SX.

I know just the people to ask… Let me. (I’ll get back to you if I hear anything)

xohjoh2n@#2:

For some reason they specify 2953575118 in decimal…

Clever. And I thought using 0xDEADBEEF as a magic number was “edgy”

Hmm. We had a PDP 11/70 with 3 drives taking 4-5(?) platter disk packs, and a 11/34 taking a front-loading thin (1 platter?) cartridge. Don’t remember the drive/pack model names. But they were basically shoveled in and loaded up, no particular wait for thermal equilibrium on disk change. (Then again everything was kept in the same room so maybe no need.) ISTR it being mentioned that the drives originally came with “brusher” sponge pads, which “everyone” removed because they removed more disk surface than dust…

(Oh, and modern hard disks also have a serial interface. You can talk directly to the block remapper about zones and shit. And tell it what to do in ways you can’t over the normal SATA interface. Not that I’d trust a drive I’d ever done that to with real data ever again…)

Don’t remember the precise model now, but one evening in the High Energy Physics building, a friend and I were working in our shared office when a grad turkey walked in and said she needed some help putting a pack into a drive in one of the downstairs labs. Seems simple enough, so we trooped down to help. Totally routine-looking job: my friend removed the pack in the drive whilst I removed the cover from the new pack, and a screw fell out.

Er… it’s probably not wise to install this pack, that’s not supposed to happen. Why not? Well, a surface could be damaged or a platter loose, either of which could then cause the drive to be damaged, and that’s seriously expensive…

The pack wasn’t installed, and the cover (and screw) secured with tape and a “Do Not Use” sign, with our contact details. Never heard anything more about it.

(Around the same time, a different friend at a different facility had, without thinking, after a head crash, moved the pack to the second drive. The damaged pack then crashed the heads on that drive… Oops!)

I always liked 0x0BADC0DE for no bad code. Also useful for private fake USB device codes.

I thought that was called juggling?

I had a bit of fun once with the startup code for a new architecture project I was involved in. What I wanted to do was “salt” the (huge) register-file with values which were “maximally illegal”, “maximally improbable”, and “origin unique”; that is, each register’s initial value would indicate which register it was (origin unique) whilst also being very very unlikely to be a valid value (maximally improbable) and also, as much as possible, an illegal value (maximally illegal, causing some sort of processor fault if used). This was on a 64-bit machine.

The scheme I eventually cooked up was a negative odd integer for all of the four 16-, both 32-, and the 64-bit values, a guaranteed impossible address (there were values which could never be valid addresses on this machine and caused a specific unique fault if used in an address-like manner (not just dereferencing)), a double-precision floating point NaN (couldn’t quite also make it a single-precision NaN, but a survey showed extremely little use of single-precision), and encoded the register ID in an easily-seen “human”-readable format (in a hex dump).

The idea here, of course, was multi-fold: if you observed one of these rather unique-looking values in an unexpected place, you had a warning that something could be wrong (as well as a starting place when looking for the problem), and when used in many contexts the value would either generate a fault (NaNs & bad pointers) or else, quite possibly / hopefully, cause unexpected behaviour (negative odd integers).

It worked, albeit I’m uncertain if any hard-to-find bugs were ever caught (or caused!) by this careful “poison” salting.
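To make the constraints concrete, here is a hypothetical bit layout that satisfies the integer and NaN properties described above. It is only a sketch, not the scheme actually used: the layout, the constants `0xFFFD` and `0x8001`, and every function name are assumptions for illustration, and the machine-specific "impossible address" property is not modelled at all.

```python
import math
import struct


def poison_value(reg: int) -> int:
    """Build a 64-bit poison pattern for register number reg (0..255).

    Hypothetical layout: top 16 bits are 0xFFFD, and each of the three lower
    16-bit lanes is 0x8rr1, where rr is the register number in hex.
    """
    if not 0 <= reg <= 0xFF:
        raise ValueError("register number must fit in two hex digits")
    lane = 0x8001 | (reg << 4)  # sign bit set, low bit set, ID in the middle
    return (0xFFFD << 48) | (lane << 32) | (lane << 16) | lane


def as_signed(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's-complement integer."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value >> (bits - 1) else value


def lanes(value: int, bits: int) -> list[int]:
    """Split a 64-bit value into signed lanes of the given width."""
    return [as_signed(value >> shift, bits) for shift in range(0, 64, bits)]


def as_double(value: int) -> float:
    """Reinterpret the 64-bit pattern as an IEEE 754 double."""
    return struct.unpack("<d", struct.pack("<Q", value))[0]


if __name__ == "__main__":
    value = poison_value(0x2A)
    print(f"{value:016X}")            # register ID is visible in the hex dump
    for width in (16, 32, 64):
        print(width, lanes(value, width))
    print(math.isnan(as_double(value)))
```

In this layout only the upper 32-bit half happens to be a single-precision NaN, which matches the compromise mentioned above.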