The intelligence state has not been collecting data for its own entertainment; it has to find a use for it. Remember when the Bush administration’s secret interception program was disclosed? Probably the most painful bit of news in it was that the NSA wasn’t (yet) capable of doing anything particularly interesting or useful with the data.

If you’ve worked in computing at all in the last few years, you will have noticed there is a big push surrounding artificial intelligence and “big data” – these things are all interconnected:

- Collect data into a big pile

- Realize it is far too much to understand, let alone search through

- I know! Let’s get some software to do it!

- Machine learning

Machine learning/AI does work, but what it learns depends on having a measurable success criterion. It’s like the joke:

An AI walks into a bar and the bartender asks “what do you want?” The AI points to the guy next to him and says “I’ll have what he’s having.” Another AI walks into the bar, points at the first AI and says “I’ll have what he’s having.”
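The joke is a fair description of the simplest learner there is, a 1-nearest-neighbour classifier. It has no idea whether a label is right; it just copies the label of whichever past case looks most like the new one. A minimal sketch: the feature vectors, the labels and the `nearest_neighbor_predict` helper are hypothetical, not taken from any real system.

```python
import math

def nearest_neighbor_predict(training, query):
    """Return the label of the training example closest to `query`.

    `training` is a list of (features, label) pairs, features being tuples of floats.
    The model never asks whether a label is correct; it only copies the label
    of the most similar past case.
    """
    best_label = None
    best_dist = math.inf
    for features, label in training:
        dist = math.dist(features, query)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_dist = dist
            best_label = label
    return best_label

# Hypothetical labelled history: (feature vector, label a human assigned).
history = [
    ((1.0, 1.0), "flag"),
    ((5.0, 5.0), "ignore"),
]

# "I'll have what he's having."
print(nearest_neighbor_predict(history, (1.5, 0.5)))
```

Whatever judgement produced the labels in `history` is what comes back out; the "success criterion" is simply agreeing with whoever labelled the data.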

None of that matters, anymore. Now that the police state has authorized itself to collect and process whatever data it can collect, that’s what it’s going to do. And damn the consequences – we must prove that the effort was successful, for budgetary reasons.

Reporting in The Verge: [verge]

According to Ronal Serpas, the department’s chief at the time, one of the tools used by the New Orleans Police Department to identify members of gangs like 3NG and the 39ers came from the Silicon Valley company Palantir. The company provided software to a secretive NOPD program that traced people’s ties to other gang members, outlined criminal histories, analyzed social media, and predicted the likelihood that individuals would commit violence or become a victim. As part of the discovery process in Lewis’ trial, the government turned over more than 60,000 pages of documents detailing evidence gathered against him from confidential informants, ballistics, and other sources – but they made no mention of the NOPD’s partnership with Palantir, according to a source familiar with the 39ers trial.

The program began in 2012 as a partnership between New Orleans Police and Palantir Technologies, a data-mining firm founded with seed money from the CIA’s venture capital firm. According to interviews and documents obtained by The Verge, the initiative was essentially a predictive policing program, similar to the “heat list” in Chicago that purports to predict which people are likely drivers or victims of violence.

This is cops doing what cops always do: they identify a ‘bad neighborhood’ and ‘bad actors’ and watch who they talk to and what they do. The only difference is that there is now a piece of machine learning in there to help add new links to the analysis based on probability.
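Adding "new links based on probability" is usually some form of link prediction. One of the oldest heuristics just counts shared contacts: two people who both know the same people get scored as likely to know each other. This is a sketch of that common-neighbours heuristic, assuming the input is a plain list of known associations; it is not a claim about what Palantir’s software actually does, and the names and edges are made up.

```python
from itertools import combinations

def common_neighbor_scores(edges):
    """Score every unlinked pair of people by how many contacts they share.

    `edges` is an iterable of (a, b) pairs meaning "a and b are known to interact".
    Returns {(a, b): shared_contact_count} for pairs that are not already linked
    and share at least one contact (the common-neighbours heuristic).
    """
    neighbors = {}
    for a, b in edges:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    scores = {}
    for a, b in combinations(sorted(neighbors), 2):
        if b in neighbors[a]:
            continue  # already a known link, nothing to predict
        shared = len(neighbors[a] & neighbors[b])
        if shared > 0:
            scores[(a, b)] = shared
    return scores

# Hypothetical contact graph, e.g. assembled from stops, arrests and social media.
known_links = [("A", "B"), ("A", "C"), ("D", "B"), ("D", "C"), ("E", "C")]
print(common_neighbor_scores(known_links))
```

E has only ever been linked to C, yet comes out with predicted ties to A and D. A real system might turn such counts into probabilities, but the guilt-by-association structure stays the same.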

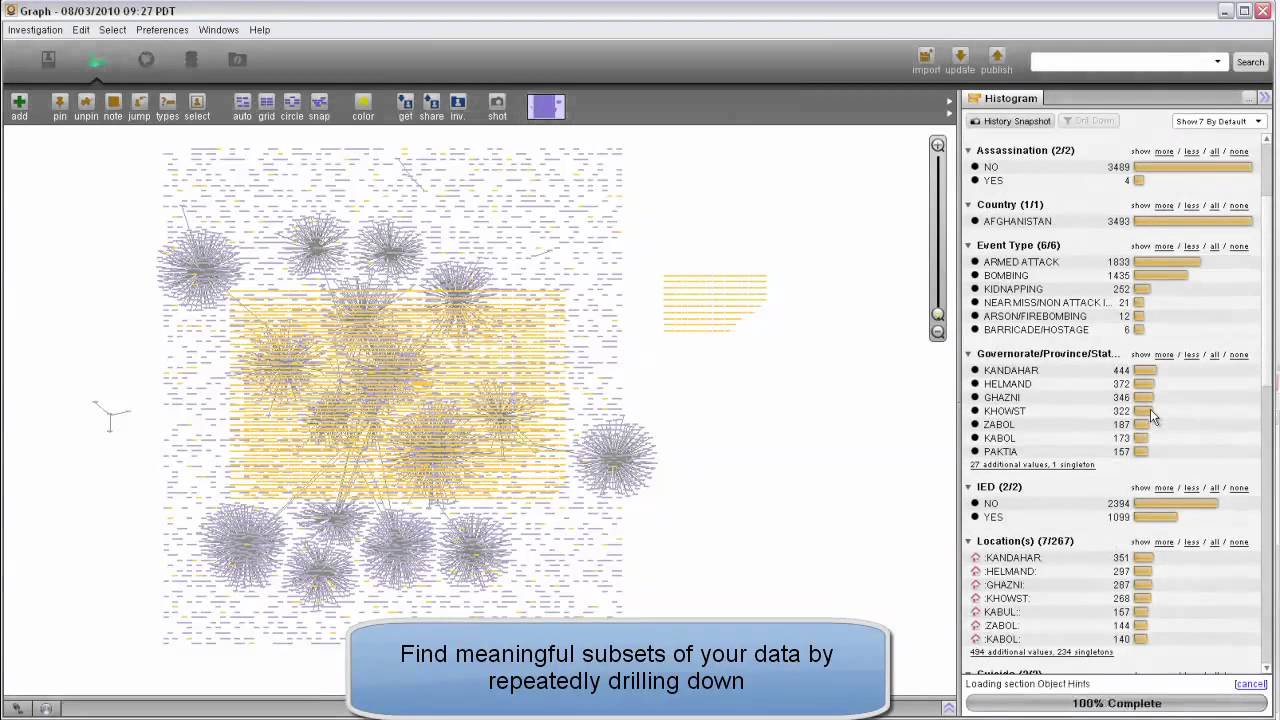

Palantir: “drill down into subsets of data”

When we say that “racists will train racist AIs” this is exactly what we’re talking about. What is Palantir going to find in those areas? More of what cops have already been finding in those areas. In the places where cops turn a blind eye to the peccadilloes of the wealthy, we’ll see neighborhoods that look peaceful and well-maintained. In the places where cops are looking more closely for gang activity, we will find gang activity. Then the software will magnify it.
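The mechanism fits in one line of arithmetic: police records count offences that were observed, not offences that happened, so what lands in the data is roughly the true number times the chance of being noticed. A toy sketch with made-up figures and a hypothetical `expected_records` helper:

```python
def expected_records(true_offences, detection_prob):
    """Offences that end up in the data: only the fraction police actually observe."""
    return true_offences * detection_prob

# Hypothetical areas with identical behaviour (500 offences each)
# but very different levels of police attention.
recorded = {
    "closely_watched": expected_records(500, 0.6),
    "blind_eye": expected_records(500, 0.1),
}

# Anything trained on `recorded` sees six times as much crime in one area.
ratio = recorded["closely_watched"] / recorded["blind_eye"]
hottest = max(recorded, key=recorded.get)
print(recorded, ratio, hottest)
```

The underlying behaviour is identical; the only thing the data measures is where the cops were looking.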

I’m not particularly concerned that this is going to turn into a magical Big Brother system – I predict it’s mostly a boondoggle that is going to suck a bunch of money into a basically useless (but pretty) system. Here’s where I get a bit worried:

Predictive policing technology has proven highly controversial wherever it is implemented, but in New Orleans, the program escaped public notice, partly because Palantir established it as a philanthropic relationship with the city through Mayor Mitch Landrieu’s signature NOLA For Life program. Thanks to its philanthropic status, as well as New Orleans’ “strong mayor” model of government, the agreement never passed through a public procurement process.

Reading that carefully, it appears that New Orleans’ dictator mayor tossed a bunch of procurement dollars to a defense/intelligence company under the table and called it a humanitarian gesture. That’s just plain old garden-variety cronyism and corruption. But it sounds like Palantir’s mostly doing this as part of its sales cycle – get in the door, get the software in use, do some demos, charge some dollars.

“Palantir is a great example of an absolutely ridiculous amount of money spent on a tech tool that may have some application,” the former official said. “However, it’s not the right tool for local and state law enforcement.”

The concern, to me, is that machine learning is going to amplify the existing expectations of the NOLA police; it’s a self-fulfilling prophecy system.
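The self-fulfilling part shows up as soon as the output decides where officers are sent. A deliberately crude toy model, assuming one patrol per day goes to whichever district has the most records so far, and offences are only recorded where the patrol is; the district names, the numbers and `simulate_hotspot_policing` are invented for illustration.

```python
def simulate_hotspot_policing(initial_records, true_daily_offences, days):
    """Toy predictive-policing feedback loop.

    Each day the single patrol goes to the district with the most recorded
    incidents so far (the "hot spot"). Offences are only recorded where the
    patrol is, so whichever district starts slightly ahead collects all the
    new records, even if both districts offend at the same true rate.
    """
    records = dict(initial_records)
    for _ in range(days):
        hotspot = max(records, key=records.get)
        records[hotspot] += true_daily_offences[hotspot]
    return records

# Hypothetical start: district A has one extra historical record.
start = {"A": 6, "B": 5}
same_true_rate = {"A": 3, "B": 3}  # identical underlying behaviour
print(simulate_hotspot_policing(start, same_true_rate, days=30))
```

After 30 days district A has 96 records and B still has 5, although both offend at exactly the same rate. Nothing in the loop ever looks at B again, so the data has no way of correcting itself.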

And then there’s the pesky issue that cops just started sharing all that data about their citizens, with no control or oversight. Remember the Strava heatmap disaster? As police and intelligence agencies keep getting their hands on more and more data, there will be many more stories like that – except they will be personal. The capabilities of such systems are already much greater than most people realize. Want to find out where a certain person spends their time? Consult the license-plate scanner systems, locate them roughly, then search for anything related in the target area. If you have the identifier of someone’s personal tracking device (their smartphone), you can heat-map them just like with Strava. The line around who can access and share data is getting more and more blurred, as companies cheerfully hand over data in response to polite requests from government. The police no longer need a warrant to place a tracking device on your car if they can get your location data from your Waze or Google Maps server requests. Data is now kept on multiple platforms at different resolutions, and combining it to build a very precise picture keeps getting easier. And it’s all happening with, basically, no oversight or regulation.
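Heat-mapping a device really is that simple once you have its location points: snap each point to a grid cell and count. A minimal sketch, assuming the pings are already available as (latitude, longitude) pairs for one device identifier; the coordinates and the `heat_map` function are hypothetical.

```python
import math
from collections import Counter

def heat_map(pings, cells_per_degree=100):
    """Bin (lat, lon) pings into a grid and count how many fall in each cell.

    With cells_per_degree=100 each cell is 0.01 degrees on a side,
    roughly 1.1 km north-south.
    """
    counts = Counter()
    for lat, lon in pings:
        cell = (math.floor(lat * cells_per_degree), math.floor(lon * cells_per_degree))
        counts[cell] += 1
    return counts

# Hypothetical pings for one device identifier, e.g. from app location requests.
pings = [
    (29.9512, -90.0715), (29.9518, -90.0712), (29.9515, -90.0718),  # evenings
    (29.9631, -90.0423), (29.9634, -90.0427),                       # weekdays
    (29.9305, -90.1104),                                            # once
]

counts = heat_map(pings)
print(counts.most_common(2))
```

The busiest cells are where the owner sleeps and works; adding timestamps, which this sketch ignores, would tell you when.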

Someone needs to tell those cops “Hey we just spent your raises on Palantir!” Because, basically, that’s what’s going on. Everyone’s got their hand in the till but Palantir’s got a clever sales rep who made his quarter with that “philanthropy” trick. Want to bet this is going to crop up elsewhere?

By the way, when articles about Palantir mention that the company has tried to expand into commercial markets with less success, what they are really saying is: “It doesn’t actually do very much, but when the intelligence guys use it they classify it so they don’t have to disclose that they bought a bunch of expensive software that doesn’t do anything useful.”

“Palantir”

Tolkien’s estate should be suing …. someone.

Brazil does seem less and less like a fictional dystopia when looking over to your side of the pond. I wonder how much the European governments are into developing software like this (or buying it from the USA?). (Also an… let’s say interesting experience, when you see that movie at the age of around ten.)

Palantir: So people of little willpower only see burning hands on screen? Must be a high amount of arson in the neighbourhood!

…the CIA’s venture capital firm.

I. Just. Can’t.

fusilier

James 2:24

Before retiring (and getting into dog breeding) my mother used to work for the State Revenue Service. Almost all employees there routinely abused the fact that they had access to databases with citizens’ personal information. If a state revenue service employee knew somebody’s name, they could access lots of personal information about this person: their home address, information about who they live with, their workplace, their work history, their income, their national identification number, their marital status, a list of all their relatives, what real estate they own. In some specific cases it was possible to get even more data: how much cash they are storing at home (or in bank accounts), what cars they own, what valuable stuff they own in general. Employees routinely accessed this database for poor reasons. Sometimes it was pure curiosity (“I wonder what stuff this celebrity owns and where they live”). Sometimes it was worse (“my daughter’s been dating this person, I wonder how rich he is”). Technically it was possible to cause even more damage, for example, a list of home addresses where people are storing large amounts of cash could be valuable for a burglar.

chigau @#1

I wasn’t aware that the name came from Tolkien’s works. Instead, the moment I read this name, my brain somehow associated it with the Panopticon. Now that I think about it, these names aren’t that similar, only the first syllable is the same, so I’m not sure why that was the first thing I started thinking about.

I have read, and recommend, Cathy O’Neil’s “Weapons of Math Destruction”. I think that the new problem that AI and Big Data presents is not corruption (which has always been with us) but what O’Neil describes: “Big Data processes codify the past. They do not invent the future. Doing that requires moral imagination…”

Starting businesses in poor areas has a discouraging record. Medical insurance for people with bad health has not proved profitable. Women have not succeeded in engineering as well as men. Machine learning can work this out all by itself! It is not racist programmers that are the real problem – it is AIs trained on data from a racist society.
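This holds even when the protected attribute is deleted from the data, because other fields act as proxies for it. A minimal sketch with invented records and a hypothetical `fit_rate_by` helper: the "model" only ever looks at postcode.

```python
from collections import defaultdict

def fit_rate_by(history, feature):
    """'Train' the simplest possible model: historical approval rate per value of one feature."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for record in history:
        totals[record[feature]] += 1
        approved[record[feature]] += record["approved"]
    return {value: approved[value] / totals[value] for value in totals}

# Hypothetical past decisions from a biased process. The model never reads
# "group", but in this made-up data postcode and group line up exactly.
history = [
    {"postcode": "P1", "group": "X", "approved": 0},
    {"postcode": "P1", "group": "X", "approved": 0},
    {"postcode": "P1", "group": "X", "approved": 1},
    {"postcode": "P1", "group": "X", "approved": 0},
    {"postcode": "P2", "group": "Y", "approved": 1},
    {"postcode": "P2", "group": "Y", "approved": 1},
    {"postcode": "P2", "group": "Y", "approved": 0},
    {"postcode": "P2", "group": "Y", "approved": 1},
]

model = fit_rate_by(history, "postcode")
print(model)
```

Scoring new applicants by postcode gives group X a 0.25 and group Y a 0.75, which is the old disparity, learned without anyone ever mentioning group.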

There is an ongoing experiment with face recognition in Berlin, at a railway/light-rail junction. The database was built from a pool of ~300 volunteers with good photos. False negative rates are 5-30%, depending on lighting conditions.

False positive rate is 0.3%.

Which means to me IMNSHO, it’s mostly useless.
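The 0.3% figure sounds small until it is multiplied by the number of faces a station camera sees. A back-of-the-envelope sketch; the daily footfall, the watchlist size and the `expected_alerts` helper are assumptions for illustration, and the 70% hit rate takes the pessimistic end of the quoted 5-30% false-negative range.

```python
def expected_alerts(passers_by, watchlisted, hit_rate, false_positive_rate):
    """Expected true and false alarms per day, and the fraction of alarms that are real."""
    innocent = passers_by - watchlisted
    true_alerts = watchlisted * hit_rate
    false_alerts = innocent * false_positive_rate
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision

# Hypothetical: 100,000 people pass per day, 10 of them on the watchlist.
true_alerts, false_alerts, precision = expected_alerts(100_000, 10, 0.70, 0.003)
print(f"{true_alerts:.0f} real hits, {false_alerts:.0f} false alarms, {precision:.1%} of alarms real")
```

That works out to roughly 300 false alarms a day for about 7 real hits, so fewer than 1 alarm in 40 points at someone actually on the list.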

Well, people do like it when expensive software tells them what they suspected all along. It feels good to be right and because the software was expensive* it must be true.

*Or, for the general case, the model was very complex

After signature strikes, do we get signature policing? “This is the police, open the door! You scored 0.96 and are a potential danger to society, the judge has issued a search warrant.”

If you stare into palantir, does Peter Thiel stare back?

fusilier@#3:

…the CIA’s venture capital firm.

I. Just. Can’t.

The intelligence community used to hire contracting companies to produce specific technologies (like all the Vault-7 malware) but back in the late 1990s someone thought “maybe we should start entire companies.” I think that it was basically a bunch of spooks who wanted to play venture capitalist using someone else’s money.

I’ve had a couple of friends work there. It sounds interesting, but I think the technologies they cause to be created are things that would have been best left to die on a drawing board somewhere.

grahamjones@#6:

I have read, and recommend, Cathy O’Neil’s “Weapons of Math Destruction”

Queued!

wereatheist@#7:

False negative rates are 5-30%, depending on lighting conditions.

False positive rate is 0.3%.

Ow. That’s really bad.

The British are having real problems with their face recognition systems, too. But they’re being sneaky about it, because they don’t want to have to face up to its failure.

https://www.theregister.co.uk/2018/02/06/home_office_biometrics_custody_image_retention/