Recently I wrote a piece about The AI That Will Kill Us All [stderr] and hypothesized one thing (which my entire argument hung upon) that looks pretty wrong. I hereby officially declare “I am back to the drawing board.”

To summarize briefly: I felt it was unlikely that AI would be able to become dramatically superior to humans quickly. My premise was that simply pointing two AIs at each other and saying “have at it” would not result in a system that could quickly beat a human expert, because the AI would begin by embedding human expertise in its training sets, and would (at best) start off as a human-quality player. I felt that “innovation” is an extension of expertise and creativity and was not a guaranteed output of a feedback loop.

You all were very kind with me, I now realize, because I was basically saying “evolution won’t work” (in so many words). I didn’t realize I was saying that; I thought I was saying “evolution is not very efficient” or something like that. In other words, I managed to miss my own point, and yours.

The tech press has several summaries: [verge]

You might not have noticed, but over the weekend a little coup took place. On Friday night, in front of a crowd of thousands, an AI bot beat a professional human player at Dota 2 – one of the world’s most popular video games. The human champ, the affable Danil “Dendi” Ishutin, threw in the towel after being killed three times, saying he couldn’t beat the unstoppable bot. “It feels a little bit like human,” said Dendi. “But at the same time, it’s something else.”

So far, so good: it’s a closed system, we can define “winning” and “losing” easily, and that means that an AI can try all kinds of stuff, and remember what led it toward “winning” and avoid what led it toward “losing.” I would have expected that an AI playing Dota 2 would have some basic expert rules embedded in it, though.

Nope:

Even more exciting, said OpenAI, was that the AI had taught itself everything it knew. It learned purely by playing successive versions of itself, amassing “lifetimes” of in-game experience over the course of just two weeks.

I guess I could print my words on cupcakes, with frosting, the better to eat them.

My thinking was that complex tasks would require a definition of “success” and some nudges in the right direction, but now I realize that my thinking was teleological: I was assuming that there’s a “direction” to evolution – there’s not: it’s just success/non-success.
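A toy sketch of that "just success/non-success" idea, and emphatically not what OpenAI did: a table-based player learns single-pile Nim (take 1 to 3 stones, whoever takes the last stone wins) purely by playing against itself. The game, the function name `train_self_play`, and every parameter value are assumptions chosen for illustration. No expert rules go in; the only feedback is who won.

```python
import random

def train_self_play(max_pile=10, episodes=100_000, alpha=0.02, epsilon=0.1, seed=0):
    """Learn single-pile Nim from win/loss feedback only (hypothetical toy setup)."""
    rng = random.Random(seed)
    q = {}  # (stones_left, stones_taken) -> estimated outcome for the player moving

    def legal(stones):
        return range(1, min(3, stones) + 1)

    def greedy(stones):
        # Pick the move with the best estimate so far; unseen moves count as 0.
        return max(legal(stones), key=lambda m: q.get((stones, m), 0.0))

    for _ in range(episodes):
        stones = rng.randint(1, max_pile)
        history = []  # moves in order; the two "players" alternate
        while stones > 0:
            if rng.random() < epsilon:
                move = rng.choice(list(legal(stones)))  # try something weird
            else:
                move = greedy(stones)
            history.append((stones, move))
            stones -= move
        # Whoever made the last move took the last stone and won.
        outcome = 1.0
        for key in reversed(history):
            old = q.get(key, 0.0)
            q[key] = old + alpha * (outcome - old)
            outcome = -outcome  # the move before belonged to the opponent
    return {s: greedy(s) for s in range(1, max_pile + 1)}

if __name__ == "__main__":
    for stones, move in train_self_play().items():
        print(f"{stones} stones left: take {move}")
```

The known winning strategy for this game is to leave the opponent a multiple of four stones. Nothing in the code says so; if the learned table ends up agreeing, it got there from wins and losses alone.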

One other thing about this, which makes me realize my views were wrong: there is commentary that the AI’s grasp of Dota 2 is limited, and it doesn’t handle full teams, etc. [ars]

The OpenAI bot can’t play the full game of Dota 2. It can play only one hero, Shadow Fiend, of the game’s 113 playable characters (with two more coming later this year); it can only play against Shadow Fiend; and rather than playing in five-on-five matches, it plays a very narrow subset of the game: one-on-one solo matches.

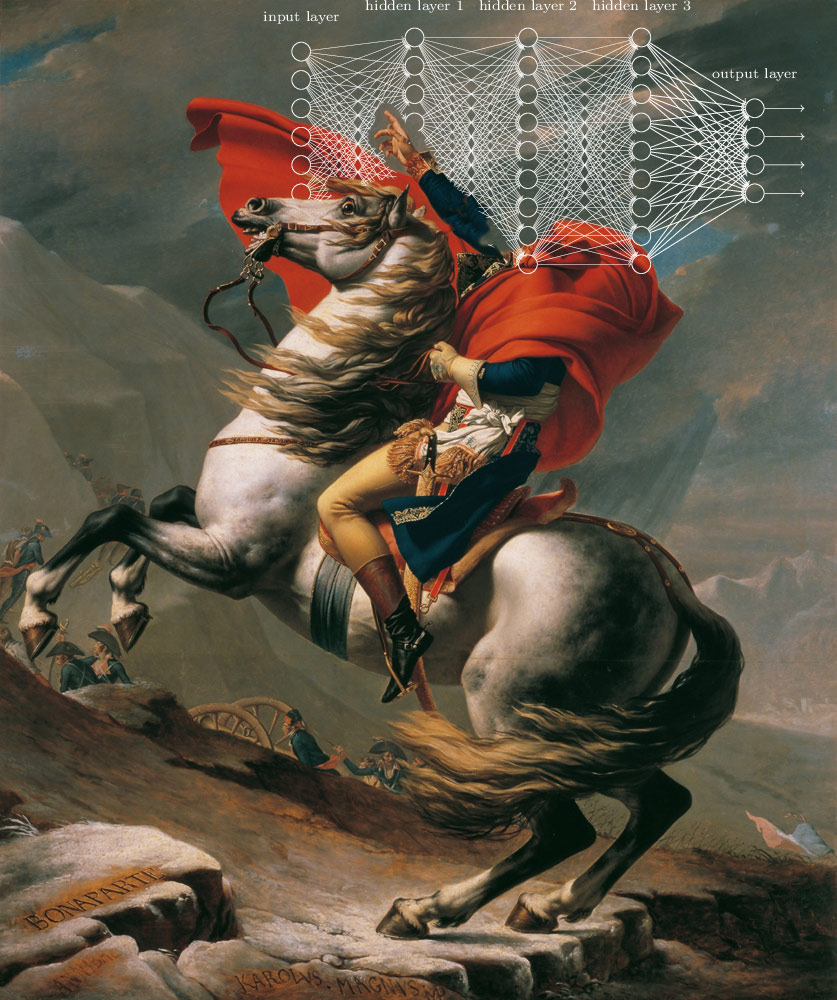

I would expect that the AI will be much much harder to beat in five-on-five because it will coordinate perfectly among its members. When human teams play arena battles, a big piece of the action is how the different players keep each other updated on what they are doing – command and control. My assumption before was that we were looking at an AI Napoleon Bonaparte scenario – a single superior strategist – but what we might see is an opponent that has also evolved perfect command/control. Like the way I use my hand without thinking about my fingers individually. I assume that there is some coordination going on below the level of my consciousness; I assume that Napoleon Bonaparte used his maneuver elements similarly – which is probably why he was freaked out when, at Waterloo, Drouet D’Erlon’s corps deployed in column, and Ney wasted the cavalry in doomed attacks on squares – I’d be just as surprised if my thumb and forefinger started doing the wrong thing while I’m trying to type, right now.

So, it took 2 weeks for the OpenAI gamer to go from zero to one-on-one champion with a single character. It appears that the learning curve is still there, but it’s compressed (as we’d expect from something that experiences time based on its inner clock speed, not a wall clock). Playing five-on-five and using different characters: that’s just a matter of time. Next year, maybe?

Key phrase from the OpenAI tech’s comments in the video: “We had it play lifetimes of Dota against itself.”

I was so, so wrong.

Another thing that caught my attention in the video: one of the Dota 2-playing humans is asked “have you ever played against bots before?” and replies “Are you kidding? The first 2 weeks I played, it was all learning against bots!” So: the human AIs learned by playing against expert systems encapsulated in bots. That’s as I’d predict. I would have expected the OpenAI bot to train against the bots too, though it sounds like they had it evolve its playing style by itself before they had it play against the expert systems.

I just re-watched the video, pausing now and then to look at the AI’s prioritization of threats and fire control. It never mis-allocates attacks. Neither does Dendi, which is why he’s a world-class player. Yipe.

Around 12:40 in the video, Dendi starts varying strategies to see if he can come up with a technique that the AI doesn’t respond to. I saw that, and realized that that’s the same thing any other AI would do in that situation, and immediately started thinking about Niko Tinbergen and his greylag geese, [wikipedia] which I read about as an undergrad ~1982: intelligences develop fixed action patterns and will try one or more of them, and, when they run out, they just do something weird and see how it works. As a pilot friend once told me: “there’s a book of all the things you are supposed to do in certain situations and – if you get into a situation that’s outside of the book – try whatever makes sense to you, and if you survive you get to update the book.”

I am not familiar with the game, and especially with the commentators, but I do hope that the comment at the end of the video above is sarcasm. (Don’t worry. D****d T***p will help us.)

Oh, and . . . SKYNET!!!11!

http://www.schlockmercenary.com/2017-08-16 – “A hive-mind’s distributed processing provides numerous battlefield advantages. At the top of the list: perfect synchronization between combat units. Non-hive sophonts must instead rely upon thousands of hours of squad drills, and more than a little bit of intuition. These may be sufficient to win the day if the hive’s comms are jammed.”

Dondo the supamid-a!

Shiv@#3:

OMG is the “slippery slope” taken as a boss name?

Andrew Dalke@#2:

Yes, for exactly the same reason modern armies try to crash each other’s command and control, and Napoleonic armies shot at cavalry messengers.

Aww, I didn’t see that prior post, otherwise I would have pointed you to work from two years ago where a deep learning AI could beat human beings playing Atari games given only the current score as an input. It’s not the AI-vs-AI scenario of your original, but it’s otherwise a great example of going from a blank slate to beating human experts. The paper is here, and it contains a link to the source code so you can try it yourself.

Many years ago, temporal difference learning, TD(λ) specifically, was used to train a fairly simple neural network to play backgammon at expert level. The net started with no prior knowledge, and played many many times against itself. Not only did it figure out all that human experts knew, it even came up with new, better moves for several rolls. The program was called TD-Gammon, and the year was 1992.

Hj Hornbeck@#6:

Aww, I didn’t see that prior post, otherwise I would have pointed you to work from two years ago where a deep learning AI could beat human beings playing Atari games given only the current score as an input. It’s not the AI-vs-AI scenario of your original, but it’s otherwise a great example of going from a blank slate to beating human experts. The paper is here, and it contains a link to the source code so you can try it yourself.

That is a super cool paper!! I love the way they illustrate something about the complexity of games based on the AI’s learning them at human level or not.

And, oooooh, you said “blank slate”!!!

Thank you, fascinating stuff. I need to spend some time absorbing it.

cvoinescu@#7:

Many years ago, temporal difference learning, TD(λ) specifically, was used to train a fairly simple neural network to play backgammon at expert level. The net started with no prior knowledge, and played many many times against itself. Not only did it figure out all that human experts knew, it even came up with new, better moves for several rolls. The program was called TD-Gammon, and the year was 1992.

I’m not sure 1992 is “many years ago”!

Hm, temporal difference learning seems like a model that’s very similar to how meat AIs learn. [wikipedia] It reminds me of a “hill climbing algorithm” (though, strictly speaking, that is a different technique) – as long as your altitude keeps increasing you are probably doing something close to correct.
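For anyone who wants to poke at the idea, here is a minimal sketch of tabular TD(λ). It is not TD-Gammon's code (that used a neural network and backgammon positions); the five-state random-walk toy problem, the function name `td_lambda_random_walk`, and all parameter values are assumptions picked for illustration. A walker starts in the middle and steps left or right at random; falling off the right edge counts as a win (reward 1), the left edge as a loss (reward 0). Each state's value is nudged toward the estimate of the state that follows it, with eligibility traces spreading the correction back over recently visited states.

```python
import random

def td_lambda_random_walk(episodes=20_000, n_states=5, alpha=0.01, lam=0.5, seed=0):
    """Estimate the chance of exiting on the right from each state (toy problem)."""
    rng = random.Random(seed)
    values = [0.5] * n_states        # blank-slate guess for every state
    for _ in range(episodes):
        traces = [0.0] * n_states    # eligibility traces, reset each episode
        state = n_states // 2        # always start in the middle
        while True:
            nxt = state + rng.choice((-1, 1))
            if nxt < 0:              # fell off the left edge: a loss
                reward, next_value, done = 0.0, 0.0, True
            elif nxt >= n_states:    # fell off the right edge: a win
                reward, next_value, done = 1.0, 0.0, True
            else:
                reward, next_value, done = 0.0, values[nxt], False
            # Temporal difference: how wrong was the current guess, judged
            # against the reward plus the guess for the next state?
            td_error = reward + next_value - values[state]
            traces[state] += 1.0
            for s in range(n_states):
                values[s] += alpha * td_error * traces[s]
                traces[s] *= lam     # older visits get less of the credit
            if done:
                break
            state = nxt
    return values

if __name__ == "__main__":
    print([round(v, 2) for v in td_lambda_random_walk()])
```

For this walk the true answers are 1/6, 2/6, 3/6, 4/6 and 5/6, so the printed estimates can be checked by eye. Setting `lam=0` gives plain one-step TD, and values near 1 behave more like waiting for the final result before assigning credit.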