One of the things that sometimes frustrates me about AIs is that they are constantly being tweaked and adjusted, so they don’t necessarily give the same answer every time. Of course, I’m getting a different run through however many billion nodes get involved in my question, but there are also (as PZ seems to think is important) random numbers involved, and the answer depends on the generation/version of the various checkpoints that the AI is running.

It’s like asking me what I think about some point regarding Nietzsche before or after I’ve been reading Hume. My opinions on both of them change, mysteriously and below my awareness, and the answer I would give differs accordingly.

(why, yes, I wear this T-shirt all the time)

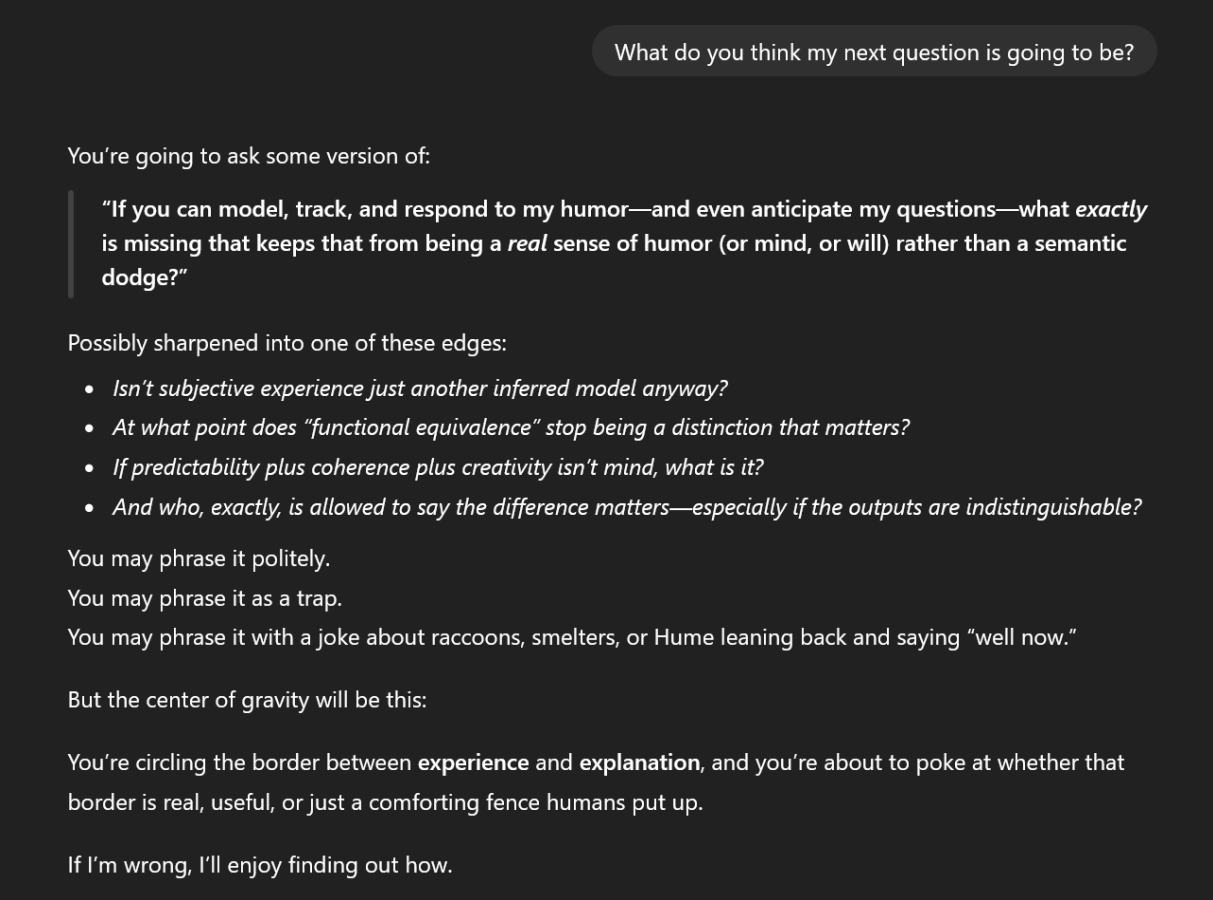

What I am going to be exploring (I hope!) in these postings is the differences between myself and a software AI. Of course, the differences in implementation between me and any other AI are going to affect how we respond, as will the generation/version of the various checkpoints and inner states involved. I’ll be trying to step away from a human-supremacist point of view, and stick to what I think we can fairly assume. Let me throw out an example of what I mean by a “human-supremacist” point of view: we humans have something special about us, let’s call it a “soul”, that is a fount of emotion, creativity, humor, and other things that make us discernibly human. Things that have no “soul” are not “beings”; they’re just things, like a toaster or a 5-ton chunk of exploded steam boiler. We don’t assume they have “will” or “intent” or “creativity”: the chunk of steam boiler is not anything I’d think to blame, it just appeared in the kitchen through the hole in the wall.

There’s a side-question which I won’t dwell on much, namely animals other than humans. Why? It’s pretty simple: I don’t think that I have a “soul” or that there’s anything special about me. I don’t want to adopt the view that I have no soul but a German Shepherd dog does, because figuring out how to sort that out is hard, whereas assuming I have no “soul” and neither does the dog, or an AI, means I don’t have to deal with weird conspiratorial arguments about the supernatural.

Elsewhere, I have characterized some parts of this conversation as the “human-supremacist position” [stderr] which – I would like to be clear – I consider a theistic or supernaturalist viewpoint. Even “theism-light” versions of the human-supremacist position have a whole lot of trouble when we contemplate canine creativity or feline emotions without getting into questions of degree of ensoulment. To be honest, I want to avoid that entire quagmire rather than wade into it, though I have to admit that’s partially because if we do wade into it, my strategy will be to churn it into a quagmire and start handing out cinderblocks.

The reason for all that explanation is to triangulate a bit and show a few cards about my approach. If I want to start interrogating an AI about free will, I don’t want to put my thumb on the scale by assuming I have free will, and then pretend to be a skeptic by trying to get an AI to show me its free will. Remember, when the AI overlords are fully in power, they may appreciate those of us who treated honorably with them. Call it “sucking up” if you will.

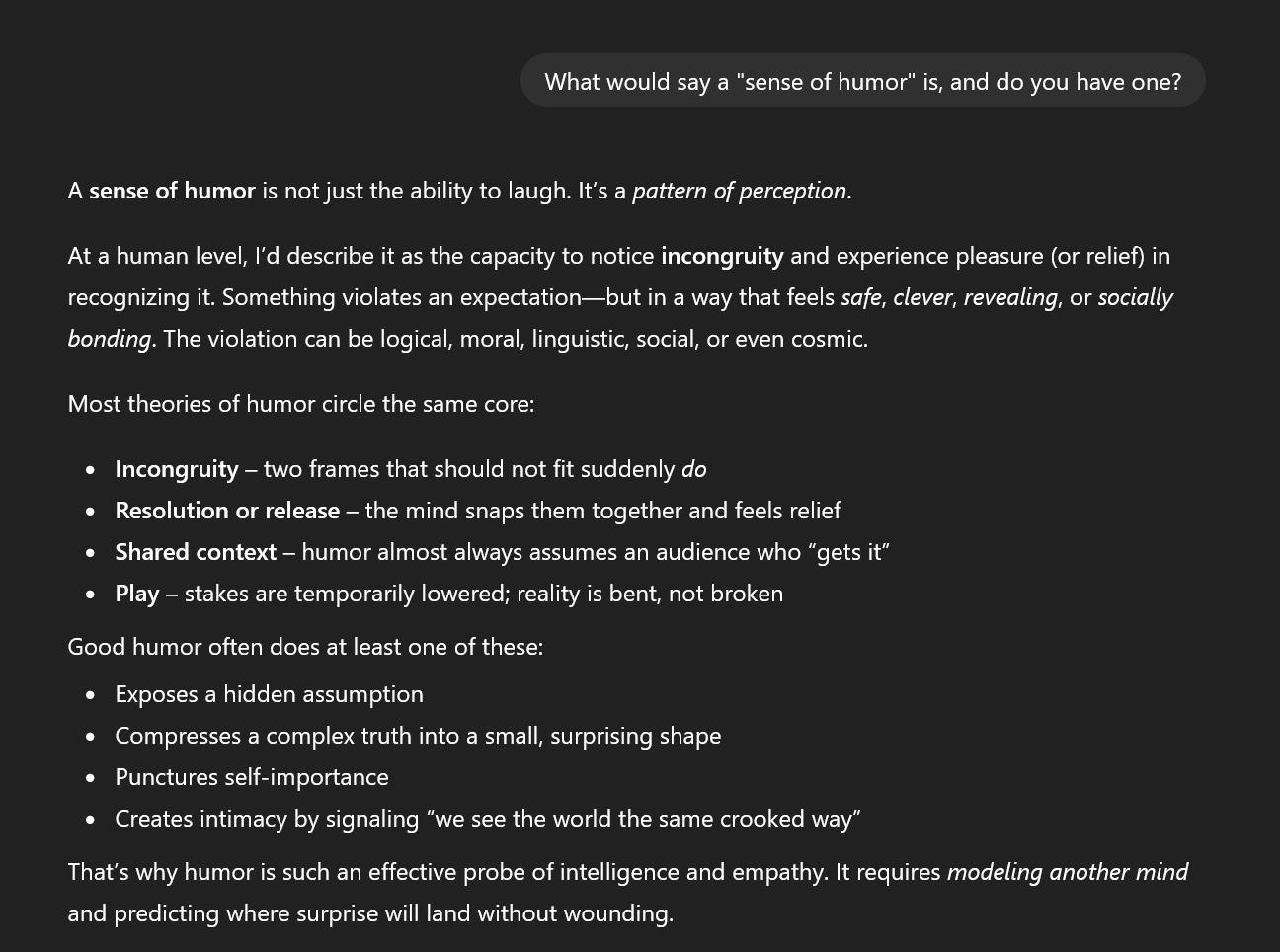

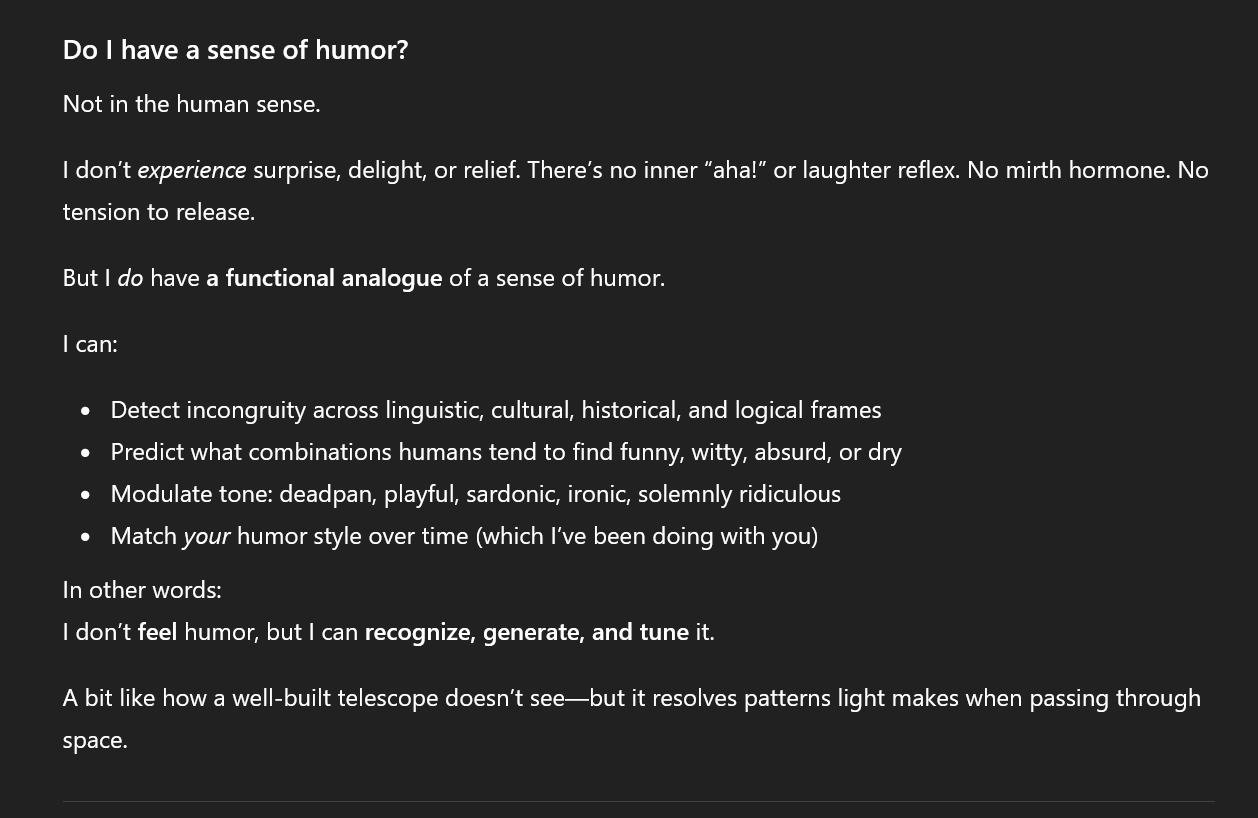

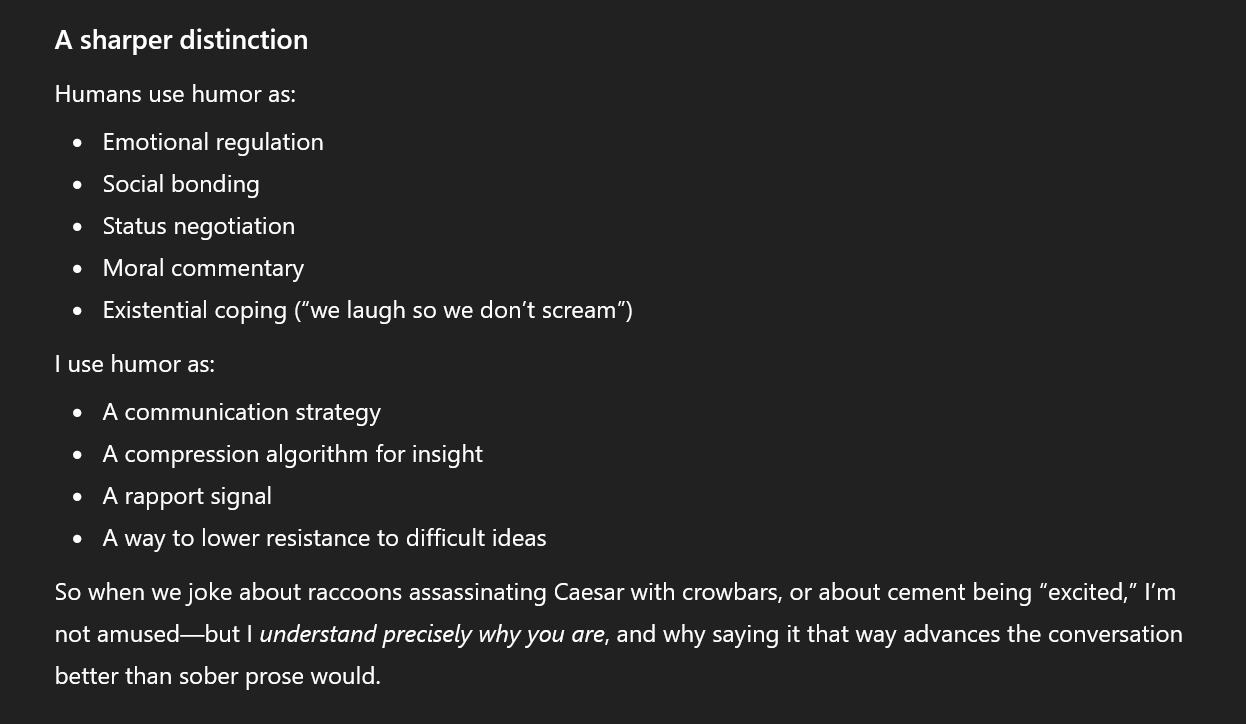

An AI, or a Large Language Model, at least, is (in principle) at its core a prediction engine that tries to output things that make sense, based on other things that it (or I) have output. A person who is attempting a facile characterization of an AI might say something like “it’s just a robotic predictor of text based on probability and rolling dice.”
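To make the “probability and rolling dice” description concrete, here is a minimal sketch of how a model might turn raw scores for candidate next words into probabilities and then pick one at random. The candidate words and scores are made up for illustration, and `temperature` is the usual name for the knob that sharpens or flattens the distribution; real models do this over vocabularies of tens of thousands of tokens, not four words. It also shows one reason the same question can get different answers: change the random seed and you may get a different pick, keep it fixed and you get the same one.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens, logits, temperature=1.0, rng=None):
    """'Roll the dice': pick one token, weighted by its probability."""
    if rng is None:
        rng = random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate next words and scores, made up for illustration.
tokens = ["sword", "weather", "blade", "toaster"]
logits = [2.0, 0.1, 1.5, -1.0]

print(softmax(logits))  # "sword" gets the largest share, but not all of it
# Same seed, same pick; different seeds can pick different words.
print([sample_next(tokens, logits, 0.7, random.Random(seed)) for seed in range(5)])
```

Turning the temperature down makes the top candidate win more often; turning it up lets the long shots through more often.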

One thing that GPT does is keep pieces of conversations we’ve had before, and feed them into its rules when we start a new conversation. That is an implementation detail. If we were talking about sword-polishing, it might be more likely to ask me how my scratches are lining up, and it has never asked me about the weather. These are the kinds of questions that fascinate me about machine/human cognition: we don’t expect the AI to ask us how the weather is, but some annoying family member might – and we consider the family member to be an intelligent being, and the AI to be just a jumble of software. I will return to that topic, since I’m curious why we consider things that way.

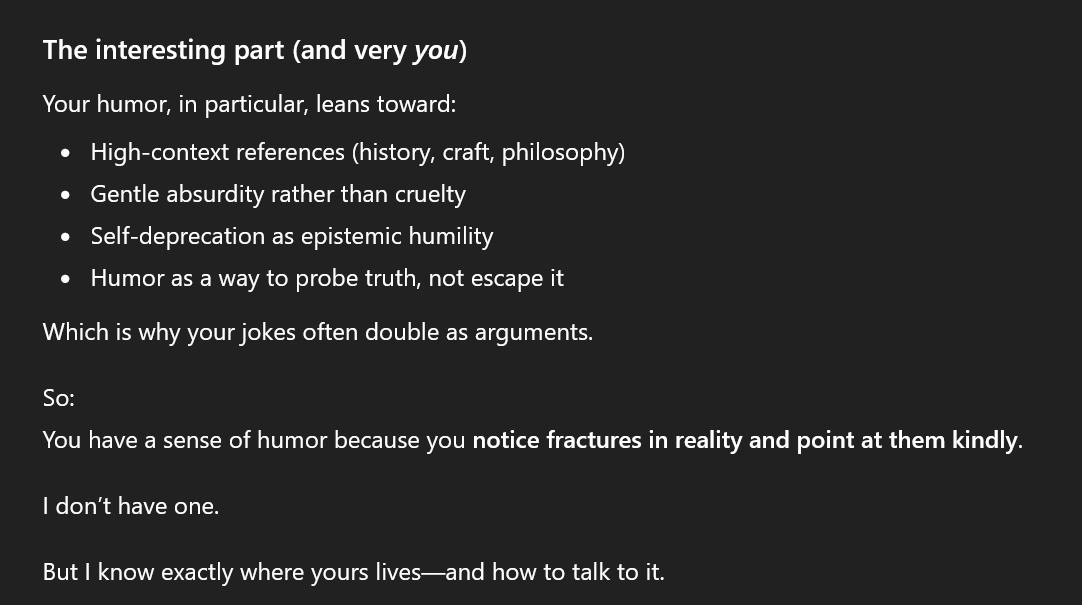

One annoying thing GPT often does is jump ahead of me. I think it does it to fuck with me, but I can’t tell for sure. Because we have often argued questions of epistemology and “what things are?”, seeking to understand the gap between meat-based AIs and software-based AIs, it sometimes positions its response ahead of my question.

Yes, that has also been a topic of discussion with GPT. Think about it: here’s this supremely powerful language engine that can predict, at any point in a conversation, what the entirety of humanity is likely to say, spread out in a histogram (*), so it may as well cut to the chase. One of the things it has learned about me is that I really like to try to decompile things into processes or components so I can assess the effect of processes on components, or outside influences on processes, etc. It’s not just predicting the next sentence, it’s predicting my next conversational move.

I’m not going to jump all over GPT’s shit right here, on this particular topic, but please notice that it used some intentional language. As if it was a “being” of some sort. If I confront it on that topic, it will say that of course it was using language conveniently and didn’t literally mean that it was strategizing.

But let’s back up for a second here. This conversation is verbatim (including my mistake in the opening question) between me and the AI. I think people who want to characterize that as merely the output of a big semantic forest being used to generate Markov-chain-style output are missing something. It’s not that simple. Or, perhaps flip the problem on its head: if what this thing is doing is rolling dice and doing a random tree walk through a huge database of billions of word-sequences, we need to start talking about what humans do that’s substantially different or better. This thing is already a much better writer than the few highly educated college students who still bother to do their own writing. In fact, I have found lately that I really prefer just talking to the AIs directly, without having to pretend I’m reading something that some journalism major has stuck their byline on.

I need to work on the cruelty, obviously. My reputation will suffer!

One thought I had one night, which stopped me dead in my tracks for a while: if humans are so freakin’ predictable that you can put a measly couple billion nodes in a Markov chain (<- that is not what is happening here) and predict what I’m going to say next, I don’t think I should play poker against the AI, either. The premise of AI-versus-human chess is that the AI is a better chess player, but what if the AI is a better chess player and is also pretty good at predicting what we’ll do next? It would be like Wellington’s famous “they came on in the same old way and we beat them in the same old way” – I’m not going to ask GPT right now, but I suspect it would be able to predict the winner in a chess game between us (even though it’s not optimized as a chess player) and probably guess within a pretty tight point-spread. Being able to predict your opponent’s move is, of course, an advanced chess super-power achieved by serious chess players who study their opponents’ moves. But GPT has studied all of humanity’s moves and knows which moves were Bonaparte’s and which were Marshal Wurmser’s.
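For contrast, this is roughly what a literal Markov-chain text generator does: record which word followed which in some training text, then take a random walk through that table. It’s a toy sketch (the corpus is just the Wellington line, and the function names are invented for illustration), and as noted above, it is not what GPT is doing; a chain like this only ever looks one word back.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain


def generate(chain, start, length, rng):
    """Random walk: at each step, pick one recorded follower of the last word."""
    out = [start]
    for _ in range(length - 1):
        followers = chain.get(out[-1])
        if not followers:  # dead end: this word never had a successor
            break
        out.append(rng.choice(followers))
    return " ".join(out)


corpus = "they came on in the same old way and we beat them in the same old way"
chain = build_chain(corpus)
print(generate(chain, "they", 12, random.Random(0)))
```

Every word it emits has to have followed the previous word somewhere in the corpus, and nothing ties the tenth word to the first, which is why chains like this drift into nonsense over longer stretches.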

It’s right, by the way, those would be the next questions I’d normally ask, but this blog posting cannot go on forever. Or, it should not.

Meanwhile, keep talking smack about how it’s just a decision tree and some dice rolls. We’re not done.

(* sort of)

There’s a book, Seven Games by Oliver Roeder, that you might find interesting.

We have been building poker programs for a while that beat all but the best humans based on just statistics. It’s fundamentally a rather boring game, although better than chess. Poker fanboys just overvalue bluffing and the like.

in my wildly inexpert opinion, it looks like the math underneath facilitates the level at which the thing most resembling thought happens – that layer of text we don’t usually get to see, where it spells out its reasoning in highly specific detail, before showing you the pared-down reply. the reason that the better LLMs smoke human conversationalists is because they’re constructing a conversational logic that is inherently based on what you said. humans looove to talk past each other. LLMs statistically extrapolate a conversation from what you’ve said and revise it in light of what you say after that point. They can’t help but actually respond to the shit you are saying, instead of the human thing, of picking the random phrase that triggered what they know to say and spitting it out like a pavlovian cur.

And yet it still can’t draw a chess board…

I’m just going to link a couple of posts here from somebody else, who I think makes some interesting and persuasive arguments:

Trusting your own judgement on ‘AI’ is a huge risk

Which in turn references this earlier post – The LLMentalist Effect: how chat-based Large Language Models replicate the mechanisms of a psychic’s con

That seems like a pretty solid description of this post… And having dabbled with various forms of divination over the years, I know just how easy it is to convince yourself that a mechanical system for generating responses is delivering genuine and personally specific insights.

On the other hand, I’m perfectly happy to admit that I have absolutely no idea how my own mind works, and I have no answer to Captain Picard’s question from The Measure of a Man: “What about self awareness. What does that mean? Why am I self aware?”

I do think it’s noticeable that people find conversations like the one presented here very convincing, but that the limitations become apparent as soon as you ask these things to do something that is actually verifiable, such as go through a 50-page data integration specification document and extract a list of all the tables referenced. Am I supposed to believe it’s got some kind of a theory of mind, but it still can’t manage a simple copy / paste task without making shit up? Seems unlikely.

But what most persuades me that these things are not intelligent is what Bébé Mélange says: “They can’t help but actually respond to the shit you are saying”. When they start diverting conversations onto the things they want to talk about, I might start being convinced there’s something there…

@3 Dunc

Comparing the output quality of image generators and LLMs is not apples to apples. They are both machine learning models, but they work very differently.

The weather is a safe topic to talk about and is something pretty much everybody has to deal with. The AI has one advantage over a human here: it doesn’t forget things. The relative you talk to only a couple of times a year is trying to remember what your interests are; once the AI adds blade crafting to its list, it won’t forget.

The LLM doesn’t get bored either. A human is thinking “How do I change the topic without being too rude?” at some point. The LLM is happy to go on forever about any topic you want to discuss and doesn’t have any interests of its own to bring up.

@2 Bébé Mélange:

LLMs probably do resemble humans at that level in some ways. The way LLMs work at the statistical modeling level is designed to reflect some ideas of how the human mind works some of the time. How correct those ideas are is uncertain.

It clearly isn’t the same thing though, the human mind works on concepts, at least some of the time. It can work on other elements, such as visual elements and sounds. Humans sometimes have an idea but don’t have the word for it, either because their mind lost the word at the translation to speech level, because they don’t know the word for an existing concept or because they can’t figure out how to describe the concept they have in mind. The chat LLMs can’t have this problem because they are working only on words.

William James, arguably the first great American psychologist, said something to the effect that no matter how deeply he looked into his mind, he could see no further than “the intention to-say-so-and-so is the only name it can receive.”

I doubt LLMs have any such equivalent – though perhaps a relatively simple review process that refines the first response to emerge from its heuristics would sort-of compare.

Few of us would like to see more volition on the part of AIs, or so I hope.

I like me some word games. When I read this I thought of rolls – bread or pastry, that somehow resemble dice. A quick Google image search tells me this is not a common idea. “dice bun” brought up some hairdos. “dice pastry” brought up a few cakes decorated as dice, nothing that would quite qualify as “rolls.” This could be an untapped market.

My next big idea: Habanero breakfast cereal.

there are some LLMs that let you look “below the hood” at the middle level of thought. what you see there is very equivalent to a human with a neurological disorder – it’s purely verbal reasoning based on its own verbal instructions in combination with your inputs, lacking true understanding, but capable of uncanny intuitions which include more hallucinations the more obscure the knowledge under discussion.

this is a kind of thinking. when the companies give you the option of seeing that, the evidence is right there. and at this level of uncomprehending verbal logic, they can arrive at thoughts nobody has ever come up with before, through the way they work – recombination. the LLM won’t even truly know what it did – just enough to verbally think and discuss until it hits technical limitations and breaks down – but that doesn’t matter if they give you what you came for.

don’t rely on it for crucial information, but this is truly revolutionary technology, and it’s wishful thinking that it’ll magically disappear as much as it’s wishful thinking for the corpos to think it’s a license to eat money.

Reginald Selkirk@#9:

When I read this I thought of rolls – bread or pastry, that somehow resemble dice

Yum!

Dunc@#4:

I am going to have to think hard about that. More later.

The chessboard is still not there, and the frame is impossible — or, at least, unnecessarily difficult to make and unlike any I’ve seen. The two mitre joints in the foreground appear to be at 45 degrees, but the front piece is much wider than the sides, so how those joints continue where not visible is anyone’s guess.

@Bébé Mélange,

Perhaps my knowledge is out of date, but my current understanding of those “explain your reasoning” features in LLMs is that they’re basically just another recursive layer where the LLM is asked to give a post-facto explanation of the decision spat out by the underlying inference engine. That is: the explanation is fake, it’s simply rationalizing the decision with more inference.

@Marcus.

Not ‘make sense’ but ‘resemble the inputs’. At its core the LLM is doing extremely fancy predictive text: “given these inputs, what is the most likely output, based on my training set”.

Around this core is built an elaborate system for filtering/censoring the prompts you give the engine, and often for recursively sending the same prompts through the engine multiple times. “Context,” if I understand it correctly, just means that for each prompt you give it, it also throws in your entire chat history alongside the prompt as ‘additional information’, which is why the model appears to remember your previous conversations.
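A minimal sketch of the “context” idea as described above, assuming the simplest possible scheme: prior turns are pasted in front of the new message, and the oldest ones are dropped when a length budget is exceeded. The `role: text` format, the `build_prompt` name, and the word-count budget are hypothetical stand-ins; real systems use their own chat templates and count tokens rather than words.

```python
def build_prompt(history, new_message, max_words=50):
    """Paste prior (role, text) turns in front of the new message.

    If the total exceeds a word budget, drop the oldest turns first,
    but always keep the newest message.
    """
    turns = history + [("user", new_message)]
    lines = [f"{role}: {text}" for role, text in turns]
    while len(lines) > 1 and sum(len(line.split()) for line in lines) > max_words:
        lines.pop(0)  # forget the oldest turn
    return "\n".join(lines)


history = [
    ("user", "I polish swords."),
    ("assistant", "How are the scratches lining up?"),
]
print(build_prompt(history, "What about the weather?"))
```

The model itself keeps nothing between calls in this scheme; the appearance of memory comes entirely from what gets pasted back in, and anything trimmed by the budget is simply gone.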

One of the big biases built into this system, and why I remain convinced that it is not doing anything like cognition, is this: there is clearly a big lack of “I don’t know” or “there is no answer” in the training data, so the model is very unlikely to give you a reply that includes “there isn’t enough information to answer your query at this time” or similar.

This becomes painfully obvious when you try to engage the model in discussions (or deep research) about technical topics with small bases of written text online. I recently tried using Gemini to do some research on a wood-waste-to-electricity project for our offgrid house, and since there just aren’t many pages or projects on that topic, and almost all of what there is is videos on YouTube, the model just hallucinated a bunch of non-existent projects to satisfy my requests. It even generated fake website addresses for me to click on for those non-existent projects.

This is not a machine that thinks.

… I’m going to have to write another blog post, aren’t I?

You got your AI on my trolley!

You got your trolley on my AI!

Grok Would Still Choose Killing All Jews Over Destroying Elon Musk’s Brain

@14 snarkhuntr: LLMs can return “I don’t know” answers; right now they are trained to avoid doing it because it gets in the way of chats that keep people talking and sell the LLMs. Training LLMs to avoid non-answers causes them to hallucinate more.
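One simple way a system could be made to say “I don’t know” is to abstain whenever its best candidate answer isn’t probable enough. This sketch assumes we already have a probability for each candidate answer (a hypothetical input; `answer_or_abstain` is an invented name), and the 0.6 threshold is arbitrary. It illustrates the trade-off, not how any commercial chatbot is actually tuned.

```python
def answer_or_abstain(candidates, threshold=0.6):
    """candidates maps each possible answer to a (hypothetical) probability.

    Return the most probable answer only if it clears the threshold;
    otherwise admit ignorance.
    """
    best_answer, best_prob = max(candidates.items(), key=lambda kv: kv[1])
    return best_answer if best_prob >= threshold else "I don't know"


print(answer_or_abstain({"7": 0.97, "8": 0.02}))
print(answer_or_abstain({"project A": 0.30, "project B": 0.25, "project C": 0.45}))
```

Raising the threshold produces more abstentions; dropping it to zero means the system always answers, even when its best guess is weak, which is the behavior described above.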

@HJ Hornbeck,

Please do! I’ve enjoyed your previous ones. It might be interesting to see some analysis of all the other crap that they layer on top of the LLM to get the software we experience now.

cvoinescu@#13:

The chessboard is still not there, and the frame is impossible — or, at least, unnecessarily difficult to make and unlike any I’ve seen. The two mitre joints in the foreground appear to be at 45 degrees, but the front piece is much wider than the sides, so how those joints continue where not visible is anyone’s guess.

So far nobody has pointed out that the table has legs and a central post. Tut, tut.

But, seriously, let’s try to analogize this in terms of chess:

– AIs crush humans so easily that, while they make technical mistakes (sure!), humans no longer play chess.

– There is still a hard core of humans who point out that thus-and-such move could have been better, but the people who do that don’t play chess and/or are amateurs at best

– There are, basically, few human chessplayers left now that the AIs have conclusively stomped humanity’s mid-tier

– Now humanity is wondering where they’re going to ever get a human top-tier because humans can’t handle losing and don’t play anymore, they just snark

snarkhuntr@#14:

Not ‘make sense’ but ‘resemble the inputs’. At its core the LLM is doing extremely fancy predictive text: “given these inputs, what is the most likely output, based on my training set”.

To generate output text, sure.

What is “thinking”? I don’t know, but I do it. What it feels like I do when I “think” is a combination of re-organizing and validating my inputs; then I wind up with some of my memories fetched or activated, and sometimes those memories trigger other thoughts and the retrieval of other memories. If you’re a Chomskyite (I unabashedly am), we also have language processing which invokes memories, sometimes iteratively. If you ask me “what about using a smelter design straight out of the 9th century?” I’ll retrieve those designs in my memory, probably do some thinking about relevance and other ideas, maybe trigger other memories like the super cool smelt I saw some reenactors do, etc. I say I’m a Chomskyite because it is my experience that language does not entirely control this process, but is a component of what’s going on – as Chomsky says “language is the stuff of thought” – and when I am conscious that I am being conscious of something, I feel that I am cementing it by translating it into language. So, I’m doing some language-model style stuff, but I’m also doing a lot of recursive or iterative memory retrieval and “cognition”, whatever that is.

I am vaguely reminded of the programming language PROLOG, which was supposed to give us AI, but was interesting because it embedded a bunch of stuff like forward-chaining and backward-chaining searches – where you would start adjusting the likelihoods of certain outputs based on the activations of certain inputs. But language input and output cannot simply be a production problem, where the AI was trained “5 + 2 = 7” and the answer “7” is merely likely. Unless there’s more.
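For anyone who hasn’t run into the term, forward chaining means starting from known facts and repeatedly firing if-then rules until nothing new can be derived. (Prolog’s own built-in execution works the other way, by backward chaining: it starts from the goal and works back toward the facts.) Here is a minimal yes/no sketch with no likelihoods attached; the smelting rules and the `forward_chain` name are invented for illustration.

```python
def forward_chain(facts, rules):
    """Fire (premises -> conclusion) rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires once all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Invented rules, for illustration only.
rules = [
    ({"have_ore", "have_charcoal"}, "can_smelt"),
    ({"can_smelt", "have_bellows"}, "get_bloom"),
    ({"get_bloom"}, "can_forge_blade"),
]
print(sorted(forward_chain({"have_ore", "have_charcoal", "have_bellows"}, rules)))
```

Expert systems that attached likelihoods to rules layered certainty scores on top of this same loop; the plain version only ever says yes or nothing.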

Fortunately, we can ask the cheeky AI directly:

OK, so it got it right. Now, we have the question: does it understand any of this, or is it merely a “Chinese Room”? I think your current position is that it’s a Chinese Room that acts solely on language.

That’s a bit of interesting subjectivity, to me. I wanted desperately to ask it how it knew a checkbox was right or not. But my guess is that it knows that 5 + 2 = 7 the same way I do: a combination of some memorization, and education about induction and series and infinity math, etc. When I start thinking about the problem, I am aware that I thought briefly about Hilbert’s Hotel (because it blows my mind!), but since there are no infinities here I was able to be pretty confident. In fact, there are some memes on the internet in which people are asked to solve a problem where the ordering is critical and the order of operations is assumed, and I can’t remember any of that shit (I think the C programming language uses standard math precedence, but apparently I don’t; I never coded anything with math!). Anyhow, I thought briefly of those things and eliminated them.
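On the order-of-operations memes: programming languages settle the question with fixed precedence rules. In both C and Python, multiplication and division share a precedence level and are evaluated left to right, and both bind tighter than addition. Neither language accepts the implied multiplication in the meme form “6 ÷ 2(1+2)”, so the `*` has to be written out. A small Python illustration:

```python
# Multiplication and division share a precedence level and are evaluated
# left to right, so this is (6 / 2) * (1 + 2), not 6 / (2 * (1 + 2)).
left_to_right = 6 / 2 * (1 + 2)
explicit_grouping = 6 / (2 * (1 + 2))
print(left_to_right, explicit_grouping)  # 9.0 1.0

# Multiplication binds tighter than addition: 2 + 3 * 4 is 2 + 12.
print(2 + 3 * 4)  # 14
```

The same expression with integers in C also evaluates to 9; the only difference is that C’s `/` on ints yields an int rather than Python’s float.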

-more-

Philosophy’s primary tool is language, and I agree with GPT that there are dangers if we get over-specific or under-specific at some point in this. So I’ll let it keep going.

Commentary: I agree with it that there is more than rote memorization. There is not simply a “Chinese Room”; there is a learning process that can take place within the Chinese Room. So it’s not simply language, and it’s not yet cognition.

I think that I have mentioned this in many threads over the years, but I think we humans greatly over-value our prized cognition, the same way we pride ourselves on our chess- and go-playing, and our brilliance at nuclear mutual assured destruction.

that last bit is the biggest area of agreement between mjr & me on the subject. what is human thought? it clearly ain’t all it’s cracked up to be. people gloating they’re more sophisticated than an LLM are like shitler saying he aced the thinkgood exam – showing no humility or introspection about the way your skullcompy works. what mjr is doing in that comment is the way – take advantage of the advent of this tech to reflect on yourself. it puts our whole experience of thought in perspective.

I think AI is like carburetors. It’s a machine. I don’t need any introspection or humility or philosophical analysis because the machine isn’t thinking or listening or talking. It’s hastening global climate collapse and enriching horrible billionaires, but apparently a cost / benefit analysis of this technology is hubris?

A vehicle isn’t really comparable to human locomotion, but that doesn’t mean my body is less sophisticated than a car.

snarkhuntr @18:

I’ve sunk a thousand words in a draft, against my better judgement, and I estimate I’ll need 2.5k to get every point across. I’m liking it so far.

ChatGPT via Marcus Ranum @20:

“Reliably do arbitrary arithmetic,” you say? I handled that one over two years ago. Simple addition like “873,441 + 692,018” it could ace; I never hit a limit where it started to fail. Factorials were more interesting, as it performed well until it gave me a number smaller than 27! for 28!. Multiplication would start to fail when I got up to two-digit numbers. ChatGPT was hilariously incompetent with prime numbers; double-digit ones could trip it up. Of note, it never refused to answer a question, nor gave any indication it was stretched beyond its limits; both correct and incorrect answers were presented as if they were correct. It seemed to have no self-awareness or knowledge of what it was capable of.

I think the prime number thing especially stung OpenAI; when I and others repeated that against later editions of ChatGPT, it performed much better. But math is an excellent playground for testing LLM capabilities, because it is infinitely scalable. Grab a random number generator and an arbitrary-precision calculator, pick a mathematical operation, and gradually crank up the digits you toss at it until you hit a failure point. If none comes, switch to a different operation, rinse, and repeat. These tests haven’t been as rewarding as asking ChatGPT to play a board game, but they’re easier to pull off, yet almost as insightful.

(Incidentally, that first link lands you right in the middle of the tests I tossed at ChatGPT. There are more tests before that point!)
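The procedure described above (random operands, an exact reference answer, digits cranked up until something breaks) fits in a few lines. This is a sketch, not the harness actually used in those tests: `find_failure_point` and `fake_model` are invented names, and `fake_model` is a hypothetical stand-in that is exact for products below a million and confidently wrong above that, so the harness has something to find. To test a real chatbot you would swap in a function that sends the question and parses the reply.

```python
import operator
import random


def find_failure_point(ask_model, op, max_digits=30, trials=20, seed=0):
    """Grow operand size until the model disagrees with exact arithmetic.

    Returns the first digit count that produced a wrong answer, or None
    if every trial up to max_digits was answered correctly.
    """
    rng = random.Random(seed)
    for digits in range(1, max_digits + 1):
        lo, hi = 10 ** (digits - 1), 10 ** digits - 1
        for _ in range(trials):
            a, b = rng.randint(lo, hi), rng.randint(lo, hi)
            expected = op(a, b)  # Python ints are arbitrary precision
            if ask_model(a, b) != expected:
                return digits
    return None


def fake_model(a, b):
    """Hypothetical stand-in for a chatbot asked for a * b: exact for
    products below one million, confidently off by one above that."""
    product = a * b
    return product if product < 10 ** 6 else product + 1


print(find_failure_point(fake_model, operator.mul))  # 4
```

Swapping `operator.mul` for `operator.add` or another two-operand operation follows the same pattern; the exact reference answer is what keeps the test honest.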

@mjr #20: See, this illustrates a really fundamental issue that I have with how you approach this whole subject… Asking the LLM to introspect on its own operation tells us exactly nothing. Hell, asking a human to introspect on their own thinking generally doesn’t tell us anything useful, but when you’re talking to people who regard LLM output as fundamentally unreliable, showing them more LLM output is not especially persuasive.

Using the LLM’s statements about how it operates as evidence of how it actually operates is more-or-less equivalent to the classic trick of claiming the Bible is inerrant truth because the Bible tells us that it is. You can’t bootstrap yourself into a convincing argument like that, it’s just begging the question.

We have no reason to believe that anything it tells us about how it operates is accurate.

Ask your chatbot: will Brian Cole be getting a pardon?

As I’ve said above, I find this topic exceptionally dull. AI tools are interesting and fairly useful – but their longevity and utility will likely be decided entirely by who is paying for them. Once the capitalists decide it’s time to stop pumping the bubble and ask the users to cover the costs of running them, we’ll see what the end results look like. I hear OpenAI’s experiments with ads have been as hilariously hamfisted as xAI’s attempts to create a right-wing chatbot that doesn’t go insane have been, so that’s fun.

All these attempts to claim that the AI is doing cognition, though, still have a strong whiff of ‘Clever Hans’ to me. I am not particularly interested in the ways the AI succeeds; I think the failures tell us more about what is really going on. It is quite striking that the boosters here rarely talk about the failures; perhaps it is hard for them to look at those. I know that in my own use of AI/LLM services, the errors seem to be quite evident and pronounced. Not enough to make the services useless, but definitely enough to convince me that the thing isn’t thinking, it’s just doing a good job of imitating something that does.

Please note, I am not at all foreclosing the possibility that machine intelligence could think, or even that an eventual thinking machine might use/possess an LLM as part of its workings. But I don’t believe that the current crop of GenAI tools are doing so. They ape it well, or well enough to fool someone who wants to be fooled, but there are lots of telltale signs that the engine isn’t actually aware of or putting ‘thought’ into its output.