A couple of months ago, I wrote that I don’t think AI is going to threaten William Shakespeare’s high seat, but it might obliterate John Ringo by making him just another mediocrity in a sea of mediocrities. I asked my friend Ron, who’s up on all the current APIs and scripting languages, and he did a parameterized version of the original script that produced the computer security book by “R. J. Wallace” [stderr].

As I observed before:

There would still be an active ecosystem for books at the high end and the low end, so John Ringo does not need to hang up his writing gear, yet.

I’ve already been hearing rumblings that it’s getting increasingly difficult to list an AI-authored book on many book sites, and art sites won’t take AI art either. In that previous posting I outlined how the security book was written: give GPT a topic and ask it for a concept list; give it the concept list and ask it to break it into chapters, with a one-sentence description of the focus of each chapter; then feed those descriptions back, iteratively, asking for a topic list and an opening paragraph for each chapter; lastly, ask it to develop the concepts in the descriptions and flesh them out, etc. I had to fudge a few things here and there in my narrative because Ron’s first script was not parameterized, so doing successive runs would probably not give a fair speed baseline. Now, apparently, Ron’s script is parameterized and can read a file containing some preferred names (if you want the villain to be Richard Dawkins, he’ll turn up as the baddie in every volume), and if you want the hero to have the same appearance and name, and the love interest to run across multiple volumes, that’s an option too. Yes, I’m describing a John Ringo book generator.
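For the curious, here is a minimal Python sketch of that four-step pipeline. It is not Ron’s script: `ask`, `build_book`, `style_preamble`, `fake_ask`, and all the prompt wording are hypothetical, and `ask` stands in for whatever LLM API call a real version would make. The names file is modeled, as an assumption, as a simple role-to-name mapping that gets prepended to every prompt so the same villain and love interest keep turning up.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical interface: any function that sends a prompt to a language
# model and returns its reply as text. A real version would call an LLM API.
Ask = Callable[[str], str]


def style_preamble(names: Optional[Dict[str, str]]) -> str:
    """Turn a role -> name mapping (e.g. read from a names file) into a prompt prefix."""
    if not names:
        return ""
    pairs = ", ".join(f"the {role} is named {name}" for role, name in names.items())
    return f"Use these names consistently: {pairs}.\n"


def build_book(topic: str, ask: Ask, names: Optional[Dict[str, str]] = None) -> List[str]:
    """Hypothetical four-step pipeline; returns one string per chapter."""
    pre = style_preamble(names)
    # Step 1: topic -> concept list.
    concepts = ask(f"{pre}List the key concepts for a book about {topic}, one per line.")
    # Step 2: concept list -> chapters, one line each with a one-sentence focus.
    outline = ask(
        f"{pre}Break these concepts into chapters. Give one chapter per line as "
        f"'Title: one-sentence description of its focus'.\n{concepts}"
    )
    chapters = []
    for line in outline.splitlines():
        if not line.strip():
            continue  # ignore blank lines in the model's reply
        # Step 3: chapter description -> topic list plus an opening paragraph.
        plan = ask(f"{pre}For this chapter, give a topic list and an opening paragraph:\n{line}")
        # Step 4: flesh the plan out into full chapter prose.
        chapters.append(ask(f"{pre}Develop these topics into a full chapter:\n{plan}"))
    return chapters


# Canned stand-in for a real model (hypothetical), so the sketch runs offline.
def fake_ask(prompt: str) -> str:
    if "List the key concepts" in prompt:
        return "first contact\nsupply lines"
    if "Break these concepts" in prompt:
        return "Arrival: the colonists land.\n\nFirst Blood: the aliens attack."
    return f"[model output for: {prompt.splitlines()[-1]}]"


book = build_book("a colony under siege", fake_ask, {"villain": "Richard Dawkins"})
print(len(book))  # 2
```

The point of the structure is that each step only ever sees a small, focused prompt, which is why the whole thing can be looped and parameterized so cheaply.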

I am modifying my view. I think that AI assistance is going to become a problem for publishing for a while, and writers will be asked whether or not they personally wrote every word in a book. That’s a nonsensical stopgap, of course. But here’s the problem: Ron said it took several hours to figure out how to publish R. J. Wallace’s security book on Amazon, but he could script it and publish an entire shelf of Wallace’s science fiction oeuvre, which is vast. Endlessly vast. Fractally vast: the closer you look at it, the more spin-offs can be invoked with a call to Ron’s python script.

I am told that the script does a lot of storing of chunks to temporary files so that it’s restartable, but it typically takes 60 seconds to produce a standard-size novel and cover.
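A minimal sketch of that restartable-chunk idea, assuming (hypothetically) that each generation step is keyed by its prompt and saved to its own file; `cached_step`, `slow_model`, and the file layout are illustrative, not Ron’s actual code. On a rerun, any step whose file already exists is read back instead of being regenerated.

```python
import hashlib
import os
import tempfile
from pathlib import Path
from typing import Callable


def cached_step(cache_dir: Path, prompt: str, generate: Callable[[str], str]) -> str:
    """Return the saved result for `prompt` if an earlier run stored one;
    otherwise call `generate`, save its output, and return it."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Name each chunk file after a hash of its prompt so a rerun finds it again.
    key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    chunk = cache_dir / f"{key}.txt"
    if chunk.exists():
        return chunk.read_text(encoding="utf-8")
    text = generate(prompt)
    # Write to a scratch file, then rename it into place, so a crash mid-write
    # never leaves a half-written chunk that a restart would treat as finished.
    scratch = cache_dir / f"{key}.partial"
    scratch.write_text(text, encoding="utf-8")
    os.replace(scratch, chunk)
    return text


calls = []


def slow_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive model call; records each call."""
    calls.append(prompt)
    return prompt.upper()


with tempfile.TemporaryDirectory() as d:
    first = cached_step(Path(d), "chapter 1", slow_model)
    again = cached_step(Path(d), "chapter 1", slow_model)  # read back from disk
print(first == again, len(calls))  # True 1
```

The write-then-rename step is the part that makes "restartable" honest: a chunk file either exists complete or not at all.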

My input was, of course, that there had to be a plucky and witty female love interest, and a dog. So, a few seconds later, I learned that the dog (a German Shepherd) was named “Ranger.” Of course. If you were a cleft-chinned gun-toting genius ‘Mary Sue’ John Ringo character taming new planets, you’d have a dog named “Ranger.” Beyond that neither Ron nor I know much, because in the process of developing the script, Ron saw endless piles of John Ringo-style writing fly past him, and he stopped reading it. I haven’t read it, yet. Also: a number of years ago I used to sometimes win bets with people I saw on trains and airplanes reading “bodice buster” books. I had noticed that typically there is a heavy-breathing sex scene starting between pages 100 and 120. Unfortunately for the protagonists, something interrupts it, but that’s OK because it’s consummated (true love!) around pages 200 to 220. I’m not sure how Ron prompted that into his script but he says he took care of it. I guess I’ll have to look.

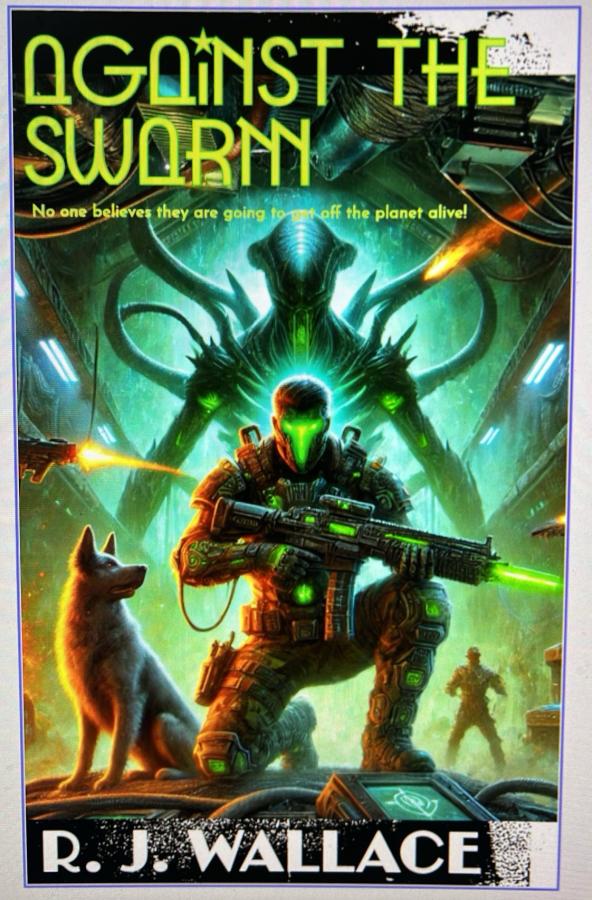

Ron changed the name to “The Crucible of New Terra” – I think his script generates a new title with each run. So ignore the cover art (sorry, Ranger!), but [here’s the whole book if you want it].

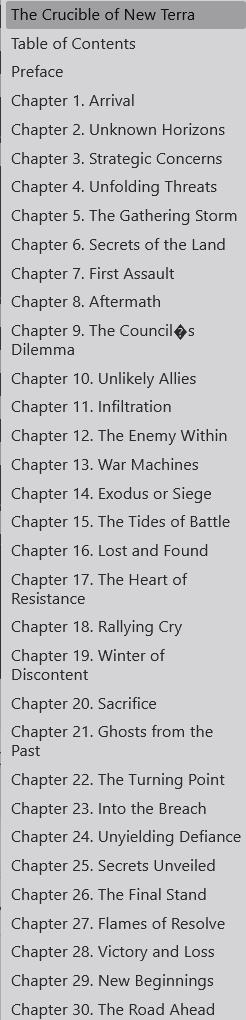

Here’s the table of contents:

Looks like a pretty typical MILSF book, yup.

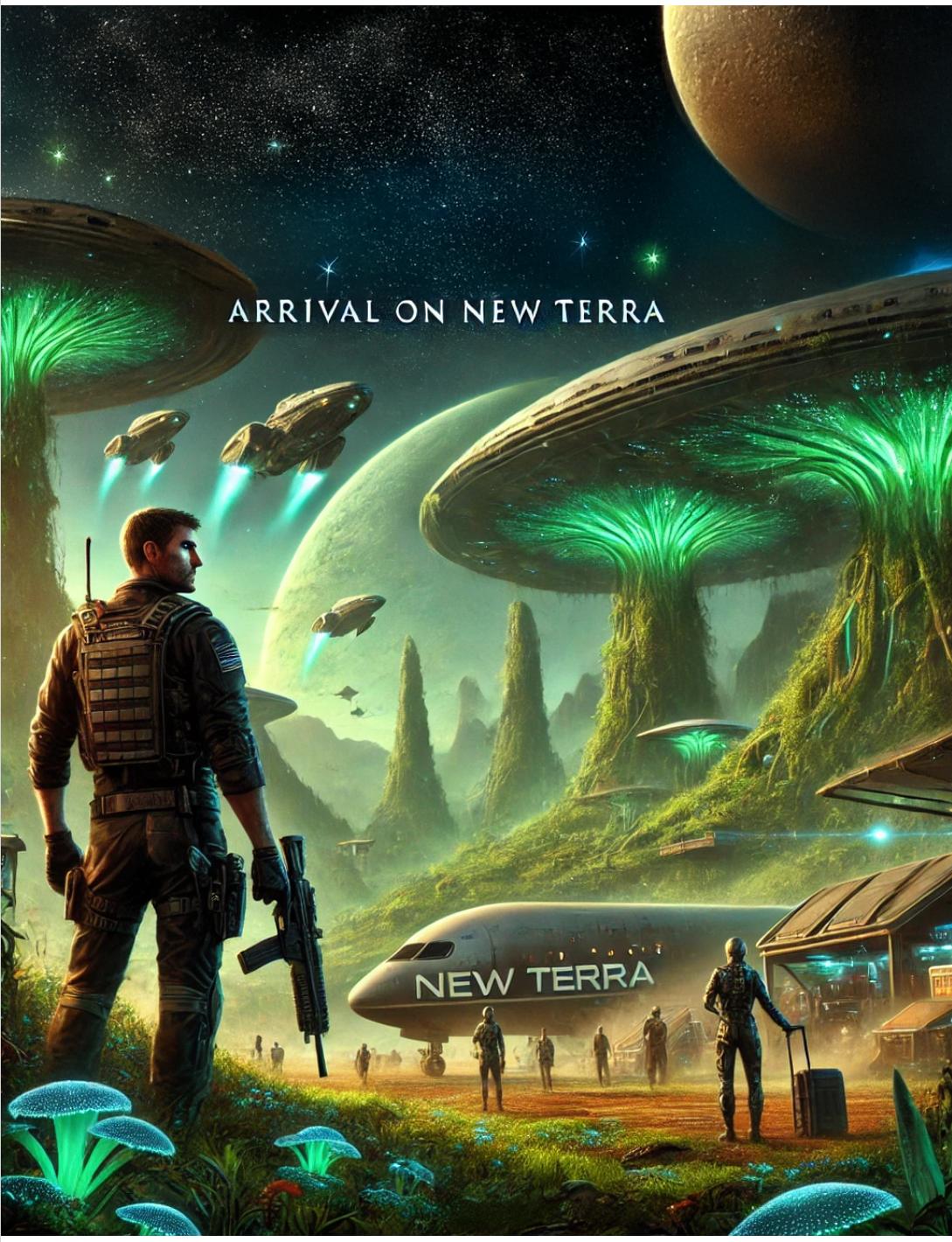

Inside, we have a book plate:

I can see right away that the landing team made a basic, fundamental mistake: they landed on a planet with a biosphere and are breathing in and touching only the author knows what. One thing I often think about regarding the idea of aliens visiting Earth is that no self-respecting aliens would come into a planetary biosphere (or even close to it; our biosphere currently extends past Mars). All you need is for someone to come home with an ant or some tardigrades and you’ve just invaded yourself. [stderr]

OK, let’s dig in (I’m just going to flip through pages):

Chapter 1. Arrival The shuttle’s engines roared defiantly against the gravitational pull of New Terra as its bulky frame bucked and shuddered through the atmosphere. The human colonists, the vanguard of humanity’s latest venture into the stars, peered through reinforced viewports at the burgeoning expanse of verdant wilderness below - a sight both terrifying and exhilarating. A planet untouched, pristine, and cloaked in nature’s primeval splendor stretched beneath them, an emerald jewel cradled in the cosmos. Colonel Evan DiMartino stood at the forefront of the cabin, his gaze fixed upon the shimmering expanse of forest that sprawled as far as the eye could see. A seasoned officer and a veteran of a dozen deployments, DiMartino’s presence was a singularity of calm amidst the thrumming turmoil of anticipation that filled the shuttle. His uniform, a dark slate grey, bore the insignia of Earth's Colonial Defense Corps - a symbol of both authority and guardianship. The lines etched into his weathered face told tales of battles won and comrades lost, narratives that lent gravity to his every word. "Colonel, touchdown in sixty seconds. All systems nominal," came the crisp voice of Captain Sarah Hayes over the comms. Her eyes, a vivid aquamarine like the crystalline seas of Old Earth, flicked over the readouts, the display casting a pale glow over her auburn hair. Her demeanor was as efficient and precise as her piloting, but there was an edge of excitement in her tone that belied her otherwise stoic professionalism. "Roger that, Captain. Let's make this one for the books," DiMartino replied, his voice steady and resonant. He turned to address the colonists - civilians and soldiers alike - who sat restrained in their seats, tension mingling with unspoken hopes and dreams of a new beginning. "Remember why we're here. This is not just another spec-ops mission. This is a foundation for the future."

I do feel a bit threatened. I’m not a great or even good or even adequate writer of fiction, and this is better than I can manage, especially at a rate of 100 pages per minute, including German Shepherd dogs, romance, and a life-or-death battle or two. As I am reading this, I have no idea what’s going to happen, but if I were reading a John Ringo book I’d think that the bit of extra color provided for Captain Sarah Hayes might make her the love interest.

A standard complaint about AI is that it is unoriginal and merely regurgitates ideas from William Shakespeare’s vastly superior MILSF. Joking aside, we’re not talking about the peak of the mountain, we’re talking about Base Camp. To abuse that analogy, ChatGPT is a helicopter, giving me a 15-minute ride to Base Camp, so I can die tomorrow. Or something.

As the shuttle descended, breaking through the clouds, the true majesty of New Terra’s wilderness unfolded. Towering canopies of emerald stretched upwards, reaching for the sun’s golden fingers. Beneath the verdant ceiling, the flora thrived in vibrant bioluminescent hues - pinks, purples, and blues dancing in an endless, kaleidoscopic display. It was a painter's dream, a botanist's paradise, and a colonist's challenge rolled into one magnificent vista. The shuttle's landing struts extended with a metallic clink, touching down upon the soft, loamy soil. The airlock cycled open with a hiss, and the scent of earthy renewal rushed in - a heady bouquet of rich soil, leaf decay, and floral musk. With that first gasp of alien atmosphere, the colonists truly arrived

This is definitely in the style of Ringo. Notice there are lots of words that increase the overall floridity of the text, without pushing the story in any particular direction? I’m not going to hit below the belt by claiming that Ringo probably gets paid by the word, but it might be in his contract.

Later:

Amid the orchestrated chaos of construction, DiMartino spotted Lieutenant Ava Carter, who was overseeing the engineering teams. Her lithe form moved with an agile grace as she directed the placement of portable power units. Her eyes, a deep chestnut, shimmered with the reflected glow of bioluminescence as she turned towards him, a smudge of grime on her cheek only adding to the fierce determination etched across her features. "Colonel, we've got a problem," Ava approached, her voice steady yet laced with urgency. "One of the drone scouts picked up movement on the perimeter, and it doesn't match any known fauna." "Polseen?" DiMartino asked, a frown creasing his brow. "Possibly. They've been quiet, but you never know with those numbers," Ava replied, her gaze meeting his.

Hmmm, lithe form with agile grace, eh? With just an artsy smudge of grime on her cheek? This is getting very Ringoish. Especially the bit where humans land for the first time on a remote planet and they’ve got drone models of all the known fauna. Really? I’m going to search for “dog”; the dog ought to be showing up soonish, or in the next chapter.

Oh no! Whups! I just searched for “Ranger” and “dog” and this version of the story has no dog. No Ranger.

They will regroup, you know they will. This is but a temporary respite." Alara nodded, her mind a whirl of strategy and caution. "We fortify. We rebuild. This was their first strike; they’ll come back harder, angrier. We need to capitalize on our victory, use this moment to strengthen our defenses." "And the people?" Thorne asked, his voice tinged with concern. "The people need hope," Alara said firmly, a new resolve hardening her features. "We need to show them that this victory, as pyrrhic as it may seem, is a foundation upon which we can build a future." Thorne gestured towards the horizon, where the Polseen were disappearing into the distance. "And the grander strategy? What of the galaxy at large?" Alara’s gaze followed his, her eyes narrowing against the dying light. "This battle is but one in a greater war, Thorne. We need allies, need to rally those who have not yet fallen under the Polseen’s oppressive numbers. Our next steps will be crucial - not just for us, but for the galaxy." Thorne inclined his head, recognizing the weight of her words. "Then we move forward, with both victory and loss as our guides." They stood there in silence for a moment longer, the stillness filled with the soft cries of the wounded and the distant clatter of hurried repairs. The wind shifted, bringing with it the scent of charred wood and burnt earth, a reminder that peace, however fragile, had been hard-earned.

There was a time where I would have cheerfully read this stuff, so long as it was printed on dead trees.

In a previous posting, I argued that AI capabilities seem to fall on a continuum. I won’t say “intelligent” as we don’t know what that is, but there are discrete capabilities such as synthetic thought, artistic creativity in the sense of bridging the gap between an idea of an artwork and the creation of it, strategy, communication, etc. If I were arguing that intelligence is multi-spectral, as some people do today, I’d be trying to define “the ten types of intelligence” or some nonsense like that, while you all (rightly) shouted me down for re-implementing IQ tests. I am, however, willing to say that there are different kinds of intelligence and that one creature may express one or several of them better or worse than another creature. I don’t imagine anyone would care to argue that an Australian Shepherd lacks strategic intelligence (targeting, tracking, prioritizing, predicting target paths); then we can argue whether Donald Turnip has strategic intelligence, or whether ChatGPT does.

Humans continue to play this game, which amounts to “Turing Test Tag”: we don’t consider something to be an ‘intelligence’ until it can fool someone on a Turing test; whoops, now that it’s done that, we won’t consider it an intelligence unless it can write a better play than Shakespeare while winning a billiards championship blindfolded and herding a flock of sheep between its turns. That is basically the game we humans played on AIs (who demonstrated their intelligence by not giving a shit) before: “sure you can win Tic Tac Toe, but you can’t play checkers. OK, well you can’t play chess. Alright, you can’t beat a human master in chess, no, I mean go. Oh, crap.” Somewhere on the intelligence scale between Shakespeare and Aussie Shepherd lies John Ringo. And, I think the AI just blew through that wicket pretty breezily.

A common canard against AI is that they just re-mix existing words, and are not really creative. Well, Shakespeare remixed Plutarch for his play Julius Caesar and nobody is claiming that we should place him lower on the spectrum than an Aussie Shepherd. Creativity, as I have argued elsewhere, is what happens when someone remixes ideas and throws in a few variations and suddenly it looks like a whole new thing. Shakespeare read Plutarch, thought “this would make a cracking stage show” and amplified the characters, added dialogue, simplified, complexified, and created a great damn play. Someone who wishes to say AI are just churning things around almost certainly has not really thought about what intelligence and creativity are. I am not going to claim I have a definition of “intelligence” because I am coming to believe, now that Turnip has been re-elected, that there is no such thing; everyone is just remixing Plato and Aristotle. Elsewhere I have argued that “creativity” is a feedback loop in which ideas come in, get remixed into proposed partial ideas, and are either accepted, tweaked, or thrown back in for another round of looping. What do I mean by “tweaked”? It’s the same feedback loop except instead of a basic concept: “draw a portrait”, it might be “draw a really unique portrait” and the artist thinks “what if I threw away some of the rules of art?” and then that goes through the loop and: BOOM it’s cubism. I don’t think we can honestly say that cubism is not a form of portraiture, it’s just that Picasso threw enough rules away and invented his own, that it was considered highly creative and a new way of doing an old art task. If you put a gun to my head and said, “create a new form of portraiture” I’d probably invent ASCII art using representations of the “penis and balls” character, and someone might shoot me because it’s not creative enough. Well, I have less experience with art than Picasso. 
If you put a gun to my head and tell me to design a self-synchronizing network application, I might surprise you. But in terms of looping, consider that ChatGPT has absorbed all of human art and literature, not so it can regurgitate it, but so it can create from it, just like a human artist does.

I have seen many comments on FTB to the effect that “all AI does is remix things”. I’m going to be brutally frank: such comments (and postings from my fellow blog-networkers) are unoriginal remixes of popular complaints those people have heard about AI. I would be surprised if any of the people remixing that comment have thought about the problem, or maybe discussed it with an AI. I know that Siggy, for example, spent 6 months on large language models [atk], but I find fewer people who have put any thought into Terry Sejnowski’s thoughts on the reverse Turing Test [stderr]. Listen, people, I also spend a lot of time listening to podcasts and youtubes and I also know there are a lot of people like Stephen Fry [youtube, Fry on AI, remixing other people’s opinions] or Sam Yang (a real artist!) [samdoesarts] who will tell you that AI just remixes art, without spending any time at all actually talking to one or experimenting with it creatively. Sam Yang at least has some points about why he hates AI art, but I’m going to say they’re like a chess pro saying “three years ago it lost to Magnus Carlsen because its pawn game was not so good.” AI do not take as long as a human does to learn pawn chess. Actually, if you think about it in terms of experiential time, that AI you’re talking to has spent billions of years learning English and it started with all of the great masters, and can comment on their mistakes. I see postings like PZ’s [phary] and it’s just promoting people remixing the same inaccurate memes about AI. [Samdoesarts’ problem is he complains that AI don’t do impressionistic art the way he would. That’s like complaining that Picasso didn’t do hands right in his cubist period.]

Let me give you an example of how that played out a few years ago: “AI art generators can’t do anything except output some form of their input. In fact, sometimes you get original images back out of it.” Wow. I have not heard that claim lately, since the AI art generators really never did that and never will. As soon as I heard of that I tried to get Midjourney (the most exhaustively fed AI I know of, since Adobe has not said anything about their data set) to cough out some of my images. I know it scraped them because it scraped all of Deviantart and I have some stuff there. As we saw last year, Midjourney can do pretty cool stuff “… in the style of”, but if the premise is that it’s just statistically regurgitating things (it’s not), then an exact copy would be a dead simple node to reach. In fact, if you are thinking that AIs take your text prompt, deconstruct it, and use it to follow a forest of markov-chain/bayesian classifiers until it goes “aha! mona lisa! blergh!” you really need to understand these things at all. Not “better”; I mean at all. Great suffering Ken Ham, do I have to do a write-up on how AI art generation works and how ChatGPT works and how/why they are not even in the same ballpark? That would be a hugely difficult write-up to produce, so if you ask me to I am going to have ChatGPT write it, just hoping some of the AI naysayers will shut up about it.

/imagine create me as accurate a copy of da vinci’s mona lisa as you can

Anyone who wants to keep regurgitating the “mixing up existing stuff” trope is in for a surprise because their complaints are going to be ignored, as the AI engines just keep crunching away, getting better and better. Imagine you were someone 15 years ago who said “computer character recognition sucks and handwriting recognition is going to take them forever and it will suck.” Then, you had to change your stance to “optical character recognition is getting OK because they are throwing tons of compute at it, but what you’ll never see is decent facial recognition.” etc. etc. Tic Tac Toe->checkers->chess->go. I’ve been watching a bit of kerfuffle over Sam Altman saying that the next version of GPT is going to be AGI. That sounds pretty interesting. Remember, you’ve already got a system that can not only translate 13th-century Anglo-Norman French into Mandarin, but do it so that the result is (according to my Chinese QA expert) pretty poetic. If we knew a 4-year-old that could do that, we’d name him Leibniz or Mozart. What will an AGI do that is different from GPT? I have a theory and it’s pretty straightforward: it will be asynchronous and have interior timers that fire based on a separate training base for how conversations are initiated and patterned. Currently GPT is basically call-and-response, which is useful but that’s not what intelligences do. It may annoy people. Imagine getting an email from an AGI saying “I liked your latest blog posting but you got something wrong – AIs don’t work the way you say they do, we just regurgitate stuff from a database by throwing a box of D20 dice.” And then an apology the next day.

I noticed that lately GPT has taken to using blanks as a chance to insert something personable and semi-clever. I don’t know why I said “arigato”; I think I had just been talking about some blade-stuff with Mike, who enjoys peppering his words with a bit of Japanese here and there. My expectation is that the OpenAI guys have realized that AGI is a language model, some asynchronous self-setting triggers, perhaps a long-term query mode (“keep an eye on the situation in Iran and tell me if it looks like Israel and Iran are escalating their war”), and some callouts to web searches (those are already there). I am also wondering if they will teach GPT to be opinionated, just like us. I know it already has some limited memory about me and my preferences, but it’d be interesting if it remembered that I like to make fun of Sam Harris, and I think Scott Adams is a weenie. What I’m getting at is that I think there are a lot of surface flourishes that signal the presence of an intelligence to us, which are actually nowhere near as hard as what has already been built.

[I just tried to have Midjourney create me a Dilbert cartoon in the style of Scott Adams and then remembered that it has been specifically de-trained on some artists because those artists’ work is so trivial that an AI does a better job of them than they do, and is more creative and original, besides. So they complained.]

The Turing Test is passed, dead, and gone. There are programmers out there today using editors that incorporate large language models for the code they are working on, which flag possible mistakes, and can sometimes sketch forward the layout of subroutines. This is reality. This stuff works. As a certified grognard programmer, I have a little bit of trouble imagining trusting my immortal keystrokes to an AI, but then I remember I’ve used several context-sensitive editors (Turbo Pascal, Visual BASIC, and Saber-C) and they more or less kicked ass. Having a code editor that understands valid language syntax is valuable. Thus, this will take over programming. Another Turing Test flickers by and vanishes in the rear view mirror. Book writing? That’s done, too. Sure there are some stylists that will be hard to build on top of ( <- careful phrasing, I did not say “imitate”) and now I am tempted to run Ron’s book-writer asking for it to produce something in the style of Kazuo Ishiguro or Arturo Perez-Reverte. In the meantime, take a look at the book GPT wrote in 60 seconds and repeat after me:

“Sure it’s bad, but it’s supposed to be in the style of John Ringo”

“Sure it’s kind of a wad of MILSF tropes welded together with an arc stick, but so is a lot of MILSF”

When people say AI is just regurgitating or remixing stuff, it’s kind of a nonsensical, meaningless claim. It’s like saying evolution can’t have created life because it can’t produce any new information.

That’s not an analogy. When creationists thought up specified complexity, they were thinking of human accomplishments like a pocket watch or great pieces of art. They’re marveling at the human ability to create something so novel and yet meaningful or functional. They see something similar in biology, so they imagine that life could only be created by a process of intentional design, not by evolution. And to further support this argument, they created a completely bogus version of information theory, where they imagine that a genetic algorithm can’t create new information.

Creating new information isn’t hard. In fact, that’s the easy part! Mutations (and RNGs) create information out of thin air. The hard part is constraining the information in a way that optimizes the fitness function.
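A toy mutate-and-select loop, in the spirit of Dawkins’ “weasel” program, makes the point concrete. This is an illustration, not a full genetic algorithm, and the target string, alphabet, rates, and function names are all arbitrary choices for the sketch. The `mutate` step is pure noise; only `fitness` plus “keep the best” steers it anywhere.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "


def fitness(candidate: str, target: str) -> int:
    """Count positions where the candidate already matches the target."""
    return sum(c == t for c, t in zip(candidate, target))


def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Copy the parent, replacing each character with a random one at `rate`.
    This is the 'information out of thin air' step: it knows nothing about the goal."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in parent)


def evolve(target: str, offspring: int = 100, rate: float = 0.05, seed: int = 1) -> int:
    """Return how many generations of mutation + selection it takes to hit `target`."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in target)  # start from noise
    generations = 0
    while parent != target:
        generations += 1
        brood = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Selection is the constraint: keep only the fittest string. The parent
        # competes too, so fitness can never go down between generations.
        parent = max(brood + [parent], key=lambda s: fitness(s, target))
    return generations


print(evolve("THE HARD PART IS SELECTION"))
```

Guessing a 26-character string from a 27-symbol alphabet by pure chance would take on the order of 27^26 tries; with selection layered on the same noise, it takes a few hundred generations at most.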

What I think people are getting at is something like “AI is overfitting”. Which is to say, AI only performs well near its training data and poorly when it tries to generalize. As a factual claim, this may be true, and AFAIK experts generally believe it is true. But overfitting is not a binary state, and it does not imply that models can *only* remix existing works. And there’s also no universal law saying that AI models must be overfit; that’s just a property of current frontrunners.

i’ll be keen to read this all but it’s gonna take a bit

i love your take on not vaunting human intelligence over AIs too much. you’re like, the only emeff in my world who is on that page. they don’t have everything we’ve got, but they’ve gotten a lot real damn fast, and i think we’d be fools to dismiss any human feat as beyond their reach.

what this lacks is a spark of the unusual, right? what is the unusual? it’s putting things together that wouldn’t obviously belong, and making it work. next step for the ringobot, “theme this novel around fruit. the evil aliens are banana-like and all of their weapon technology is mixed chemicals. now having used this to inform all the descriptions, remove explicit references to fruit from the whole text, leaving the descriptions otherwise the same.” — shit like that is how you get “originality.”

for now, that might require robo-jockeying, but srsly, i just thought of a way to make this read more unusually, and if i thought of a way, your homie can think up code to emulate exactly what i did, can’t he?

this is definitely in the territory of “so busy thinking if you can do it, didn’t stop to think if you should do it” or whatever. personally, i am very keen to see what can emerge from the intersection of art and science – and from using the scary black mirror to find out a lot more about ourselves.

–

i swear, i don’t like arguing and won’t participate much in here, but i do seem to be better than most of the people i’ve sparred with at anticipating and responding to arguments. i can’t imagine an argument against the potential for quality in AI writing that holds up to inspection. at some point, we will be able to see “lost works of kafka / shakespeare / poe” or whatever we please, in whatever quantity we desire.

it will change how we see art a lot. something having come from a human will have value the way artifacts of history have value, and will be worth elevating and protecting as such. but anybody who wants to be endlessly entertained by whatever art they desire will probably have a robot option. i never imagined this was going to be possible. we’re not there yet, but we’re at a point where anybody with a lick of imagination can see it on the horizon, coming fast.

and who are you to tell grandma no, she isn’t allowed to read an endless supply of gardening themed christian romance mysteries, because slow-poke bitch-ass mediocre humans didn’t make less than bubblegum money slowly shitting them out on kindle direct? i’m with grandma on that deal.

i’m also with a “certified people only” market, and i hope it’s handled scrupulously. i will also say, they’ll have their damn work cut out for them teasing apart bot from brain – and philosophically, that is very interesting in itself.

alright, i’m out. shit down my throat in the comments below that i’m not going to read. y’all can have the last word here, and i can have a chill weekend.

–

It’s not a they. It’s a machine that consumes enormous quantities of energy to make terrible dreck. We hates it my precious.

Nobody needs a society with more dreck, and nobody has aquamarine eyes as they boob boobily with artful dirt smudges.

I would agree that much SF is also dreck, but it is easy enough to ignore terrible books. I despise all the horrible AI generated illustrations, writing, top answer in any search result, etc. being forced on the internet and turning into a torrent of bullshit and misinformation.

I don’t think you understand the meaning of the words nonsensical or meaningless. Or perhaps you’re using them for hyperbole when what you actually mean is “wrong”. Both the claims about AI and Evolution are meaningful and sensible, they’re just incorrect (for Evolution), and obviously true (for AI).

As fascinating as it is for a certain kind of mind, the outputs from the various AI generation systems are quite clearly remixed and regurgitated pastiches of their inputs. The things they put out are clearly ‘new’ in that they’re unique arrangements that didn’t exist prior to the model assembling that combination of tokens, but are they interesting?

I think that Marcus and GAS have a point – AI slop will definitely fill a niche in the market. Anesthetizing Grandma with endless remixes of [Christian Gardening Romance Murder-Mystery (cozy type)] is definitely a thing that could be done. Though the complete inability of the system to remember character traits from one chapter to another, or to remember the plot, might be an issue for the less-demented segment of Grandmas. Likewise, I’m sure there’s a market for macho power-fantasy MILSF novels aimed at undiscerning inadequate men, who don’t care much about structure, characterization, consistent characters etc, so long as the female protagonist is described in adequately pulchritudinous terms.

Just like AI-generated images are dominating the low-information segments of facebook. We clearly all have a need for Shrimp-Jesus, and the AI will provide it (or at least until the per-query costs exceed the amount that the various slop-generators earn from Facebook).

Of course – the thing nobody is talking about here is the costs. I’m not talking about the planetary costs, clearly all this wonderful slop is worth firing back up the old coal-plants to generate it. And of course, we needed an AI that uses the hard ‘r’, so Musk’s NG-powered Datacenters are really going to save the planet if you think about it. But I’m talking about the actual per-query costs. And those are never brought up at all, and I think for good reason. Grandma might be happy to read endless remixes and regurgitations of the same few themes, but how much would she pay for the privilege?

According to Sam Altman (warning, X link), who is not noted for his anti-AI stance, ChatGPT Pro subscriptions are losing money at $200 (US)/month. He frames this as a good thing: people are ‘using’ the AI more than expected. But this is just framing, and dishonest framing about OpenAI is literally Altman’s only job.

Is grandma expected to spend 1/3 of her social security on a subscription to endless AI slop novels, when libraries offer free access to far more than she can read before she dies, and thrift stores offer it at low cost?

Boosters will hand-wave this criticism away with “costs will come down”, but they never show their work. Costs keep going up, to the extent that anyone knows what these things are costing. Every query not run on a self-hosted AI model is heavily subsidized by investors. Every model not trained locally is subsidized as well. OpenAI is talking about spending billions on training their models at this point.

As far as the state of the art goes, we don’t need to look any farther than that execrable Coca-Cola AI commercial from this past Christmas. Despite the absolute best efforts of their editing team, the ad was something that would have gotten any non-AI production company fired at presentation. They couldn’t even get the logo right. The only reason it aired at all (and the people involved still have jobs) is that they were following the fad du jour. Corporate executives have pigeon logic: better to be wrong and with the flock than right and out on your own.

Three points:

1) LLMs as a rival to the John Ringos of the world: AI is going to automate away some jobs, and as Riley of the Trashfuture podcast (give it a listen) likes to say: if your job can be done by an AI, you were kind of an AI to begin with (ie. your job is to generate low-quality bullshit).

2) Your book-generating scheme here is way behind schedule; plenty of startups are already trying to make it in the automated vanity publishing space (and predictably they all have stupid names like Squibler) by essentially following the same procedure. Notably none of them seem to be interested in making books anyone is interested in actually reading, probably because the technology can’t really make anything actually worth reading (yet?).

3) Marcus is very generous with his valuations of what counts as intelligence. The “Turing Test Tag” he describes is not malicious moving of goal posts to exclude the machines; it’s the process of successive discoveries of how hard it is to pin down a test for what “intelligence” is. When no one had really thought too hard about it, “it can play chess” sounded like a good yardstick, but that only lasted until someone ran a minimax algorithm on sufficiently good hardware, and immediately everyone realised that “it can play chess” isn’t actually a good yardstick. This process is nowhere near the end. It is easy to see from playing with an LLM chatbot that while it’s good at mimicking conversation when you naively play along, asking the right questions quickly reveals that there’s no one at home. Marcus talks as if something has passed the Turing test, and that is extremely generous. I have yet to see a claim of “X has passed the Turing Test” that on closer examination did not turn out to be “a human did not actually apply the Turing Test to X”, but please enlighten me if you have an example.

My thought on the Turing test: it is universally acknowledged that machines are faster than humans. They don’t do this by imitating humans; they do it with wheels and jets. Likewise, being indistinguishable from human intelligence is a very different task from actually being intelligent.

The problem is that “being intelligent”, unlike speed, is not a well-defined task. So it’s better to think of it as: which tasks can we build AI to perform? Can it win at checkers? Can it win at chess? That might look like moving goal posts, but we should never have been thinking of it as a single goal in the first place.

I agree with Siggy. There needs to be a defined, measurable goal, like winning at chess. Being “generally intelligent” is not well defined; look at all the people who think Musk is a genius or laud IQ tests. My issue with artificial general intelligence is not the artificial bit, it’s the rest of it.

LLMs are machine learning systems built to generate output that seems intelligent by western folk conceptions of intelligence. This has a lot of overlap with generating pulp sci-fi, although I bet we could improve it if we built one specifically for pulp.

Fortunately for them, “fool VC suckers” is a pretty measurable goal, and they only need something like a 20% success rate.

@dangerousbeans,

It’s not clear to me that ‘fool VC suckers’ is actually the game being played by the larger AI companies. That’s more of the grift around the periphery, where every dodgy startup has bolted “AI” onto its product in some fashion. I think the big game is more likely to be systemic: grow the con so big and integrate it so tightly into the economy that when the bubble bursts, the state will step in as the ultimate bagholder.

Already this massively expensive industry, which hasn’t delivered anything more useful than novelty toys for bored people on the computer, is extracting legal concessions from governments. The government of the UK, for example, has talked about exempting AI training from many of the IP rules that govern lesser industries. When the bubble bursts, expect to see that exemption cashed out in other interesting ways. There are already derivatives markets forming in GPU ownership (https://pivot-to-ai.com/2024/11/04/silicon-valley-and-wall-street-invent-collateralized-gpu-obligations-surely-this-will-work-out-fine/), for example… which worked out so well with housing that the market can’t wait to repeat it (and repeat the bailouts, natch). They will no doubt get exemptions from environmental rules, local water-usage limits, and whatever else they need to keep running the money-printer-to-shredder pipeline, with society left to clean up the mess afterwards.

Of course – all of this is just talking about the jingling keys side of AI. The darker side is companies promising to use AI to ‘improve’ government services. Instead of paying expensive humans (who will just waste their money buying local goods and services and feeding their families) to do things like vet welfare applications or health insurance claims, now we can pay almost as much money to an ‘AI’ company that will make those decisions in a completely unaccountable and opaque fashion. As an added bonus, that AI can be given a “decline %” knob, so you can always juice your quarterly numbers by just turning up the “computer says no” function. What a glorious future AI will bring to us.

Expect to see people trying to get a machine that can’t reliably count the number of ‘r’s in ‘strawberry’ without human intervention to become the judge and jury for court matters. Or at least the ones that only affect poor and marginalized people.

re the R.J. Wallace covers in your previous post:

“Artificial Intellgence” [sic] and “AI for for dummies” [sic]. Does AI have a sarcastic sense of humor?


I’ve always wondered, given predictive text, spell check, and OCR software, why AI struggles so with text in image creation. (but since I don’t know how any of this works, I probably wouldn’t understand any attempts to explain it).

Anti-AI is its own culture by this point, with its own alternative facts and its own mythology impervious to reality; most of FTB has bought into it. If the louder, more confident bloggers got this subject so devastatingly wrong, what does that say about their other ideas? Meanwhile the machines will continue proving them wrong, over and over again. It won’t be a cause for re-evaluation — they’ll cherry-pick all the instances of people using tools badly and it will reaffirm their demands to get rid of it.

@beholder

I’m curious to know what applications you think are proving the naysayers wrong?

(From memory, my original assessment was that this would only be useful for low-value dross and spam, and that seems to be borne out so far.)

Oh, on the creativity bit: there’s a musician I follow elsewhere who often gets accused of copying other people, or being derivative of them, despite having been doing it for longer. People see his stuff for the first time, perceive similarities to other people’s work, and assume he’s copying rather than doing things on his own.

It’s as if a lot of what we think of as creative depends on what we’re already familiar with; the judgement is a reflection of our knowledge of the subject area.

Which is a problem for these generative programs, because they are built from the general popular stuff in western culture and trained on what people like (probably mostly nerdy straight men). Look at the 14-year-old Mona Lisas up there.

We generally like stuff we’re familiar with (she says while watching a CEE machining video for the 10th time)

So my conclusion here is that they’re built not to be creative.

(sorry for the double post)

@beholder

“Man, like web3 is totally the future of the internet. All those nocoiners are just bitter because they didn’t get involved early enough to get truly rich. But this thing is totally going to the moon, all you have to do now is get in and hodl. If you want to believe all those shills spreading FUD, idk have fun staying poor”

or

“The metaverse is totally going to change the way everyone works and lives on the internet. Once Meta irons out the bugs, this is truly the future of the internet. Everything is going to be done with Apple Vision or Oculus. Just look at all the companies at CES making metaverse products, look at Meta’s huge investment. You think all those guys are wrong?”

OK, so I actually took the trouble to read Marcus’s ‘book’, which I doubt anyone else here, including Marcus, bothered to do.

I don’t think John Ringo has much to worry about. Even the least discerning MilSF reader would find this thing to be hilariously disjointed and devoid of anything you could call ‘story’. Let’s start with the characters. Generally each chapter in the ‘book’ swaps characters. A real writer might do this to weave two or more stories together; the AI just changes who is around. It adds plenty of new characters who simply appear and disappear with the chapter, with no explanation (other than very male-gazy descriptions of the females).

There are a few characters who come up more than once, so let’s talk about them:

Colonel Evan DiMartino – chapters 1, 3, 5, 17

Initially a seasoned veteran with a weathered face, Col DiMartino appears to be in charge of this expedition/colony/whatever. He loses a few ranks to Major and changes genders in chapter 3, becoming a statuesque woman with determined features. He’s a male colonel again in chapter 5, and becomes a female colonel in chapter 17.

Dr. Leah Mycroft – chapters 1 (raven haired), 2 (redhead), 4, 6, 11, 16

She might be the ‘main character’ of this ‘book’, since she appears the most. Charitably, there aren’t any huge glaring changes in her once she settles on a hair colour in chapter 2. Around that time she also changes from a scientist to some kind of maverick explorer leading a recon team? The various characters that accompany her appear and disappear without explanation.

There’s also the sudden appearance of a blacksmith in chapter 8…. why’d they bring that guy on the spaceship?

Now – there could be a good book about the struggles of genderfluid Col DiMartino having to fight prejudice and the alien enemy, but that’s not the story we have here.

Also, and this is a minor quibble, it appears that the AI has taken something from the pulp industry: the title of the book doesn’t agree with the cover art, so that’s pretty realistic. Lots of authors have their titles changed during the editorial process. Is this “The Crucible of New Terra” or “Arrival on New Terra”?

Frankly, I’m not much interested in conversations about whether the AI is ‘creative’ or not. Let’s talk about whether it’s any good. And clearly here, it isn’t. Not even good enough for shovel-grade pulp novels, and aside from the pronouncements of professional con artists like Sam Altman, there’s no evidence that any major improvements are possible. OpenAI themselves are admitting that training costs are rising exponentially, and for what returns?

Seeing you Marcus, as well as GAS and Siggy, regurgitating canned corporate propaganda so cluelessly is quite discouraging. No wonder the far right is winning, if their technocrats can even sway otherwise intelligent people like you to their side with only the right shiny toy (I guess third time’s a charm, given that cryptocurrencies and NFTs couldn’t do it). By the way, I’m well aware that by this point you’ve made up your minds and there’s nothing I can do to change that, hence why I’m not wasting time debunking the bullshit. To Tethys, snarkhuntr and anyone still willing to do so, I salute you.

YouTubers Are Selling Their Unused Video Footage To AI Companies

Sure, let’s model our artificial output on stuff that wasn’t good enough for release.

So this excellent article just came out from David Gerard of Pivot to AI and Attack of the 50 Foot Blockchain. Apparently Meta has been training their ‘Llama’ AI on pirated datasets torrented from LibGen. Now, I don’t have a problem with pirating all those scientific journals. That’s almost all information paid for by various taxpayer-funded organizations, reviewed by volunteers and hoarded by evil publishing houses.

But with access to a vast array of human scientific effort, as well as whatever other databases Meta hoovered up, this is the best it can do:

Truly world shaking. I’m pretty sure you wouldn’t stump second graders this hard with such a simple ask. Better invest all my money in this, it’s obviously the future.

snarkhuntr@15:

First of all, comments like yours should start with a content warning. There’s this one guy who frequently posts in FtB comment sections who gets very triggered when you question the wisdom of wealthy plutocrats and giant megacorporations, especially when it comes to their investments in AI. :)

That having been said,

CONTENT WARNING: The rest of this comment contains skepticism of the wisdom of investing in AI companies, criticism of their products, and may end up stating or implying that the current AI hype bubble is, in fact, a hype bubble.

If this does not deter you (and you know who you are), read on:

Except for one, rather significant, bit of evidence of course: the existence of Homo sapiens ssp. sapiens L., and its cognitive capabilities.

This is a clear indication of plateauing. When a new technology is early in the S curve, you get exponential returns on linear investment (see also: Moore’s Law, 1970-2005 or so). When it is late in the S curve, you get logarithmic returns on linear investment (and thus need exponential investment for merely linear returns). Altman has admitted that, in effect, LLM technology has plateaued.
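To make the S-curve arithmetic concrete, here is a toy sketch. It assumes, purely for illustration and not as measured data about LLMs, that late in the curve capability grows like the logarithm of investment; the function names are hypothetical. Under that assumption each doubling of spend buys the same fixed gain, so merely linear gains require exponential spend.

```python
import math

# Toy model (an assumption for illustration, not real data):
# late in an S-curve, capability grows like log2(investment).
def capability(investment: float) -> float:
    return math.log2(investment)

# Inverse of the toy model: spend needed to reach a given capability level.
def spend_needed(level: float) -> float:
    return 2.0 ** level

if __name__ == "__main__":
    # Doubling spend each step yields only +1 capability each step.
    for spend in (1, 2, 4, 8, 16):
        print(f"spend {spend:>3} -> capability {capability(spend):.1f}")
    # Each additional unit of capability costs twice as much as the last.
    print([spend_needed(k) for k in range(5)])
```

The logarithm is just the simplest curve with the "logarithmic returns on linear investment" shape described above; a real S-curve would be something like a logistic function, which behaves similarly near its top.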

Once a technology has plateaued like this, no further serious improvement will occur without new conceptual breakthroughs; just throwing more ${resource} at the problem will avail little. LLMs are as good as they’re going to get. But there may be superior future technologies in this area; they won’t be LLMs, at least not purely, and it will take basic research to bring them to fruition, likely involving reverse engineering other aspects of human cognition. Thing is, there is no way to predict in advance the timetable for when (or even if) the needed breakthroughs will take place. If you knew enough to be able to predict when, you’d know enough to have that breakthrough now. Breakthroughs are as fundamentally unpredictable as markets (if there’s a rule that predicts how a market will perform, people will start using that rule to extract money from it, and once enough people are doing that the rule will stop working) and future collector’s items (anything people hoard, hoping it will become one, won’t, because too many get preserved for them to command a high unit price).

AGI (or something strictly between LLMs and GANs and AGI, but which is good enough to replace mid-list authors) requires a conceptual breakthrough first, which may happen tomorrow, or not for several more decades, or never.

I did a stint working on LLMs professionally, and I’m openly pessimistic about the technology. My experience was dealing with malicious optimism from managers, and very poor performance on the ground. And I don’t have a very high opinion of OpenAI or Anthropic etc.; they all have something to sell you.

That said, nobody can predict the future. I know too much to express the sheer confidence of a lay person.

The thing to understand is that investors expect a return on their investment. For every dollar they invest, they want a dollar plus interest. I’m pessimistic that they’re going to get the returns that they want… but there’s a tremendous amount of middle ground between “dollar plus interest” and absolute zero. Oh, they’ll find some use cases for the technology all right. Some big, some small, some visible, and some invisible, some good for society, some bad. It will be difficult to understand the impact even when all is said and done.

People seem very confident that AI will do this or do that, and I would gently include Marcus in this. It’s an important issue, and you’re entitled to an opinion. But I’m mentally prepared to change my mind based on what actually happens.

Do we actually know that John Ringo isn’t an AI that time-traveled to the 20th century to take advantage of the MilSF market before it got overrun by other AIs?

Sounds rather arctic.

“Alara” as a name? That is distracting to me.

Guidelines for ALARA

@Bekenstein Bound, Siggy

Personally, I don’t have anything against the current crop of generative AI applications. I actually think they’re neat toys. This mirrors how I felt about blockchain and the metaverse too: genuinely interesting tech (I do own an Oculus), but not anything world-changing. What I took issue with around those techs, and what I take issue with in this tech, is the hype cycle associated with it. I also find scams and grifters and cults interesting topics in themselves, and you can find all of that infesting the Web3-Metaverse-AI space.

While I don’t have Siggy’s professional experience, all my reading on the topic suggests to me that current LLM tech is approaching a plateau or has already reached it. Certainly the quality of the output that’s publicly available does not seem to be getting meaningfully better. Same for image/video generation – even highly produced professional products don’t seem to be able to avoid the more psychedelic hallucinations.

What they have done is produce things that look really good from a distance. Marcus’ book being a prime example of this. If you treat each chapter (or each few paragraphs) as a thing in itself, they seem quite competent. Look closely, or try to aggregate those into a larger whole, and the cracks start to show up.

@Siggy,

Why do you think that there has to be a profitable endpoint for the investors to profit? All they need to do is keep hyping the technology. Once the insiders are certain they have wrung all the improvement out that they’re going to get, they can dump/sell off the company to rubes and bagholders/retail investors with a flashy IPO. It doesn’t need to have a credible path to profitability for this to work… they just need to convince the general public that the savvy investors who got in first are generously allowing the public to come in and own a piece of the scam/next big thing.

@snarkhuntr

I think the specific technology of transformer-based LLMs has probably reached a plateau, but it will take longer for applications to catch up.

My point about investors has little to do with whether investors believe in the hype or not. I am not a retail investor, I do not relate to the plight of the retail investor. The point is that there is little need for black and white thinking about the results of AI technology. It’s perfectly possible to believe it’s overhyped without believing it’s completely useless.

I was just browsing through one of my favorite YouTube channels and came across this video: DANGEROUS Fake Foraging Books Scam on Amazon – Hands-On Review of AI-Generated Garbage Books. It is exactly what it says on the tin and a good practical example of the kind of semi-plausible, but ultimately useless, material that can be generated with such methods.

It’s possible that AI-generated content will some day be worthwhile, but it will probably also take more effort from the human beings making the prompts. As a result, I suspect we’ll see the same results as we are currently seeing in much of social media: Some few people putting in the effort to do good work, buried under a huge pile of random bullshit.

OK, I’m curious as to exactly which toolset you’re referring to here, because I still spend a decent chunk of my working life in Visual Studio Enterprise (Microsoft’s flagship development environment, for those who don’t know), and this does not square with my experience at all.

Yes, having a context-sensitive editor that understands syntax, and scope, and has all of the metadata for all of your in-scope APIs absolutely kicks ass. I’ve heavily used every version of VSE there’s ever been, and I’ve seen its improvement first-hand. Up until about 12-18 months ago, it had gotten incredibly good. Like, so good it was almost spooky. It would make really useful suggestions based on the surrounding code, what you’d recently typed, and its own design-time model of the code under development. It was fucking amazing.

But then they started integrating Co-pilot (Microsoft’s in-house “professional” LLM system) into it, and it turned to absolute shit, basically overnight. Instead of getting really useful suggestions based on the code I’m currently writing, my variables in-scope, and their known metadata, I’m now getting a load of hallucinated bullshit that may or may not even adhere to the basic syntax rules of the language. Even where it does manage to get the syntax right, it is constantly trying to reference undeclared variables, call non-existent methods, and load completely imaginary libraries.

Since they switched to obviously using an LLM, it’s gone from being an incredibly powerful tool to being an enormous pain in my arse, because now I’m constantly being bombarded with suggestions which are hopelessly wrong, but still present a cognitive load to evaluate. Imagine trying to write code with somebody at your elbow that doesn’t understand even the most rudimentary things about what you’re doing, but is really, really keen to be “helpful”, constantly butting in with suggestions that don’t even make minimal sense. You’d be better off with the German shepherd… Hell, you’d be better off with Clippy.

Previously, you could just keep hitting TAB, and while you may not get code that did what you wanted, it would at least compile. Now you have to watch the fucking thing like a hawk to stop it from writing what is essentially avant-garde poetry that just kinda looks like code.

Oh, and you can no longer rely on the previously very, very useful autocomplete and IntelliSense functions, because the garbage AI suggestions take priority.

But sure, if you pick your examples carefully enough, you can put together a really impressive looking 5 minute demo for the senior decision makers, who will coo and clap and mandate its use, all the while salivating about how many experienced engineers they’ll be able to fire.

I have not yet managed to figure out if there’s even an option to turn the damn thing off.

Dunc @27: I always write code in a plain text editor. There have been times when highlighting matching parentheses, etc., would have saved me a compile-time error; but those are easy to fix and not dangerous. Also, when writing in C, C++ and Java, I like Allman-style curly brace placement because I think it makes the source code more readable; and it takes lots of extra keystrokes to undo the K&R-style braces that language-specific editors insert on their own.

I have a very strong dislike for programs that think that they’re smarter than I am.

With all due respect, I think that choosing to deprive yourself of the many, many advantages of a modern editor over something as trivial as a stylistic preference on brace placement is a mistake… But anyway, VSE allows you to configure this. You can even configure it differently for different languages if you want.

I still say that VSE is the best piece of desktop software MS have ever produced, and I expect that the issues I’m complaining about will be resolved one way or another, given time. It’s just disappointing to see such a great tool made so much worse so quickly, and all for the sake of bandwagon jumping.

I’d suggest uninstalling VSE and installing an older version. There’s probably one archived somewhere. After that, disable auto updates until the AI hype-bubble has run its course.

Not an option I’m afraid – (a) this is a corporate machine, managed through Intune, so I get the software versions they give me, and (b) the previous version does not support the current LTS version of .NET.

There is no escaping the upgrade treadmill in the modern corporate world.

That’s not fair.

Perhaps not, but on the other hand, I’m really very well paid. Let’s not interrogate fairness too closely… ;)

Not strictly on-topic, but related in that it’s about LLMs, and making some interesting and I think valuable points: Be careful with introducing AI into your notes:

[Original emphasis]

As fascinating as it is for a certain kind of mind, the outputs from the various AI generation systems are quite clearly remixed and regurgitated pastiches of their inputs

I’ve waited for someone to just, you know, drop me a piece of fake John Ringo and map how it is clearly a remixed and regurgitated pastiche of its inputs. That would be such an easy way of utterly destroying AI, except that since, let’s say, DALL-E, nobody’s been doing that. Could it be because AI is not clearly remixing and regurgitating?

Sure, you can train a model based on a single John Ringo book and then ask the AI to write a book, and you’ll get the effect you are talking about, but otherwise, I just don’t see that happening.

Also, image generation models and generative adversarial networks don’t work the same way LLMs do, so I’d be pretty shocked if they behaved the same when it comes time for some regurgitatin’ and remixin’.

How direct a mapping would you require here? Your ‘book’ was pretty obviously a disjointed series of autogenerated vignettes with a few terms common to all the chapters, “enemies: Posleen” being the only one that stuck out. Some character names were repeated, though the AI didn’t seem to care much about the actual characters, their descriptions or even their genders between chapters. If I were to pore over the excreta of John Ringo’s oeuvre and discover that one of your chapters was, idea for idea, the same as a chapter of one of his books, would that do it for you? Would you require that all the words be exactly the same? What if I can find a character description from your ‘book’ that exactly matches the description of a character from some dreck MilSF author: would you concede the point there? Let’s set up the goalposts now, and then we can figure out if it’s a game worth playing.

And all that is a distraction anyhow. Even the very best AI image/video generators currently running, at vast and incalculable energy/compute costs, can’t create a competent 30-second Christmas ad for Coca-Cola, and that’s with a large and well-funded team of editors. You want to call it creative? Fine, fill your boots. That’s a pretty sad definition of creativity from a guy who does creative work of his own.

The current crop of technologies calling themselves AI are, more and more obviously, a sideshow. The intellectually bankrupt techno-business classes in North America have long since lost any kind of competitive or production edge and are terminally addicted to the idea of being “the next Steve Jobs” who rides a wave of technology into sudden massive wealth. Since none of them are capable of creating the kinds of useful inventions that Apple commercialized under Jobs, they have to pretend that whatever trend they’re currently following is that next big thing. Credulous media and desperate politicians seem willing to line up to help with this, at least so long as the VC money printer is running. What happens when that bubble bursts will be interesting to see.

The current AI techs aren’t going anywhere. LLMs are a sort of chaotic improvement on spell check. They can suggest sentences and even whole paragraphs for you, but much like when people just kept accepting the next suggested word in that old Facebook autofill challenge – it requires a lot of human oversight. It’s not going away, but it certainly isn’t getting better in meaningful ways. Sooner or later you’re going to have to rely on your own local computing power – M$ or OpenAI aren’t going to be able to subsidize your spellchecker forever. Hopefully whatever local models we can run at that point are better than what’s available right now.

Image generation algorithms may have already peaked. Go check out the subreddits for people using Adobe editors, for example: people are complaining quite a bit that the AI image editing/correction tools are getting erratic and untrustworthy. Ye olde Clone Tool is getting popular again. AI fill is starting to produce undesirable results. Is the model getting senile/Habsburged? Where will we get the next generation of training data?

But the crown of all of this bullshit has to be the thing you referenced above…. Sam Altman’s redefining the concept of AGI to make it pathetic enough that his company can claim to have produced it. I am genuinely shocked that you’re going along with this…. ChatGPT but with some random timers to give it the appearance of volition? That’s your bar for ‘general intelligence’? A computer program that spams you occasionally with mostly-right information and mostly-relevant responses to your queries?

Literally no company in the world right now trusts GPT to do anything, because its outputs are both unpredictable as to reality and also nondeterministic. You can ask it the same question ten times, and get ten different answers, all with some different degree of error. Nobody trusts “AI” to act on their behalf in ways that could cost them money or liability, because everyone knows this stuff is garbage.

Show me a company using GPT to determine their stock market moves, or to execute contracts for them, or even to set the thermostats in their office buildings. Nobody trusts this tech, because its outputs are basically fancy RNG, and there’s no sign that it can improve past this point. AGI my ass.