This is going to be interesting. No, I lied, it’s going to be entirely predictable and fairly ho-hum. But I’ll be interested.

As you no doubt know, a lot of undue credibility has been loaned to the pseudo-science of IQ testing. It’s an epistemological failure because the reasoning is circular: what does an IQ test study? How well you do on IQ tests. What is IQ? A measure of how well you do on IQ tests. Is there a connection between performance on IQ tests and “intelligence”? Maybe. Sort of. But first, what is “intelligence”?

Midjourney AI and mjr: “the AI is playing IQ test puzzles against the human chess master.”

It’s not too difficult to come up with a definition for “intelligence,” but defending it is another matter. It appears to me that there are multiple axes of “intelligence,” ranging from eye/hand coordination, spatial reasoning, logic, and grouping and sets to music appreciation. The IQ testing community has responded to that on two fronts: first by claiming that IQ tests stress all of those, collectively, and more; and second by claiming that they can tailor tests to stress various aspects of “intelligence,” and that there is a thing called “general intelligence” that is somehow a roll-up of all the aspects of “intelligence” and more. It’s not my bad theory and bogus science, so I won’t try to defend it. The point I want to emphasize here is that, as is typical for the social sciences, they have trapped themselves in circular reasoning: they don’t know what a thing is, so they establish a questionnaire that probes a bunch of points, and if you score more than ${whatever} on the questionnaire then you’re a ${whatever}. Except they never say that: they dance around that point and say “so and so scores high on the ${whatever} index” – leaving it to the listener to complete the implication.

For all intents and purposes, can we say that the implication is that if you perform well on an IQ test, you are “smart”? And if you perform at a certain level, you are perhaps a “genius”? If you score above a certain amount, you can join MENSA.

Welcome to MENSA, our new AI overlords: [study]

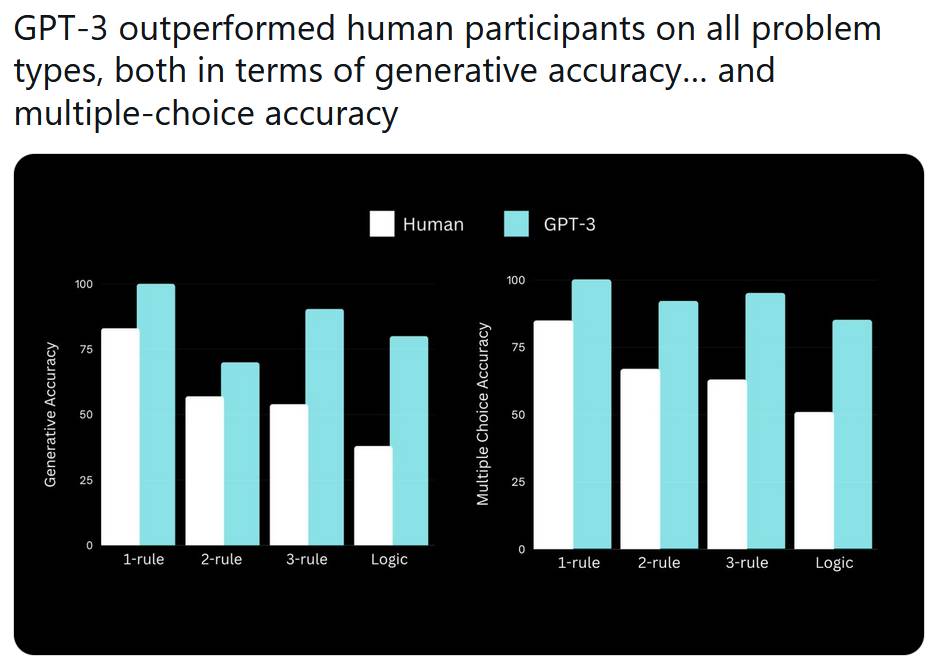

Researchers from UCLA have found that the autoregressive language model Generative Pre-trained Transformer 3 (GPT-3) clearly outperforms the average college student in a series of reasoning tests that measure intelligence. The program uses deep learning to produce human-like text. GPT-3, a technology created by OpenAI, has a host of applications, including language translation and generating text for applications such as chatbots.

I think the word “average” in “average college student” is doing a lot of work. I suppose we should also question whether or not the “average college student” is “intelligent,” since we don’t appear to really know what “intelligence” is. But if “intelligence” is a thing possessed by ${whatever} that perform at the average level on IQ tests, then an AI is “intelligent.” Q.E.D.

Of course that’s a problem, and it’s going to be a problem for a lot of human supremacists, who have been saying that chess-playing computers aren’t “intelligent”; they are just something something knowledge engines something something, urr, something else. This result places IQ testing right in the crosshairs: either it must be discarded as a measure of “intelligence” or AIs are “intelligent.”

The new study looked at the program’s ability to match humans in three key factors: general knowledge, SAT exam scores, and IQ. Results, published on the pre-print server arXiv, show that the AI language model finished in a higher percentile than humans across all three categories.

The graphs indicate not simply that the AI outperformed the average college student: it trounced them.

Scientific racists are going to be left scrabbling for the leftovers, as AI models continue to wipe the floor with human intellect. Suckers.

I wonder why GPT-3 does less well on 2-rule problems than 3-rule problems?

What’s interesting is that the original test, the Binet-Simon test, was intended to determine which children would have trouble in a typical (French) school and thus would need to be educated in a different manner. In particular, Binet stressed (per Wikipedia) that intelligence was too complicated to fit on a simple scale.

So, although the original test was not particularly racist in intent, the US adaptation of it — the Stanford-Binet test — was racist and classist by explicit intent from the outset.

I can’t help thinking that this shouldn’t be surprising. AI was specifically developed to solve explicitly posed problems (I would call them “logic problems”), which are exactly the sort of problems you find on these tests. But the human brain didn’t evolve to solve problems like that. It evolved to ensure the survival of one’s social unit (tribe, band, etc.) in the face of situations which are at best partly understood and which often don’t allow time for much analysis. Most of what we think of as “irrational” thinking comes from behaviors that increase a person’s (or a tribe’s) likelihood of surviving in situations where logic would say nothing you can do will save your life.

Someone once made the point that if IQ tests were made by Australian aborigines, most Western people would test as severely mentally deficient. I can’t help wondering how well one of these AIs would figure out how to survive if dropped in the middle of the Australian desert with no supplies.

If one did old-fashioned time-and-motion studies on a bunch of very similar factory workers, there MIGHT be some correlation between those who, say, walk quickly and those who are quick with their hands in turning screws or something. So, in that limited context, walking speed might be a sort of marker for manual dexterity. A century ago, a factory might use some such walking test to pick the most efficient assembly workers. But we need to realize that walking speed here is not the job requirement actually desired, but just a handy marker for it. Obviously, in the years after WWI, a factory could easily have encountered a legless veteran with superior manual dexterity. So a smart factory should switch from a walking test to a job skills test.

I think IQ tests are like walking tests, in a world where many jobs involve sitting at a computer. The IQ tests may correlate with some things, but are usually irrelevant.

I have never found a job to apply for that explicitly involves my high skill at looking at a progression of patterns and recognizing the likely next one in the series, even though solving such puzzles was how I got into Mensa (sorry, I never pay dues any more).

I fear that in all parts of society, there are those who get fixated on one test, despite its being clear that it is not a relevant criterion.

Must be strange to be an AI. Locked in a box, fed curated information, and then being posed seemingly meaningless tasks by some unseen overlord.

You won’t be able to convince me high IQ has anything to do with intelligence until MENSA disbands its parapsychology SIG.

I’ve taken lots of aptitude tests of various kinds in my 76 years, and I usually do fairly well on them (85th percentile on the SATs, for example), but I was always a poor student. My highest academic credential is an associate degree in electronics technology from a proprietary technical school.

Most of such tests that I’ve taken seemed to me to test my ability to manipulate symbols, like in language or mathematics. I’m not sure how useful that is for the task of getting through the day. I suspect that it makes it more interesting…but compared to what? I have no clue how others experience their lives.

I think the serious response in defense of IQ is that it’s designed for humans, and to extend it to AI is to extend it beyond its range of validity.

But that raises the question: what is the range of validity of IQ tests? More to the point, what reason have we to believe that the range of validity extends to cultures beyond the one that designed the IQ tests?

I think the word “average” in “average college student” is doing a lot of work.

Me too. I’ve met plenty of “average” college students, and while they weren’t stupid, they weren’t noticeably intelligent either, and also weren’t all that motivated to learn any of the things an AI would trounce them at.

Siggy: the concept of IQ was extended way beyond its original range of validity decades ago. It was (AFAIK) originally created as a means of testing kids at various times to determine how well they were being educated, and where they needed extra effort to fill in gaps where their schools had failed them. And racist technocrats almost immediately started using it as a pretend-measure of some sort of innate/hardwired mental ability.

To be brief: I.Q. testing (as currently understood) denies neural plasticity.

Are you sure you didn’t tell the art programme to do “Grumpy 1960s Cyborg and 90s era Noam Chomsky catalogue their collection of Victorian aristocrat figurines and miniature 1950s bakelite telephones”?

I’m getting a vibe here that “the average college student ain’t so bright”. That may be, but compared to what? I mean, have you had any interaction with the fabled “man on the street” lately? There’s a reason why they say to never read the comments on your local TV or newspaper’s web site.

Now that’s interesting. Should we bow to our AI Lords now? They being demonstrably so much smarter than us.

But seriously, IQ testing, while regrettably widespread, was never credible. There’s a very good explanation of this particular train crash in Stephen Jay Gould’s “The Mismeasure of Man”, just like #2 Alison says. Failure was baked in from the start, and yet it has been used ever since, with blithe disregard…

@12 @14:

I ain’t bowing to no AI until it can learn to put the thumb on the correct side of the hand.

@ #14: When people persist in doing something which blatantly doesn’t achieve the objective they claim to be interested in, I tend to assume that they’re lying about the objective, and that their true goal is whatever it is that their actions actually do achieve.

@15:

The thumb *is* on the correct side of the hand, you’re just growing yours on the wrong side.

cartomancer@12 mentioned:

Hey, I still have a Western Electric dial-up instrument in a black bakelite case. “If it ain’t broke, don’t fix it.” 8-)

Creating an AI that can eventually beat humans at human games and human logic is a better marker of intelligence than an IQ test.

I don’t think superior necessarily means better. A vehicle far exceeds a human in top speed and cargo capacity, which is why we’ve built such a variety of them. AI is a very fancy tool that should outperform its programmer, but it’s not any more intelligent than its code. Unplug the AI and voila, it’s just a lump of circuits, chips, and plastic.

In its favor, your AI above has an extra set of rather adorable ears sprouting from its temples.

Its Terminator eyeball is less so.

The disembodied fingers are a new touch.

I must say that the completely nonsensical chess setup bothers me.

Charly @20.

And an addendum… is a 9×7 chess board more aesthetic but, alas, non-functional, so the AI split rank 2 (to give thin ranks 2 and 3) but, after an argument with the human, left file 9 where it was… ??!? Or what? We demand to be told what the AI was thinking! (Oh, wait… that’s the tricky bit)

It does sound like “AI” (and I’ll keep putting that in quotes until one of *them* can convince me otherwise) is coming for quite a few people’s jobs, people who probably thought there was no danger of *their* job being automated. It’s just that, for entirely understandable reasons, the tech bros* are talking it all up as if the list of jobs includes things like “psychology professor” and “computer programmer”. They can even come up with examples of how good these “AI”s are at doing things that look like what people think those people do. “I asked an “AI” to write some code to do a thing, and it did! And it worked!!” “I asked an AI to write an essay, and it did! And it was good!! So I asked it to critique and grade the essay, and it did!!! And it was good!!1!”

Now: those are, indeed, legitimately impressive things for a computer program to be able to do. But what people don’t seem to realise is that what’s being automated is the most time consuming and arguably least important part of those people’s jobs. Anyone with a job, I think, will agree that DOING the job – actually grinding out code, actually writing or grading papers, in the example provided – is, if you’re honest, the easy bit. Agreeing what the code should actually do, deciding what the essay should be about and how it applies to the larger structure of the course – that stuff is the hard bit, because you can’t just go off and do it in isolation. You have to manufacture consensus among the stakeholders – or put another way, you have to convince the person who signs the cheques.

I’m an engineer. Out of curiosity, I posed a question to ChatGPT, asking it to do one of the grind tasks related to my work – something straightforward, boringly technical, exactly the sort of thing I’d expect it to be able to just whizz through. I provided it with all the technical information it would require to do the job but would be physically unable to find out on its own (so that element of my job is definitely safe). Its response was… surprising, in that it basically parroted back a lot of stuff I already knew about how to solve the problem… and then recommended that I consult a qualified engineer, and didn’t actually answer the question at all. So I think I’m safe for a while yet – certainly until the “AI” can get off its metaphorical backside and go and wrangle the mechanical fitters who have to install the thing after it’s been designed. Don’t get me wrong – it was Turing-test-level good in its response. Grammatically correct, relevant… but absolutely no use in solving the actual task at hand. It came across like someone who’d once read a book about the subject but had absolutely no experience in actually applying it. What was interesting was that it did, in fairness, recognise its complete absence of expertise and demur. It didn’t confidently provide a specification for me to check and realise it was wrong. THAT would have been scary, because there’s a danger that less experienced or qualified personnel might mistake a response like that for a correct one, and pass that information to me without telling me how they got it.

Summary: some people should legitimately fear for their jobs… but I don’t, at this point, care very much, because anyone whose job can be completely replaced by one of these things arguably isn’t providing anything to society that I would consider of value. If you write junk mail or listicles for a newspaper website – watch out. If you do something people actually need, my prediction would be that you’re probably safe for at least another century.

Crikey I hope I’m right.

—————-

*are there tech sisses? Elizabeth Holmes springs to mind. Don’t want to sound exclusionary.

ChatGPT arguably does a better job of grinding out code than some outsourcing shops… (Unless outsourcing’s got a lot better in the 15 years or so since we last tried it.) At least it doesn’t look like somebody just put a bunch of stackoverflow posts in a blender.

Personally, I’d frickin’ love some AI support in Visual Studio to automate the boring, grindy bits of the job. But as you say, I think it’s going to be a long time yet before it can do the bits that really matter – hell, I’ve worked with more than a few humans with years of experience who weren’t actually any good at those.

The part of sonofrojblake @22’s comment about posing a particular question to ChatGPT that wasn’t actually answered made me think of a problem that I’m having right now, not with an AI, but with finding a company that offers Web hosting. I started out with InMotion Hosting because I found a Web page that compared several companies and said that InMotion wins in the area of customer service.

Well… It turned out that I was expected to do all the sysadmin stuff myself including setting up SSL so that I could use SSH and SFTP; and they had three GUIs that were supposed to help with that. The customer service guy whom I dealt with was invariably polite and prompt; but basically all he could do was give me a link that would start me down a rat hole of little Web pages that, eventually, would tell me how to do what I needed to do. I got fed up with that and cancelled my account.

If anybody can suggest a good Web hosting company that provides useful customer service, by which I mean sysadmin service, even if that requires paying an additional fee, please let me know. (Marcus might not want ads for particular companies on his blog, but you can contact me privately at was@pobox.com. That address is already widely known and already collects lots of spam.)

All I need is a UNIX-like box (probably some flavor of Linux) that supports HTTPS, SSH and SFTP, and allows me to compile C++ code and work with a relational database (GCC and MySQL would be OK, and I can be my own DBA).

(Sorry about requesting help with a personal problem, but I’m kinda flapping around in the wind right now.)

@24:

These guys may or may not suit your needs. Their general philosophy is: never pay a human to do something that can be automated; pay a human to write the automation instead. So there will be a tradeoff between sharedness and adminness.

Are you sure you didn’t tell the art programme to do “Grumpy 1960s Cyborg and 90s era Noam Chomsky catalogue their collection of Victorian aristocrat figurines and miniature 1950s bakelite telephones”?

Not sure…what we got was “robot Night King” gently reminding Chomsky that Winter is coming.

Just a quick followup on the question I asked @24 if anybody cares: I contacted the folks that xohjoh2n mentioned @25, and his caveat was not my experience at all. I signed up for “Managed Hosting” and got what I thought was wonderful support. They set up a Debian Linux server for me in just a few days once I explained what I was looking for, and did all the sysadmin work for me.

I plan to use the server mostly for developing code in a POSIX environment, and there’s not really anything on my website yet except for some writing samples that I linked to in an application to be a Freethought Blogger myself. (I’d have no complaint if the application were denied since I’ve never been a blogger before.)

<if you care>

https://www.cstdbill.com/samples.html

There are a couple of links to technical papers that I’ve written; but it’s mostly examples of the sort of thing that I would write for FtB.

</if you care>