US president Donald Trump has gone to the edge of the cliff defending his right to privacy, although he would never phrase it that way. For Trump, it’s all couched in the language of “executive privilege” – i.e.: the right of the rich and powerful to do whatever they want (especially if it means getting richer and more powerful).

Meanwhile, the forces of global police/surveillance states are willing to subvert anything and everything in order to make sure that nobody but the rich and powerful have privacy. Have you ever noticed how the media make a big deal out of it when some Hollywood actor’s camera roll gets leaked to the internets, but utterly fail to give the ghost of a shit when entire communications systems are backdoor’d and compromised? They fail to give a shit, that is, until one of them gets arrested by some country’s secret police. Unfortunately, the media do not solely support the rights of citizens; they often try to straddle a non-existent ambiguous line between a right to privacy and the needs of the surveillance state. As I have mentioned before, that is stupid: the surveillance state has only ever been able to use the information it collects retroactively – i.e.: to bust people after they have committed a crime or done some lèse-majesté that needs to be punished.

I am not supporting crime or terrorism – I do not approve of (major) crimes whether they are committed by the US president or by the guy down the street. I do not approve of terrorism whether it is committed by ISIS, or Israel, or the CIA. The problem is that those crimes have to be committed before the malefactors can be (retroactively) caught and prevented from doing it again. At present, there is only one effective way of pro-actively preventing a crime – sort of – and that’s the FBI’s trick of finding suckers who are prone to commit a serious crime, then leading them into temptation and busting them when they cross the line from “wanting” into “acting.” That is an anti-crime/anti-terror tactic that works, but it only looks good on the books: it catches malefactors, but only fairly stupid ones. So, if the premise is that all this infrastructure exists to prevent crime and terrorism, then it’s not very good at it, and the cost to everyone else’s civil liberties is way too high. The way the US has dealt with that cost, naturally, is by not targeting the rich and powerful – who are the biggest criminals and conspirators of the lot – but rather going after the low end of the competence and crime spectrum. The whole thing, in other words, is a scam. There has been a great deal of justifiable concern lately that the police exist primarily to protect the interests of wealth, power, and corporatism – well, the same applies to the intelligence apparatus. The FBI is a large customer of the intelligence community [insert some joke about the FBI lacking their own intelligence] and it has generally existed to serve the interests of the white supremacist state.

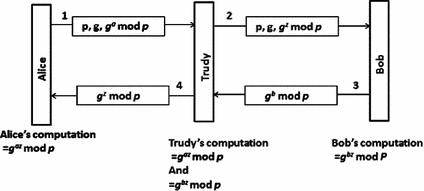

Included for illustration, because all postings need pictures. You get nerd points if you immediately recognized it.

In the past, I worked on a few projects for the NSA (1997-2001, on and off), including serving as an expert for a “Senior Industry Review Group” – we were responsible for listening to, and making brilliant recommendations regarding, the intelligence community’s ‘reinventing government’ program for redesigning an interconnect between the 20-odd agencies of the intelligence community. My recommendation was: “don’t do it.” And I think that, in the end, that’s what happened. But during the course of that effort, I wound up taking a few meetings and off-the-record back porch lunches with some of the movers and shakers at NSA. One delightful fellow I spent some time with was Brian Snow, the commandant of the US cryptologic academy – the part of the agency that teaches code-breaking and system penetration. It oughtn’t surprise anyone that system penetration and subversion have been codified into a science of sorts, including a (fairly accurate, from what I can tell) set of procedures for doing various things, which embody value-weighting for techniques. I.e.: the new cryptanalyst is trained to look at encryption option negotiation first when attacking an encryption protocol, because programmers often screw that up. Anyhow, I had some interesting conversations on the back porch of Snow’s understated but lovely home in Columbia, MD, about system subversion and traffic analysis. One thing he said, which completely changed my understanding of security, was: “we attack the system at every level where it appears possible, because if we penetrate it we can’t be locked out if we have multiple access paths.” That observation has colored my perception of subversion ever since, which is why my view of the state of security is so dark. And history since then has borne out my perception.
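Why option negotiation is the first place to look is easy to see in miniature. The sketch below is hypothetical throughout: the suite names, the `server_choose`/`strip_strong`/`transcript_mac` helpers and the shared key are invented for illustration and do not come from any real protocol. An attacker in the middle deletes the strong options from the client’s offer; the server, seeing only the weak one, happily picks it. A common defense (TLS does something similar with its Finished messages) is for both ends to authenticate a transcript of the negotiation as each of them saw it.

```python
import hashlib
import hmac

# Hypothetical cipher-suite names, strongest first; not any real protocol's list.
PREFERENCE = ["strong-aead", "medium-cbc", "weak-export"]


def server_choose(offered):
    """Server picks the first suite in its own preference order that the client offered."""
    for suite in PREFERENCE:
        if suite in offered:
            return suite
    raise ValueError("no common cipher suite")


def strip_strong(offered):
    """An active attacker in the middle deletes everything except the weakest option."""
    return [suite for suite in offered if suite == "weak-export"]


def transcript_mac(key, offered, chosen):
    """MAC over the negotiation as one end saw it, computed once a shared key exists."""
    data = ("|".join(offered) + "->" + chosen).encode()
    return hmac.new(key, data, hashlib.sha256).digest()


if __name__ == "__main__":
    client_offer = ["strong-aead", "medium-cbc", "weak-export"]
    seen_by_server = strip_strong(client_offer)  # tampered with in transit
    chosen = server_choose(seen_by_server)
    print("negotiated:", chosen)  # the weak suite wins

    key = b"hypothetical shared session key"
    client_view = transcript_mac(key, client_offer, chosen)
    server_view = transcript_mac(key, seen_by_server, chosen)
    print("downgrade detected:", not hmac.compare_digest(client_view, server_view))
```

The transcript check only helps if the key behind the MAC is not itself something the weak option lets the attacker recover; several real-world downgrade attacks worked precisely because it was.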

“Privacy” is hard to define, so I’m going to wave my hands and avoid that quagmire, but let’s just say it’s enough freedom from surveillance to be able to communicate with others in a way that governments cannot observe. Privacy of that sort is a necessity for a large class of crimes, ranging from insider trading to leaking secrets, arranging drug deals, or paying off porn stars who want to describe your penis a few months before an election. Privacy means being able to do certain things secretly, whereas privilege means being able to do those things openly without suffering consequences. Trump, when his privacy to commit crimes was threatened, suborned the entire US justice system in order to privilege his actions. It was an interesting move and it will probably work – but it is a certainty that Trump’s approach has left a paper trail a mile wide and an inch deep. Contrast that with privacy, which is basically all about not leaving a paper trail.

Consider this a case study: Vice [vice] describes how French intelligence compromised an encrypted chat network. When you think about using Signal, Facebook messenger, Zoom, or PGP you can and should assume that similar efforts have been put into compromising them as well. The size of the target community will determine its attractiveness to the system-breakers, who will orient toward getting the maximum “network effect” from their compromises:

> Police monitored a hundred million encrypted messages sent through Encrochat, a network used by career criminals to discuss drug deals, murders, and extortion plots.

Those hundred million messages were not all legitimately captured traces of criminal activity – the actual criminal activity would have made up a very small percentage. All the other users’ privacy rights were “collateral damage.” Judging from how the intelligence community operates, they didn’t even worry about that.

> Something wasn’t right. Starting earlier this year, police kept arresting associates of Mark, a UK-based alleged drug dealer. Mark took the security of his operation seriously, with the gang using code names to discuss business on custom, encrypted phones made by a company called Encrochat. For legal reasons, Motherboard is referring to Mark using a pseudonym.

> Because the messages were encrypted on the devices themselves, police couldn’t tap the group’s phones or intercept messages as authorities normally would. On Encrochat, criminals spoke openly and negotiated their deals in granular detail, with price lists, names of customers, and explicit references to the large quantities of drugs they sold, according to documents obtained by Motherboard from sources in and around the criminal world.

> Maybe it was a coincidence, but in the same time frame, police across the UK and Europe busted a wide range of criminals. In mid-June, authorities picked up an alleged member of another drug gang. A few days later, law enforcement seized millions of dollars worth of illegal drugs in Amsterdam. It was as if the police were detaining people from completely unrelated gangs simultaneously.

Silly criminals. Only presidential attorneys get to openly discuss crimes like that without consequence. Basically, what happened was that the French intelligence agency waited until there was a critical mass of users then scooped up the data and handed it all to the police, who then started picking people up in the middle of crimes.

Back around 2003, I was having lunch with Dan Geer (another computer security guy) and discussing start-ups. He suggested that one could probably get seed capital to build an “unlimited file storage for free” and encrypted messaging app, with the plan all along of making it available to the intelligence community – for a price. Hahaha, that’s funny. Except that, at the time, Dan was part of IN-Q-TEL, the CIA’s venture capital fund, so he was literally assessing and funding start-ups that he thought the intelligence community would be interested in. I know he was dropping me a huge hint, but I played dumb because I’m comfortable being dumb. But if I were interested in doing anything naughty with a computer, I’d think twice, or maybe three times.

> Unbeknownst to Mark, or the tens of thousands of other alleged Encrochat users, their messages weren’t really secure. French authorities had penetrated the Encrochat network, leveraged that access to install a technical tool in what appears to be a mass hacking operation, and had been quietly reading the users’ communications for months. Investigators then shared those messages with agencies around Europe.

Terminology: “trusted computing” is computing that you trust. “Trustworthy computing” is computing where your trust is not misplaced. There is remarkably little trustworthy computing.

> Encrochat’s phones are essentially modified Android devices, with some models using the “BQ Aquaris X2,” an Android handset released in 2018 by a Spanish electronics company, according to the leaked documents. Encrochat took the base unit, installed its own encrypted messaging programs which route messages through the firm’s own servers, and even physically removed the GPS, camera, and microphone functionality from the phone. Encrochat’s phones also had a feature that would quickly wipe the device if the user entered a PIN, and ran two operating systems side-by-side. If a user wanted the device to appear innocuous, they booted into normal Android. If they wanted to return to their sensitive chats, they switched over to the Encrochat system. The company sold the phones on a subscription based model, costing thousands of dollars a year per device.

For one thing, using a system like that, where messages are routed through a server cluster, leaves a huge fingerprint; all the spooks have to do is capture traffic to and from those servers, then go after the keys for an offline attack on the data. As I said before, I am not a cryptographer, but I know enough to have my concerns whenever I see an end-to-end encryption system that is easy to set up and use. The fact is that key exchange is a very difficult problem, and if you make it easy to enroll in a system, you have probably skimped on it somewhere.
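To make the fingerprint point concrete, here is a minimal sketch of traffic analysis that never touches the encryption. It assumes a passive tap that produces flow records as `(timestamp, src_ip, dst_ip, byte_count)` tuples; the relay addresses (taken from the RFC 5737 documentation ranges), the two-second window and both function names are hypothetical. Knowing which addresses belong to the service is enough to list its users, and lining up uploads with downloads gives a first guess at who is talking to whom.

```python
from collections import defaultdict

# Hypothetical relay addresses (RFC 5737 documentation range) standing in for a
# messaging service's known server cluster.
RELAY_SERVERS = {"203.0.113.10", "203.0.113.11"}


def users_of_service(flows, relays=RELAY_SERVERS):
    """Map each client address that exchanged traffic with a relay to the times it did so.

    `flows` is an iterable of (timestamp, src_ip, dst_ip, byte_count) tuples: metadata
    a passive tap collects without touching the encryption at all.
    """
    seen = defaultdict(list)
    for ts, src, dst, _size in flows:
        if dst in relays:
            seen[src].append(ts)
        elif src in relays:
            seen[dst].append(ts)
    return dict(seen)


def likely_contacts(flows, relays=RELAY_SERVERS, window=2):
    """Guess who talks to whom: an upload to a relay followed shortly by a download."""
    uploads = [(ts, src) for ts, src, dst, _ in flows if dst in relays]
    downloads = [(ts, dst) for ts, src, dst, _ in flows if src in relays]
    pairs = set()
    for t_up, sender in uploads:
        for t_down, receiver in downloads:
            if 0 <= t_down - t_up <= window and sender != receiver:
                pairs.add((sender, receiver))
    return pairs


if __name__ == "__main__":
    flows = [
        (1, "198.51.100.7", "203.0.113.10", 512),
        (2, "203.0.113.11", "198.51.100.9", 480),
        (3, "198.51.100.7", "192.0.2.55", 1400),  # unrelated traffic, ignored
        (4, "198.51.100.7", "203.0.113.11", 520),
    ]
    print(users_of_service(flows))  # {'198.51.100.7': [1, 4], '198.51.100.9': [2]}
    print(likely_contacts(flows))   # {('198.51.100.7', '198.51.100.9')}
```

Real traffic is far noisier than four records, but the shape of the attack is the same: a central server cluster gives the watcher one place to look.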

Back in the time of the Clipper Chip Wars, AT&T was about to release a thing called the “TSD-3600” – a “bump in the wire” codec/decodec and encryptor. NSA and FBI were pitching literal shit-fits because the first version used a very clever point-to-point key exchange that was very hard to “man in the middle” (unless you compromised the key exchange in software) – some people think that the whole clipper chip debacle was triggered by FBI’s freakout at the idea of an inexpensive, easy, and reliable system that provided perfect forward secrecy. Terminology: “perfect forward secrecy” is the property of a system that resists offline attack by generating per-session keys that are never retained anywhere; an attacker who records the traffic has no choice but to go after the encryption envelope, unless they have subverted the end-point system and can just read the keyboard. In the case of the TSD-3600, since it was a fairly simple device, there was no place to put storage to record audio of the calls. The NSA’s response was simple and direct: they strong-armed AT&T into pulling the TSD-3600 from the market and replacing it with a version containing the “lawful government access” clipper chip (which, by design, carried a copy of the session key in the data stream, encrypted under a chip key held in government escrow, so the traffic could be swept up and handed to NSA for decryption). What sank the clipper chip? Take a guess; the answer is below the bar so you can see how you did.
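A minimal sketch of what perfect forward secrecy buys you, under loudly toy assumptions: the hash-based XOR “cipher”, the 2**127 - 1 modulus with generator 3, and every function name here are illustrative inventions, not how the TSD-3600 or any real product worked. In the first case every call is encrypted under one long-term key, so a recording made today decrypts the day that key is seized. In the second, each call derives its key from fresh Diffie-Hellman secrets that are thrown away afterwards, so the seized long-term key (which in a real system would only be used to authenticate the exchange) does the attacker no good.

```python
import hashlib
import secrets

# Toy group: the Mersenne prime 2**127 - 1 with generator 3. Fine for showing the
# algebra, far too weak for real use.
P = 2**127 - 1
G = 3


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || byte offset). Encrypts and decrypts."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(d ^ k for d, k in zip(data[offset:offset + 32], block))
    return bytes(out)


def static_session(long_term_key: bytes, message: bytes) -> bytes:
    """Every session reuses one long-term key; this is what a wiretap records."""
    return keystream_xor(long_term_key, message)


def ephemeral_session(message: bytes):
    """Fresh Diffie-Hellman secrets per session; returns only what crosses the wire."""
    a = secrets.randbelow(P - 3) + 2  # caller's one-time secret
    b = secrets.randbelow(P - 3) + 2  # callee's one-time secret
    A, B = pow(G, a, P), pow(G, b, P)  # public values, sent in the clear
    shared = pow(B, a, P)  # the callee computes pow(A, b, P), the same number
    key = hashlib.sha256(shared.to_bytes(16, "big")).digest()
    # a, b, shared and key are never stored; they are dropped when this returns.
    # (Python cannot guarantee the memory is wiped; real devices must zeroize it.)
    return A, B, keystream_xor(key, message)


if __name__ == "__main__":
    long_term = secrets.token_bytes(32)
    msg = b"meet at the usual place"
    recorded_static = static_session(long_term, msg)
    recorded_ephemeral = ephemeral_session(msg)

    # Months later, the long-term key is seized from an endpoint.
    print(keystream_xor(long_term, recorded_static))  # the recorded call decrypts
    print(keystream_xor(long_term, recorded_ephemeral[2]) == msg)  # the stolen key is useless here
```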

What the TSD did was clever: it exchanged the session key, then displayed a hash of the session key on a little LCD panel. Assuming the Diffie-Hellman key exchange works correctly, a third party cannot insert itself into the exchange without being noticed: a man in the middle has to run a separate exchange with each end, which leaves each end holding a different session key, so the two hashes would be different. In theory, the first thing you were supposed to do when you used the phone was push the “go secure” button, then read the LCD to the person on the other end. If it didn’t match, your call was being intercepted. Clever. NSA hated it. FBI freaked out.
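The TSD’s trick can be sketched in a few lines, assuming a toy Diffie-Hellman group (the prime 2**127 - 1 with generator 3) and a SHA-256-based eight-hex-digit code; the group, the code length and the function names are illustrative choices, not the TSD-3600’s actual design. Two honest phones end up with the same key and therefore show the same code. A man in the middle (“Mallory”) has to complete one exchange with each phone, so each phone ends up with a different key and, almost certainly, a different code.

```python
import hashlib
import secrets

# Toy group: the Mersenne prime 2**127 - 1 with generator 3. Real systems use
# vetted groups or elliptic curves; this only shows the algebra.
P = 2**127 - 1
G = 3


def keypair():
    """A fresh secret exponent and the public value sent down the line."""
    secret = secrets.randbelow(P - 3) + 2
    return secret, pow(G, secret, P)


def session_key(my_secret, their_public):
    """Diffie-Hellman: both honest ends arrive at G**(a*b) mod P."""
    return pow(their_public, my_secret, P)


def display_code(key, digits=8):
    """Short hash of the session key, like the one shown on the LCD and read aloud."""
    return hashlib.sha256(key.to_bytes(16, "big")).hexdigest()[:digits]


if __name__ == "__main__":
    a, A = keypair()  # caller's phone
    b, B = keypair()  # callee's phone
    print(display_code(session_key(a, B)) == display_code(session_key(b, A)))  # True

    # Mallory sits in the middle and runs a separate exchange with each end.
    m, M = keypair()
    callers_code = display_code(session_key(a, M))  # caller thinks M came from the callee
    callees_code = display_code(session_key(b, M))  # callee thinks M came from the caller
    print(callers_code, callees_code)  # almost certainly different, so reading them aloud exposes her
```

One caveat: a short code is only safe if the attacker cannot keep choosing her values until the two codes happen to collide. ZRTP (RFC 6189), which uses the same read-it-aloud idea under the name “short authentication string,” guards against that by having a party commit to a hash of its public value before the values are revealed.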

The point of all of this is that the FBI has always been pushing for NSA to subvert systems, and that is, after all, NSA’s job. This dynamic plays itself out in other nations, as well – you should assume that every nation tries to spy on “its” citizens, which is a profoundly anti-democratic principle, but that’s how it is. The example of how the French compromised Encrochat is another data-point in the history of secure communications: “all your base are belong to us.”

What sank the clipper chip? FBI was furious that they’d have to ask the NSA to decrypt intercepts. NSA refused to give FBI access to the clipper chip keys because they thought FBI were blockheads who couldn’t keep the keys safe. The final nail in the coffin was when (as I mentioned earlier) Ross Anderson and his team at Cambridge began gearing up to sand the protective layer off one of the clipper chips (an MYK-78T by Mykotronx, an NSA-owned and operated fab plant). That was a threat because the hardware implements an encryption algorithm that was a version of the military’s TYPE-1 encryption, which is considered to be Very Special Stuff, and the NSA was terrified that the algorithm would be available for public inspection, since it would dramatically improve the quality of civilian encryption. Apparently the NSA thinks their crypto is The Shit. It’s probably pretty cool, to be sure – apparently it functions using a variable-length key, somehow, which means that you can turn the cipher’s level of difficulty up on demand, something that cannot be done with Feistel network ciphers, which means that TYPE-1 is not a Feistel network, it’s something else. Something interesting and yummy.

You can still sometimes get AT&T TSDs on eBay, but they won’t work with modern phones, naturally.

Your definition of the word “privacy” sounds a lot like my definition of “peace” – the usual petty struggles without the immediate threat of annihilation.

Clearly criminals don’t read history. It’s an Enigma that they don’t.

What the heck is a “decodec”? “Codec” is shortened from coder-decoder, so does “codec/decodec” mean “coder-decoder/decoder-dedecoder”?

My brain is fizzing right now. I’ve never really been tech-savvy, but it seems the amount of stuff I don’t know is way more staggering than I could have imagined. Apparently, I’ve been living in a deep, dark hole somewhere. As far as personal privacy is concerned, I knew that was gone when surveillance cameras began to appear in public. Big Brother is surely watching, and the thought police are on their way. And most people don’t seem to care.

It’s almost certainly made up, but I like the story of a newspaper which published, in its “letters to the editor” page, a pseudonymous screed praising the rise in casual surveillance and actually using the phrase “if you have nothing to hide, you have nothing to fear”, and made just the following editorial comment below it:

“Disgusted of Tunbridge Wells” sealed the above in an envelope.