Computer systems have grown so complex and interconnected that it’s very difficult to identify a failure; back when I started things were simpler and were not as virtualized as they are, now.

Virtualizing everything increases complexity and complexity, per Charles Perrow [wc] becomes non-linear – eventually we have failures that we cannot understand or control because they are subtle interactions of dependent parts. Consider something like a modern internet connection: it appears to be a wired connection because that’s what the most affordable routers on the market prefer to work with. But, actually, the wires are just an interface/signalling mechanism into a “cloud” of virtual wires. I used to have a T-1 line into my house (the only broadband option at the time in Verizon Country) and it ‘came’ from Verizon, but I knew it was actually Covad provisioning the service. The wires were actually copper carrying data back to a box, somewhere, which pretended to have copper coming out the other side but which actually packetized/repacketized the data onto fiber. Of course the fiber was also virtual – layers of software telling higher-level abstractions “there is a fiberoptic cable here” when actually there were more layers of virtualization creating virtual fiberoptic cables, etc.

Here’s what’s interesting: when layered virtual systems go down, because they are simulating a single thing, the way you diagnose a problem is by burrowing down into layers of virtualization until you find something that affects everything within the same scope. So, if my home line is down, and everyone in my area who’s using the same virtual layer is down, then the problem is at or below that virtual layer.

Here’s what’s interesting: when layered virtual systems go down, because they are simulating a single thing, the way you diagnose a problem is by burrowing down into layers of virtualization until you find something that affects everything within the same scope. So, if my home line is down, and everyone in my area who’s using the same virtual layer is down, then the problem is at or below that virtual layer.

Software works the same way: in the early days, you ran your program on a computer. Then, there were virtual machines starting in the 1970s, where each “process” on a multitasking system thought that it was running on the bare metal, but it was running in a software simulation of its own address space and its own bare metal. Then there were virtual machines (starting in the 90s) where you had a computer running a bunch of processes each of which thought it was a bare metal computer, and which ran its own operating system which, in turn, runs its own processes. Now, with cloud networks and software-defined networks, you can have a system that’s running a bunch of processes that think they are a cluster of separate computers connected over a network. It’s a very powerful capability since you can have all the computers and networks you like – so long as nobody pulls the power cable of the one computer on which all the simulated computers and networks are running.

I’ve seen some pretty crazy things happen, in the virtual world. One time, a systems administrator accidentally re-initialized the wrong partition because he thought he was running in a virtual machine and was initializing the virtual machine’s storage – in fact, he re-initialized the storage in the host operating system in which all the virtual machines were running. Normally, that would not be a big deal, except that the cluster of virtual machines included some important production servers as well as test/development systems for software engineers. That’s why Charles Perrow and graybeard computer programmers say “do not mix development and production systems.” That piece of advice is ignored constantly by each successive wave of system engineers. They learn. If they are lucky, they learn from watching horrible things happen to their peers.

Unfortunately, the technological fixes have frequently only enabled those who run the commercial airlines, the general aviation community, and the military to run greater risks in search of increased performance. – Charles Perrow

In terms of complexity in computing, this equates to “virtualization and software-defined networks make it easier to build things you cannot comprehend.”

When you read about cloud personal information breaches, the breaches are almost always, at the core, a result of mis-managed complexity: someone built something that they could not understand. As Ken Thompson once said:

Debugging code is harder that writing it. So, if you are writing code that is close to the edge of your ability, you are writing code that you cannot fix.

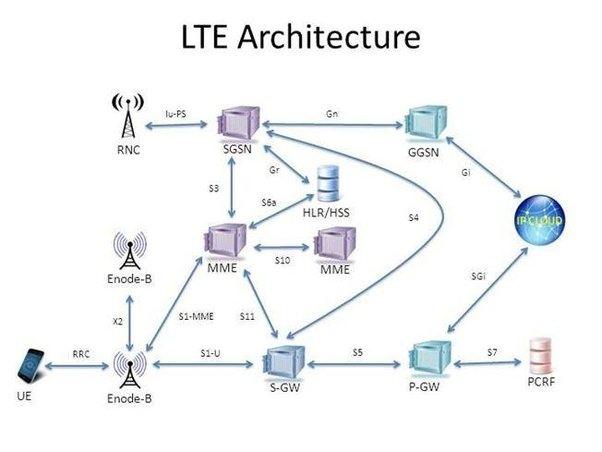

Monday the FBI came to install a new wire-tap on my house, and in the process of doing that, they cycled the power. When everything came back up, my internet didn’t work properly any more – between the lot of them, my home router, can-tenna, desktop system, and Verizon’s LTE cloud somehow managed to decree that IPv6 traffic works, but IPv4 traffic does not. So I called Verizon’s tech support and explained the whole thing to them, and all they can do is replace my router. I’m 99.99% sure the problem is something in the virtualization layers that make LTE work (perhaps my IPv6 address is OK but my IPv4 address has been dual-allocated) but the system is so complex that first-tier support doesn’t understand much beyond “cycle power” and if that doesn’t work “replace the thingies.”

Back in 1987 I joked that the future of computing would be that we would have a special mode in our car where the steering wheel could adopt the channel-change function (very convenient!) and everyone would be weaving all over the road between lanes as they channel-hopped. I realize now that I was an optimist, then.

When you hear transhumanists talking about “uploading” to a computer, point out to them that humans suck at system administration and, as complexity continues to increase there will be bigger, more complex, outages. Imagine your soul is uploaded to some future AWS-like cloud service and some junior systems administrator re-initializes you and a whole planet-ful of avatars, because they were too lazy to enforce a separation between production and development systems. Look at the personal information leaks that happen constantly: they will leak your soul, and hackers will be able to spin up instances of you, in dark cloud servers, to torture endlessly for their amusement.

The start-up we were trying to kick off, which did not get funded, was a simplifying management layer for “internet of things” devices. As a side-effect of its operation it solved a large number of provisioning and security problems (by changing the direction of communication from cloud->home to home->cloud) but the hardest part of doing it would have been getting device manufacturers to specify administrative operations. We had meetings with developers and their attitude was “that would constrain us.” They actually said (translating from developer into management-speak) “taking the time to understand what we are doing would constrain us.” Halfway through our fund-raising process, I was starting to get very depressed indeed.

As part of my column at Techtarget/SearchSecurity, I hunt down and interview interesting people in computing. The last one I did was Tom Van Vleck, who was a systems programmer on MIT’s MULTICS system. Tom has great experience and deep thoughts on system administration, reliability, and complexity (security – my field – is just a side-effect of getting those wrong) it was a great time and a delight to interview him. One of the things he said that I loved was that there was a specification for all the stuff that an operator might normally do to the system, and for each of those things, there was a program that did it, and a manual page for that program. Developers did not simply decide “I am going to back up the database now” and throw a bunch of commands at an interpreter – they would run the “back up the database” tool. That tool was responsible for knowing all the constraints under which it was expected to operate, and would only allow the operator to do things that they were supposed to be able to do. In other words: the system was fully specified. Nowadays when you talk to a programmer the discussion goes like:

Programmer: “I need administrative access to all the systems.”

Security Person: “Really? Why? To do what?”

Programmer: “All the things.”

Security Person: “What things?”

Programmer: “Whatever things I think up when I think them up.”

I have email to handle, and some documents to send to a client. So I thought “well I will just fire up my laptop, sync my email over from my desktop, queue up the messages, and hook my laptop to the personal hotspot in my iPhone and send them.” Except Windows’ file-browsing and sharing capability does not appear to work under IPv6 and since IPv4 is not functioning for some reason, I guess I’m going to have to move things on a USB drive. The miracle of virtual layering and cloud computing has reduced me to “sneakernet” in 2018. And I’m not unhappy because at least I know where my data is going.

New FBI wiretap: The guy said he was from the power company and was installing a new meter. How do I know?

Does that mean it’s a smart meter? Over here if you need a new meter the power company have to install a smart one. I would very much like to move my meter and fuse box which are in my understairs cupboard at the low end; in order to read the meter or reset a fuse you have to lie on the floor, and of course you can’t store things in that space. However if I move my meter they will insist on replacing it with a smart meter which I don’t want. I don’t understand the ins and outs of it, but my understanding is that the meters specified are not a good choice.

Nothing to do with the main topic, but I don’t have anything intelligent to say on that, beyond it sounds all too human.

jazzlet@#1:

It’s probably a “smart” meter. Which means it has a communications stack of its own and some encryption and a key, etc. so it can be read from a distance.

In other words it is a bug; just not a very good one.

Don’t you mean “at or above that virtual layer”? Or are you envisioning the hierarchy upside-down from the way I am?

DonDueed@#3:

Everyone at or above the affected virtual layer has the problem, the problem/solution is in the next layer or layers down. I’m assuming “reality” is at the bottom of the stack.

When more and more ISPs start providing virtual IPv4 clouds NAT’d atop IPv6 this problem will get much worse. I wish I had patented NAT in firewalls when I could have (there was prior art but I could have written the patent around it if I knew then what I know now…)

Did all this virtual layering serve a purpose? Was it necessary or was it just a matter of convenience / expedience / laziness (delete as appropriate) to have a system run inside another when they could equally as well have set it up to run on its own?

I’m sure there are plenty of good reasons to do something like this. You won’t be able to put in a new set of phonelines to set up a new, non-virtual version of something without having to get rid of its predecessor. But the way you’ve described it it sounds like people are wrapping all the new things up inside progressively older systems simply because each layer is (just about) able to function with the previous iteration of legacy hard- or software.

On the one hand it’s a natural technological progression. On the other hand it seems like you’re forcing yourself to hold on to the old technology because things will fail without it. It’s as if modern industry could suddenly fail because someone threw out the last stone axe needed to keep it going.

—

Maybe systems engineers can’t think about this without wondering if they themselves are running on “bare reality” or just in another meatlayer. The resulting existential crisis and nervous breakdown might be a career-ender.

—

Don’t worry, It Will Be Fine.

—

Re: Smartmeters

I’ve been reading about those things in my engineering monthly for years. Mostly readers’ letters from (presumably) professional engineers who can’t fathom what the point of the damned things is, why on earth anyone would want one and how much more flawed the concept (or IoT in general) would have to be to convince the powers that be not to install them.

I am sort of looking forward to the first major smartmeter-induced catastrophies, though. Then again, maybe not, since the damage, if any, will hit the customers, not the people selling the electricity. The bit that remembers how much money you owe is probably protected by layers of redundancy.

Maybe we should burn everything to the ground and rebuild it from scratch. We just need to to find that one sys-eng who didn’t lose their mind contemplating their profession so we can put them in charge of it all. I’m not that engineer, thank goodness.

Ranum’s Basilisk. No, Basilisks. Or Hydra. Basilisk-Hydra-hybrid. BasHydralisk?

komarov@#5:

Did all this virtual layering serve a purpose? Was it necessary or was it just a matter of convenience / expedience / laziness (delete as appropriate) to have a system run inside another when they could equally as well have set it up to run on its own?

It’s a consequence of backwards compatibility. See, what happens is that certain things become a “standard” interface between system components. For example, the signalling protocol on a T-1 line. Now, you have oodles of routers with line-drivers that can handle that, so when you build your new wizbang network layer, if you need to interface with the old routers you just make your new thing look like an old thing and plug it together and it just works. There’s sometimes a cost for translation/de-translation but moore’s law generally takes care of that.

Back in the 90s I worked for a company that had what appeared to be a large cloud of T-1 connections (SONET) to various remote offices. Of course there were really no T-1 lines at all, it was all simulated T-1 lines which were an abstraction that was presented on top of multiplexed multi-mode fiber. So, the actual traffic was packet switched at several levels in the ‘stack’ of its reality.

That’s how you you get completely F’d architectures like: a company has virtual T-1 lines connecting all their facilities and runs Voice over IP atop those. So it’s a streaming protocol being broken into packets which are packet-switched virtual connections over the T-1 line. But the T-1 line is an abstraction, which is built atop packet-switched virtual T-1 lines running on multimode multi-connected fiber. The conversation thinks it’s a “phone line” but it’s actually being packetized and de-packetized at multiple levels and dog knows what’s real and what’s not. Actually dogs just take one look at a thing like that and go sleep in a shady spot.

It should all be burned to the ground every 15 years, and all the interfaces should be re-designed and re-certified and standardized based on what was learned before. But instead The Demon of Backwards Compatibility actually controls everything from his virtual operations center in the darkest pit of hell.

A friend of mine commented in an email that I should have mentioned another fun virtualization screw-up:

Two other funny virtualization things I saw:

1) An organization had a primary and secondary DNS server; they were both running on virtual machines on the same physical CPU, which eventually blew up.

2) An organization that was heavily virtualized had a hacker get in to the host system running all their virtual machines. The hacker simply paused the running production systems, then re-started them after a checkpoint, but copied away the checkpoint images of the running system, including all the encryption keys and credentials for the customer database(s). Best of all, the hacker copied the stolen system images to an AWS bucket owned by the victim, then completed the exfiltration in a liesurely manner via a VPN link to a compromised machine in Malaysia.

Owlmirror@#6:

Ranum’s Basilisk. No, Basilisks. Or Hydra. Basilisk-Hydra-hybrid. BasHydralisk?

Can we work very grumpy badgers in there, somehow?

I assume you’re referring to Roko’s basilisk… That’s a scenario Iain Banks somewhat explored in The Hydrogen Sonata (I think it was?) – one of his last books. Some religious cult had decided that since god didn’t appear to exist, it was incumbent on them to upload people who had done wrong to a hell of parallel eternal torture.

It’s interesting to me that the idea of parallelizing hell and putting people into virtual hells seems outre and bizzare but really it’s not any weirder than catholic theology.

komarov@#5:

Don’t worry, It Will Be Fine.

That was really good, btw.

I am still trying to figure out how it is that IPv4 would stop working and IPv6 would work fine. It may be Microsoft’s fault – have they pushed a new release of something that borked something? I can’t even tell any more. Sometimes I turn my computer off and it comes back up and Microsoft has adjusted the settings. The last time that happened, they turned the Windows firewall back on, so none of my in-house file service or print service worked. Did I ask them to turn the firewall back on? No, I actually had turned it off for a reason. The current state of systems engineering is really really scarily bad.

Marcus, @ #9: The one with the virtual hells was Surface Detail. There’s also a lot in there about how it’s really important to know what your dependencies are in base reality, which this post reminded me of a lot.

komarov, @ #5:

Believe me, you do not want to know anything about how the world’s major banking systems work…

Well, they have a significant advantage for the utility companies, in that they don’t need to send people out to read them, so they can fire all the meter-readers and keep the money. They also have advantages for facilities managers, in that you can get lots of juicy data that lets you monitor your facilities more effectively, which is especially useful if you’re trying to monitor a geographically distributed set of facilities… In a previous career, I did energy usage monitoring for a large government department with buildings scattered all over the country – thanks to the magic of smart metering, I came in every morning to a set of half-hourly power usage reports from every one of them, with a bunch of automated exception reporting built on top of that. Moderately useful for managing an office estate, incredibly useful if you’re managing industrial facilities.

For ordinary punters though? Yeah, no big deal.

Komarov (#5):

Well that would suck for me, since (averaged over the year) I’m the people selling the electricity.

The real problem with smart meters is they’re not smart enough. Electricity still mostly comes from giant thermal plants that sit there sucking down mountaintops and spewing out atmospheric insulation, mercury, and power. As those sources slowly change into propeller power fields and solar panel sides, the supply will naturally become more variable, and we’ll need to either massively overbuild the supply side or make the demand more responsive. When I’m selling power, I have the ability with just a signal to adjust the voltage and phase. If everyone in the area who also has solar panels and a modern inverter were told to do that when frequency control is needed, you would have the grid stabilization effect of a dozen thousand-ton turbines, only a hundred times faster (cf Hornsdale Power Reserve in S.A.).

Likewise, if the utility company told my meter when they are running low on renewable supply it could tell my water heater to hold off a bit, or my dishwasher to wait, or my car to not charge for a while (or even, with my next car, to sell them a bit of energy). But they don’t, so I just keep pulling power made from squished very old trees.

@Ranum (#9):

I wonder if that is where SMBC got the idea for this comic: https://www.smbc-comics.com/comic/hell

In other news it probably says something about me that it took me so long to figure out how to block quote. I kept trying to use markdown of varying types.

From the consumer’s perspective, backwards compatibility is great. When I buy a new shiny external HDD with countless terabytes of memory, I want it to be backwards compatible with my ten years old computer’s antique USB port. Reducing backwards compatibility actually results in a form of planned obsolescence—the moment one part of your system wears down and you need to replace it, it turns out that the new thing is no longer compatible with everything else, and thus you need to replace everything.

I have a friend who is still using a Windows 98 computer on a daily basis. Not because he wants to, but because he is forced to do so. He earns his living by modeling atmospheric pollution dispersion and calculating concentrations of atmospheric pollutants emitted from various sources. For that he is using software that is installed on his antique Windows 98 computer. He has got a license for an old version of the atmospheric dispersion modeling software. That old software doesn’t work on anything newer than Windows 98. Of course, theoretically he could purchase the license for a newer software version. Practically, it would cost thousands of dollars (I think it was about $10 000), hence he just keeps on using what he’s got.

And it’s not just my friend. I know of some institutions and companies where they are still using Windows 98 or even Windows 95 computers only because some ex$pen$ive software is installed on them. As long as everything works, people are careful not to modify or update anything. Lack of backwards compatibility means that it wouldn’t be possible to replace just some part of the system, it would be necessary to replace everything, and that would mean spending a huge amount of money.

Joseph Weizenbaum said this in Computer Power and Human Reason… in 1976: “Our society’s growing reliance on computer systems that were initially intended to ‘help’ people make analysis and decisions, but which have long since surpassed the understanding of their users and become indispensable to them, is a very serious development. It has two important consequences. First, decisions are made with the aid of, and sometimes entirely by, computers whose programs no one any longer knows explicitly or understands. Hence no one can know the criteria or the rules on which such decisions are based. Second, the systems of rules and criteria that are embodied in such computer systems become immune to change, because, in the absence of a detailed understanding of the inner workings of a computer system, any substantial modification of it is likely to render the whole system inoperative and possibly unrestorable.”

We’re doomed, aren’t we?

Also, I’m not sure if “recommend” is the right word but the RISKS Digest is indispensable reading on what can go wrong when well-meaning people come along and think, “hey, that process is working well, but what it really needs is to be computerised!” without thinking about the consequences much. Or at all…

Re: Backwards compatibility #7 / #14

“Good” or “safe” backwards compatibility is probably a matter of scope. Personal devices being able to support legacy hardware is unquestionably useful. But when you keep stacking successive generations of critical and large-scale infrastructure on top of each other maybe you ought to rethink matters.

It’s also a question of how you implement said backwards compatibility. Actual compatibility should be fine: A device will work with older kit if it is asked to. Great! But if I understood correctly, what people have actually been building is backwards dependency. A new device has to go through the old kit to work at all. That would most definitely be a bug, not a feature.

Those examples were lovely, by the way. I guess if you really want a backup system you’ll have to build it yourself, from scratch, just to be sure.

—

Re: Smartmeters (#12):

Well, most griping was about private installations of smartmeters. When you’re dealing with large organisations that are really keen on power figures they could probably afford to build their own purpose-built system to do it. Having off-the-shelf-hardware is nice, though, provided it does the job and doesn’t turn out to be a liability.

But none of that should result in mandatory “upgrades” if they’re not wanted. Incidentally, another common readers’ complaint on metering is how it is apparently “too complicated” for private households to feed their excess power back into the grid. The UK power grid seems to be a very contentious topic among engineers.

On the smart grid in general and having it adjust appliances in individual houses: I like the principle but have serious doubts about its successful implementation. I’m generally not a technology sceptic but this is one of those cases where I’d need a pretty convincing demonstration before allowing it in my home (assuming I’ll have a choice in the long run). It’s the sort of technology where early adopters could run serious risks when the system fails in new and creative ways. (I’m reminded of reports about smart lighting, with people were having issues like third-party bulbs being incompatible after software updates or lights being on continuously while updating – in the middle of the night.)

Cat Mara@#10:

I’m not sure if “recommend” is the right word but the RISKS Digest is indispensable

Let me second that. Peter Neumann is a fascinating character (I interviewed him [search] in 2013 for my column at Tech Target) he’s one of the deep thinkers about this problem. I had the pleasure of serving on a blue-ribbon panel with him, back during the early Bush years, and his commentary was always wise and fascinating.

At various times Peter and I have both advocated scraping the internet off and rebuilding from scratch, with some neatly layered basic services and new APIs. Of course it will never happen. But in 1999, I actually came up with a solution: crash it and rebuild it and blame it on Y2K. I suggested that in a speech I gave at Black Hat and everyone laughed, but I wasn’t joking.

Cat Mara@#15:

First, decisions are made with the aid of, and sometimes entirely by, computers whose programs no one any longer knows explicitly or understands. Hence no one can know the criteria or the rules on which such decisions are based. Second, the systems of rules and criteria that are embodied in such computer systems become immune to change, because, in the absence of a detailed understanding of the inner workings of a computer system, any substantial modification of it is likely to render the whole system inoperative and possibly unrestorable.”

There has to be a cool rule-formulation of that. Something like “all AI eventually implements bureaucrats”

Ieva Skrebele@#14:

I have a friend who is still using a Windows 98 computer on a daily basis. Not because he wants to, but because he is forced to do so. He earns his living by modeling atmospheric pollution dispersion and calculating concentrations of atmospheric pollutants emitted from various sources. For that he is using software that is installed on his antique Windows 98 computer. He has got a license for an old version of the atmospheric dispersion modeling software. That old software doesn’t work on anything newer than Windows 98.

The industry is full of many stories like that. It’s even worse when there are critical systems – process controllers, robot controllers, medical devices, that are tied to old operating systems. In some cases, it’s even regulated (medical devices) that you can’t update or change the system once it’s in its certified configuration – so you wind up with an MRI machine hooked to a computer running Windows XP and the first piece of malware that gets loose in the network blows it to pieces. It is a very dangerous and incredibly stupid situation.

We’re mostly past the time when people coded applications in assembler (which is tied to processor architecture) – most stuff is higher-level languages which ought to be portable; the real question becomes one of the value of the software versus the cost of a forward-port. A tremendous amount of code is just flat-out crap, that can’t be ported because it’s written so badly. And then there’s the software that’s source code has been lost. That’s … problematic. One of my first successful consulting gigs was re-implementing and ancient application for which the source code was lost – it’s a huge pain in the neck but I find myself questioning the business wisdom of companies that insist on running an ancient version of something – often at great expense – because a re-write would be too hard. The old Compuserve system would be a good example of that: they ran some of it on DECsystem-20s which were kept alive through scrounging and kludging for almost a decade after DEC had gone out of business. They probably should have started writing a better, portable, version of their software and if they had they might still be with us today.

But, yes, it’s complicated. One of the premises of “throw everything away every so often” is that you have to build new versions.

Oh, here’s another one: a few years ago I ran into a business that was running a DOS box application with a Dbase-III database. That was amazing! Alexander of Macedon used to code in Dbase-III when he was a kid. It’s what drove Nietzsche mad. I asked them what the database did and it turned out it was a completely simple forms app that a friend of mine re-wrote in php and MySQL over a weekend for $3,500. And now suddenly, copy/paste and printer output work. Amazing! What they were losing in productivity would have paid for replacing the application many times over.

What all of this points back to is that businesses don’t always make good decisions about technology strategy. It’s hard for them to do because there are “externalities”* – like that vendors lie. A lot. It’s scary how many times companies buy something that’s basically the software equivalent of a Potemkin Village, then try to put it into production. See the FBI’s Virtual Case File project as an example – it has been attempted several times by different beltway bandit contractors, and almost $1/2bn has gone down the drain. What is it? Basically, it’s a Wiki. They can’t make it work. Incredible. Throw more money at it!

(* Externalities – an economist once told me that whenever economists don’t know what caused something, they say it’s “externalities.” So I’m stealing that idea.)

BTW – I run DOS6.0 in an emulation box so I can play Master of Orion(1996) it works fine!

During the incident response I was just involved in, I dusted off an old tool I wrote in 1998 and it compiled and ran perfectly with only a minor change where the Linux crowd broke backwards compatibility with errno. It’s possible to write very foward-portable code, you just have to care to.

@ 16 Cat Mara

Ah, thanks for the RISKS Digest reference. I used to read comp.risks but got out of the habit. I was just thinking I should have a look at what was new and terrifying or bewildering.

@ 20 Marcus Ranum

[RE Dbase-III] It’s what drove Nietzsche mad

No, no, it was IBM’s JCL (Job Control Language for the kiddies) on the mainframes.

BTW, did you notice that NASA was looking for one or two FORTRAN 4 or 5 programmers about 5 or 6 years ago?

Marcus, it must have been a real honor to interview PGN. Given how long he’s managed the RISKS list in its various forms, he’s a real unsung hero of the profession.

And, yes, we totally missed an opportunity to throw everything out and blame it on Y2K 😂

(BTW, the comment system appears to have eaten my link to the RISKS archive. It’s http://catless.ncl.ac.uk/Risks )

Well, that’s one aspect of it… Another (and probably much larger) problem arises when you start tying lots of different applications together into complex distributed systems: now you can’t move any part without updating everything that depends on that part to reference the new location. And in order to do that, you need to actually know what your dependencies are…

A while back, the UK government set up a scheme to virtualise critical IT infrastructure (mainly around banking) – lots of shiny new kit, lots of money to throw at the problem. Take-up was very low – because nobody really knew how all these critical systems worked or interacted any more, so nobody was willing to risk any nasty surprises. We’ve all heard the stories about forgotten servers in bricked-up rooms running equally-forgotten – but nevertheless critical – services, right? Some of them are true.

Did you hear about the recent TSB debacle? (TSB are a fairly major high-street bank in the UK.) They’d been sold from one banking group to another, and were paying pretty substantial sums of money to keep their operations running on their previous owner’s IT platform. So, obviously, they had a big, carefully planned project to move to their new owner’s platform… Just a lift-and-shift, right? Come the day, everything went completely tits-up, many customers couldn’t access their accounts, many customers could access other people’s accounts, people couldn’t get money out of ATMs or use their VISA cards – basically everything that could possibly go wrong in consumer banking all went wrong at once – and it took them weeks to sort it all out.

Then there was the time that the VISA payment authorisation system for most of western Europe collapsed one Friday afternoon because of the failure of one bit of critical infrastructure…

Then, for super extra bonus fun, there are all the systems that rely on either undocumented features or actual bugs in some of their component applications…

Dunc@#25:

Another (and probably much larger) problem arises when you start tying lots of different applications together into complex distributed systems: now you can’t move any part without updating everything that depends on that part to reference the new location. And in order to do that, you need to actually know what your dependencies are…

That is exactly what Charles Perrow calls “connected complexity” – when you wind up with something full of dependencies you can’t unpack. Then, the safety margin gets eroded by pieces being replaced or used wrong and you wind up with Bhopal.

I am unconvinced by Perrow’s argument, as his definition of “connected” is squishy – I don’t think he can really talk about complexity and connectedness without a way of measuring it. It’s a bit hand-wavey.

Then, for super extra bonus fun, there are all the systems that rely on either undocumented features or actual bugs in some of their component applications…

I frequently have discussions that go like:

Them: “We need to segregate our networks with firewalls but we have no idea what traffic is going back and forth. It’s all so hard. Maybe we should just leave them open.”

Me: “What you’re seeing is ‘technology overhang’ – if it had been deployed correctly you’d have design documents and updates and change requests – you’d be able to go back and understand what talked to what and why. Parsing that all apart with no roadmap is going to be expensive. It would have been cheaper to build it right in the first place, but now that it’s too late you’ve got to pay the price for your sloppy admin practices.”

That makes me so popular.

Yeah, I see that ‘technology overhang’ all the time.

The basic issue seems to be the difference between real engineers (the sort that sign their name to something saying they’re taking on personal liability if it goes wrong) and ‘software’ engineers.

When a real engineer designs a system, he looks at it and starts thinking of the circumstances under which it would break. He then tweaks the design to mitigate this and iterates until he decides that further reliability isn’t cost-effective.

When a ‘software engineer’ designs a system, he looks at it and tries to think of any circumstance under which it might work. If he can think of one, he says ‘my job is done’ and releases it.

This cost/benefit analysis gets different in poor countries where the employees’ time is very cheap (low salaries). Paying your employees to waste part of their time, because they are using outdated technology, is often cheaper than importing the newest technology from some rich country where they sell it for a lot of money. By the way, this is how it is for my friend’s company. Sure, using a separate Windows 98 computer to do some calculations wastes a bit of employees’ time, but buying the newest software from a UK company would cost a lot more. (There are no security risks this time. If that old computer gets infected with some virus, you can just spend a few hours reinstalling all the software. That’s unlikely though, since that computer isn’t connected to the Internet, people use floppy disks to get data out of it. The computer is used exclusively for modelling air currents, it’s not being used for any other purposes whatsoever, no other valuable data is stored on it.)

Hmm, if you want to be popular, maybe you can try this one: “We will build a wall. A yuuge wall. A beautiful wall. It will keep all the bad guys out of our computer systems, and every single of our problems will be fixed.” /sarcasm tag

Curt Sampson@#28:

When a real engineer designs a system

…

When a ‘software engineer’ designs a system

I am reminded of old joke. What is difference between mechanical engineers and civil engineers? One makes weapons systems, the other makes targets. Software engineers can’t do either particularly well.

[My first product, an internet firewall, had zero bugs ever found with a production version for 3 years, until after I had gone elsewhere and another programmer took it over and added a 3rd party integration that included a buffer overrun. I don’t just mean ‘no security bugs’ – I mean, no crashes at all.. There was one system deployed with some sketchy RAM that failed, but that was a hardware problem.]

Ieva Skrebele@#29:

Hmm, if you want to be popular…

You can stop right there.

If I wanted to be popular I wouldn’t do 9/10 of the things I do.