There is a huge battle between Microsoft and Amazon over who gets to control the future of government computing. To me, the whole thing looks utterly surreal.

By “surreal” I mean “so mind-bogglingly dumb I can’t believe it’s even happening.” But then, this is 2020 and this is Trump’s America, though the trend-lines go back to the early 00s and were kicked into overdrive by the massive surveillance-state build-out under the Bush administration, followed by Obama’s laissez-faire “who-gives-a-fuck?”

Briefly: cloud computing. The idea of cloud computing is compelling. Organizations like Google, Amazon, and Microsoft built gigantic server farms for their own purposes, realized that in doing so they had solved system administration for themselves, and decided to come up with price structures and rent rack space in their systems to organizations that didn’t want to build that capability and buy that extra capacity themselves. It’s pretty cool: if you stick 4TB of data on your image recognition server and it starts filling up, there’s no need to call a system administrator to go find the server, power it down, add a new hard drive, and spend 48 hours watching it while it cross-populates, or to add the new hard drive to a RAID array if someone had the forethought to design the system so that capacity could be increased. The premise of cloud computing is that you don’t need that forethought because the cloud providers have built their systems so that capacity is “elastic” – you just use more storage and your monthly bill goes up a bit. Rock on.

One of the side effects of cloud computing is that it has more or less completely stripped skilled system administrators out of the workforce. The good ones work for the cloud providers now, and the bad ones have gone somewhere else. My view of all of this happening was from the management plane: from the late 90s until a few years ago, I witnessed the thinning-out of federal IT skills, to the point where management’s job became oversight of cloud computing usage and resource planning (hence, budget), and security was swept under the rug. In truth, there was a considerable improvement in security, because federal IT security was absolutely horrible and showed no sign of hitting bottom and turning around. Now, instead of having a contractor slam a misconfigured machine into a rack, copy 4TB of important data onto it, forget about it, and let Chinese hackers have it, they spin up a virtual machine at Amazon AWS (Amazon Web Services, FYI), copy the data there, and leave the virtual machine set up (it’s easier that way) so that the 4TB data bucket is world-readable. The Chinese hackers agree, BTW: it is easier. The process began to spin out of control in 2002, and I recall making a very unpopular remark at a conference: “Federal IT managers have experienced such a skill-drain that we can assume that, more or less, all they are capable of doing is reading Powerpoint presentations from vendors.”
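For the curious, here is roughly what “world-readable” means in practice. This is a minimal sketch, assuming an S3-style JSON bucket policy: it flags any Allow statement whose principal is everyone ("*") and whose actions include reading objects. The bucket name is hypothetical, and the check deliberately ignores Condition blocks, ACLs, and account-level public-access settings, so treat it as an illustration of the kind of audit nobody is running, not as a real scanner.

```python
import json

# Actions that expose object contents; "s3:*" and "*" are wildcards covering them.
# (Simplified: partial wildcards such as "s3:Get*" are not handled.)
READ_ACTIONS = {"s3:GetObject", "s3:*", "*"}


def _as_list(value):
    """Policy fields may hold a single string/object or a list of them."""
    return value if isinstance(value, list) else [value]


def is_world_readable(policy_json: str) -> bool:
    """Return True if any Allow statement lets everyone read objects.

    Ignores Condition blocks, NotPrincipal/NotAction, ACLs and account-level
    public-access settings, all of which a real audit would have to check.
    """
    policy = json.loads(policy_json)
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        # "*" on its own, or {"AWS": "*"}, both mean "anyone on the internet".
        anyone = principal == "*" or (
            isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", []))
        )
        actions = set(_as_list(stmt.get("Action", [])))
        if anyone and actions & READ_ACTIONS:
            return True
    return False


# Hypothetical bucket; this is the "it's easier that way" configuration.
leaky_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::example-case-files/*",
    }],
})
print(is_world_readable(leaky_policy))  # True
```

A dozen lines of checking is not the hard part; the hard part is somebody being responsible for running it and reading the results, which is the governance problem again.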

war clouds

Around that time, I started telling clients of mine that cloud computing removed some costs of system administration and capacity planning by giving you access to infinite system administration and infinite storage at a slightly higher price than doing it yourself, but much faster, because you can bypass governance. One of the hidden costs of IT security is governance: understanding what your data is, how it’s used, who has access to it, how important its availability is, who is responsible for making sure it is correctly set up, etc. Starting in 2002 I saw large corporations hemorrhaging data into the cloud as fast as they could copy it there. Back then, I was doing incident response and would get called in to figure out how such-and-such a document had leaked; my first question was “do you know if this came from inside your network, or the cloud?” In 2002, I’d get blank looks. By 2016, people would sometimes reply “we don’t have any data, it’s all in the cloud.” Next question: what audit data do you collect from the cloud? None? Right: collecting that stuff is the kind of painful detail your system administrators would handle, if you had any.

Basically, cloud computing means a gleeful roll-back to the 1980s, when every department had its own computing budget, there was no planning at all, and no governance at all – data went everywhere, and stealing it was a matter of getting inside someone’s perimeter, where they had no idea what was going on and so couldn’t tell “what is going on that is good” from “what is going on that is bad.” Perhaps some of you remember 80s departmental computing, where the department secretary would sometimes make backup tapes, take them home, and store them in a shoebox in the closet? Corporate IT geniuses can’t draw a line connecting that practice to the huge pay-outs some of them have made to crypto-locker malware operators.

Here’s something that will surprise you a lot: when it comes to government, cloud computing represents a huge shift of money from the public sector to the private sector. It’s the privatization of government data. Lock-in is completely ignored: how will government departments ever get their data back out of the cloud? “Not my problem,” says the federal IT manager, “besides, there’s nothing about lock-in in these Powerpoint slides.”

Now, I want to say something alliterative about “razor blade hidden in an apple” except cloud computing is an apple made of razor blades that has a sliver of yummy fruit hidden somewhere inside. Everyone is studiously ignoring the applications. See, the cloud is just where the applications run and where they store their data. Where do the applications come from? A well-designed server application might not need elastic processor power or storage, but it’s going to get it anyway. Who is going to code these things? Contractors, that’s who. I have no problem with contractors, but the contractors building applications out there range from amazingly great IT wizards to guys whose idea of “coding” is to ask “how do I complete the next step of my program?” on Stack Overflow, then, when they get an answer, copy and paste it into their release candidate and ask the next question. Back in the 80s I used to call such programmers “hunt and peck programmers,” and I thought that by now everyone would have grown up knowing their way around a C compiler and BSD UNIX, but apparently I was wrong. Some of those important government apps that will run out in the cloud were written by really skilled people, but most of them weren’t. Which means they will be unreliable and insecure, will leak data, and will not scale. In many cases, being able to throw unlimited storage and processing power at a problem makes it scale, but some processes are serialized and actually get slower if you scale them and your design is wrong. Currently, government computing is implementing bajillions of applications like that, except they are not comprehended by anyone who works for The People – those projects are overseen by beleaguered IT managers who are struggling to keep up with their daily input of Powerpoint briefings; that stuff is too detailed. What I am saying is that a lot of this stuff is crap that will crash and burn whether it’s in the cloud or not. Of course the data will leak.
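To put a little arithmetic behind the claim that serialized processes can get slower when you scale them, here is a toy cost model. It is an assumption for illustration, not anything measured: Amdahl-style serial plus parallel work, plus a fixed coordination cost for every extra worker. The numbers are made up; the point is the shape of the curve.

```python
def run_time(n_workers: int, serial: float, parallel: float, coord: float) -> float:
    """Toy model of job run time on n_workers machines (illustrative only).

    serial:   seconds of work that cannot be split up (one lock, one queue)
    parallel: seconds of work that divides evenly across workers
    coord:    seconds of coordination overhead added per extra worker
    """
    return serial + parallel / n_workers + coord * (n_workers - 1)


# Made-up job: 10 s serialized, 90 s parallelizable, 0.5 s overhead per worker.
for n in (1, 4, 16, 64):
    print(f"{n:>3} workers: {run_time(n, 10.0, 90.0, 0.5):6.2f} s")
```

With these numbers the run time bottoms out at around a dozen workers and then climbs again, so the 64-worker run is slower than the 16-worker one. Set `coord` to zero and you get plain Amdahl’s law: extra workers never hurt, but nothing ever beats the 10 seconds of serial work.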

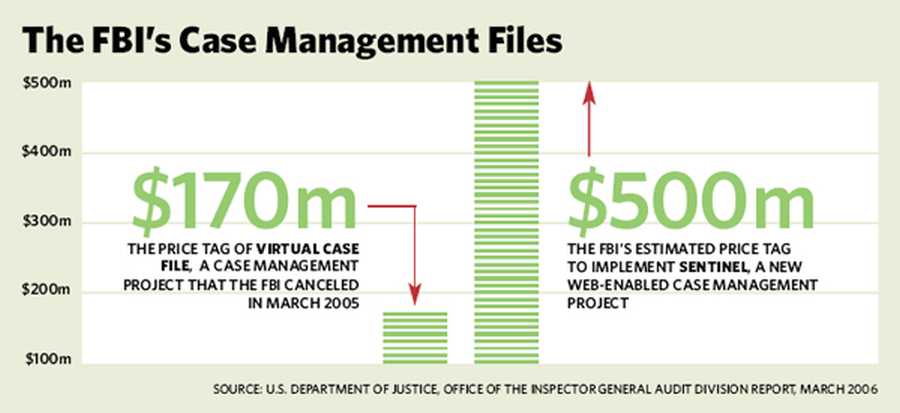

Back in 2002 my favorite example of this kind of horribleness was the FBI’s Virtual Case File project. At that time, VCF had consumed $170 million [wik] and had never even come close to working. It was started in 2000, and development was undertaken by beltway bandit SAIC. I actually have some sympathy for the folks at SAIC who were chained to that sinking ship (in fact, I interviewed two of them off the record at USENIX) because, as one of them said, “How can you develop a system to a specification that was put forth by someone who doesn’t know what they are specifying?” It was a perfect storm of federal IT incompetence: the FBI didn’t even know enough to know what to ask for, and SAIC didn’t know how to deal with that. The correct answer, by the way, would have been to build something that worked, based on their understanding of what the FBI probably needed, and maybe they’d have gotten lucky. Except, as my SAIC friend told me, half of the programmers working on the project were the “hunt and peck coders” who didn’t know what they were doing, either. The chances that such a system would ever be anything but an expensive disaster were about the same as those of a whirlwind going through a junkyard and assembling a flyable Boeing 737, or some bullshit like that.

From an article by TechTarget

This is the other side of the cloud enthusiasm: the software. The FBI was not competent to specify and manage (governance!) the development of a $170 million application, so they’re going to do a $500 million web-enabled cloud version that they will still not be competent to specify and manage.

By the way, they’ll be lucky if they get a wiki with CAC card authentication. But they’re probably going to get a wiki with 4-character PIN authentication, and it’s going to be on a public server behind a firewall that lets SSL in from everywhere except China and Russia based on IP address blocks (which is garbage security). The sad thing is that a Virtual Case File is kind of like a multimedia wiki with authentication – it’s just that building one that scales to that size is hard. [To go back to what I was saying before about governance: the thing that makes Wikipedia work is its governance model, not its underlying technology]
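Here is why blocking by IP address block is garbage security, as a minimal sketch. The “blocked” networks are RFC 5737 documentation ranges standing in for a country block list (a real one would come from some geo-IP feed), and `firewall_allows` is a hypothetical name. The check only looks at where a connection appears to come from, so the same attacker relaying through a rented server anywhere else walks right in.

```python
import ipaddress

# Hypothetical block list. These are RFC 5737 documentation ranges standing in
# for the "China and Russia" address blocks a real geo-IP feed would supply.
BLOCKED_NETS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def firewall_allows(src_ip: str) -> bool:
    """Allow the connection unless the source address is in a blocked network."""
    addr = ipaddress.ip_address(src_ip)
    return not any(addr in net for net in BLOCKED_NETS)


# Same attacker, two routes: directly, and via a rented server elsewhere.
direct = "203.0.113.7"
relayed = "192.0.2.10"  # also a documentation address, not in the block list
print(firewall_allows(direct))   # False
print(firewall_allows(relayed))  # True
```

The rule is doing exactly what it was told; it just isn’t checking anything that matters, which is why authentication, not source geography, has to carry the load.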

Multiply SENTINEL out a few hundred thousand-fold and you’ve got the future of federal IT. It’s going to be frightful, insecure, unreliable, and developed by the lowest bidder for managers whose idea of designing a complex system is reading a Powerpoint deck and saying “that sounds good.”

This is why, to a degree, I am not super-scared of some of the scenarios we hear about, where the FBI is building a gigantic unregulated database of faces for facial recognition. My immediate reaction is “so what?” It won’t work in realtime, or even quasi-realtime, it will produce jillions of false positives, and it will be canceled at massive cost in 10 years. Of course the data will still be sitting on some AWS or Azure data-bucket (probably world-readable) but – again – who cares? The data will be worth about as much as if you took every .JPEG off of Facebook and stuck it in a database, which is basically what the FBI did (except they added drivers’ license pictures and arrest photos, too). It’s unregulated by anything but computing’s laws of nature: who cares if it’s unregulated if it doesn’t work? It’s more or less the same story as the NSA’s vaunted PRISM system, the “collect it all” unregulated data-trawling that the Bush administration put in place after 9/11. PRISM never really worked but, god damn, did it make some storage vendors happy.

[mashable]

Don’t worry, PRISM is not dead; it’s more like the FBI’s VCF: there will be another version and it will cost more and work about the same. [NSA program is shut down, Aide says. And, yeah, the New York Times totally believes someone who’d spill the cheese about an incredibly top secret program like that.] PRISM continues to have value as a retro-scope for catching corrupt civil servants and their attorneys, who are stupid enough to use backdoor’d cryptographic applications. They should use Chinese cryptographic applications, which are backdoor’d by the Chinese who won’t cooperate with the FBI. God, isn’t this stuff obvious?

Afghanistan is not necessarily the US’ longest-running war.

The only way it could get funnier would be if the cloud computing deal became a political football. Which I expected it would, because the dollar amount is just too large for any one vendor to allow another to run away with that gigantic, steaming chunk of pork. This ain’t F-35, people! You Seattleites need to learn how to lock down a procurement process or you’re going to keep stepping on your own drooling tongues as you rush for the trough! [wp]

On the surface the story is simple, but it’s really not: there are applications that have been developed for AWS, and now the Trump administration wants the Pentagon to use Azure? In other words: “port your code to Azure” – unless it was carefully developed to avoid any of AWS’ lock-in technology that is not available on Azure. Prediction: there is going to be a gigantic flood of waivers coming down the pike. The Pentagon should have allowed cloud development to use either one and spent its time developing governance frameworks (minimum operational standards) and negotiating large-customer pricing, instead. From where I sit, the very fact that the Pentagon thinks all its cloud apps are going to work under either AWS or Azure shows how ignorant they are. It was never an “and,” it was an “or,” and their strategy needed to reflect that all along.

The next decade of federal IT is going to be exciting. Working in federal IT is going to be like being a coal-stoker on the Titanic, except that if you survive one ship-wreck, the ship that rescued you is going to hit an iceberg or catch fire or get bombed, too. [Tempted to insert a reference to the Nupple Duck and the cargo of cat, but it may be obscure even for here] Sticking with the nautical metaphor: “One hand for you, one hand for the ship” and understand that the ship isn’t worth saving. It’s going to sink anyway so make sure you’re one of the first into the life-boat.

One of the other great possibilities of the Amazon vs. Microsoft war over the JEDI contract is that major government software development projects could be put on “pause” by restraining orders as the inevitable court case grinds its way toward a cliff. Earlier I mentioned the F-35’s horribly broken logistics software [Probably written by cut-and-pasting from Stack Overflow] – what if that gets put on “hold” thanks to a lawsuit? I guess if we’ve got to have Big Brother we’d prefer him to be really, really stupid.

In the picture of PRISM, the “Named Company” employees who are skimming data and putting it into a drop box for the NSA: those are folks who work for Facebook, Instagram, Google, etc.

I took a couple of days off around Christmas because Christmas is always depressing to me, and I didn’t want to just spew hatred at Hallmark. Also, the temperatures are above 50 right now, which means I can paint and glue, and I ought to be building doors. Besides, as you can tell, I’m hardly full of Christmas good cheer: “ho ho ho government IT’s a shit show!”

And if you thought that was crazy, how about going for the ultimate craziness:

https://www.reddit.com/r/kubernetes/comments/efeqnc/how_the_us_air_force_deployed_kubernetes_and/

I’d have directly linked to the article but the comments on the Reddit thread are priceless for the 1% of us standard issue nerds who get them.

@Patrick Slattery:

Holy shit.

Well, I feel better about my coding skills now :P

FYI, all this is happening in Australia too. Someone is going to hack Azure or AWS and walk off with a huge amount of government data. I just hope it’s defense data and not anything important (like my medical records)

The way I see it, Christmas is just a few work-free days during which people can do whatever they want. In my opinion, that’s great. Granted, you are retired and I am self-employed for now, so for us it doesn’t matter anyway, because we get to decide what we want to do on any date. Anyway, personally I just use holidays to do whatever I want, and I ignore all the weird things other people do around me. It’s not like I am being forced to go to a church or anything during Christmas. And I see no reason to care about other people or get grumpy because of the ways they choose to celebrate Christmas. I don’t celebrate Christmas in any way, and work-free days during which I can do what I want are always nice to have, so I’ll happily take them.

Tangentially relevant: the US Navy refitted destroyers with a touchscreen navigational system which could be controlled from four different stations on the bridge – with no apparent way of telling which screen, if any, controlled which function:

Collision Course

When the USS John S. McCain crashed in the Pacific, the Navy blamed the destroyer’s crew for the loss of 10 sailors. The truth is the Navy’s flawed technology set the McCain up for disaster.

I’m sure it did. However, given that I’m on the technical and not the business side of things at an HDD company, I’ve never heard anything about any government contracts we have (and I’ve been around a while).

We apparently compartmentalize and/or obfuscate quite well. As part of my job I do hear that “${I4 company #1} isn’t buying anything because we’re not providing feature X”, and that we’re doing features Y&Z for ${I4 company #3} and ${I4 company #4} respectively.

Pierce R. Butler@#5:

Tangentially relevant: the US Navy refitted destroyers with a touchscreen navigational system which could be controlled from four different stations on the bridge – with no apparent way of telling which screen, if any, controlled which function:

I don’t think that was entirely fair; the controls are a bodge but the crew of a ship at sea is expected to know the controls of their ship. The controls helped make the situation more likely, but having a military ship in commercial shipping areas, crewed by people who don’t understand the controls – that’s the worst part of the problem.

That’s a harrowing story, if it’s the same article I read. The Aegis boat’s captain had what is probably the worst wake-up, ever: knocked across the room, severely injured, and then the water comes in.

Sunday Afternoon@#6:

We apparently compartmentalize and/or obfuscate quite well. As part of my job I do hear that “${I4 company #1} isn’t buying anything because we’re not providing feature X”, and that we’re doing features Y&Z for ${I4 company #3} and ${I4 company #4} respectively.

Ah, “sales-driven engineering” is always interesting. At one company where I worked for a while, literally nothing got done except one-offs based on lobbying for favorite features on behalf of customers. Even if we said “that is a stupid feature and should be an option in ${other feature}” someone would jump straight to the CEO and make the feature request come downward. Fun times. And by fun I mean, hellish.

@Patrick Slattery(#1):

If they could connect with the missile and induce it to go into CrashLoopBackOff mode.

Marcus Ranum @ # 7: … if it’s the same article I read.

Different story: no Aegis involved. This one’s rude wakeup involved 12 sailors sleeping in a below-waterline berth; when the collision occurred (more than three minutes after the bridge lost control of steering), only two managed to get out. In another berth, twisted metal pinned two in their bunks, but others managed to pry them out. Why the captain neglected to issue an all-hands-on-your-feet-now emergency alert was not explained, but he was initially charged with homicide.

Both crew and supervising officer received inadequate training and an out-of-date manual.

Which is exactly why you need a good product owner team (or marketing team, it’s sometimes called): these are the people whose job it is to collect customer requirements and desires on the one side, information and estimates from the technical team on the other, and create and manage a plan for product development and marketing that produces a good result for the aims of the business.

It ain’t rocket science, at least no more than the technical side of software development is, but as we well know, most people and teams can’t do that, either, so it’s no surprise to see product management broken.

100x this! We just went to “The Cloud”. Our data doesn’t go to a magical fairy land somewhere in the atmosphere, it goes to someone else’s computer. It was a pain in the [redacted] to get our data out of our old system when we actually owned it. Hopefully, I’ll be retired or dead when the hosting company fails or the contract under which the data is captive expires.

And you can’t customize anything. And your in-house IT can’t resolve issues or improve workflows. The company offers a web poll where customers can nominate and vote on which issues are really problems. That’s (supposedly) the main driver of their work queue of non-critical fixes.

!!!

We’re not technically government, but we’re government-adjacent. They might work for garbage, but their sales powerpoints were impressive.