When I was done interviewing Tom Van Vleck, he suggested I might want to read Mechanizing Proof, by Donald MacKenzie. [wc] Which, I did.

It’s a history of how computer scientists and programmers have confronted the problem of software reliability. Since it’s a history, it doesn’t take “sides” in particular; instead we learn about the various currents of thought and the factions and disagreements, who, how, and why. Consequently, I found it a bit unsatisfying at the end, but that’s not the author’s fault – dissatisfaction is a familiar feeling for anyone who starts thinking about software reliability.

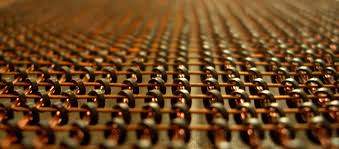

When it comes to computer security, software reliability is the innermost circle of hell. None of the outer circles are exactly fun, but it just gets worse and worse the more you look at it. As Tom Van Vleck mentioned in the interview, hardware was not as reliable back in the MULTICS days: it was not out of the question that a value you stored in a place in memory might occasionally change unexpectedly. Memory, after all, was implemented with little magnetic rings being moved about by varying charges on wires that ran between them. When the ancient programmers wrote software, they were writing stuff that had to run on an unreliable system. Computer security programmers are “programming Satan’s computer” – it’s not just unreliable it’s out to get you. That value you stored may be the return address from a function call, and a hacker may be trying to stuff a new value into that memory, in order to make your program do something it was not intended to do.

When it comes to computer security, software reliability is the innermost circle of hell. None of the outer circles are exactly fun, but it just gets worse and worse the more you look at it. As Tom Van Vleck mentioned in the interview, hardware was not as reliable back in the MULTICS days: it was not out of the question that a value you stored in a place in memory might occasionally change unexpectedly. Memory, after all, was implemented with little magnetic rings being moved about by varying charges on wires that ran between them. When the ancient programmers wrote software, they were writing stuff that had to run on an unreliable system. Computer security programmers are “programming Satan’s computer” – it’s not just unreliable it’s out to get you. That value you stored may be the return address from a function call, and a hacker may be trying to stuff a new value into that memory, in order to make your program do something it was not intended to do.

In other words, computer security programmers have to worry about every possible unintended side-effect – which tremendously magnifies the complexity of things that you’re worrying about as you write code. And, as we know already, writing complex code is hard. Writing secure code is harder than hard. Yet, we continue to want to write code that can function reliably in a landscape full of hostile actions. I believe it was around 1989 when I remarked to one of my co-workers, “whoever invented computer networking shot us all in the foot.” That was around the time I was realizing that what we’d now call “attack surface” (I called it “zone of risk”) was a problem. Here’s what’s crazy, to me: it’s still a problem but we don’t even know how to measure it. Which, per Lord Kelvin, means we don’t know what it is. Pause and let that sink in for a minute: we are running risks that we don’t even have a model for.

That’s not a hypothetical. There are several documented incidents in which software flaws nearly resulted in full-exchange nuclear war between the US and USSR. That was a result of normal glitches, nothing as fancy as some hacker deliberately fiddling with the communications. That would be unthinkable, right? No, it’s US government policy – and apparently Chinese, Russian, North Korean, and Israeli government policy, too. And then there are the people who do that stuff for fun.

Mechanizing Proof is about the way computing responded to this problem. It tracks through the realization that there was a problem, and then through some of the responses to it: formal design, formal verification, provably secure design, etc. MacKenzie is straddling the interface between software development and philosophy of science – how do we know things? – and, like philosophy in general the discipline of software as science falls into a scorched-earth battle of epistemology and skepticism. It’s fascinating, to me, to read this stuff but someone who’s not interested in software will probably want to steer away from this book. Computer programmers, like myself, who have a nodding familiarity with the corrosive effects of radical skepticism, will find a lot of this to be eerily familiar. Computer programmers who lived through the era of “Structured Design” and “General Systems Theory” (1985-1990) will know, as we knew then, that there was a lot of time wasted.

From Ranum and Avolio, 1992 [usenix]

The book is a little less humanizing than something like The Soul Of A New Machine [wc] – it’s an event-driven and time-line driven story, not a character-driven one. That’s OK, but some of the events MacKenzie writes about could have used a more dramatic presentation. I think my favorite part [mild spoiler alert!] is his description of a meeting that took place at Garmisch, in October 1968. It was where the second generation of computer scientists got together and collectively said, “we have a problem.” MacKenzie somewhat rotates his entire history around that fulcrum. If he were a writer like Siddartha Mukherjee or James Gleick he would have dramatized that meeting as the focus of the book, but he doesn’t. Probably, because, in real life, it wasn’t that important. But it was important:

The breadth and depth of the problems with software development were the focus of what was to become one of the most famous of all computer science conferences, the meeting on “software engineering” held in October, 1968 at Garmish-Partenkirchen, a resort nestling beneath the mountains of the Bavarian Alps. The idea for the conference had come most centrally from a leading German computer scientist, Professor Fritz Bauer of the Technische Hochschule München. Bauer was not the only one to coin the term “software engineering” – Douglas Ross of the MIT Servomechanisms Laboratory proposed a course on the subject in January, 1968 – but the choice of the term as the conference’s title greatly increased the salience of the idea that, in Bauer’s words:

The time has arrived to switch from home-made software to manufactured software, from tinkering to engineering – twelve years after a similar transition has taken place in the computer hardware field … it is high time to take this step. The use of computer has reached the stage that software production … has become a bottleneck for further expansion.

The context was that a number of significant software programs were being attempted (operating systems such as IBM’s OS/360) and nothing was working: the more programmers that were thrown at a problem, the longer it took. Software was becoming a money-sucking black hole of despair. Programmers working on OS/360 were literally losing their minds.

Buxton recalled the tone being set by frank admissions in an after-dinner talk on the first evening by Robert S. Barton, who had played a leading role in the development of Burroughs’ innovative B-5000 computer. Barton told participants, “that we were all guilty, essentially, of concealing the fact that big pieces of software were increasingly disaster areas.” The confessional atmosphere – “everybody else just chimed in and said ‘Yes!'” – created an unusual degree of rapport and openness.

And:

To some of those involved, the situation was bad enough to merit being called a “software crisis.” Not all agreed. Some Garmisch participants pointed to the large number of computer installations that appeared to work perfectly satisfactorily. K. Kolence, of Boole and Babbage commented:

I do not like the word “crisis.” It’s a very emotional word. The basic problem is that certain classes of system are placing demands on us which are beyond our capabilities and our theories and methods of design and production at this time. There are many areas where there is no such thing as a crisis – sort routines, payroll applications, for example. It is large systems that are encountering great difficulties. We should not expect the production of such systems to be easy.

MIT’s Douglas Ross countered:

It make no difference if my legs, arms, brain, and digestive track are in fine working condition if I am at the moment suffering from a heart attack. I am still very much in a crisis.

When I was a young pup at Trusted Information Systems (a company which crops up significantly in the book) I was coding an “internet firewall” and found myself challenged by some of the characters who crop up in the book. One project that was spun up at TIS was a formal verification attempt to analyze my firewall’s code. As team lead/lead engineer/chief cook/bottle-washer for the firewall product, I supported a group that attempted to prove – I still don’t know what – about my code-base. It was a fascinating experience because, as I realized, there were 10 people who didn’t know how to program at all – let alone do network programming on UNIX in C – and they were trying to analyze my code. At the time I was privately derisive. When I later learned that the results of the analysis had been classified, and ran to hundreds of pages, I stopped pretending any respect for that process.

The rise and fall of formal methods for software correctness is another central story arc in the book. It boiled down to a problem that I encountered, myself, in those days: why take 20 pages to ‘prove’ 3 lines of code? Especially when the code is its own documentation? I remember saying, “I don’t care if you can’t understand it – I can, and the compiler can.” What was being built was a parallel edifice, also complex notation assembled by humans – so it was also prone to errors. That dynamic became and remained the rock on which formal methods ran onto.

I enjoyed the book. I can’t recommend it unreservedly because it’s appeal will tend to be narrower than a popularized treatment would be. For an “insider” it’s fascinating stuff.

Hoo boy, back in the dark ages. Good thing nothing like that could happen today.

Exploiting the DRAM rowhammer bug to gain kernel privileges

Is that the Multics guy? You sure have met some interesting industry figures in your travels, and not just the much-lauded ones either!

The complexity of the proof is not a problem for correctness, as it can be machine-checked, more relevant is the complexity of what is being proved. If you do it right you can IMHO reduce the probability of errors by several orders of magnitude. The main problem that remains are errors in requirements which went into both your software and the correctness proof. And system errors, where the problem is at the edge of the system part with the correctness proof.

The main problem why formal methods aren’t used more is that they aren’t economically viable except in cases of extreme safety or security requirements, and only for very small systems. It’s just too much work to model the system and do the proofs. And it takes too much time, and time-to-market is much more economically important in most systems.

Still, there are some impressive works, for example CompCert or L4.verified.

It was Technische Hochschule München, which is, so to speak, my alma mater, but then (in 1983) it was called “Technische Universität München”.

I can’t search for this on Amazon.com; when I type ‘Mechanizing Proof’ into the search box, it returns with, ‘We found 0 results for “merchandizing proof”‘. There seems to be no way I can make it search for what I typed in. Oh, the irony.

@Curt Sampson

You can use double quotes, then it works for me.

Cat Mara@#1:

Is that the Multics guy? You sure have met some interesting industry figures in your travels, and not just the much-lauded ones either!

Tom is one of the multicians, yes. In my opinion there were two great lines of thought regarding operating systems – MULTICS and Project Athena. There were others (UNIX most notably) but those two projects tried to solve something new on the horizon: management. Other operating systems tried to solve technical problems but they were doomed because they made management worse, not better.

I’ve had the good fortune to learn from some of those guys, when I could.

Another multician is Peter Neumann, who has been a constant drum-beat in security and reliability. Peter’s RISKS digest has been a fascinating series of articles, served as a mailing list, that has gone on for decades. I’m flattered to say that some of my comments variously attracted Peter’s approval. I was on a blue-ribbon panel for NSA back in the early 00s, and sat next to Peter, and listened carefully to what he said. At one point Peter and I suggested (seriously) that NSA might want to not connect to the internet at all – it might be better/more efficient to simulate being connected.*

I interviewed Peter years later, [here] By that time Peter and I had both hit on the idea that the internet should be wiped and started over. My idea was to do it and blame it on Y2K and I remember Peter laughed long and hard when I suggested it.

When I was a young systems engineer at DEC, I was assigned to “Help port MIT’s Project Athena to ULTRIX” which took me a while (the Athena widget set was horrible, and I developed an intense hatred for X-Windows) – the leading luminary of Athena was Dan Geer, who became something of a personal hero of mine. Whenever I say that, he grumbles and says something witty and profound. Geer is, in my opinion, one of the greats. He sees a lot of security coming, years before it arrives. I thought I had an interview with Dan but I can’t find it (!) Dan’s worth listening to; he grew up listening to rural preachers and he can deliver a talk like nobody else.

And then there’s Dr Roger Schell. Roger Schell is/was one of the greats – he practically invented computer security and the idea of trusted operating systems. I interviewed him [here]. He was born in 1940, on a farm in Montana that didn’t have electricity. Today he is still overseeing trusted kernel development. When I interviewed him, he hauled me up by the scruff of my neck regarding some injudicious comments I made about the Orange Book around about 1993. Can I have two heroes please?

(* I also recommended that NSA develop its own branch of GameOS for Playstation 2, and take advantage of it as a network endpoint with a controlled hardware distribution. At that time NSA was talking about making a browser be the delivery vehicle for everything, so a multi-level GameOS was actually a good idea, not a crazy one. One of the very high-up NSA executives listened to my idea, seriously, then said, “you are dangerous.” Then he ignored me the rest of the week. It was one of the most sincere compliments I’ve ever gotten.)

wereatheist@#3:

It was Technische Hochschule München

You are correct. Our quality control department has been sacked due to budget cuts, so I don’t even have a sack to sack myself with.

(fixed)

How realistic would this idea be? You have written in various blog posts that all the software we currently use sucks and it should be scrapped, and then something entirely new and better should be written from scratch to replace our faulty and insecure software. Would this even be possible? Assuming some really wealthy tech company or maybe a government agency of some rich country wanted to do that (thus they had plenty of resources and cash to hire good programmers), would it even be possible to wipe out the Internet (and bad software in general) and start over? I suspect there might be some problems with such an idea (namely it would be impossible to realize even if somebody very rich decided that they want to give it a try).

Ieva Skrebele@#8:

How realistic would this idea be?

Not very. But the current idea isn’t very realistic, either. Remember – the current model is to keep building more and more crap atop poorly layered and badly designed abstractions. The maintenance costs for doing this are non-linear. As I see things, “cloud computing” is a repudiation of the current state of affairs – a shift to centrally managed systems that are configured to all be more or less the same (more precisely: operation and implementation details are separated) That’s basically what Amazon web services and Google have done. In a very real sense, AWS and silverlight, etc, are re-designs of operating systems and administration. The application stack is another problem, of course. But they indicate that it can be done and that there is a great deal of money to be made by saving money.

something entirely new and better should be written from scratch to replace our faulty and insecure software. Would this even be possible?

I believe that a certain point it will be necessary because the cost of throwing it away and doing it over will be lower than the cost of maintaining the old stuff.

We are about to witness some truly gigantic expenditures as backwards compatibility efforts are deployed to maintain existing investments in IPv4. This will amount to tens of millions, perhaps hundreds or even billions of dollars. The point is that the money is there are people are going to pay it, whether they want to or not.

would it even be possible to wipe out the Internet (and bad software in general) and start over?

I am honestly surprised that it hasn’t happened, already. I expected some government like China or perhaps Iran or Saudi Arabia to realize that if they are dependent on US software, they will never be free of surveillance in their systems. The US has already amply demonstrated its willingness to use its dominance of software and infrastructure to embed itself in everyone else’s communications. That is what the big China/Google debate is about – will China be allowed to develop an internet stack that is not owned by the US? The kinds of money we are currently talking about are relatively small, still (the price keeps going up and up!) Someone with the chutzpah of Elon Musk and the corporate vision of Jack Ma could pull it off, and could improve control over at least 1/2 of the world’s infrastructure.

A government like Russia, China, Iran, or Saudi Arabia could spend way less than they spend for a weapons system, and have something they owned top-to-bottom in about 5-10 years.

I’m not a fan of “cyberwar” but that doesn’t mean I don’t think computing is a strategic technology. Hoooooo boy it sure is!

Marcus Ranum @6: It was your comment on another post that you’d interviewed Peter Neumann that provoked me to leave my first comment. I only knew him through his work with RISKS; I didn’t realise he was a Multician too.

What would a re-designed software environment look like?

– one programming language, that included multi-system development tools, debuggers, and correctness-checking at run-time

– well-documented APIs for low and mid-level functions including databases, store/retrieve settings, store/retrieve user preferences, network connectivity, authetication, session encryption, session resumption

– one implementation of document encapsulation and encoding used by web pages, email clients, etc. None of this mishmosh of markup languages

– separation of specification for document layout from rendering; the current model, which relies on javascript as a sort of do-everything object code, is utterly horrible

– separation of specification of document layout and content and execution. the idea that you’ve got downloadable code all over a document is utterly absurd and its consequences are obvious

– shared and global/enterprise options encoding for runtime and choice of execution

– single system image (why does it appear that we are using lots of computers? what is a matter of very bad interface design indeed!)

– enforced separation between operating system, user data, and application data

– identity pushed all the way through from layer 7 to layer 1; this would also require a notion of disposable or fake identities.

… stuff like that. These are not unsolvable problems – in fact, they are being solved all the time, just not as a unit – everyone is reinventing the wheel as fast as they can. Google and Amazon are solving them for themselves, they’re just using a kludgy foundation to do it on.

When the cost of maintaining some old system exceeds the cost of replacing it, humans often end up maintaining the old system.*

So, how would this business plan/world domination plan work? I mean the practicalities. How could somebody implement it? Write the code for a totally new operating system that runs on the existing hardware? Or replace the hardware as well? And what do you do with connectivity and all those users who will need compatibility with something that already exists and cannot be replaced so easily? Let’s assume some billionaire decided that they want to tackle this problem. How should they approach it and where could they even begin?

The idea of Russia making good software made me cringe. That’s not how authoritarian and corrupt governments work. Putin tends to give contracts to his buddies. Those, in turn, tend to hire their good-for-nothing relatives and friends. Anybody competent actually getting hired is a lucky accident.

——

*Totally unrelated, but this got me thinking about writing systems. While studying for my master’s degree in linguistics, I took some courses about writing systems in various languages, one of those courses was even called “Writing systems in comparison.” The thing is, some languages have very nice writing systems (for example, Italian, Latvian). Other languages have absolutely ridiculous and outdated writing systems that have been made many centuries ago. For example, English writing system hasn’t been changed much for centuries. Compare that with Latvian writing system that was created in 1920ties by linguists who actually understood what they were doing. Back in 1920ties Latvians were using a writing system that was made several centuries ago by German priests. It sucked—it was inconsistent, some sounds were represented by a string of up to three letters, some other sounds weren’t represented at all. In 1920ties Latvians decided to completely scrap the old writing system, and replaced it with another new one that was completely different. Current Latvian writing system is extremely consistent. Each letter represents the same sound in every word where it’s used. And each sound is always represented by one and the same letter. In Latvia there is no equivalent to the American spelling bee competition. It would be impossible to make such a contest, because kids quickly learn all the spelling rules and afterwards they make hardly any mistakes. You cannot have a contest when everybody always gets the spelling right. By the way, when I have to read Latvian books printed before 1920ties, I actually struggle with that. I have tried to learn the old writing system, but I never learned it well enough to be able to read old books quickly. Anyway, my opinion is that the current English writing system ought to be completely scrapped, the spelling is too inconsistent, there’s no point even trying to learn the rules, because they have too many exceptions. A writing system that requires people to memorize the spelling of every single word is just a really bad writing system. Replacing an outdated writing system has some costs (each person has to learn the new one, some people want to cling to old traditions, etc.), but in the long term doing that would be beneficial. Yet people who speak languages with shitty writing systems are reluctant to scrap those and replace them with something better.

Incidentally, new problems being created on top of the old ones is something that happens also with writing systems, not just with computers. As we all know, every language gets new words all the time. In a consistent and well designed writing system like Latvian, it’s not hard to decide how each new word ought to be spelled. You decide how each new word ought to be spelled simply by applying the existing rules. This cannot be done when you have an inconsistent writing system, after all there are no uniform rules, whatever rules you have tend to be contradictory and non versatile. Thus with each new word, the whole poorly designed writing system ends up getting worse and worse, it just keeps on growing more and more inconsistent.

Example: in Latvian the word “Europe” is spelled as Eiropa. In past, the name of the European currency used to be spelled as eiro. A couple of years ago the European Commission ordered Latvians to change this spelling. Instead of eiro we were ordered to spell it as euro. Latvian pronunciation of the word “euro” starts with a sound that is always spelled as ei in Latvian spelling system, that’s also how this sound is spelled in the word Eiropa. When the European Union ordered Latvians to change the spelling of the word “euro,” people in Latvia were pissed off. The new spelling was contrary to the rules our spelling system had, and you couldn’t have even a single word that has a different spelling contradicting the existing rules. People were dissatisfied, because Brussels was messing up Latvian spelling system. In a consistent spelling system like Latvian even the idea of a single word contradicting the usual rules makes people dissatisfied. Compare that with an inconsistent spelling system like English where nobody can even tell whether the spelling of some word contradicts the existing rules or not.

Does Latvia have a wide range of different accents?

One of the problems that many people seem to overlook when they propose the simplification of English spelling is that we have a very wide range of different accents, so if you were to try and do a straight 1:1 mapping from sounds to letters, either everything would be spelled differently in different parts of the country, or you’d have an inconsistent and variable mapping (some accents can modify certain sounds differently in different words). As soon as you allow some variation in how you map sounds to letters (say, by having everybody learn the “official” sounds for the most culturally dominant area of the country, which is more-or-less where the language we call English came from in the first place) then you start to run into problems as pronunciation drifts over time…

It will be interesting to see how the Latvian system holds up over time.

Dunc @#14

There is some regional variation. Quantifying it depends on where you draw the line between an accent, a dialect, and a different language. In Latvia there’s one region where pronunciation is very different from the rest of the country. If we call that one a dialect and not count it in, then in the rest of the country variations in pronunciation are smaller than those between various English dialects.

As for Italian (I really like Italian writing system), then there are a lot of regional variations. Those differ from each other way more than the differences between American, British, Irish, and Australian English.

Anyway, the existence of dialects doesn’t mean that a language must have a writing system as shitty as English. Spanish and Italian are examples of perfectly normal writing systems. German is sort of OK too. It’s not like these languages aren’t spoken differently in various places all over the world.

This one is an example from German. In what is considered the standard pronunciation within Germany, there’s a phoneme /ç/ and this sound gets represented with the letters ch. In various German regions (for example Mainz where I used to live), locals simply do not pronounce the /ç/ sound, instead they replace it with the /ʃ/ sound. This is an example of a single writing system working perfectly fine for various dialects. When somebody speaking the standard German sees the letters ch they pronounce them as /ç/, simultaneously somebody from Mainz would pronounce the same letters as /ʃ/, and nobody has any reason to complain about spelling. Of course, I know that often dialects don’t just replace one sound with another one, often the differences go beyond something as simple.

1:1 mapping is actually extremely rare for languages. Latvian is pretty far from a simple one-to-one letter–phoneme correspondence. Spelling is very dependent upon underlying grammar structures. Anyway, linguists call this “orthographic depth,” and differentiate between deep and shallow orthographies. English and French are considered deep orthographies. Finnish and Italian are generally given as examples for shallow orthographies with a high letter–phoneme correspondence. The thing is, I don’t even necessarily want a shallow orthography. I just want it to be consistent; I want it to make sense. I’m perfectly fine learning spelling rules for words where spelling differs from the pronunciation. I just want these rules to be consistent; what annoys me is being told “sorry, there are no rules, just memorize the spelling of every single word.”

Well, it was made almost 100 years ago, so plenty of time has already passed. So far, it’s been fine. There have been some changes (letters ŗ and ō got discarded from the alphabet, the spelling of some words got changed as people started pronouncing them differently), but there have been no significant problems so far.

Thanks Ieva, that’s very interesting…

I’d just note that there are far more variations within “British English” (whatever that is) than there are between American, Irish, and Australian English, and I that I don’t think 100 years counts as much time at all in such matters. Heck, there’s probably more variation between North and South Yorkshire than between American and Australian…

Oh, and I’m certainly not defending English orthography!