Artificial intelligence programmers are getting good results with self-training neural networks. That’s something I originally thought [stderr] wasn’t going to work, but I revised my opinion when Dota2 champion Dendi got beaten by a neural net that trained to play against a copy of itself. [stderr] The news is that a Google AI that had trained against itself defeated some humans and previous AIs that had trained against humans. [ars]

But there are some situations where an AI can train itself: rules-based systems in which the computer can evaluate its own actions and determine if they were good ones. (Things like poker are good examples.) Now, a Google-owned AI developer has taken this approach to the game Go, in which AIs only recently became capable of consistently beating humans. Impressively, with only three days of playing against itself with no prior knowledge of the game, the new AI was able to trounce both humans and its AI-based predecessors.

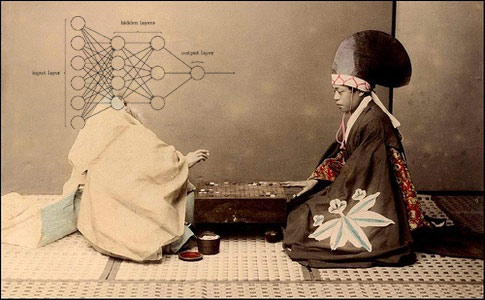

The Go Master

Watching the Dota2 game with Dendi, I could see that the AI’s play was similar to the human’s – it just didn’t make a single mistake and its fire control was slightly better balanced. In other words, it played like a human, but better; one of the possibilities I am wondering about is whether an AI that was not training against humans would invent its own new tactics. If it was playing against an AI that had trained against humans, and beaten them, can we expect it to play like the humans, only better?

One other thing that occurs to me is that with an AI we have things we can measure: how many neurons are involved in the AI’s “thinking” about each move? How many cycles does it take? How much memory gets accessed? Is there a way of determining which neurons in the AI are being used to ‘remember’ games versus ‘strategizing’? Can we tell them apart? Given an AI that plays Dota2 at a superhuman level, and an AI that plays Go at a superhuman level, can we make any inference about which game is ‘harder’ based on the AI’s internal computation? Would that match a human’s intuition about which game is ‘harder’? Note: I scare-quoted ‘harder’ because I have no idea what that means – perhaps ‘harder’ may come to be defined in terms of how many cycles of a certain type an AI needs to win.

The DeepMind team ran the AI against itself for three days, during which it completed nearly five million games of Go. (That’s about 0.4 seconds per move.) When the training was complete, they set it up with a machine that had four tensor processing units and put Zero against one of their earlier, human-trained iterations, which was given multiple computers and a total of 48 tensor processing units. AlphaGo Zero romped, beating its opponent 100 games to none.
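A quick back-of-the-envelope check on those numbers: three days is only 259,200 seconds, so five million games played one after another would leave about 0.05 seconds per whole game. That does not square with 0.4 seconds per move unless many games ran at the same time. The sketch below estimates how many. The figure of 200 moves per game is purely my assumption (the article does not give one), and `implied_parallel_games` is a made-up helper name, so treat the result as an order-of-magnitude guess.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def implied_parallel_games(days, games, seconds_per_move, moves_per_game):
    """Estimate how many games must run concurrently to fit the wall-clock budget."""
    wall_clock_seconds = days * SECONDS_PER_DAY
    # Total "thinking" time if every game were played back to back.
    total_thinking_seconds = games * moves_per_game * seconds_per_move
    return total_thinking_seconds / wall_clock_seconds


if __name__ == "__main__":
    # moves_per_game=200 is an assumption, not a figure from the article.
    estimate = implied_parallel_games(
        days=3, games=5_000_000, seconds_per_move=0.4, moves_per_game=200
    )
    print(f"Roughly {estimate:.0f} games in flight at once")
```

Under those assumptions the answer comes out on the order of 1,500 simultaneous games; halving or doubling the assumed game length moves the estimate proportionally.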

Tests with partially trained versions showed that Zero was able to start beating human-trained AIs in as little as a day. The DeepMind team then continued training for 40 days. By day four, it started consistently beating an earlier, human-trained version that was the first capable of beating human grandmasters. By day 25, Zero started consistently beating the most sophisticated human-trained AI. And at day 40, it beat that AI in 89 games out of 100. Obviously, any human player facing it was stomped.
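To put "89 games out of 100" in perspective, here is a rough sketch that converts a head-to-head win rate into a rating gap. It assumes the standard logistic Elo model, which the quoted article does not mention, and `elo_gap` is a hypothetical helper of my own, not anything from DeepMind.

```python
import math


def elo_gap(wins, games):
    """Rating difference implied by a win rate under the logistic Elo model.

    Assumes no draws and 0 < wins < games; a clean sweep implies an
    unbounded gap, so it is rejected rather than estimated.
    """
    if not 0 < wins < games:
        raise ValueError("win rate must be strictly between 0 and 1")
    win_rate = wins / games
    # Elo model: win_rate = 1 / (1 + 10 ** (-gap / 400)), solved for gap.
    return 400 * math.log10(win_rate / (1 - win_rate))


if __name__ == "__main__":
    print(f"Implied rating gap: {elo_gap(89, 100):.0f} points")
```

Under that model, 89 wins in 100 works out to a gap of about 363 rating points. The 100-to-none result quoted earlier cannot be converted this way, which is why the function refuses it.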

So what did AlphaGo Zero’s play look like? For the openings of the games, it often started with moves that had already been identified by human masters. But in some cases, it developed distinctive variations on these. The end game is largely constrained by the board, and so the moves also resembled what a human might do. But in the middle, the AI’s moves didn’t seem to follow anything a human would recognize as a strategy; instead, it would consistently find ways to edge ahead of any opponent, even if it lost ground on some moves.

We can’t transfer assumptions between human cognition and AI cognition, so we won’t be able to dissect how the AI thinks and make inferences about how a human thinks. I wonder if AI will help us learn more about ourselves.

My bet would be no. Humans have had a wealth of opportunities to learn about themselves, often by applying a very simple rubric, like learn from history. So far, we’ve utterly failed on that one. I have no doubt there will be a tonne of pretentious bilgewater tossed about, making all manner of claims.

It seems that this answers your question in the affirmative:

This has happened before:

That’s from 1992. I’m not surprised it’s happening again. At some point, we’ll learn new things about useful domains, I hope (with some anxiety, too: in real-life fuzzily defined problems, there’s immense scope for garbage in, garbage out).

Caine #1

I tend to think that learning from history is a very difficult and tricky business. Certainly history provides countless examples of human behavior, but the meaning of it all is often not very clear, and what a person takes away from studying history often has more to do with their preexisting beliefs and values than the history they study.

On a different note, I think that scientists are learning quite a bit about how the human brain works, although they’re still in the early stages and have an awful lot to learn. In that sense, people are finally starting to get a rudimentary scientific understanding of how their own minds work, after thousands of years of speculation.

Have you seen this?

I imagine the singularity people are getting ready to throw a party, though it may yet be premature. Neural networks really are stupendously powerful tools, however, and I think making them more accessible will be a good thing.

Of course, I’m also reminded of Asimov’s “The Last Question”. We may not know exactly why a particular NN does what it does, but the experts are intimately familiar with the overall design. That may soon be a thing of the past.

I, for one, welcome our robot overlords.

Eric Weatherby@#5:

I, for one, welcome our robot overlords.

Me too! Could they be worse than the mass of plutocrats that run the world now?

Caine@#1:

Humans have had a wealth of opportunities to learn about themselves, often by applying a very simple rubric, like learn from history. So far, we’ve utterly failed on that one. I have no doubt there will be a tonne of pretentious bilgewater tossed about, making all manner of claims.

I wonder if we do; I think we’re mostly short-term thinkers but we try to learn from history. It’s hard – the lessons of history aren’t clear; it’s too easy to convince oneself “this time it’ll be different” because often, it is.

cvoinescu@#2:

That’s from 1992. I’m not surprised it’s happening again. At some point, we’ll learn new things about useful domains, I hope (with some anxiety, too: in real-life fuzzily defined problems, there’s immense scope for garbage in, garbage out).

Yes, I recall you pointed that out before, back when I still believed self-reinforced learning was not going to result in superior performance. It was part of the information that got me thinking and reversed my position.

This is really interesting stuff.

Anders Kehlet@#4:

Have you seen this?

I imagine the singularity people are getting ready to throw a party, though it may yet be premature. Neural networks really are stupendously powerful tools, however, and I think making them more accessible will be a good thing.

I hadn’t. That’s … really interesting. I think there are still going to be problems with respect to figuring out how to tell an AI what you think an application should do. On the other hand, anyone who’s worked with software engineers will have already experienced that problem.

Another thing I am wondering, now: suppose an AI achieves superhuman capability in tasks where success is difficult for us to program – how will a human be able to tell if the AI is doing a good job?