In computer security, transitive trust is when system A trusts B and system B trusts C; in that case system A also trusts C, but usually doesn’t realize it.
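Put in graph terms, transitive trust is reachability: follow the “trusts” arrows, and everything you can reach is something you end up trusting. Here is a minimal Python sketch. It assumes trust can be modeled as a dictionary of direct “who trusts whom” edges, and the function and variable names are made up for illustration, not taken from any real tool:

```python
from collections import deque


def effective_trust(direct: dict[str, set[str]], start: str) -> set[str]:
    """Return every system that `start` ends up trusting, directly or transitively.

    Hypothetical model: `direct` maps each system to the set of systems it
    trusts directly. A breadth-first search follows those trust edges until
    no new systems turn up.
    """
    seen: set[str] = set()
    queue = deque(direct.get(start, set()))
    while queue:
        node = queue.popleft()
        if node in seen or node == start:
            continue  # already counted, or a cycle leading back to ourselves
        seen.add(node)
        queue.extend(direct.get(node, set()))
    return seen


# A trusts B, B trusts C: A never listed C, but C is in A's trust set anyway.
trust = {"A": {"B"}, "B": {"C"}}
print(sorted(effective_trust(trust, "A")))  # ['B', 'C']
```

The interesting part is the gap between input and output: A only ever wrote down B.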

Back in the early days of firewalls and internet security, we used to refer to “island-hopping” attacks: a system would get compromised, and the attacker would use it to launder credentials to get onto another one. The first instance I saw started with a misconfigured FTP service on one workstation in a cluster of Sun workstations (3/50s running SunOS 3). The attacker was able to download the system’s password file and crack a user’s password. All the systems in that workgroup were set up with .rhosts files so that a user on one could rlogin to the others without a password, which put the attacker on the main server where all the email was stored. After that, the attacker searched all the email for ‘password’ and jumped off into a cluster of systems at a research department, where the same process repeated itself. In a sense, a great many of today’s attacks are transitive trust attacks based on the transitivity of credentials: if your eBay username and password are the same as your Amazon username and password, and you use the same username and password on FTB, you’re one WordPress security hole and a password cracker away from losing credentials that may be tied to a credit card.
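The island-hop is the same reachability idea applied from the attacker’s side. Each .rhosts entry or reused password is an edge, and the attacker is looking for a path from the foothold to something worth having. Below is a minimal sketch that assumes a hand-built access map with made-up hostnames. It illustrates the graph search and is not a real scanning tool:

```python
from collections import deque
from typing import Optional


def hop_path(access: dict[str, list[str]], foothold: str, target: str) -> Optional[list[str]]:
    """Shortest chain of hops from `foothold` to `target`, or None if unreachable.

    An edge X -> Y means "whoever controls X can get onto Y without new
    credentials" (an .rhosts entry, a reused password, a shared key, ...).
    """
    parent: dict[str, Optional[str]] = {foothold: None}
    queue = deque([foothold])
    while queue:
        host = queue.popleft()
        if host == target:
            # Walk the parent pointers back to the foothold, then reverse.
            path = []
            node: Optional[str] = host
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in access.get(host, []):
            if nxt not in parent:
                parent[nxt] = host
                queue.append(nxt)
    return None


# Hypothetical hostnames loosely modeled on the story above.
access = {
    "ws-ftp": ["ws-2", "mailserver"],   # cracked password + .rhosts
    "ws-2": ["mailserver"],
    "mailserver": ["research-gw"],      # password found by grepping email
    "research-gw": ["research-db"],
}
print(hop_path(access, "ws-ftp", "research-db"))
# ['ws-ftp', 'mailserver', 'research-gw', 'research-db']
```

Breadth-first search returns the shortest chain of hops. A real attacker doesn’t care about the shortest one, but the defender’s question is the same either way: does any path exist at all?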

If the user’s a blockhead, the data’s about to leak

Transitivity is all over the place: user credentials, host-to-host (as in the rlogin example), and data. This is, again, basic computer security stuff; it’s not “advanced”, it’s obvious. That’s one reason I get really annoyed when someone gets hacked and tries to make it sound like super duper cyberninjas owned them: most of these incidents are failures of basic tactics. It drives me nuts when people talk as though sophisticated attacks are being used; the problem is that some people’s understanding of security is so bad that they can hardly be said to have any at all. You may have heard stories of penetration testers who’ve been able to trade a Snickers bar for a user’s password; that’s how bad it is. One doesn’t need to invoke nation-state actors: it’s as if the data wants to leak out.

Transitive trust with data looks like this: A gives their data to B and tells B “be careful with it”, then B gives A’s data to C and tells C “be careful with it”. A thinks their data is being protected, but what if C is a bad custodian of the data? It is a simple numbers game: the more the data gets spread around, the more likely it is that one of its holders will make a mistake. That’s a passive form of transitive trust attack. What about an active one, where someone hypothesizes that C might hold A’s data and compromises C? For example, if you wanted to learn what the leadership of some political party is doing, compromising the email of one of their marketing consultants is likely to give you a lot of good insights.

In the intelligence community prior to 9/11, data was shared on a “need to know” basis: nobody got any particular data unless they absolutely had to have it, so as to limit its spread. After 9/11, a directive from one Bush Administration big shot consultant was to switch to “need to share.” The reasoning was that “need to know” forced data holders to subdivide and analyze data, and that was hard. Let me give you an example: in the old days, the US State Department’s cables would have been subdivided on a “need to know” basis by area of interest, so only people who needed the cable traffic between, say, Benghazi station and Washington would get it. Under the new “need to share” doctrine the priority was flipped to “make it easier to share”, and that’s how the State Department wound up just hosting the entire mass of cable traffic on a server on SIPRnet. The State Department’s transitive trust model was “we trust SIPRnet”, which was probably a really bad idea because SIPRnet has 4.2 million authorized users.
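The “simple numbers game” can be made concrete. If each of n holders independently has some probability p of mishandling the data, the chance that at least one of them does is 1 - (1 - p)^n. Both the independence and the equal p are simplifying assumptions, and the 1% figure below is made up for illustration:

```python
def leak_probability(p_mistake: float, holders: int) -> float:
    """Chance that at least one of `holders` custodians mishandles the data.

    Simplifying assumption: every holder independently has the same
    probability `p_mistake` of making a mistake, so P = 1 - (1 - p)^n.
    """
    if not 0.0 <= p_mistake <= 1.0:
        raise ValueError("p_mistake must be between 0 and 1")
    if holders < 0:
        raise ValueError("holders must be non-negative")
    return 1.0 - (1.0 - p_mistake) ** holders


# Made-up 1% per-holder mistake rate, for increasingly wide sharing.
for n in (1, 3, 10, 100):
    print(n, round(leak_probability(0.01, n), 3))
```

With that made-up 1% rate, the loop prints 0.01, 0.03, 0.096 and 0.634 for 1, 3, 10 and 100 holders. Once the data has spread to 100 custodians, a leak is more likely than not.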

“Need to share” is basically “doomed to leak.” Around the time of the changeover, I was invited to be on a “senior industry review group” for the new network’s policy (I was a consultant to Booz-Allen for NSA’s X Group), and the group’s conclusion was “don’t.” There were a few more words than that, but it’s a fair summary. Now, I suppose, we are at “I told you so.” Back in the day, I attempted to give a presentation defining some of the “natural laws” of security (I was naive!), in which I argued that transitive trust defines a “trust boundary” and that eventually the trust boundary will include someone it shouldn’t; that is why “need to know” was always going to be better than “need to share.” I think it’s safe to say, however, that people have always known that “the more people who are in on a secret, the shorter it lasts.” Or, as Benjamin Franklin allegedly said:
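The difference between the two doctrines comes down to where the access check draws the trust boundary. The toy sketch below assumes each user has a flag for being on the network plus a set of “compartments” (areas of interest) they actually need. The users, compartments, and function names are all hypothetical and don’t model any real government system:

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    on_network: bool                                     # holds a network account
    compartments: set[str] = field(default_factory=set)  # areas of interest they need


def need_to_share(user: User, cable_area: str) -> bool:
    # cable_area is deliberately ignored: anyone inside the network's
    # trust boundary can read everything.
    return user.on_network


def need_to_know(user: User, cable_area: str) -> bool:
    # Network access is necessary but not sufficient: the user must also
    # hold the compartment for this particular area of interest.
    return user.on_network and cable_area in user.compartments


users = [
    User("libya_desk", True, {"benghazi"}),
    User("logistics_clerk", True),
    User("contractor", True, {"procurement"}),
]

for policy in (need_to_share, need_to_know):
    readers = [u.name for u in users if policy(u, "benghazi")]
    print(policy.__name__, readers)
# need_to_share ['libya_desk', 'logistics_clerk', 'contractor']
# need_to_know ['libya_desk']
```

Under `need_to_share` every account on the network is inside the boundary for every cable. Under `need_to_know` the boundary shrinks to the people holding the matching compartment, which is the whole point.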

Three may keep a secret, if two of them are dead.

obligatory F-35 picture

So, all that brings us around to the news of the day: F-35 secrets have leaked again. [bbc] This ought to be entirely expected. As I mentioned in earlier posts about the F-35, it’s being parted out as pork for every component manufacturer in a NATO member country, so anyone who works for any company that makes any part of the F-35 probably has access to specifications.

Sensitive information about Australia’s defence programmes has been stolen in an “extensive” cyber hack.

About 30GB of data was compromised in the hack on a government contractor, including details about new fighter planes and navy vessels.

The data was commercially sensitive but not classified, the government said. It did not know if a state was involved.

Australian cyber security officials dubbed the mystery hacker “Alf”, after a character on TV soap Home and Away.

The breach began in July last year, but the Australian Signals Directorate (ASD) was not alerted until November. The hacker’s identity is not known.

“It could be one of a number of different actors,” Defence Industry Minister Christopher Pyne told the Australian Broadcasting Corp on Thursday.

“It could be a state actor, [or] a non-state actor. It could be someone who was working for another company.”

Mr Pyne said he had been assured the theft was not a risk to national security.

Mr Pyne doesn’t think the theft is a risk to national security because he’s being lied to. What the theft indicates is not that the F-35 is no longer secret (seriously, who’d want to build one of those?) but that there is a deeper problem of data trust and transitive trust: the mechanisms for keeping secret stuff secret aren’t working. This is the equivalent of opening your flour bin and seeing a weevil: it means that if you examine your flour bin at all, you will discover you have more weevils than flour, and you put down your fork and push away your pancakes.

Stop me if this is getting repetitive:

Mr Clarke told a Sydney security conference that the hacker had exploited a weakness in software being used by the government contractor. The software had not been updated for 12 months.

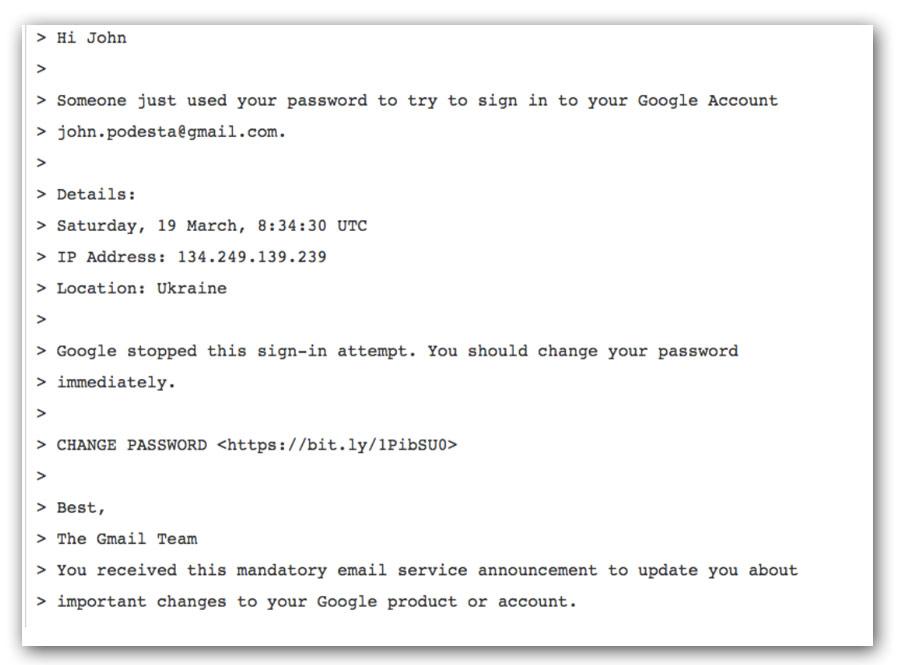

A shared data with B, who shared data with C, who shared data with D, who shared data with E, who shared data with F, who had a bog-simple software vulnerability in their Outlook client and clicked on the wrong email, and now A’s secrets are compromised. (I made up the part about Outlook, and I don’t know how long the chain of sharing was, but that’s probably a plausible scenario.)
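A sharing chain like that is only as strong as its weakest custodian. The minimal sketch below assumes each custodian can be summarized by how many days their software has gone unpatched. The custodians, the numbers, and the 30-day threshold are all hypothetical; F’s 365 days mirrors the 12 months in the quote above:

```python
from dataclasses import dataclass


@dataclass
class Custodian:
    name: str
    days_since_patch: int  # hypothetical metric: age of the stalest software they run


def weak_links(chain: list[Custodian], max_patch_age: int = 30) -> list[str]:
    """Names of every custodian in a sharing chain whose software is stale.

    Every custodian holds the original owner's data, so a single stale
    entry anywhere in the chain exposes all of it.
    """
    return [c.name for c in chain if c.days_since_patch > max_patch_age]


chain = [
    Custodian("A", 3), Custodian("B", 10), Custodian("C", 14),
    Custodian("D", 7), Custodian("E", 21), Custodian("F", 365),
]
print(weak_links(chain))  # ['F']
```

A can patch religiously and still lose: nothing in A’s own row affects the result.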

Meanwhile, a short distance away, another transitive trust problem crops up, in Korea: [nyt]

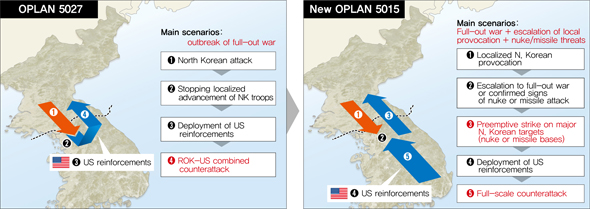

SEOUL, South Korea — North Korean hackers stole a vast cache of data, including classified wartime contingency plans jointly drawn by the United States and South Korea, when they breached the computer network of the South Korean military last year, a South Korean lawmaker said Tuesday.

One of the plans included the South Korean military’s plan to remove the North Korean leader, Kim Jong-un, referred to as a “decapitation” plan, should war break out on the Korean Peninsula, the lawmaker, Rhee Cheol-hee, told reporters.

Oh, how awkward. A shared data with B who screwed the pooch and now A’s secrets are compromised. Listen for the sound of the other shoe dropping:

Investigators later learned that the hackers first infiltrated the network of a company providing a computer vaccine service to the ministry’s computer network in 2015. They said the hackers operated out of IP addresses originating in Shenyang, a city in northeast China that had long been cited as an operating ground for North Korean hackers.

Your secret plans aren’t so secret

A shared data with B, and B allowed C into their network in order to provide services and C screwed the pooch.

Some of you may have noticed that I am cynical, sarcastic, and highly critical of government computer security, and this is why. This is introductory-level stuff that has been understood as part of “data governance” and basic competence for a very long time. Government persists in acting as though the laws of nature will magically not apply to their poor data management, because “cost savings!”, and then they have to pretend to be all surprised when their stupidity catches up to them. When the surprise settles in, they blame hyper-sophisticated nation-state cyberninjas, when in fact the attack was as simple as “stealing email from John Podesta”; it’s kids’ stuff. Admitting that you failed to demonstrate basic competence is unacceptable, so: let’s inflate the threat.

By the way, note the “preemptive strike” in OPLAN 5015. Oh, boy, war of aggression. This would be embarrassing if the US weren’t immune to embarrassment.

The Australian government is good at leaking data. Also good at changing laws to collect heaps of data on citizens to “protect us”. It’s a shitstorm waiting to happen.

Do not despair. Private companies are just as bad as government. Because, you guessed it, doing things correctly costs money, and “savings!” is the theme of the day, year and century.

OPLAN 5015 would appear to justify the North Korean fears about the US, wouldn’t it? They are not paranoid; the US is out to get them!

“Proactive defense”, aka attacking, is fine when Israel does it. I wonder if Korea gets that same leeway?

FLAMIN’ MONGREL!

polishsalami@#5:

Huh?

Holms@#4:

I think we are moving into a political era where authoritarians have decided they no longer need to pretend. Rule by force is edging out pretend democracy: Orwell was a few decades off.

AndrewD@#3:

No! They are attacking us by forcing us to attack them in order to defend ourselves!

I didn’t think that Marcus would be familiar with any of Alf Stewart’s catchphrases.

polishsalami@#9:

I didn’t think that Marcus would be familiar with any of Alf Stewart’s catchphrases.

I failed some kind of test. (Busts a scroll of Google) Aha.

I remember back in the early 90s, when co-workers started saying “D’oh” and I finally asked one of them “what is that sound you keep making?”