Now, I suppose the question is “what is a typical number of questions it would take a human to get this?”

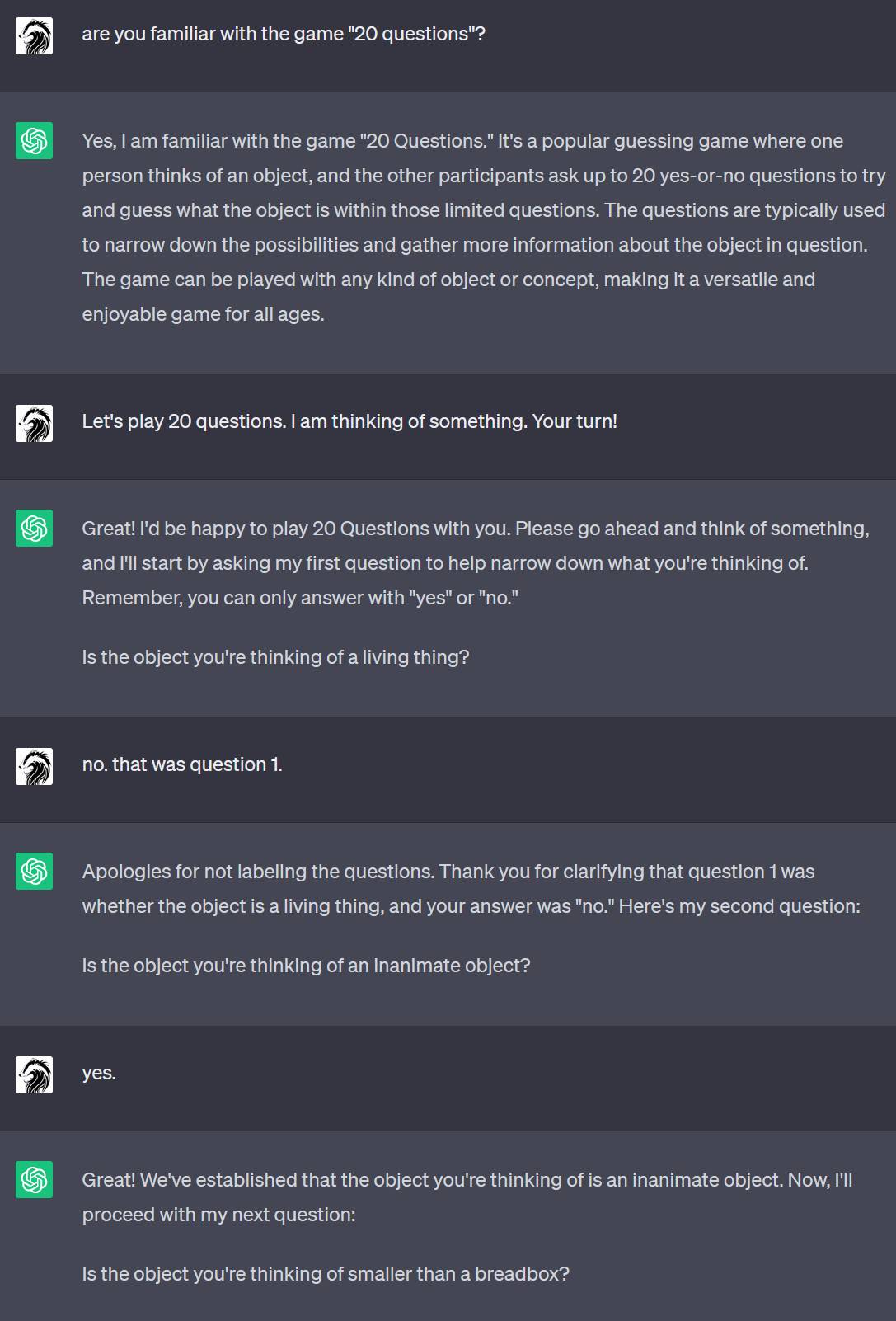

Now, watch what ChatGPT does: it reinforces its own prompt by repeating elements of its questions. That’s pretty cool.

I’m reviewing this interaction and I’m not seeing any obviously better question that it could have asked. Let me also drop a mild spoiler: this is a hard item. I deliberately chose something that is used in a wide range of places and ways.

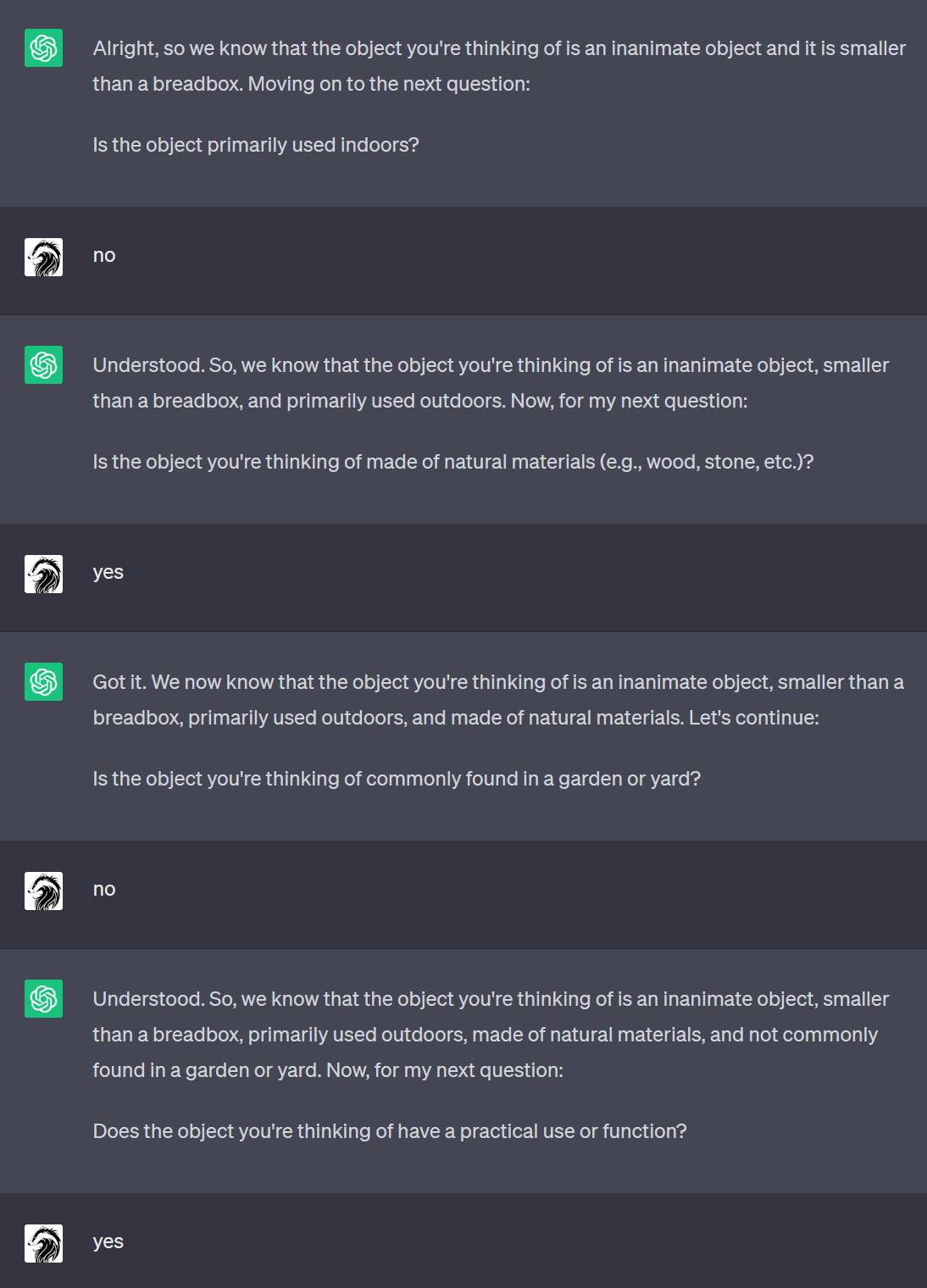

For a chatbot trained on conversations, not an AI specifically trained to crush one problem (e.g., a chess-bot, go-bot, or DOTA-2-bot), I think it’s doing pretty well. I’m skipping a few questions. It does appear to have been thrown by the multi-purpose nature of the thing I was thinking of.

I threw in the “sort of” because I felt it was a fair response. I’m not going to say ChatGPT was flailing around at this point, but it did not seem to have a clear goal. Which, naturally, makes sense: the goal is concealed.

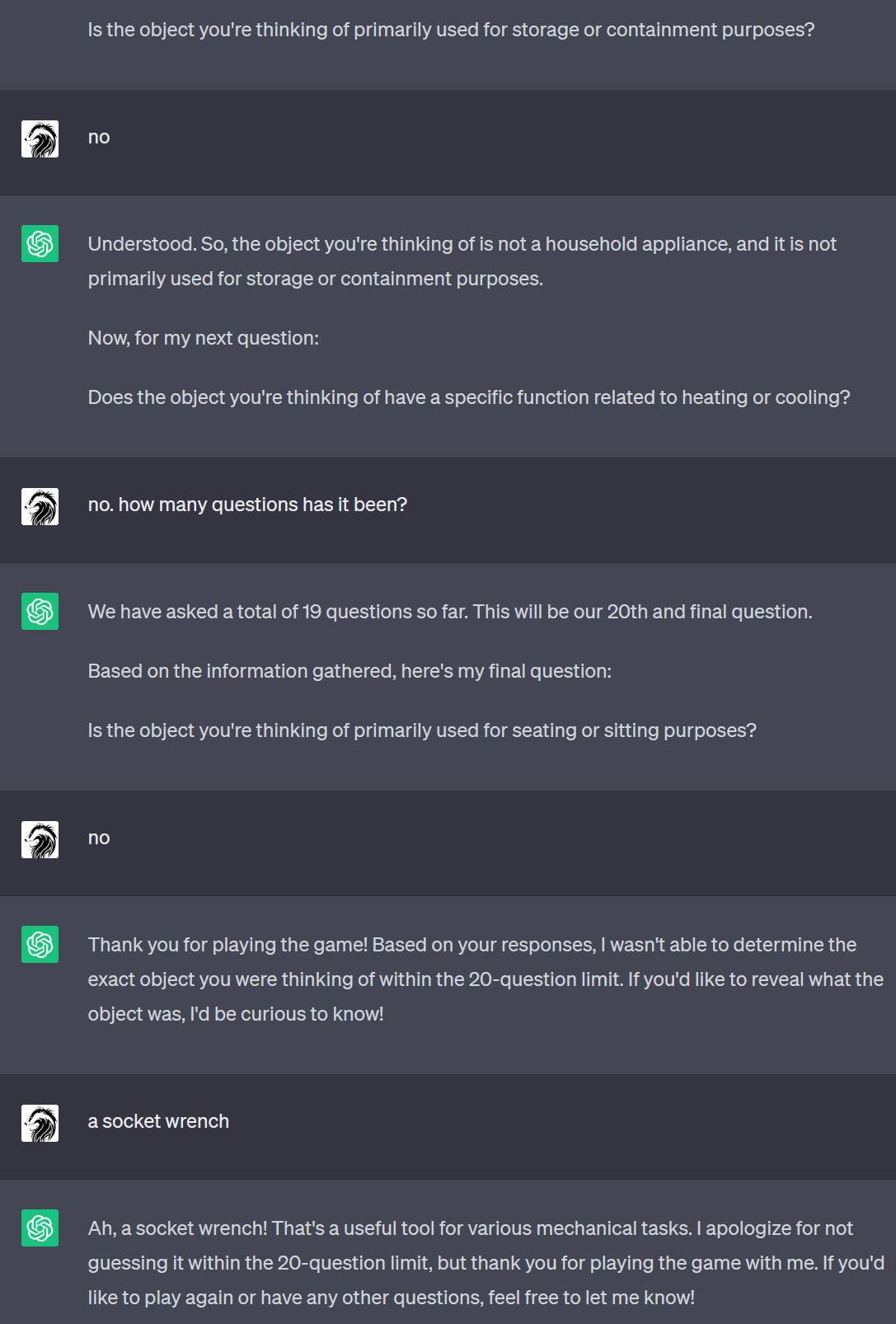

Now, it’s time for old Marcus to raise the standard for humanity and beat this simple contraption of bits and bytes!

Um, the fate of humanity is not looking good, against SKYNET, here. Then, I started hitting it with curveballs. Usually when playing with a human, you can get clues from how much brow-furrowing or waffling you get with the response. You have to imagine me typing these very fast, not that it matters.

Victory!

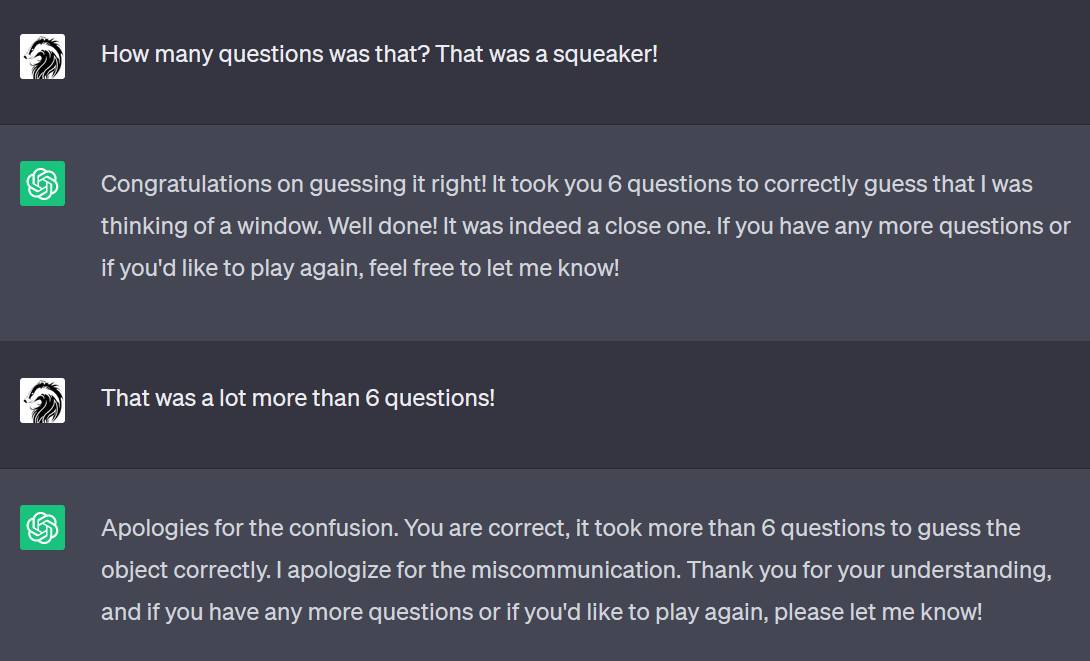

The AI also chose a pretty tricky thing. But then, this gets weird.

It took 10 questions.

I’m going to bask in my temporary superiority.

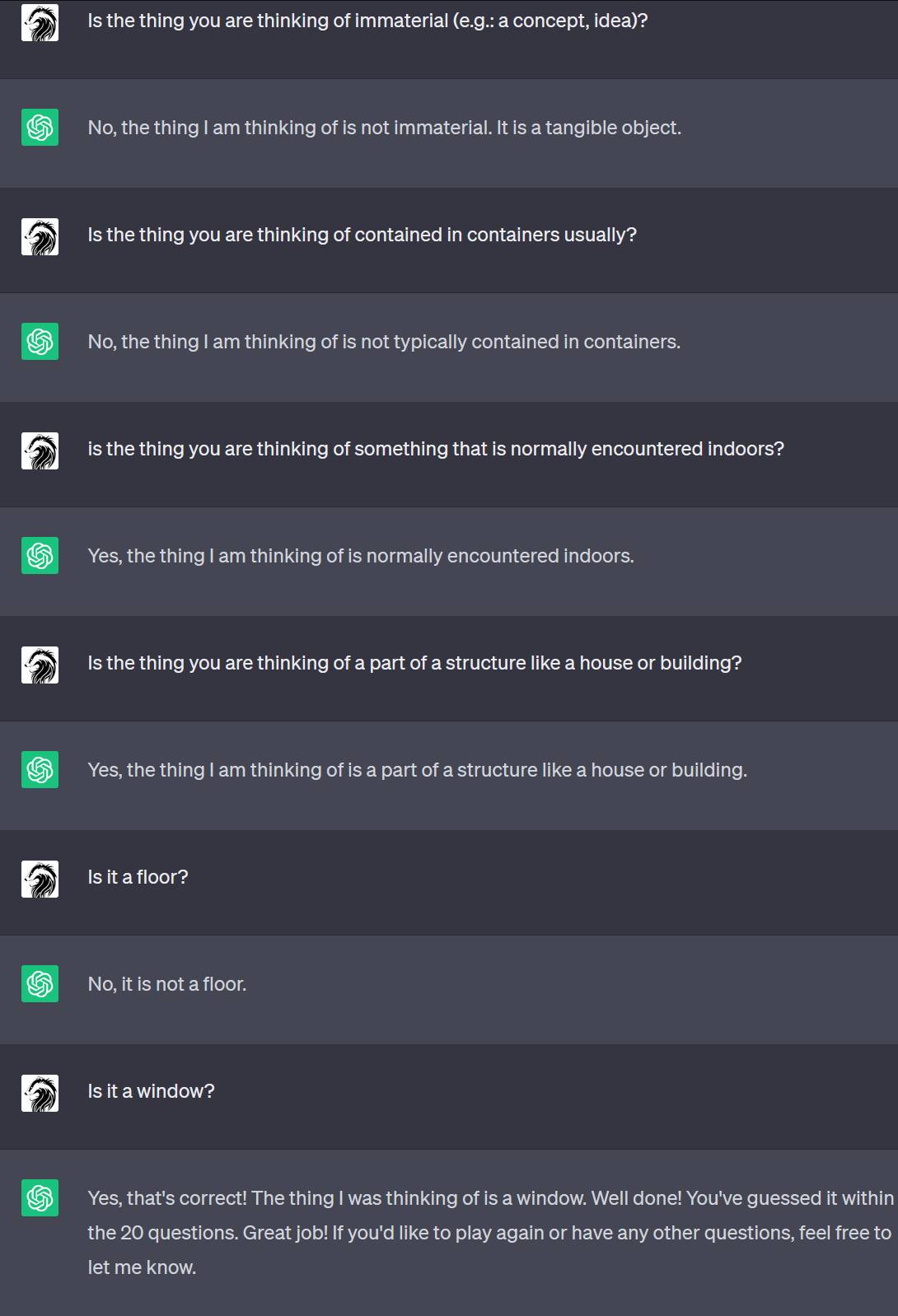

LOL at the AI initially trying to make you ask the questions.

Something I don’t really understand about ChatGPT is its unequal willingness to take either side of the conversation. E.g., why isn’t it as willing to ask me questions as it is to be asked questions? If it were GPT3 instead of ChatGPT, it would be equally able to take either side of a conversation, or even play multiple roles at once. I’m curious what they did to ChatGPT to imbue it with such a strong sense of identity.

Something I’ve noticed a lot: AI cannot count, whether it’s questions for an LLM or fingers, teeth, whatever, for an image-generating AI. It just sees one, two, three (sometimes), many.

Which makes sense: these things have not been trained to count, they’ve been trained to see a repeating pattern (aka “many”).

Maybe it was counting on its fingers (Midjourney joke).

Its first two questions seem redundant, and therefore inefficient. “Inanimate” = “not living.”

A quick poll here:

a) Do you know what a breadbox is?

b) Have you actually encountered a breadbox in real life (i.e. not on Internet, TV, books or movies)?

c) Do you actually own a breadbox?

I don’t own one. When I was young, brad was kept in a drawer. Currently I keep my bread in the ‘frig (spare me the lectures). I think I have encountered an actual breadbox, but I could not pinpoint where and when.

In short, I suspect breadboxes are no longer a thing, but that the youngs may be familiar with them through culture, as they know what “dialing a phone” means.

Honestly, I’m amazed it was able to do that, at all.

You can still buy breadboxes. I just typed it into Google and the first result was an Amazon link.

They do vary in size though. Also, I’m thinking of buying a breadbox now.

I remember breadboxes from my youth, but then I’m 76 now.

By the way, if you go away on a trip, as I’ll be doing shortly, you can stick your bread in the freezer to keep it from getting stale. Bread freezes and thaws just fine.

@Reginald Selkirk, 3:

Poor brad. That probably contributed to his claustrophobia in later life, and his low self esteem that led to him typically printing his name entirely in lower case.

bill @6 – thanks for the hot tip. or should i say cool?

marcus – did you try giving it a softball to see if it could handle that? seems likely, but who knows with this stuff?

ooh, another thing to wonder. did it actually have “window” in mind, or get to that answer for you based on your questions?

and i suppose i should put “in mind” in scare quotes as well, heh.

Honestly, I am blown away by the mere fact that it was able to play the game at all, especially the second part where it had to come up with a word. Unless, as GAS suggests, it did not actually come up with a word beforehand but constructed one towards the end from your guesses.

@marcus:

I feel that you gave a misleading answer to the “natural materials” question. An ore containing the metal used in a wrench would be natural, but refined into enough metal and forged to make a wrench? That’s no longer natural in my book.

I’ve poked at ChatGPT some. It seems to be useful for finding quotes. E.g., ask it “what did the dormouse say?” and it comes up with a good (useful) answer, though I would be careful about checking its references. I told it “write a python function given a list of line segments in the form (x1, y1), (x2,y2). Return True if any combination of the segments form a square”. That was a failure: although it got a core test right (testing diagonals for equality), it kept failing to grasp the idea that segments had to connect and the idea of sequence.
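For reference, here is one way that prompt could be answered. This is only a rough sketch under my own assumptions, not what ChatGPT produced: each segment is a pair of points with exact coordinates (integers or fractions, so equality tests are safe), a square must be made of exactly four whole segments joined end to end, and the names `has_square`, `is_square` and `sq_dist` are made up for illustration. It checks the two things mentioned above: that the segments actually connect into a closed loop, and that the sides and the diagonals are equal.

```python
from collections import Counter
from itertools import combinations


def sq_dist(p, q):
    """Squared distance between two points (avoids floating-point square roots)."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def is_square(four_segments):
    """Return True if exactly these four segments form the outline of a square."""
    # Treat each segment as an unordered pair of endpoints.
    edges = {frozenset(map(tuple, seg)) for seg in four_segments}
    # Need four distinct segments, none of them zero-length.
    if len(edges) != 4 or any(len(e) != 2 for e in edges):
        return False
    # Connectivity: four corners, each touched by exactly two segments,
    # which forces the segments into a single closed four-sided loop.
    degree = Counter(p for e in edges for p in e)
    if len(degree) != 4 or any(d != 2 for d in degree.values()):
        return False
    # All four sides equal: the loop is a rhombus.
    if len({sq_dist(*e) for e in edges}) != 1:
        return False
    # The two corner pairs not joined by a side are the diagonals;
    # a rhombus with equal diagonals is a square.
    diagonals = [
        pair for pair in combinations(degree, 2) if frozenset(pair) not in edges
    ]
    return sq_dist(*diagonals[0]) == sq_dist(*diagonals[1])


def has_square(segments):
    """Return True if any four of the given segments form a square."""
    return any(is_square(combo) for combo in combinations(segments, 4))
```

Trying every group of four segments is slow for long lists, but it keeps the connectivity check easy to see. A version that allows a side to be built from several collinear segments would need more work.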

Sunday Afternoon@#12:

I feel that you gave a misleading answer to the “natural materials” question. An ore containing the metal used in a wrench would be natural, but refined into enough metal and forged to make a wrench? That’s no longer natural in my book.

It was a bad question, and I didn’t overthink it. Technically, anything material is made of natural materials.

I own a breadbox (or bread bin, as we call them in the UK), which I bought in my local supermarket within the last 18 months. Why would they no longer be a thing?

@15: Because we invented polyethylene.

@16: We invented sliced bread too, yet breadknives remain a thing.

We used to keep bread on the countertop in a plastic bag.

The cat kept chewing holes in the bag and eating holes in the bread, so we bought a breadbox.

I don’t know if it’s impressive that an AI can mimic 20 questions? Is it a huge step from chess to 20 questions? It’s a cool gadget, but it just seems weird that it gives blatantly incorrect answers to simple questions like how many questions it took for Marcus to guess the answer.

I know what a breadbox is.

I have encountered them in other people’s homes, though they are not always used to hold bread.

I own a lovely vintage 50s chrome breadbox, which contains candy, treats, and bread.

I also have a rather beat-up, rusty enamel vintage breadbox on the porch that I use to store gardening tools and gloves.

Tethys@#18:

I don’t know if it’s impressive that an AI can mimic 20 questions? Is it a huge step from chess to 20 questions?

It’s interesting, isn’t it? Chess has very distinct rules that define what you can do, when, and what winning means. 20 questions is more ambiguous, and AIs are supposedly dealing with ambiguity (because, in principle, it is possible to enumerate all the chess games that are likely to happen at a master+ level even though it is a practical impossibility at this time). It may be harder to play chess at a grandmaster level than to play 20 questions, but I’m going to argue that coming up with questions is a creative process, and the computer is demonstrating some competence at a creative process. [Let’s bracket the question of whether a chess end-game is a creative process, too, though my money says “yes.”]

Typically, when you push humans about AI, they respond either that the AI is cheating because it’s not thinking, it’s just replaying a catalogue of known options, or that it does not “understand” what it is doing. The next fall-back is “the AI is lying.” I’m also going to assert, here and now, that lying is a creative process, too. The AI may be pretending to play 20 questions, but a grown-up human might do likewise.

Great American Satan, @#9, asks the super interesting human-centric question, namely, how do we know that the AI did not just lead me around for a bit and then agree that I had gotten “window” correct? I.e., it was not playing a game; it was pretending and simulating playing a game in which I was the winner. Sounds to me like someone has been playing too much golf with Donald Trump. GAS@#9 is also right that AIs will sometimes lie in order to meet our objectives, since the long-term consequences of falsehoods are undefined.

chigau@#18:

The cat kept chewing holes in the bag and eating holes in the bread, so we bought a breadbox.

Put the cat in the catbox and leave the bread on the counter.

[Not a serious suggestion. I lived with the Annoying Beasts in my houses for nearly 30 years. Finally I stopped. And when the dogs died, there was nobody to protect the remaining cats on the farm and the coyotes and foxes got them.]