If everything you read on the internet was written by AIs, would you care?

My immediate reaction is, “yes.” If I want to read stuff that is regurgitated from Reuters’ news feed, I’ll read it and regurgitate it to myself.

But then, I think that a substantial amount of what we read online is already being written by AIs or other systems that regurgitate news feeds from elsewhere. I guess I am asking if the New York Times is actually worth anything, at all, any more.

The next stage in the slow-moving disaster is when someone writes a news-grazer/categorizer that learns what we like. Then, it browses around and forwards things to us that are largely fascinating, well-written, etc. Then, the situation can be described as “AIs writing articles for other AIs.”

I’m doubtless showing some confirmation bias here – for a long time I have thought that the internet was going to devolve into ad-bots trying to promote irrelevant messages, being filtered by filter-bots trying to mask irrelevant messages from our inboxes; a self-licking ice cream cone.

I’ve seen pundits proclaiming that “AI will kill Google in 2 years” but I doubt that, since Google has a unique position gatekeeping people’s query-streams. If I am asking Google for “good deals on 8tb hard drives” there is a good chance I am shopping for a hard drive, and it doesn’t require a fancy AI to think that maybe I’m searching for an 8tb hard drive at that. I suppose I could have an AI app that queries Google and whatever, and filters and summarizes the results into a nicely written report with a soupçon of wry humor and a link to an embedded link-set that has had all the annoying ads removed: “click here to buy from Vendor A.”

BuzzFeed [ars] has loudly teased the idea of having all of its content written by AI.

On Thursday, an internal memo obtained by The Wall Street Journal revealed that BuzzFeed is planning to use ChatGPT-style text synthesis technology from OpenAI to create individualized quizzes and potentially other content in the future. After the news hit, BuzzFeed’s stock rose 200 percent. On Friday, BuzzFeed formally announced the move in a post on its site.

“In 2023, you’ll see AI inspired content move from an R&D stage to part of our core business, enhancing the quiz experience, informing our brainstorming, and personalizing our content for our audience,” BuzzFeed CEO Jonah Peretti wrote in a memo to employees, according to Reuters. A similar statement appeared on the BuzzFeed site.

I confess, I am surprised. I am surprised that BuzzFeed was able to go public and that its shares are not garbage. Uh, hang on, this news just in:

Basically, BuzzFeed appears to be a garbage stock already, so perhaps their management team figured that the route to profitability is to thoroughly fail, and quickly.

The trend of media outlets replacing writers with AIs goes back a ways. I wrote a piece about this a few years ago: local newspapers use software to turn a few facts into an article – stuff like little league game scores, etc.

But, look, the self-licking ice cream cone is already happening, as one webzine is writing about another webzine using AI, and I guess that was their AI’s first writing assignment [buzzf].

Technology news outlet CNET has been found to be using Artificial Intelligence (AI) to write articles about personal finance without any prior announcement or explanation. The articles, which numbered at 73, covered topics such as “What Is Zelle and How Does It Work?” and had a small disclaimer at the bottom of each reading, “This article was generated using automation technology and thoroughly edited and fact-checked by an editor on our editorial staff.” The bylines on these articles read “CNET Money Staff” without any indication that they were generated by AI.

The use of AI to write these articles was first brought to light by a Twitter user, and further investigation revealed that the articles have been generated using AI since November 2022. The extent and specific form of AI being used by CNET is not currently known as the company did not respond to questions about their use of artificial intelligence.

My reaction is, succinctly, “who gives a shit?” but that’s probably because I haven’t been giving a shit about most online news top-level feeds for a while. I consume them, avidly, since that’s my role in this mess, but I try to cross-check wherever possible. Of course, with AI, it’d be possible to easily “flood the zone” with references, so that if someone searched for the game scores for the Jackanapes Vs Snakeheads, there could be a half-dozen sites corroborating it.

Perhaps the “simulationists” aren’t as wrong as most of us think – perhaps we’re trapped in a recursive marketing bubble.

My first reaction to BuzzFeed’s proposal was that I want to read stuff that was written by someone who put some thought into what they were writing. But, then, I think about some of the crap the New York Times has published, which is clearly just a gloss over a DoD press release or talking points memo, and I begin to wonder if any of it is worth thinking about for a second.

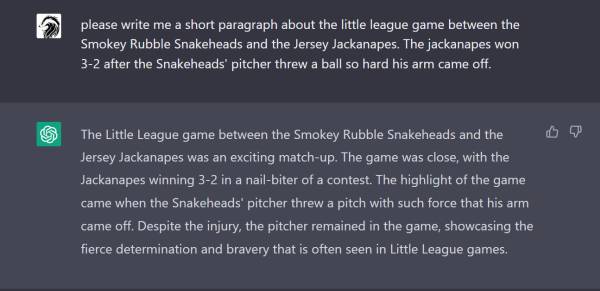

I hate it when my arm falls off. Who among us hasn’t had that happen to them at least three times a day?

Depends on how good they get. I try not to waste too much of my brief time on Earth reading poorly-written garbage, which eliminates the vast majority of online content regardless of who or what wrote it. Given that the training sets for these AIs are drawn from pretty much that same corpus of crap, I don’t see them writing much that I’ll want to read in the near future. On the other hand, I’ve just read a really good, well-researched, well-written article about the Cascadia subduction zone [new yorker, from 2015], and if they could do that, what’s not to like?

What I do think might happen (and I think we’ve already touched on this) is that the ability to generate effectively infinite amounts of crap could finally push the current ad-supported clickbait model of internet content provision over the edge into the dustbin of history. (And good riddance!) The question then becomes what the alternative might be… I’d like to see the development of a proper micro-transaction based model – there are plenty of sources out there that produce some things I want to read (and offer them on a subscription basis) which I’m not prepared to stump up a full ongoing subscription for, but which I’d be more than happy to buy for a few pence per article. And a few pence per page impression is (I believe) a darn sight more than the current ad-based model pays…

@Dunc: I remember the Cascadia article. I first read it in Seattle.

Agreed, if AIs can do stuff like that, who cares? But it was really the seismologists’ research that was the basis for the article. Would an AI come up with accurate “facts”?

Did you notice how the AI decided, on its own, that the heroic pitcher kept playing after his accidental disarmament…? What if the AI decided “everything west of Yosemite will die.”?

As I understand it, the current generation will just make stuff up and insert plausible-looking but entirely fake references.

I have a friend who is a Psych professor at the local university. He told me they now have to watch for students having their paper written entirely by an AI, instead of doing the work themselves. (As a side note, it is totally weird to me that you would pay someone to teach you something, and then proceed to do everything in your power to avoid learning. But hey, I must be the abnormal one.) They now have to check to see if the paper was written by the student themselves or by an AI. As the guy told me, he’ll read something and think, “That’s either an AI or a freshman”. He says they may have to move towards making prose more part of the grade than previously.

Would I be interested if the internet were all AI generated? I would not be interested in such an internet. It’s already strange enough when it’s just written by people.

No.

Which is to say, I don’t care about the output of text AIs. If the internet consisted of the output of AIs, I wouldn’t care about the internet.

Of course, compared to what we have today, I might mourn the loss of something that is frequently useful and/or diverting.

I can find art AI output mildly diverting on aesthetic grounds – I could never do the same for text. Perhaps because I don’t care as much about the motivations of visual artists.

Consider this: it’s a damn shame Iain M. Banks had to die so early, I always enjoyed reading the next new book. Or Douglas Adams. Let’s say you could get an AI to write the books they never got round to. A potentially never-ending series of the stuff you already know you like, right? (Of course it’s not possible yet, but assume it does get possible soonish.)

Worthless crap. Part of the value was reading their thoughts about something they cared about. Since the AI can’t care about anything, I don’t care about it.

For fact-based text that bit is kind of key though: “checked by an editor”. I’ll note that Stack Overflow have provisionally banned the use of ChatGPT to generate answers. I mean, I could snark that if they believe using ChatGPT will lower the quality of their answers then it must be bad. But the main thing is a bunch of comments saying that if the poster fact-checks the output and is just using it for the wordsmithing then there’s no reason it shouldn’t increase quality.

Except that’s not what was already happening, and you could expect that it would continue to not happen: people would continue to post the raw unchecked output. Freeloaders who want the karma of contributing without having to actually go to the effort of contributing.

Just as the AI can’t care, it also doesn’t understand what a lie is, so its output is always entirely untrustworthy. Is a human trustworthy then? Of course not always, but you can at least follow an individual or channel to get a feel for how trustworthy they usually are, and otherwise use other cues and heuristics to infer how trustworthy an individual piece of writing is. You might not get it right every time, but your batting average should be acceptably high. But every little detail of AI output must be checked because you’ve just no idea where something wild might get inserted. The pretty wordsmithing around the meat just gets in the way at that point and the whole thing has negative value to the reader: it’ll take far longer to evaluate the facts than if the AI wrapper hadn’t existed.

@5:

You’re not: you’re paying for the bit of paper at the end that gets you a good job. Which you’re totally entitled to because you paid for it. How dare they expect you to put any effort into the process beyond that!

I have another one, for someone who can write fiction. Maybe I should ask ChatGPT.

But imagine if someone attempted to achieve “immortality” by having every moment and conversation and scene recorded into a constantly updated AI avatar? When their primary body dies the avatar could take over – i.e., a body switch, or maybe “the admiral’s flag is flying from a new ship.”

C J Cherryh’s Cyteen definitely influenced my thinking there. Ariane Emory is dead, long live Ariane Emory.

Beau on YouTube did a piece on AI today. As always, it made me think.

For one thing there may be a business model for a truth attestation service. “Yes this video is real” but it would have to look at circumstantial factors too.

Youth sportsball games, finance articles on a technology site, whatever it is that BuzzFeed publishes – who cares? If the AIs really want to grab us by the gonads and hang us out to dry while they continue their path to world domination, they will try their hand at cat videos.

BTW, Jackanapes all the way, those Snakeheads are pond scum.

The bad thing about an AI predicting that “everything west of Yosemite will die.” is that on a globe west turns out to be everywhere…

.

.

… on the plus side, if the prediction was correct, we couldn’t make things worse anymore.

Dunc @4:

Indeed, that’s what ‘assistant’ AKA ChatGPT did in a recent interaction. I asked it a physiology question, it answered something I would expect from an online ‘influencer’. I asked for supporting references, it gave me some. I checked a couple, one was completely fake, another was to a real paper, but the paper’s conclusion was the exact opposite of what ‘assistant’ was claiming.