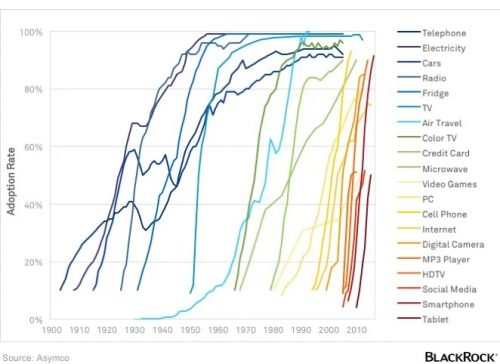

This is a fascinating chart of how quickly new technology can be adopted.

It’s all just wham, zoom, smack into the ceiling. Refrigerators get invented and within a few years everyone has to have one. Cell phones come along, and they quickly become indispensable. I felt that one: I remember telling my kids back in 2000 that no one needed a cell phone, and here I am now, with a cell phone I’m required to have to access utilities at work…and that I enjoy using, too.

The point of this article, though, is that AI isn’t following that trajectory. AI is failing fast, instead.

The AI hype is collapsing faster than the bouncy house after a kid’s birthday. Nothing has turned out the way it was supposed to.

For a start, take a look at Microsoft—which made the biggest bet on AI. They were convinced that AI would enable the company’s Bing search engine to surpass Google.

They spent $10 billion to make this happen.

And now we have numbers to measure the results. Guess what? Bing’s market share hasn’t grown at all. Bing’s share of search is still stuck at a lousy 3%.

In fact, it has dropped slightly since the beginning of the year.

What’s wrong? Everybody was supposed to prefer AI over conventional search. And it turns out that nobody cares.

OK, Bing. No one uses Bing, and showing that even fewer people use it now isn’t as impressive a demonstration of the failure of AI as you might think. I do agree, though, that I don’t care about plugging AI into a search engine; if you advertise that I ought to abandon DuckDuckGo because your search engine has an AI front end, you’re not going to persuade me. Of course, I’m the guy who was unswayed by the cell phone in 2000.

I do know, though, that most search engines are a mess right now, and AI isn’t going to fix their problems.

What makes this especially revealing is that Google search results are abysmal nowadays. They have filled them to the brim with garbage. If Google was ever vulnerable, it’s right now.

But AI hasn’t made a dent.

Of course, Google has tried to implement AI too. But the company’s Bard AI bot made embarrassing errors at its very first demo, and continues to do bizarre things—such as touting the benefits of genocide and slavery, or putting Hitler and Stalin on its list of greatest leaders.

Yeah. And where’s the wham, zoom, to the moon?

The same decline is happening at ChatGPT’s website. Site traffic is now declining. This is always a bad sign—but especially if your technology is touted as the biggest breakthrough of the century.

If AI really delivered the goods, visitors to ChatGPT should be doubling every few weeks.

In summary:

Here’s what we now know about AI:

- Consumer demand is low, and already appears to be shrinking.

- Skepticism and suspicion are pervasive among the public.

- Even the companies using AI typically try to hide that fact—because they’re aware of the backlash.

- The areas where AI has been implemented make clear how poorly it performs.

- AI potentially creates a situation where millions of people can be fired and replaced with bots—so a few people at the top continue to promote it despite all these warning signs.

- But even these true believers now face huge legal, regulatory, and attitudinal obstacles.

- In the meantime, cheaters and criminals are taking full advantage of AI as a tool of deception.

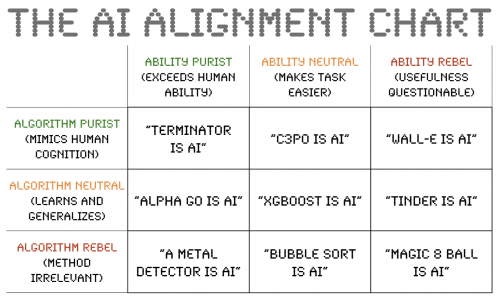

I found this chart interesting and relevant.

What I find amusing is that the first row, “mimics human cognition,” is what I’ve visualized as AI, and it consists entirely of fictional characters. They don’t exist. Everything that remains is trivial and uninteresting — “Tinder is AI”? OK, you can have it.