The major cataclysm that struck my inbox was, of course, that silly incident with a cracker. I still get hate mail from Catholics, and intermittently still receive politely horrified regular mail from little old Catholic ladies who want to pray for me.

But the second biggest outrage I ever perpetrated may not have caught the attention of most readers: I criticized Ray Kurzweil! I still get angry email from people who stumble across this post I originally wrote in 2005, and are really pissed off that I think Saint Kurzweil is a charlatan.

“Singularly silly singularity” – You have much in common with the creationist you so despise.

For a PhD and self-proclaimed intellectual you show an utterly remarkable incapability to understand what the Singularity even is, though this does not stop you from attacking it in the cocksure fashion of the creationist attacking evolution as what he believes is the direct conversion of ape into man.

The Singularity, though inextricably related to the increasing rate of technological advancement, IS NOT a statement that this acceleration alone will lead to the sorts of things Kurzweil proposes. The Singularity is describing what occurs after the creation of a smarter-than-human artificial intelligence. By its very nature the workings of this AI’s ‘mind’ will be unintelligible to us. This incapability of understanding, which will compound upon itself as the AI makes advances and improvements of its own, acts as an ‘event horizon,’ (I should take this moment to point out that you would do well to learn what a gravitational singularity is, as it may help you understand why you are so off the mark in your incorrect understanding of the Singularity) obscuring the ability to make predictions about what course the future will take.

I’ll even grant you the underlying argument of your article opposing Kurzweil’s “Countdown to Singularity” graph (even though you clearly do not understand log vs. log graphs, which cannot be extended into the future). Stating that there is no trend of the acceleration of the rate of technological advancement DOES NOTHING to disprove the existence of the Singularity as the Singularity is a statement about what happens AFTER the creation of faster-than-human AI and not about what happens before it.

You should perhaps try thinking rather than just knowing.

-Wyatt

You know, I appreciate the fact that there is an increasing pattern of technological change — I’ve lived through the last 50 years, where we’ve gone from computers being vast arcane artifacts that cost millions of dollars to plod through mundane calculations, to being stupid little machines that let us play pong on our televisions, to becoming the routine miracle that we now use to process all our media and communicate with our friends. I get that. I do expect to be dazzled over the next few decades (if I live that long) as new technologies emerge.

But predictions of incremental advances on the basis of past experience are routine; a prediction of a single, species-defining moment of radical transformation for which there are no predecessors is a data-free assumption. It can’t be justified.

Despite my correspondent’s claim that the source for the claim of a singularity is not accelerating technological advancement, that’s all Kurzweil talks about: the entire first third of The Singularity is Near (yes, I have a copy…it’s even a signed copy that he sent to me!) is a repetitive drumbeat of graphs, graphs, graphs, all showing an inexorable trend: per capita GDP, education expenditures, nanotechnology patents, price-performance for wireless data devices, on and on. That really is the foundation of his whole argument: technology advancement is accelerating, therefore we’re going to get immortality before we die. All you have to do is hang on until 2029.

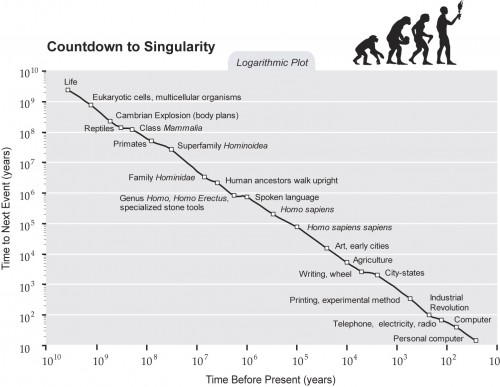

What really bugs me about Kurzweil is that he blatantly fudges his data. I picked on this chart before: the data is nonsense, comparing all kinds of events that don’t really compare at all (speciation is equivalent to Jobs and Wozniak building a computer in a garage? Really?), and he arbitrarily lumps together some events and omits others to create points that fit on his curve. Why does the Industrial Revolution get a single point, condensing all the technological events (steam engines, Jacquard looms, iron and steel processing, architecture, coal mining machinery, canal building, railroads) into one lump, while the Information Revolution gets a finer-grained dissection into its component bits? Because that makes them fit into his pattern.

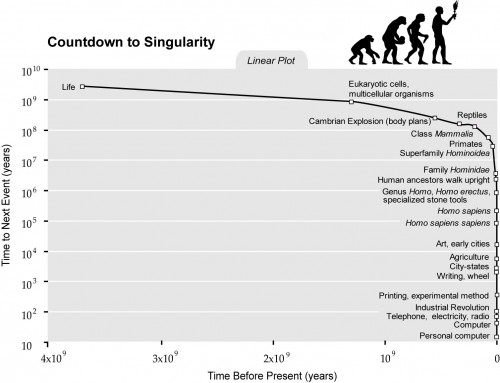

He also shows this linear plot of the same data, which I think makes the problem clear.

It’s familiarity and recency. If a man in 1900 of Kurzweil’s bent had sat down and made a plot of technological innovation, he’d have said the same thing: why, look at all the amazing things I can think of that have occurred in my lifetime, the telegraph and telephone, machine guns and ironclad battleships, automobiles and typewriters, organic chemistry and evolution. Compared to those stodgy old fellows in the 18th century, we’re just whizzing along! And then he would have drawn a little chart, and the line would have gone plummeting downward at an awesome rate as it approached his time, and he would have said, “By Jove! The King of England will rule the whole planet by 1930, and we’ll be mining coal on Mars to power our flying velocipedes!”

I would also suggest to my correspondent that if he thinks extrapolating from graphs is not appropriate, he look a little more closely at Kurzweil’s writings and wonder why he’s extrapolating from graphs so much. I didn’t create those charts I mock; Kurzweil did.

Whatever. I just want my flying velocipede.

It’s sort of like Star Trek: hey, we can get to the moon, so soon we’ll be moving faster than light across the galaxy and beyond to other galaxies.

At least the people behind Star Trek knew it was sci-fi.

It takes a prophet to do the fuzzy thinking to proclaim the new age upon us. Works as well as any prophet.

Glen Davidson

It’s a coal-powered flying velocipede. It’s going to be awesome. There have been a few glitches on the path in the last century, but technology is zooming forward so fast that I’m sure you’ll have it in, oh, say…2029.

I think the martian coal miners would go on strike.

Someone is projecting hir ignorance of AI research and computer programming onto others. When people create it, they program it / build it. Normally to program or build something you have to understand it on a rather intimate level. Else it simply does not work. That it behaves unpredictably is not the same as not understanding it. One person might not understand everything about it, but each builder has to understand hir part and the way it interacts with other parts.

If it “obscures the ability to make predictions”, how the fuck can he make predictions about it? Aren’t predictions about the future, because of the very nature of technological advances, always pretty much uncertain? Who could have predicted the advent of the internet in the 1900s?

Because he has graphs?

I have a book that was published back in the 80s, written by the same people who did those books of lists that came out around the same time.

It is a book of predictions about the future. Almost without exception every single prediction is either totally wrong, or the timescale is way out. Not one of them managed to predict the demise of the Soviet Union for example.

If there is one thing we can predict, it is that predictions about the future, especially anything more than a couple of years ahead, are probably going to be wrong.

Yes, giant velocipedes… Soon they will be our most important form of transport (with the obvious exception of the zeppelin, of course).

“When people create it, they program it / build it. Normally to program or build something you have to understand it on a rather intimate level. Else it simply does not work.”

The idea is that a human-built A.I. will make another A.I. that is smarter than itself, and then that A.I. will make a smarter A.I., and then that A.I. will make an even smarter A.I. and so on and so forth. This is supposed to allow A.I. development to exceed human abilities. It’s a fairly silly science fiction idea and there’s no way to predict if it will ever happen, or if it would work at all, but that’s what the guy e-mailing PZ is referring to.

PZ, I’m always left a little perplexed when you demolish Kurzweil – I’m never sure exactly what you consider the flaw in his reasoning to be. I think that it is one (or more) of the following:

a) it’s not that you think that an AI singularity can never happen, but rather that Kurzweil has no way of predicting when it will happen.

b) you think that an AI singularity won’t happen because strong AI can never be developed by humans

c) you think that an AI singularity is possible, but it’s more likely that we kill ourselves off before we get there.

d) ahh nuts, I failed to see an obvious other possibility which is in fact the position you hold…

I think I’ve read pretty much all of your posts on this subject, and I still don’t know where you actually stand on the issue.

Love in the post-singularity universe:

http://questionablecontent.net/view.php?comic=2158

So one day there will be enough computers connected to each other that they will be able to infer the existence of sour cream? Cool.

That Kurzweil is a fraud who is making money by lying?

Personally, I never make predictions about the future, and I never will.

It’s unfortunate that he undermines what is actually an interesting thought experiment by trying to turn it into a prediction by using shitty “data”.

I think that’s your answer, jennyxyzzy. Rather than simply speculating about the implications of our potential ability to create an entity that is better at thinking than we are, he goes full crackpot and says “THIS WILL DEFINITELY HAPPEN LOOK AT MY CHARTS!”.

Redmcwilliams – your flying velocipede – Gossamer Albatross

BTW, PZ, by definition velocipedes are human powered so no “coal powered velocipedes” for you. Sorry.

Don’t forget! Kurzweil is heavily into the supplement racket. 200 vitamin pills a day and alkaline water!

I note that his transhumanist fans tend to quietly gloss over Kurzweil’s left-turn into blatant alt-med pseudoscience here.

Hah! That’s where you’re wrong! I am predicting that human beings will be uploaded into steam-powered calculating engines. And then of course, we’ll want to go around and visit other calculating engines, so we’ll hop onto our velocipedes and pedal off to their house in a cloud of smoke.

Hmm. Use a sulfur-laden fuel in a machine made of ferrous metal with lots of delicate parts that all have to work together. Then add water (unless you are going for a coal-exhaust turbine?). Yeah. That’ll work.

You do know that there is a very good reason the railroads don’t use the steam engines of your youth, don’t you, PZ?

Kurzweil has some ups and downs. He, de Grey, and similar folk are fairly obviously making absurd speculations about immortality and the god-like abilities of digital intelligence and nanotechnology.

However, an interesting and worthwhile notion is buried in there about computers becoming so sophisticated that the line between research tools and researchers becomes blurred. Computers are already being used in some specific fields (protein folding and astrophysics come to mind) to create models from input data on their own.

As we gain more knowledge, cross-disciplinary research and keeping up with relevant publications become more difficult, so computer systems that can accumulate and synthesize theories become more useful. I don’t see immortality by 2027, but I do see the potential for computers doing mostly independent research by that time, including designing better computers. The implications of that are interesting, and Kurzweil plays around with some of them. He’s still much more a sci-fi author than a scientist.

Thanks, PZ, for posting the link to Ray Kurzweil’s video.

I had mentally put The Singularity on the science fiction shelf and so not really looked at the concept, or its author, critically.

Don’t give up on futurology though. Just treat it as fun.

I recommend Arthur C. Clarke’s book Profiles of the Future, both for its appropriate level of humility given an impossible task and for the accuracy of some, but not all, of his predictions.

Written in 1960 and revised in 1981, when the future was more predictable, methinks.

These are mere engineering problems that the exponentially advanced difference engines will undoubtedly be able to work out.

It’s funny to me that the email’s author compares you to a creationist… the irony is almost too delicious.

It is my belief that at their heart, defenders of Kurzweil have the same irrational, reason-deficient, emotional catalyst for coming to the defense of that for which there is no supporting data or evidence as many creationists: that it would just be way more awesome if it were true…

My 10 year old daughter used to have that issue… she’d lose things and swear up and down that they either just disappeared like magic or were stolen by invisible gnomes. Because frankly the alternative that she just left them somewhere and forgot was too boring, and would force her to take personal responsibility, which almost no kid under the age of 10 wants to do, really.

Luckily she’s 10 now and has pretty much outgrown that.

It’s not a velocipede but it is steam powered.

You do know a joke when you read one, don’t you?

@kemist

#5

Not really. Take, for example, artificial neural networks. You can understand the basic components very well (there are the input nodes, the hidden nodes, and the output nodes; each node combines its inputs in various ways to generate an output, with a set of variables that determine how those inputs are summed, etc.). Yet once you get a sufficiently complex neural network trained, it becomes difficult to work out just what, exactly, it learned.

gragra
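To make that point concrete, here is a minimal Python sketch of the pieces described above: input nodes, hidden nodes and an output node, each taking a weighted sum of its inputs and squashing it with a sigmoid. The weights are hypothetical and were picked by hand precisely so they can be read off (the two hidden nodes behave like OR and NAND, the output like AND, which together give XOR). In a network that was trained rather than hand-wired, the arithmetic is exactly the same, but nobody chose the numbers, which is why working out what it learned gets hard.

```python
import math

def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def node(inputs, weights, bias):
    """One node: weighted sum of its inputs plus a bias, passed through a sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

# Hypothetical hand-picked weights, chosen for readability (not learned).
HIDDEN = [([20.0, 20.0], -10.0),   # behaves like OR
          ([-20.0, -20.0], 30.0)]  # behaves like NAND
OUTPUT = ([20.0, 20.0], -30.0)     # behaves like AND

def forward(x1, x2):
    """Input nodes -> two hidden nodes -> one output node."""
    hidden = [node([x1, x2], w, b) for w, b in HIDDEN]
    return node(hidden, *OUTPUT)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(forward(a, b)))
# prints:
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

With four weights per layer you can still read the logic straight off the numbers; with millions of trained weights, you can inspect every one of them and still not know what the network is doing.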

HaHa!

I predict that around 2029 Ray Kurzweil’s predictions will look about as silly as Harold Camping’s or any other Millerite’s predictions of the second coming. Jesus isn’t coming back, and neither is The Singularity.

And, now I’m going to take the flying car out for a spin.

It was, of course, a well known fact in the 19th century that the whole earth would be 3 feet deep in horse shit by 1950.

Dear Dr. Tentadoom, (aka PZ Myers — You have a tentacle throne if the last post is any indication…),

I agree with you that Kurzweil is full of it, but do you, by extension, feel that transhumanism in general is BS? If so, how come?

My feeling on transhumanism is that change is inevitable, and the only alternative to eventual speciation, anagenetic or otherwise, is extinction. I’m a little less optimistic than most transhumanists (for all we know, our distant descendants might find an advantage in a more mindless, rodent-like morphology), but technology-assisted transformation of a subset of our lineage isn’t out of the question. Whether it’s viable, though…a whole ‘nother problem.

They already have, they’re called Republicans.

Well, some are already there.

*cough*GOP

Fuck this singularity shit; we’ve got Google making augmented reality glasses and self-driving cars, with rumors of space elevators and advanced robots! I think now is just as cool.

Interesting that you would pick today to write about this. I was thinking yesterday, as I was reading the thread about “the purpose of women”, that arguments like that were rather like all those crappy nostalgic e-mails I used to get with all the wonderful memories of how great it was in the past when everything was so kool!

Then I read this from Celtic: “It is my belief that at their heart, defenders of Kurzweil have the same irrational, reason-deficient, emotional catalyst for coming to the defense of that for which there is no supporting data or evidence as many creationists: that it would just be way more awesome if it were true”

I understand your argument perfectly; it is the same here: the ability of people to be so selective when thinking, choosing facts and interpretations of facts that include only those which would make it awesome if it were true. They and we do it without realizing it. It takes the discipline of science to overcome our limitations.

This guy had a great idea but not the creative imagination to write a good novel or even a short story to illustrate it or convey the emotional experience of it. So he just wrote up the speculative premise as if it were real, and you see the results. The same could be said about a lot of things that do not hold up well when we examine them more closely and objectively.

If there is something world changing going to happen around 2029 it will probably have something to do with economics (there is always something happening with economics) and/or ice.

uncle frogy

The more I listen to Kurzweil and read his publications, the more I come to the conclusion that he is approaching the same kind of at best cognitive malfunction, at worst outright con-job, as that produced by religion, but from a different direction.

Where religion offers some fuzzy (and conveniently undetectable) postmortem afterlife, biologically non-credible ‘reincarnation’, and/or (in some cases) physical ‘resurrection’ at some later, undefined date as its hook to draw in the gullible, Kurzweil promises techno-mystical immortality achieved by ‘consciousness upload’ or transfer into a machine body (that one assumes comes with a really good warranty).

This similarity seems more than just coincidental. Has Kurzweil spotted, whether consciously or not, the main problem facing religion – that its claims are obviously and grossly at odds with scientific knowledge, and that the resulting credibility gap is killing that particular delusion by inches, especially among the better educated members of the younger generation? And maybe he has created a replacement for this out-and-out, magic-powered, ‘goddidit’ based model of religion with a superficially more scientifically credible mechanism in ‘Singularitarianism’ that offers essentially the same bait – the idea that an adherent will be able to survive their own death – without the mountains of increasingly toxic baggage and dogma that afflict more conventional immortality fantasies.

While I know that I am straying onto the dangerous ground of ‘X is a religion too’, I think that in this particular case it may be justified. Elements of the pro-singularity camp are behaving in a fashion ever more reminiscent of theists, right down to the prickly attitude that criticising their ideas is the same as attacking them personally. Perhaps the reason for that behaviour is the same as well – neither group likes ‘mean’, ‘shrill’ and ‘militant’ rationalists to rain on their respective immortality parades by pointing out that the Emperor is not wearing wonderful new clothes, but is in fact buck naked and frightening the horses…

Whether I am right or wrong, I think that that is enough pseudo-intellectualism out of me for one post. To finish, I will link to this, because in a thread about rampant technological development and strong AI, someone has to.

All I can think about is The Cruciferous Vegetable Amplification. Heh.

Jinx

oooooohhhh creepy… :)

If a man in 1900 of Kurzweil’s bent had sat down and made a plot of technological innovation, he’d have said the same thing … And then he would have drawn a little chart, and the line would have gone plummeting downward at an awesome rate as it approached his time

Wait, isn’t that exactly what Kurzweil’s exponential progress claim would predict? That’s how exponentials work at every point on the curve, right?
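That point is easy to check with a few lines of arithmetic. A minimal sketch, assuming (purely for illustration) that "progress" follows a pure exponential exp(rate·t) with a made-up rate of 3% per year: looking back a fixed number of years from any "present" gives exactly the same ratio, exp(-rate·years_back), so an observer in 1900 and one in 2012 would both see the curve falling away steeply behind them.

```python
import math

def lookback_ratio(rate, present, years_back):
    """Level of an exponential trend exp(rate * t) `years_back` years before
    `present`, divided by its level at `present`."""
    return math.exp(rate * (present - years_back)) / math.exp(rate * present)

RATE = 0.03  # hypothetical growth rate per year, chosen only for illustration

for present in (1900, 2012):
    ratios = [round(lookback_ratio(RATE, present, back), 3) for back in (10, 50, 100)]
    print(present, ratios)
# prints:
# 1900 [0.741, 0.223, 0.05]
# 2012 [0.741, 0.223, 0.05]
```

The `present` term cancels out, which is the whole point: an exponential looks the same from every point on it. That only describes what an exponential looks like from the inside; it says nothing about whether technological change actually follows one.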

Interestingly I just noted the other day how much easier a time I have finding lost things since I’ve rejected the “invisible bastards are fucking with me” hypothesis.

As an aside: I think a good chunk of transhumanism just isn’t going to happen because it would change the base nature of humans too much for many to be comfortable with.

Ing –

Well, that’s only half of the equation… it’s the other half, the part about taking personal responsibility for shit, that I find most grown-ups that believe in crazy shit have the harder time with.

Ing:

Like the 1% is going to give a shit what the ~~poor peons~~ 99% think about things.

Not to mention that Korgs and Rolands are better, too.

I’ll say this for them: believers in the Nerd Rapture tend to have much better spelling than the other kind.

I can get an entire trilogy out of this. Anyone know a good publisher?

I think that this is more of an issue the faster things change. There’s more pushback if you introduce radical new technologies and concepts all at once.

For example, there’s no way in hell you’d be able to sell a self-driving car right now, or even get it legalized. However, if you gradually introduce reliable driving-assistance technologies that take more and more decisions from the driver, while introducing more activities that the driver could be doing if she weren’t driving, I could see it happening someday.

Of course, if I’m right, there won’t be any Singularity, and most people won’t ever consider themselves “transhumans” because the baseline for what is “human” will have changed.

PZ @ #32:

That reminded me of Stephen Baxter’s book Evolution. While I can’t comment on the accuracy of the science behind it, the idea of a multi-generational story that spans millions of years was neat, and made for a great read.

@Jubal:

Both of these are actually counterexamples to the principle you’re talking about. Protein folding algorithms perform very poorly, and the “research game” FoldIt is the result: people would watch the screensavers generated by protein folding algorithms and complain to the developers that they could see the correct solution while the algorithm was still endlessly iterating blindly through the possibilities. FoldIt makes protein folding into a puzzle game for human beings; humans are actually better than computers at protein folding.

Computers are better for some tasks in astrophysics but computers are horrible at recognizing anomalous or transient features in pictures of the sky. So, similar to the situation with FoldIt, someone put together a system whereby researchers can crowdsource for interesting features in images of the sky since, again, this is a task much better performed by human beings than computers.

So far there’s been very little progress on giving computers abilities that even three-year-old human children take for granted. No one knows what tradeoffs are involved in such a program; do the same faculties that make us better than machines at folding proteins also give us confirmation bias and pareidolia? I don’t know but it wouldn’t surprise me if that were the case.

Human minds are an evolved kludge of tradeoffs that just happened to work better than any alternatives that had been tried to that point. As with almost any engineering situation there is almost certainly a point at which increasing any particular performance metric entails decreasing some other performance metric — the definition of a tradeoff. More attention to detail means less throughput, just for example. So I don’t really think it’s possible for anything to grow in “intelligence” without limit.

You do know that there is a very good reason the railroads don’t use the steam engines of your youth, don’t you, PZ?

Yeah, it’s called “the people who made diesel engines (read: GM) decided that they were going to crash the market.”

You did know that there are still some century-old (or so) steam engines from western Canada in service in India, did you not? Turns out that modern diesels have a shitty thrust to weight ratio compared to the old steamers, and just simply can’t handle some of the mountain inclines. Steam engines are actually pretty damn robust; most of them were built before “planned obsolescence” was a thing, so they were more or less designed to have a 50+ year operational lifespan.

Electrics are also cleaner, more powerful, and have fewer moving parts than diesels, but aren’t as good for steep inclines and inaccessible terrain as steam engines.

/rail pedant mode

Peter Cranny:

It is. Metaphorically, anyway.

Also, A.R and Blacksmith, don’t be so cruel to our rodent friends. What have they done to deserve the comparison to the GOP?

Ms. Daisy Cutter:

Seriously. Chas, Esme & Rubin are complete heretics. Fuzzy little heretics.

Caine: Oh, I know rodents can be godless. How else do you explain squirrels?

The way I’ve always understood PZ’s arguments is that Kurzweil’s utter absolute certainty that an AI singularity is inevitable, as well as his timeframe for when this inevitable and absolutely certain transition must surely take place, is ludicrously optimistic, and derived not from rational consideration of evidence, but from wishful thinking.

Personally, I think that the development of an AI with a human-level degree of general competency, such that we would recognize it as sentient and grant it rights under the law (pass the generalized Turing Test, basically), might just be possible within the next 50 years. But to go from that to the brain uploading implicit in a singularity scenario is another thing entirely, and the assumption that a human level AI would automatically be able to quickly design even smarter AIs is unsupported.

When we finally made a computer chess algorithm that can play at (and slightly above) the level of the very best human players, we achieved this feat by building a machine that plays chess in a way that was profoundly different from the way humans play, but achieved equivalent results. So it will likely be with the first human-level AI – it will achieve its sentience in a way that is different from human brains, and a way that will not automatically translate to one uploading or even plain copying into the other. Just as you cannot extract the chess playing module out of Garry Kasparov’s brain and transcribe it into Deep Blue’s code.

I also find it unlikely that any hypothetical potentially human-level AI will succeed in developing a human level of general competence without the benefit of living through and experiencing a human lifespan. That is, we won’t be booting up adult human-level AIs out of the box any time soon; we’ll be booting up baby-level AIs, and then gradually training and teaching them until they reach adult human-level competence.

Amphiox:

Great. So now my brother will be able to fuck up the life of a robot, and not just his kids?

That’s a Lifetime Channel feel-good movie, if I’m the judge of such things.

“By Jove! The King of England will rule the whole planet by 1930, and we’ll be mining coal on Mars to power our flying velocipedes!”

OK, now I’m waiting for PZ’s steampunk masterpiece, with a villain that sits on an octopus throne under the ocean.

Amphiox:

That’s already been done: http://www.sciencedaily.com/releases/2008/11/081120111622.htm and with language skills: http://www.sciencedaily.com/releases/2008/02/080229141032.htm

The real reason why diesel-electric locomotives supplanted steam locomotives is quite simple. Diesel-electrics are cheaper to build, operate and maintain than steamers of comparable power.

Here’s a lashup of three 4400 hp diesel-electrics. How many engineers are needed to run this lashup? Answer: one. If it were three steam engines in a lashup, each locomotive would require a crew of two (engineer and fireman). Railroads would much rather pay one salary than six.

Seriously, Childhood’s End is a much better book than this “Singularity” that seems poorly written…

This is actually… well… we have two issues:

1. No computer has even, so far, come up with novel anything, including mathematics, which isn’t part of what we already knew in mathematics. It might find new ways to solve the same problem, but it never comes up with “new”.

2. The caveat to the above is that some “genetic” algorithms have produced results that, while they still don’t do things we don’t already know how to manage, do so in ways we can’t easily decipher.

Presumably, access to more complex inputs, something like genetic algorithms, and a less strict, less binary interpretation of its own actions *might* allow a computer to derive things that we don’t already know, but doing so won’t be fundamentally different from, or faster than, the way we do it, or, more to the point, the way we confirm what we come up with. Interaction with the real world is a necessity in creating things, and even if a machine can “think” faster than we can, it is fundamentally constrained by the same “rate of confirmation of one’s observations”. It can’t “think” things into certainties; it would have to try them, in the real world or via simulation, etc., before determining that they actually apply to the real world, and that constrains how quickly it could create “more advanced machines”.

It’s very much doubtful, due to this, that they will outstrip us all that quickly, never mind replace us. If such a singularity did happen, it would be hundreds of years farther ahead, and the odds are probably better that we would end up with a world like Ghost in the Shell well before the machines themselves ended up with real “ghosts”.

It’s not an unlikely prediction, especially with humans actually thinking about it, but the how and the when are definitely way off from where he seems to think they would be, and it may not even happen as, or anything near like, what he seems to think. Someone will be figuring out how to do it, and the first results will be useless, but, if it happens, by the time people upload into machines, there won’t be a difference between what the “more advanced machine” might be able to do and what an uploaded person can, or likely all that clear a distinction as to which is which. We will make them to be like us; if they are asked to improve on that, it will be to be more like us, or more specific to a job, and thus “less” developed in all other generalist terms. One being told “make yourself better, with no criteria” and then producing something fundamentally smarter than us… really is science fiction, imho. It’s just not what we would “need”, so not what we would ask, or probably allow, one of them to do.

Is a proponent of The Singularity cult allowed to use words like “cocksure”?

To those still wondering what PZ has against Kurzweil: “Unknown unknowns”. Kurzweil is one of those smart people who doesn’t have the humility to admit that he’s in way over his head when it comes to brain development and structure and how it relates to electronics. It’s not out of the realm of possibility that Kurzweil is right, but note for the record that Kurzweil is way into nutritional woo, so it’s almost certain that whatever else he’s very good at, critical thinking isn’t one of his strong points.

It doesn’t help that from a sociological standpoint, the concept of a singularity doesn’t make a whole lot of sense to begin with, because by definition it can’t be defined in the way a mathematical or physical singularity can. It’s not falsifiable because we don’t know what the boundary conditions are, and even if it did happen, it seems as though we wouldn’t notice it happening; we’d only recognize it in retrospect. (Which means that it’s entirely possible we’re already past the singularity, but we’d never know, at least not likely within our lifetimes.)

Kagehi @ 61:

It doesn’t surprise me in the least that Masamune Shirow has a more credible grasp of the possible future implications of technology than Ray Kurzweil, even with his penchant for stories about heavily cybernetically modified posthuman secret police.

At least he doesn’t engage in egregious crimes against charts like the would-be technomessiah does…

Not quite.

Protein folding relies on algorithms programmed in by human beings. The “decisions” the AI makes are predetermined by its (rather complicated but in no way mysterious) programming.

Computers don’t come up with new ideas. Humans have the ideas which they program into the computers. So far all AI consists of is trying to simulate human intelligence with different algorithms. Which are designed by humans.

Exactly.

In the case of human intelligence, I think that having original ideas has something to do with error. Many new ideas were at first errors in a predetermined pattern, just as successful mutations are originally mistakes in replication. Functional computers are made to constantly correct errors (this is part of every operation that manipulates data in a computer) or recover from them, and to rigidly follow their programming, however complex it might be. It has to be that way because of the way they work: a finite instruction set that is microprogrammed at the material level. A mistake in an instruction produces exactly nothing.

So, this singularity thing is called that because it’s an analogy to what (Hawking?) called a singularity where the laws of physics broke down in the center of a black hole and in the early big bang? From the language of the emailer it seems so. Isn’t that already pretty much outdated and nobody really believes in it anymore?

I look at those charts and see “run-up to a Malthusian collapse”, not “run-up to a singularity”. It seems bizarre to me that Kurzweil is so sure everything’s going to work out and we’ll have a geek-rapture. We could just as well point at those charts, observe the underlying energy consumption of the technologies and population driving them, and scream doom and gloom.*

(*I think that view is just as likely as the geek-rapture. I.e.: we have no idea)
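
The point that the same run-up fits both readings is easy to check numerically: an exponential curve and a logistic (S-shaped, ceiling-limited) curve with the same growth rate are almost indistinguishable until the logistic one nears its ceiling. A minimal sketch; the growth rate `r` and ceiling `K` are arbitrary illustrative values, not fitted to any of Kurzweil’s charts:

```python
import math


def exponential(t: float, x0: float = 1.0, r: float = 0.5) -> float:
    """Unbounded growth: x0 * e^(r t)."""
    return x0 * math.exp(r * t)


def logistic(t: float, x0: float = 1.0, r: float = 0.5, K: float = 1e6) -> float:
    """Growth with a ceiling K, starting at x0 with the same initial rate r."""
    return K / (1 + (K - x0) / x0 * math.exp(-r * t))


# Early on the ratio stays near 1; only near the (hypothetical) ceiling do they diverge.
for t in (0, 5, 10, 20, 30, 40):
    e, l = exponential(t), logistic(t)
    print(f"t={t:>2}  exp={e:14.1f}  logistic={l:12.1f}  ratio={l / e:.3f}")
```

Looking only at the early part of the data, nothing distinguishes the two stories; the difference only shows up once the limit is hit.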

No computer has even, so far, come up with novel anything

How much of what humans come up with is novel re-arrangements of existing cultural elements? Computers can do that, quite well. Yes, there are occasionally new things that humans come up with – like new mathematical proofs or new artworks – but if you treat our cultures and languages as an existing, massive, set of inputs that can be selected from and combined based on semi-randomness and proximity, you might get something that was hard to distinguish from creativity if there was a good enough feedback loop to prune out the stuff that didn’t work. Anyone who has listened carefully to a child in the babbling stage might believe that that’s what humans do. How much of creativity, in other words, is learned?
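
A minimal sketch of the select-combine-prune loop described above, assuming a toy “cultural inventory” of words and a lookup table standing in for the feedback loop (both are hypothetical; a real feedback loop would come from the world, not a table, and this ignores the “proximity” part of the selection). It only illustrates the mechanism, not creativity itself:

```python
from __future__ import annotations

import random

# Hypothetical "cultural inventory": existing elements available for recombination.
INVENTORY = ["steam", "engine", "rail", "wire", "song", "cloud", "map", "light"]


def combine(rng: random.Random) -> frozenset[str]:
    """Pick two distinct existing elements at random and pair them."""
    return frozenset(rng.sample(INVENTORY, 2))


def generate_and_prune(seed: int, rounds: int, works: set[frozenset[str]]) -> set[frozenset[str]]:
    """Generate random recombinations and keep only those the feedback accepts.

    `works` is a stand-in for the feedback loop (an assumption for illustration).
    """
    rng = random.Random(seed)
    survivors: set[frozenset[str]] = set()
    for _ in range(rounds):
        candidate = combine(rng)
        if candidate in works:  # prune everything that "didn't work"
            survivors.add(candidate)
    return survivors


useful = {frozenset({"steam", "engine"}), frozenset({"cloud", "map"})}
print(sorted(sorted(pair) for pair in generate_and_prune(seed=1, rounds=500, works=useful)))
```

Nothing in the loop “understands” why a pairing works; all the apparent judgement lives in the feedback step.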

I also find it unlikely that any hypothetical potentially human level AI will succeed in developing a human level of general competence without the benefit of living through and experiencing a human lifespan.

Interesting point. Certainly it would have to learn how to interact with humans, at a human pace. Until adult AIs can teach baby AIs “here’s how to talk to the meat that thinks…”, I wonder if they’ll have to figure it out by living their lives alongside humans.

So, wait, we’re going to be assimilated by the Korg?

God, singularity talk annoys me. Especially the strong bias towards in-lifetime predictions.

A lot of singularity types are also similar to apocalyptic christians: they want this doom/transition/whatever, it does not even occur to them to control it.

2029 is WAY too soon. And IMO even an extremely intelligent AI is not necessarily going to be all that unpredictable. It might even be MORE predictable.

Not to mention that an AI will have all the gradual tech problems that humans have, just done automatically and maybe faster.

Finally, a completely unpredictable singularity with AIs with possibly alien motives going out of control? Sounds dangerous. Maybe somebody needs to tone it down.

My own predictions (frankly pulled out of my ass, but you’ll see I am very humble):

Animal level AI: 15-200 years

Human level AI: 50-10,000 years

Non-Socialized AI (AIs that are created w/ ethics, rules, methods, learning, etc. preprogrammed, rather than being raised like children and socialized): 100 – 1E6 years. Have the potential to be very stupid while smart, and very dangerous if attached to powered systems or trusted absolutely. See LessWrong.

Wide acceptance of transhumanism in Europe: 20-120 years

Substantial transhuman improvements available, safe, and legal: 40-300 years, depending on complexity

Substantial human lifespan improvements: Guessing on a big jump in the next 40-200 years and then another big jump if sufficiently powerful genetic engineering is developed. Nanotech and computer tech will be contingent, but it’s not about AI.

Immortality: 500 – 1E7 years, though actuarial escape velocity (lifespan increases faster than time passes) may occur earlier.

Substantial human cognitive improvements: 1000-1E8 years

Kurzweilian nano-magitech: probably never. However nanotech does have a lot of potential.

Brain Uploading: I suspect this will result in the death and cloning of the user if done in the traditional sense. Probably something like 800 – 1E8 years?

Who could have predicted the advent of the internet in the 1900s? – kemist

E.M. Forster, The Machine Stops, 1909.

Charles Stross has done it for him in Accelerando, highly recommended. The solar system ends up consisting mostly of orbiting self-aware corporations.

@ KG #73

Accelerando is a great piece of fiction, even more interesting is Stross’s real take on the Rapture-for-Nerds:

http://www.antipope.org/charlie/blog-static/2011/06/reality-check-1.html

I have to admit that when I was in my early teens this singularity stuff had me hooked for a bit, but it really didn’t take long for me to realise that actually, it’s a bullshit argument. I accept the principle that it should be possible to simulate a human being in a computer, I accept that it should be possible to create an intelligent being in a computer, and I accept that it should be possible to make an intelligent being, train it in AI science and set it to work building its replacement. What’s obviously a crock of shit is looking at disparate technological innovations and thinking they will tell you how development will work in the future: as if comparing the trials and tribulations of inventing steam engines with the length of the Apollo Project will tell you how long after Windows Vista augmented-reality specs will arrive. Clearly Kurzweil was never shown this:

http://xkcd.com/605/

The thing about the future is that it is mostly unpredictable. The further ahead you try to predict, the more chance there is of some disruptive event fucking up your prediction.
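
That intuition can be put in numbers under a simple, loudly simplified assumption: if every year carries an independent chance p of some disruptive event, a forecast n years out survives untouched with probability (1 - p)^n, which shrinks geometrically with the horizon. A minimal sketch; the 5% per year figure is hypothetical, not an estimate of anything:

```python
def survival_probability(p_disrupt_per_year: float, years: int) -> float:
    """Chance that no disruptive event occurs in `years` independent years."""
    if not 0.0 <= p_disrupt_per_year <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return (1.0 - p_disrupt_per_year) ** years


# Hypothetical 5% yearly chance of a forecast-breaking event.
for horizon in (1, 5, 10, 20, 50):
    print(horizon, round(survival_probability(0.05, horizon), 3))
```

Real disruptions are neither independent nor equally likely each year, so this only shows the shape of the problem, not its size.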

Not true. Computers have quite definitely come up with new scientific knowledge. OK, it’s knowledge of a kind people could also produce, not some earth-shattering breakthrough, but it is definitely novel. The fact that it was programmed is also irrelevant to whether it produced something novel.

Probably not PZ’s position, but mine is that the Singularity, if defined by the existence of a super-human AI, happened maybe a million or more years ago, when our ancestors first acquired language. Since then, our problem-solving capacities have not been limited to those of a single human brain. Currently, we have specialist and highly artificial socio-technical systems for problem-solving and knowledge acquisition, notably universities, research institutes, “intelligence” organisations and R&D departments. So simply concentrating more processing power than a single human brain (or even than all human brains put together) in one machine is not likely to produce anything like such a radical change as the Singularitarians think.

Aside from that, I think their timing of that event is way off. Kurzweil proposes a kind of brute force approach to AI: simulating the activities of the brain in great detail; he thinks that this will more or less automatically produce a “true” AI, and enable us to “upload”. But if we intend to simulate at the molecular level, down to folding proteins and jumping genes (see March 2012 Scientific American for the latter in the brain), the simulation would surely require resources on a literally astronomical scale; and if at some more molar level, we would have to understand thoroughly the brain’s operating principles – if indeed it has any that can successfully be abstracted from the mass of detail.

I’d also challenge the idea that technological advance is, in general, accelerating. Sure, if you just look at the hardware capabilities of computers, you can make a case. But does anyone believe that the capabilities of software have advanced in the same way, outside computer gaming perhaps? In other areas, such as gene therapy, progress has been much slower than anticipated, while in space exploration, our capacities are in many respects less now than they were forty years ago.

Moreover, over the past few decades, investment capital has poured into computing, making up an ever-increasing proportion of total R&D and of GDP. This is not sustainable: Kurzweil thinks the whole economy will become digitised and so partake of the acceleration in computing technology’s advance, but this is absurd. You can’t digitise away the need to produce and transport real physical products, and doing so has irreducible energy and materials requirements.

And you, apparently, don’t recognize sarcasm. I’ll help you find it.

Get a map. Find the Saar River. Follow the river up very near the source. You will note that it goes through a very narrow gorge. This is the Saar Chasm.

Hmm. Had nothing to do with extremely inefficient external combustion? Or the shitty power curve for getting a train started? Or maybe that a mainline steam locomotive averaged 15 hours of repair and maintenance labour per hour of work during its 20-year life? Oh, and do keep in mind that General Motors did not purchase EMC until well after the company’s diesel switchers had shown their effectiveness and the company had also shown that diesel-electric passenger trains were not just publicity stunts.

Yes. And when labour is cheap, steam locomotives are a viable option.

Also, keep in mind that the diesel-electric locomotive was an untried unknown. And the railroads already had around a trillion dollars (in today’s dollar values) invested in the physical plant to take care of steam locomotives: roundhouses, locomotive shops, ashing and coaling facilities, water towers, all there to take care of steam locomotives. The first railroad to switch completely to diesel-electric locomotives was the New York, Ontario & Western (which didn’t help them, as the railroad should never have been built) in ~1943. The last mainline, standard-gauge steam operation in the US was the Grand Trunk up in Michigan in about 1961. In less than 20 years (far less for most roads) the railroads abandoned a massive physical plant investment. If the diesel-electric locomotive did not promise massive savings in labour and operating costs, why would the railroads abandon that much of their physical plant?

Which is bullshit. Weight is added to both steam and diesel-electric locomotives in order to increase tractive effort. Additionally, although a steam locomotive’s tractive effort curve is similar to that of an electric locomotive (a category that includes diesel-electrics), its power curve is bell-shaped. If you want to move freight, heavy freight, across long distances, the hard part, the part where you really need the power, is starting, and steam has practically zero horsepower when starting. It is all torque.

As for the inclines? More bullshit. Steam locomotives have no transmission. The only way to vary the gear ratio is via the stroke of the piston (done by varying the distance of the main pin from the center of the driver) and by varying the drive wheel diameter. Steam locomotive designers varied the drive wheel diameter to alter the horsepower curve (smaller wheels shifted the curve down) and the tractive effort curve (again, smaller wheels shifted the curve down). Locomotives designed to work in mountains had smaller drive wheels (with lower top speeds). Diesel-electric locomotives have vastly more tractive effort available because of their small (relative to a steam locomotive) drive wheels and the way that electric motors deliver their power.
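
The drive-wheel point follows from the standard rule-of-thumb formula for the starting tractive effort of a two-cylinder simple steam locomotive, TE ≈ c·P·d²·s / D, where P is boiler pressure (psi), d the cylinder bore and s the piston stroke (inches), D the driver diameter (inches), and c is commonly taken as 0.85. A minimal sketch; the engine dimensions below are hypothetical, chosen only to show that shrinking D raises TE with everything else held fixed:

```python
def tractive_effort_lbf(boiler_psi: float, bore_in: float, stroke_in: float,
                        driver_diameter_in: float, c: float = 0.85) -> float:
    """Rule-of-thumb starting tractive effort (pounds-force) for a
    two-cylinder simple-expansion steam locomotive: c * P * d^2 * s / D."""
    if driver_diameter_in <= 0:
        raise ValueError("driver diameter must be positive")
    return c * boiler_psi * bore_in ** 2 * stroke_in / driver_diameter_in


# Hypothetical engine: 250 psi, 26 in x 32 in cylinders; only the drivers change.
for drivers in (57, 69, 80):
    te = tractive_effort_lbf(250, 26, 32, drivers)
    print(f"{drivers} in drivers -> {te:,.0f} lbf")
```

The trade-off is speed: for the same piston speed, smaller drivers turn over faster per mile, which is why mountain engines had lower top speeds.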

I have personally experienced, on more than one occasion, a steam locomotive stalling out on a grade with a train of less than 1,000 tons. And this is a freight locomotive, pulling relatively light passenger cars, on only a 2% grade and dry clean track. The freight trains that go up that grade will use far less rated horsepower per ton of train and get it up the hill no problem.

More bullshit. The railroads planned for an economic life of around 20 to 25 years for a mainline steam locomotive (gee, almost exactly the same as a modern diesel-electric locomotive). This meant that, during that period, the locomotive was expected to make enough money to pay for its replacement and its own upkeep and fuel. At the end of that period of time, the cost per mile of maintenance and repair was usually too high to keep it in mainline operation so they were dropped to commuter service, or mine runs, or sold to short lines (gee, almost exactly what the modern railroads do with modern diesel electric locomotives — look at the motive power on your local short line).

First off, diesel locomotives are electric; they just carry their generating plant with them.

No question, electric is cleaner. But in the US, railroads are in it to make money. The cost to electrify a railroad line is exorbitant. Unless that line is extremely busy, there is no way the railroad will get a return on the investment on a timetable that will please investors. Additionally, being clean is not what investors want: they want profit.

It surprises me that you are suddenly preaching cleanliness after extolling the virtues of steam railroading. Phoebe Snow was an advertising campaign; her lily-white dress on the Road of Anthracite did not accurately portray rail travel in the age of steam. It is dirty. Very dirty: whether coal- or oil-fired, steam locomotives are pigs. I, and those I work with, have to buy new flat hats every year because of coal ash and cinders.

Really? Then the Chicago, Milwaukee, Saint Paul & Pacific Railroad’s electrification of its lines from Harlowton, MT, to Avery, ID, and from Othello, WA, to Tacoma and up to Seattle, at a cost of $23 million (1919 dollars), to handle its steepest grades was a mistake? Even though the railroad figured that by the mid-1920s the savings from electrification had covered half the cost? Why would the Virginian Railroad, a coal-hauling road, electrify its main line? For the same reason: electric locomotives, because of the torque and power curves of the electric motor (the same motors used in a diesel-electric locomotive), are far better at handling steep grades than a steam locomotive with its large drive wheels and pulsing torque.

Sorry to go on like this but, for a self-described rail pedant, you really don’t know the history of steam railroading all that well.

[/steam railroading professional mode]

Which is a drop in the bucket compared to the maintenance and repair costs. A modern diesel-electric locomotive requires, during its economic life, an average of 15 minutes of maintenance and repair labour per hour of work compared to a steam locomotive’s 15 hours per hour of work.
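
To put those two figures side by side: 15 hours versus 15 minutes of labour per hour of work is a factor of 60, whatever the utilisation. A quick sketch of what that means over an economic life, assuming a hypothetical 4,000 working hours a year over 20 years (the utilisation figure is an illustrative assumption, not from the comment):

```python
def lifetime_labour_hours(labour_per_work_hour: float,
                          work_hours_per_year: float, years: int) -> float:
    """Total maintenance and repair labour over a locomotive's economic life."""
    return labour_per_work_hour * work_hours_per_year * years


STEAM = 15.0            # labour-hours per hour of work (figure from the comment)
DIESEL = 15.0 / 60.0    # 15 labour-minutes per hour of work
HOURS_PER_YEAR = 4000   # hypothetical utilisation, for illustration only
LIFE_YEARS = 20

steam_total = lifetime_labour_hours(STEAM, HOURS_PER_YEAR, LIFE_YEARS)
diesel_total = lifetime_labour_hours(DIESEL, HOURS_PER_YEAR, LIFE_YEARS)
print(f"steam:  {steam_total:,.0f} labour-hours")
print(f"diesel: {diesel_total:,.0f} labour-hours")
print(f"ratio:  {steam_total / diesel_total:.0f}x")
```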

Sorry for the tl;dr.

But original ideas can be more than just logical rearrangements.

Humans learn from their errors. Computers crash when they experience uncorrected errors.

Why is that?

Humans live in analogic space, which means an error will produce an unexpected result, which might be interesting. Computers exist in digital space, where errors produce uninterpretable gibberish. They can only respond to situations which were planned for by their programming.

Huh, I beg to differ.

You really think no new algorithms and programming paradigms have arisen to take advantage of increased hardware capabilities?

Maybe you are a bit blinded by the seeming simplicity of the human-machine interface which hides what’s really going on. It’s not too surprising, that’s what it’s made for.

We’ve already experienced several Singularities, and they’re all only really detectable in retrospect. The first was, as KG points out, the development of language, which allows thought to transcend a single entity. There was another with the development of writing, which allows language to transcend immediacy.

(It also depends on how you define “Singularity”, of course.)

Yeah. Case in point:

http://www.zdnet.co.uk/news/emerging-tech/2011/09/19/foldit-yields-aids-breakthrough-40093970/

This isn’t “folding at home”, which uses computer algorithms to derive solutions, or at least not fully. It’s actually a “game”. A computer comes up with a number of possible preliminary solutions and then attempts to refine them, but the game takes snapshots from the process and lets people try their hand at the solution. In this case (and, it seems, in some others) the computer’s “predictions” gave a snapshot a low probability of corresponding to the real solution, so the computer passed it over in favor of others. The gamers thought the computer was flat-out wrong and started working on the folding solution from that earlier, computer-rejected possibility.

Basically, if the algorithm (which, as you say, is predetermined) can’t see the validity of a solution, it will pass right over it as a less likely result and wander off into the twilight zone, trying to find the correct solution in something that isn’t even close to it. At best, it might eventually exhaust all possible solutions in the dead end, which might number in the millions, before “backtracking” to earlier possibilities. But there might be billions of such solutions that still have higher odds, according to its logic, than the one that, if followed, would have led to the correct pattern.
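
The failure mode described here, a scoring heuristic that ranks the right starting point low so the search commits elsewhere, can be shown on a toy tree. A minimal sketch on a made-up search tree with hypothetical scores; it is not how Foldit or any real protein-folding code searches. The greedy version never reaches the answer, and the depth-first version that tries the best-scoring child first only finds it after exhausting the whole dead-end branch:

```python
from typing import Dict, List, Optional

# Made-up search tree: each node lists its children.
TREE: Dict[str, List[str]] = {
    "start": ["A", "B"],
    "A": ["A1", "A2"],
    "B": ["B1"],
    "A1": [], "A2": [], "B1": [],
}
# Hypothetical heuristic scores: the branch that actually holds the answer looks worst.
SCORE: Dict[str, float] = {"A": 0.9, "B": 0.2, "A1": 0.6, "A2": 0.5, "B1": 0.1}
GOAL = "B1"


def greedy(node: str) -> List[str]:
    """Always take the best-scoring child and never reconsider."""
    path = [node]
    while TREE[node]:
        node = max(TREE[node], key=lambda child: SCORE[child])
        path.append(node)
    return path


def ordered_dfs(node: str, goal: str, visited: List[str]) -> Optional[List[str]]:
    """Depth-first search, best-scoring child first, backtracking out of dead ends."""
    visited.append(node)
    if node == goal:
        return [node]
    for child in sorted(TREE[node], key=lambda c: SCORE[c], reverse=True):
        found = ordered_dfs(child, goal, visited)
        if found is not None:
            return [node] + found
    return None


print("greedy path:", greedy("start"))       # commits to A and never reaches B1
seen: List[str] = []
print("with backtracking:", ordered_dfs("start", GOAL, seen))
print("nodes expanded:", seen)               # the whole A subtree is exhausted first
```

In a real folding landscape the dead-end subtree can be astronomically large, which is the comment’s point: a human who simply doubts the score can jump straight to branch B.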

Umm, in principle yes, but this is usually a result of “hard-coded” logic. One of the things being worked on, especially in language but in other areas as well, such as navigation, is systems that operate not on “X = Y” logic but on something more like “X may = Y, with 94% certainty”. This allowed language machines like the CYC project to derive novel connections between words on their own, by parsing how likely it was, in context, for a word to mean something similar to words already in memory. Unfortunately, it also got told stupid things, like the idea that humans have souls, and derived idiocies from them, like calling humans ‘partially tangible’, or some such gibberish, when talking about them. It also never progressed beyond a 4-year-old level of language, but that may have had more to do with the processor speeds of the time, the complexity of retrieval, and storage limits.
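
A minimal sketch of the difference between a hard-coded “X = Y” and a soft “X may = Y, with some certainty”: here two words are judged similar by how much their surrounding contexts overlap (Jaccard overlap of context-word sets). The corpus, window size, scoring method and threshold are all illustrative assumptions; this is not a description of how CYC actually worked:

```python
from __future__ import annotations

from collections import defaultdict


def context_sets(sentences: list[str], window: int = 2) -> dict[str, set[str]]:
    """Map each word to the set of words seen within `window` positions of it."""
    ctx: defaultdict[str, set[str]] = defaultdict(set)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            ctx[word].update(words[j] for j in range(lo, hi) if j != i)
    return dict(ctx)


def similarity(a: str, b: str, ctx: dict[str, set[str]]) -> float:
    """Jaccard overlap of context sets: 1.0 = identical contexts, 0.0 = none shared."""
    sa, sb = ctx.get(a, set()), ctx.get(b, set())
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def maybe_same(a: str, b: str, ctx: dict[str, set[str]],
               threshold: float = 0.75) -> tuple[bool, float]:
    """Soft 'X may = Y' judgement: a verdict plus the confidence behind it."""
    score = similarity(a, b, ctx)
    return score >= threshold, score


corpus = ["the cat sat on the mat", "the dog sat on the mat", "the car drove on the road"]
ctx = context_sets(corpus)
print(maybe_same("cat", "dog", ctx))
print(maybe_same("cat", "car", ctx))
```

The same mechanism also illustrates the “told stupid things” problem: feed the corpus nonsense and the associations it derives will be confidently nonsensical.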

It’s hard to say how much it could have “thought”, but it could be asked a question about something and derive a fairly sound answer. It could adjust its own perception of the information it was given, so that it knew how the words really related, and it could ask its own questions to check whether it had made a correct association when constructing new phrases or logical connections, or when working out, from experience and context, the meaning of new, undefined words.

The reason computers crash from errors is that it’s a lot easier to design something to handle “known” conditions than to design something that handles unexpected results. The former requires clear, well-defined conditions; the latter requires adaptability and some capacity to handle uncertainty. This isn’t impossible for a computer; it’s just not what most applications require, need, or would be much helped by. For one thing, it would slow things down, since the system would have to decide whether the new inputs “could be” parsed, and how trustworthy it found them, based on what it had learned and knew. But even this is, on a basic level, not possible, since the hardware can’t rewire itself that way, even if the software can be written to do so.
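
A minimal sketch of that contrast, using number parsing as a stand-in: the strict version handles only the known format and raises on anything else, while the tolerant one tries looser interpretations and attaches a confidence to its answer. The fallback rules, the small word list and the confidence values are arbitrary assumptions chosen for illustration; note the extra work the tolerant path does on every unexpected input, which is the slowdown mentioned above:

```python
from __future__ import annotations


def strict_parse(text: str) -> float:
    """Handles only the 'known condition': a plain decimal number."""
    return float(text)  # raises ValueError on anything unexpected


# Hypothetical vocabulary for the tolerant parser's last-resort guess.
NUMBER_WORDS = {"one": 1.0, "two": 2.0, "three": 3.0, "ten": 10.0}


def tolerant_parse(text: str) -> tuple[float | None, float]:
    """Try progressively looser readings; return (value, confidence)."""
    cleaned = text.strip()
    try:
        return float(cleaned), 1.0                   # the expected format
    except ValueError:
        pass
    try:
        return float(cleaned.replace(",", "")), 0.8  # e.g. "1,200"
    except ValueError:
        pass
    word = cleaned.lower()
    if word in NUMBER_WORDS:
        return NUMBER_WORDS[word], 0.6               # e.g. "two"
    return None, 0.0                                 # give up, but don't crash


for raw in ["42", "1,200", "two", "??"]:
    try:
        strict = strict_parse(raw)
    except ValueError:
        strict = "crash (ValueError)"
    print(f"{raw!r:8} strict={strict!s:20} tolerant={tolerant_parse(raw)}")
```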