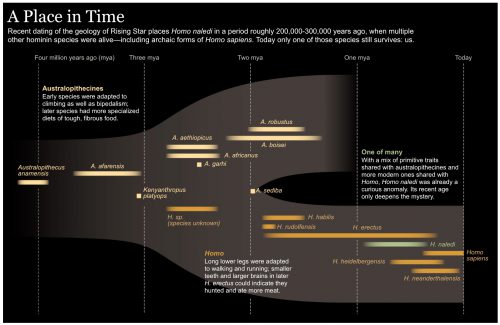

When I first heard about Homo naledi, the question at the top of my mind was “How old is it?” It was a hominin that looked fairly primitive, with a small brain the size of a gorilla’s, yet it was found in a mass “grave”, and part of the mystery was how so many dead hominins ended up in this difficult-to-reach, hidden cave system in South Africa. The authors didn’t report a date. Speculation ran from 3 million years old to 300 thousand years old, both dates seeming extreme and unlikely.

Now we have a date: between 236,000 and 335,000 years old. Astonishing.

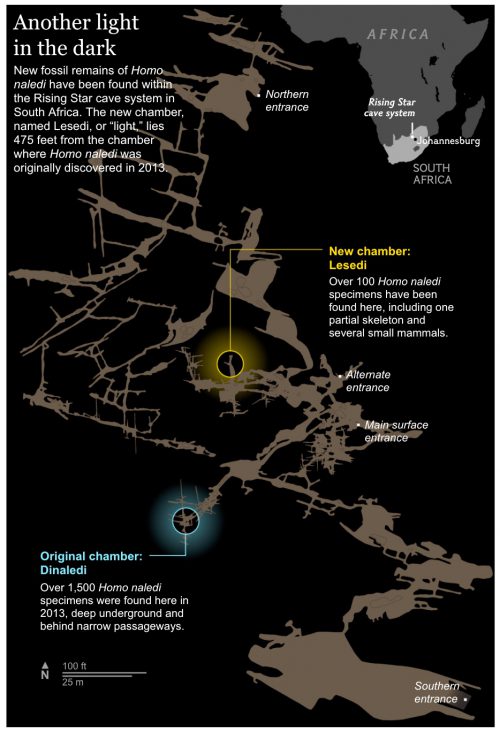

That’s really young. Furthermore, they’ve found another chamber in the cave network with even more bones.

All indications are that this was a thriving population of little, primitive people.

The bones, remarkably, show few signs of disease or stress from poor development, suggesting that Homo naledi may have been the dominant species in the area at the time. “They are the healthiest dead things you’ll ever see,” said Berger.

Homo naledi stood about 150cm tall fully grown and weighed about 45kg. But it is extraordinary for its mixture of ancient and modern features. It has a small brain and curved fingers that are well-adapted for climbing, but the wrists, hands, legs and feet are more like those found on Neanderthals or modern humans. If the dating is accurate, Homo naledi may have emerged in Africa about two million years ago but held on to some of its more ancient features even as modern humans evolved.

It’s still a mystery how all these bones ended up in the caves. These don’t seem to be ceremonial burials; it’s more like they were chucking their dead down some hole to drop them in a deep cave.