You should read the whole comic — it’s painfully accurate.

I’ve seen academics form publication rings: not just agreeing to cite each other’s work, but making each other co-authors on all of their papers. I’ve witnessed colleagues put each other down by snarling impact factors at each other. Academia does get ugly.

That said, I don’t know that the remedies suggested in the comic are practical. Maybe the first one would work: having standards and requirements for data sharing, the better to confirm the work. ‘No scientist could possibly object to that,’ I simper naively. Unless there are patents involved.

The second is for universities to change their hiring policies to encourage greater breadth, which would be great, except that the grand poobahs who administer universities tend to be completely disconnected from both the research and teaching going on.

The third is for the funding agencies to wake up and stop throwing all the money to the big flashy projects. There are things like that going on right now, but you know who has the most power in peer review? The scientists who do big flashy projects.

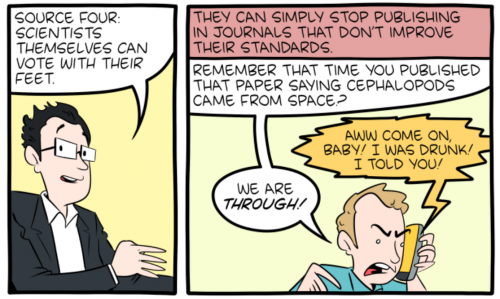

I like this one.

Heh. Where have I heard of that one? The catch there is that that was published by another kind of ring of crackpots, publishing in an obscure specialty journal edited by more crackpots. They can do that. There’s nothing illegal to stop them, and it’s all encouraged by Elsevier, which only cares about money.

You might notice that more than a few people jumped on that as one of the dumbest papers ever written, but there are no academic incentives at all to do that — you can’t get tenure by being good at cleaning up the droppings of bad science, and if anything, it counts against you. Maybe that’s something that could be fixed, but I’m not counting on it.

So, yes, read it, great comic, very accurate, but maybe too optimistic. We need good goals to work towards, though.

Circular referencing was always the big knock on Marxist theory: everyone quoted from everyone else and it became a closed system that was very resistant to letting any “fresh air” into the conversation.

But it’s hard to think of any profession that’s not being crapified right now. Publish or perish isn’t much different than the demand from investors for constant increases in profit. It’s just not sustainable: not in business, not in politics, and certainly not in science.

I liked the comic a lot, but it was missing one thing: The reason WHY this is happening. A clear call out of a horrible idea, that science should be run like a business.

To be fair, it’s already a very long comic, and a discussion of this may have been too much.

In other words, fucking capitalism strikes again.

Ever heard of this thing called a “union”?

You can say “capitalism” but it’s about status more than money. I also don’t see an easy solution, because any system of awarding points can be gamed, and publication count is just a way to award points for getting recognition and tenure.

I remember a professor at my computer science department (not my advisor) who I do not accuse of doing anything unethical, and in fact he produces a lot of good publications (and was a great lecturer and problem solver), but I observed his m.o. for bolstering publication count, which is probably very common. He would see what was “hot” at the latest conference and just rebrand what he or his students were working on. Remember that curvilinear doohickey algorithm? Let’s submit a paper on amortized analysis of incremental curvilinear doohickey. The conference deadline is next Tuesday. Get busy and I’ll see what grant will pay for the trip.

The above approach doesn’t produce “bad” research in the sense of being an incorrect result. It’s not experimental, and in this case, there are mathematical proofs that hold up. What it does, however, is flood the field with a lot of work that just isn’t worth reading. Once you get the idea that concepts can be combined, you could make up titles of many papers that never need to be published. The problem is that to succeed in a tenure track position, you actually need them to be published.

If it was just about money… well I gave up (for a couple reasons) on the idea of ever succeeding as a professor. But I probably have a higher net worth as a result, and a less stressful life in general. Though I hate to quote Kissinger, I like his observation that the fighting in academia is so bitter because the stakes are so low. At least that’s how it appears to me.

None of this is a secret to anyone in any field. Competent academics can separate the wheat from the chaff. The peer review system as such does not tell you if a result is worthwhile, but just ask a professor you trust in the field, and they can probably tell you just by reading the abstract.

me@5 It looks like I’m alluding to Sayre’s law, which thankfully did not originate with Kissinger.

One of SMBC’s suggestions has a potential problem: “The best advice is to check what other scientists are saying.” The majority aren’t always right. Sometimes the “out there” theories turn out to be the best ones, or credible theories should be tested until proven impossible.

Scientists long dismissed Barry Marshall and Robin Warren’s claims about the causes of ulcers, but they ended up with a Nobel Prize for discovering H. pylori bacteria. Scientists used to think it was counterintuitive to use ceramics as superconductors, but it turns out ceramics are the best material. And you can find advocates of Dark Matter who try to dismiss MOND instead of trying to prove it or test it.

Intransitive@7 An idea can sound crazy and still be true, but the fact that it sounds crazy, all other things equal, is usually evidence that it’s not true.

I agree that it would be extremely damaging to discard everything that goes against consensus. I think in a case like H. pylori causing ulcers, another researcher could be skeptical but still very interested in seeing evidence that it’s true. They might not consider it likely enough to pursue themselves, but I don’t think there is a conspiracy to stop people from rocking the boat. That’s why you get awards for results like that–provided you can demonstrate them to enough researchers to move the consensus.

I’m not saying it’s easy either, because it can be academic suicide to go off on a tangent that does not fit the consensus and turns out to be incorrect. So the incentives are there just to work at the margins. Again, it’s part of why people get Nobel prizes. You have to take a risk and find something that other very talented people missed.

@7

I read this and it’s way outside anything I know, but it’s an interesting example. My gut reaction is that anything based on a threshold is not the underlying explanation since it sounds so inelegant. But that’s an aesthetic, not scientific argument.

If MOND can be stated fairly simply and continues to match new observations, then nothing stops it from being a valid scientific model. It could be an empirical model like Hooke’s law of springs. Hooke’s law works approximately within range, well enough to calculate periodic motion, and says nothing about non-linear effects or the fact that if you pull the spring hard enough it will snap. Hooke was certainly aware of this himself, but it didn’t stop him from formalizing the ideal case.

If the underlying explanation is dark matter and some correlation between dark matter and galaxy size explains the observed motion, it doesn’t invalidate MOND so much as give an underlying cause to an empirical formula. That may not make a physicist happy, but physicists and cosmologists are lucky in working in a domain where they might really find underlying causes instead of modeling empirical results.

Intransitive @7: Quibble: most high-temperature superconductors are ceramics.

But MOND does not belong in a list of theories which have been shown to be true.

Just brain farting here, I haven’t thought this all through:

I think there can be a big role for teaching in here. Have students read papers, and actually try to replicate the results. Have them publish, ideally in a respectable peer-reviewed journal, but if not, simply in a for-students-only journal run by the university, and peer-reviewed by the students and the professor (but still online and citable).

It’s a several-birds-with-one-stone situation: you get replication studies, the teaching professor (probably) is co-author on the more important studies (meaning better publication/citation scores for those who mostly focus on teaching), and students actually start reading papers critically and get experience writing, publishing, and peer-reviewing.

This would of course still require a lot of studies to publish their data, as collecting data may be out of the scope of some lab courses, but now there’s an incentive to do so as they’d get a lot of citations in the “University of … students’ journal of failed/successful replications”.

The underlying problem is similar to that of FB and Google algorithms.

Instead of a direct measure of a paper’s content strength and importance, the methods use “tokens of agreement”.

Those tokens are like ticks of approval rather than facts about the paper.

Citations are just links. They don’t have to carry reasons. Appearances in journals are just body counts; they don’t have substantive meaning by just appearing multiple times.

Imagine a protocol of citation vetting, however, where each citation must be explained by 3 reviewers independently. The readers then check each explanation out and agree or point out why the citation is a dud and something is wrong. That is the beginning of a system that would make citations less gamed.

“Maybe the first one would work: having standards and requirements for data sharing, the better to confirm the work. ‘No scientist could possibly object to that,’ I simper naively. Unless there are patents involved.”

I’m involved in some EU-financed research projects and they usually require that any publications be public access and that data is made accessible, too. I’m not sure if the second requirement is universal for all projects. In our case we’re churning out a lot of 3d volume data and a 1 GB data set (no replication) is considered small. (We’re always trying to trim things to avoid blowing up our local storage.) Now projects like these can have more than a dozen participants, all generating their own data sets – with repeat acquisition for statistics etc. – which can eat up a huge chunk of storage and bandwidth, unless you want to start mailing HDDs to anyone who asks. It’s not impossible but making research data accessible (and useable) to anyone who’s interested can be tricky.

At any rate, that’s just the availability sorted. Now go find someone willing to download a few hundred GBs of strangers’ data trying to replicate or see how some conclusions or results came about. Maybe if that someone has a bone to pick with the results, otherwise I doubt many would ever bother. Not when they could be doing their own research and publications on something else instead. Unless someone’s hoping to overturn something big there doesn’t seem to be much incentive at all. It’s a thankless task.

Capitalism can be blamed for many things, including Elsevier’s parasitic hold on scientific publication, but I think this particular problem stems from a different flaw: when managers and politicians decide their need for a lazy Key Performance Indicator is more important than making sure the KPI reflects the desired outcome or how the KPI will affect working conditions for people other than themselves.

I know you weren’t defending this, PZ, but patents are NOT an excuse to hold back data. By definition, securing a patent requires the invention be placed on a public register and every patent application requires an explanation of the invention. To quote the US Patent and Trademark Office, “The specification must include a written description of the invention or discovery and of the manner and process of making and using the same, and is required to be in such full, clear, concise, and exact terms as to enable any person skilled in the art or science to which the invention or discovery appertains, or with which it is most nearly connected, to make and use the same.” Furthermore, the application requires the applicant to agree that they have “no objection to the facsimile reproduction by anyone of the patent document or the patent disclosure, as it appears in the Patent and Trademark Office patent file or records.” (As an aside, the very word patent comes from the Latin patere, “to lay open”, because that’s how the system works.)

Anyone using patents as a reason to keep scientific data secret is just trying to avoid data scrutiny, not protect a patent.

The only ethical reason to withhold data is if it could be used to identify vulnerable people. Even then, appropriately de-identified data should be made available. And if this is impossible, it should raise serious concerns about the ethics of publishing the paper in the first place.

chrislawson@14

I’ll give you another “lazy Key Performance Indicator” (emphasis on lazy) created by colleges and beloved by HR departments: the GPA. I’ve been teaching at the college level for 40 years and anyone who thinks that you can boil down a multi-year college experience to a two digit number is dumber than a box of rocks. It makes for a very convenient metric for HR departments and hiring managers but it makes students behave in irrational ways. And sure, you might be able to make broad statements that given two students with the same degree from the same institution, the one with a 3.8 probably has a better grasp of the material than the one with a 2.5, but once you start comparing different institutions and the backgrounds/situations of the students, even that goes out the window. Besides, it tells you nothing about how well that person would fit into your company. But I’ve seen students tie themselves into knots because if they didn’t get a 3.X, company Y won’t interview them or school Z won’t consider them for transfer or a graduate program.

I teach engineering at a community college. The emphasis is on teaching, not on research, so we don’t live by “publish or perish”. Publishing is nice but it’s not seen as a requirement. As a consequence, many of my colleagues are very much focused on the success of their students, which is the way I think it should be.

I find that both the above-cited Lazy Performance Indicators are so correct. Tenure, in my experience, becomes more a matter of “deans (or department committees and heads) who can count, but can’t read”, i.e., they look only at the number of publications in the list without taking the trouble to read either the titles of the papers or the names of the journals.

And, it is so true that a GPA is pretty meaningless! Chem departments and medical and other professional schools found that out the hard way. There is, in fact, no correlation–none at all–between an undergrad GPA and the student’s success in chem grad school. Medical schools have long been confounded by students with 4.0 GPAs who are completely incapable of learning the skills required. Now, admissions to these almost wholly rely on the letters of recommendation: if they don’t say that the candidate has “initiative”, “ability to work through difficulties”, and other euphemisms for “can perform”, it doesn’t bode well. I keep trying to assure the students who plan to apply, but they never believe me. And, as said, it’s true that some companies still cling to this fantasy.

I find that the literature in my field, even in the most prestigious chemistry journals, is becoming more and more boring, as we have discovered what’s called the “Least Publishable Unit”, i.e., chop your research up into as many papers as possible. Publish a paper that contains one result, another that contains the next result, etc., to squeeze the maximum number of papers out of one experiment. Papers with one result are really boring, and you have to keep waiting and waiting to find out eventually what the research accomplished. If you can maintain interest that long.

I know of academics who’ve formed “reviewing rings”, due to the journals’ increasingly-common tendency to ask authors to suggest reviewers. The journals’ editors naively, but also lazily, think that then they can get reviewers who are familiar with the field of the paper. Not so: what they get is rings of friends who always suggest each other, with the understanding that your friends will always recommend accepting your papers, and vice versa. This leads to ever-smaller Least Publishable Units. It’s less often the capitalism of grant money or industrial money, in my experience.

P.S. the performance metric that has the highest correlation with success in chemistry grad school is the undergrad’s GRE Verbal test score. Not the chemistry GRE, or Math and Analytical Thinking. The Verbal score (or, whatever they call it now, the writing part). Tells you something, doesn’t it?

@garnetstar 17,18

I want to figure out how to mesh that kind of information with my background at some point. I went to see a neurologist in year 3 of graduate school and learned that I still had the adhd, and learned about the tourette syndrome. I was in shock and already depressed, and unaware of the depth of my social anxiety and a lot of other things in my life that I’m getting more awareness of.

I’m angry and resentful, and I can see where my conservative, military, religious culture probably messed me up too. I’m still working through it and figuring out what to do with my feelings. Do you know how these areas might intersect or do you know anyone who does?

@11 robert79:

They do that with lawyers, why not scientists? Moot court is a thing. You go through a case that’s already been solved and do your own work to try it again. If there could be a push among the colleges and universities to have a “Science Review” sort of journal that goes through that, it would help a lot. The problem, of course, is finding funding for it. A lot of these studies had to pull teeth to get funding to do it in the first place. Where’s the money going to come from to do it a second time? If it’s a hot topic like cold fusion, there are any number of people who will be happy to fund your project to try to replicate the results. But if it isn’t going to make national headlines, and stands a really good chance of returning negative results, then a lot of people are going to be asking why their money is going toward what they think is the research version of “volcano monitoring.”

Hi Brony @19, I’d love to help you with any info I know. And, I’m sorry to hear about your difficulties.

But I’m not sure what you’re asking? About the lack of correlation of GPAs with success in chem grad school, or that the Verbal GRE score best predicts success in chem? I guess that you don’t mean the Least Publishable Unit and boring chem literature.

So, if you could just be specific about the kind of information, I’d be glad to russell up any I can!

garnetstar@21

I think you mean “rustle” but I will file that away in case I need a philosophy pun.