Who knew we could find unity in the cause of killing children? Right-wing loonies and left-wing moonbats have been working together to erode food safety, loosen milk pasteurization laws, and allow ideological weirdness to be poured down the throats of innocent children. But at least it’s bipartisan!

The contentious belief that raw milk may be healthier than pasteurized milk is a bipartisan one, however: it has captured the imagination of, as The Atlantic put it in a 2014 story, “urbanite foodies (read: progressives).” That same year, Joel Salatin, whom the publication referred to as a “food and farm freedom celebrity,” told Politico that it was nice to have some liberals join the fight to mainstream raw milk.

“When I give speeches now,” he said, “the room is half full of libertarians and half full of very liberal Democrats. The bridge is food.”

You know, we actually have data. We know that childhood mortality was greatly reduced when we required that milk be pasteurized. It’s a relatively easy parameter to measure: when you require pasteurization vs. allow raw milk to be sold, how many dead kids do you stack up in each category? We know the numbers.

The CDC would want us to remind you here that, yes, you are allowed to take risks in private, but raw milk is about 150 times more likely than pasteurized milk to cause a foodborne illness outbreak.

When we were raising kids, we made the decision to exclude Salmonella, E. coli, and Listeria from their diet as much as possible. It wasn’t just the cost and trouble of paying for funerals, but the nuisance of infants with diarrhea. Trust me, it’s no fun for anyone.

If you’d like to see an entertaining discussion of the thrills of raw milk, Talia Lavin has you covered.

Pasteurization changed the dairy game. By 1911, Chicago and New York had mandated milk pasteurization in commercial operations, with other major cities quickly following suit; by 1936, 98% of milk in the United States was pasteurized. This coincided with lots of other medical discoveries and improvements in public hygiene, but the milk-pasteurization push had particularly drastic effects: between 1890 and 1915, infant mortality dropped by more than half. By midcentury, babies drinking swill milk and dying of diarrhea was largely a thing of the past. Most people would agree that this is, generally, a good thing. I personally drink milk daily with my coffee; I am glad it doesn’t come with a side of typhoid.

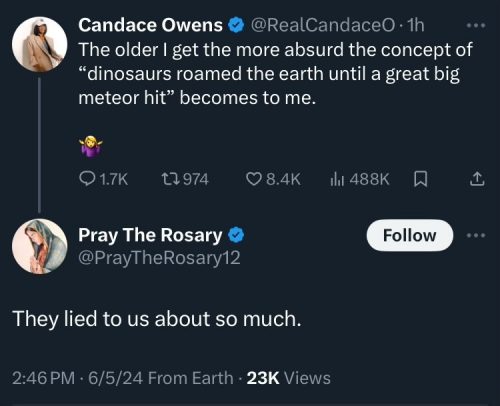

I said that this movement was bipartisan, but these days it’s fueled mostly by right-wing “own the libs” influencers and nutcases.

It’s just that the contemporary opponents of pasteurization, the “raw milk” movement, as they call themselves, are so fucking dumb, and so knee-jerk about it. The movement is endorsed by such disparate grifters as Gwyneth Paltrow; RFK Jr.’s erstwhile running mate, Nicole Shanahan; Christian TikTokers; and the existentially stifled Mormon tradwife who is the wanly smiling face of Ballerina Farm. The overwhelming majority of recent raw-milk converts, and its loudest current evangelists, are on the far right: over in the raw-milk aisle you’ll find an assortment of right-wing Fitness Guys with steroidal vasculation filming themselves chugging raw milk, alongside Alex Jones, QAnon influencers, the CEO of racist Twitter clone Gab, and a motley assortment of also-ran far-right Congressional candidates, plus organizations like the Farm to Consumer Legal Defense Fund and the Farm and Ranch Freedom Alliance.

Now normally I might be willing to shrug off this suicidal insanity — let the kooks voluntarily weed themselves out of the population by swilling contaminated food — except that the primary victims of this lunatic movement are kids who have no idea of the risks, and who are simply expressing a natural trust in what Mommy and Daddy tell them. The problems arise when Mommy and Daddy are named Gwyneth Paltrow and Alex Jones. That’s who the food safety laws are aimed at.