Last month, I discussed how Netflix has a tendency to give us queer clickbait: promo images with men staring at each other, despite little to no same-sex relationship content. I 100% hope that Netflix data scientists see that article, but I would never expect a useful public response. The reason is that we already know what Netflix said last time.

Netflix has denied altering a viewer’s experience depending on their race.

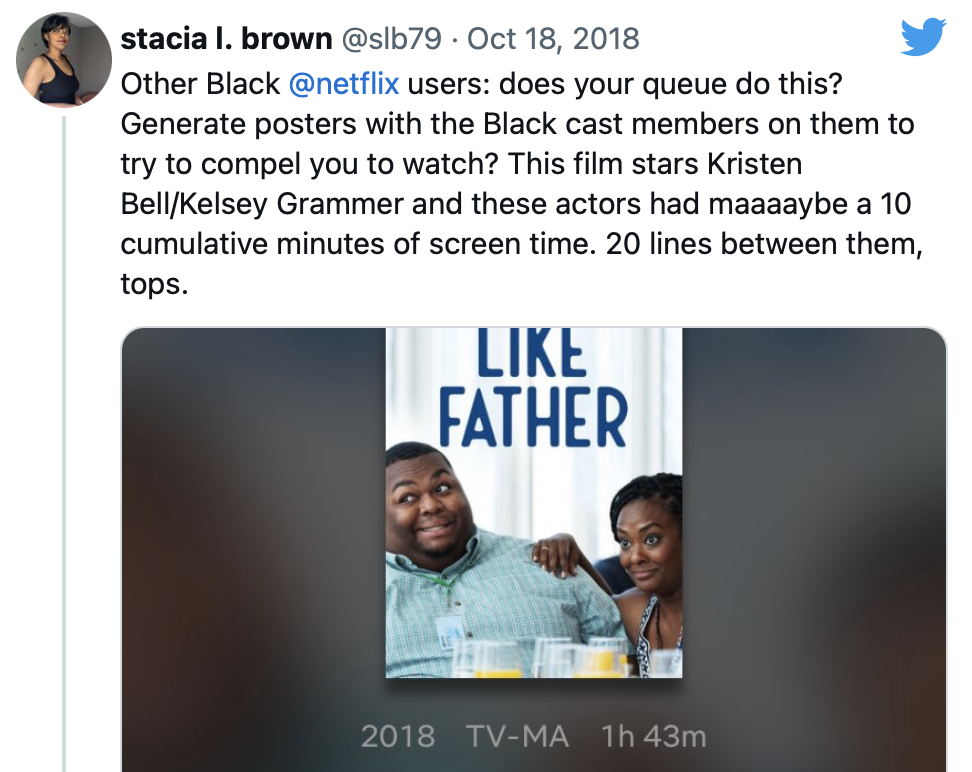

It’s been accused of “misleading” black users by showing promotional shots of black cast members in films and TV shows – even if they had a minor role.

Netflix told Newsbeat: “We don’t ask members for race, gender or ethnicity so cannot use this information to personalise their individual experience.

“The only information we use is a member’s viewing history.”

Here’s why Netflix’s response is laughable. I completely believe that Netflix’s algorithm is not based on race, gender, ethnicity, or orientation. I believe that they only use a member’s viewing history. But that doesn’t make it any better. The primary accusation is not that Netflix is choosing artwork based on race; it’s that people are seeing misleading promo images. The fact that Netflix does the bad thing without ever using demographic information only demonstrates that they don’t need demographic information to do the bad thing.

I want to write about AI discrimination, and this is a good case study to start with. It’s an illustration of several important points, starting with

Discrimination is not inherently bad

I wrote an article complaining about the proliferation of promo images with men staring at each other, but don’t get me wrong: I want that. I like seeing LGBT titles all over my recommendations, and I like that Netflix discriminates in my favor by giving me what I want. One might say that’s the entire point of the promo image algorithm.

The issue is I just don’t believe the promo images. Too often they are misleading and unrepresentative. So I don’t think the solution is to prevent the algorithm from discriminating. We want it to do that. We just want it to do it better.

The fact that discrimination is sometimes good makes it difficult to regulate. Not that I advocate regulating something so paltry as movie recommendations, but this is a problem in other domains, such as finance. Some discrimination is legal, and some is not, but how do we make a policy that draws the line correctly?

Algorithms can discriminate indirectly

How does Netflix discriminate without using demographic data? The answer is right there: they use data on viewing history.

If you have a history of watching lots of movies with Black leads, you’re statistically more likely to be Black. Nowhere does the algorithm make any guesses about your race, nor does it need to. It would treat a White person with the same viewing history the same, but statistically that’s less likely to happen.
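To make the proxy effect concrete, here is a toy sketch. Everything in it is hypothetical: the `Viewer` records, the `black_lead` tag, and the `pick_image` rule are made up for illustration and are not how Netflix actually chooses artwork. The rule reads only viewing history, yet the groups still see the Black-cast image at different rates, while two viewers with identical histories get identical treatment.

```python
from dataclasses import dataclass


@dataclass
class Viewer:
    name: str
    group: str          # hidden attribute: pick_image never reads it
    history: list[str]  # hypothetical tags of titles watched


def pick_image(history: list[str]) -> str:
    """Hypothetical rule that chooses artwork from viewing history alone."""
    if not history:
        return "default_image"
    share = history.count("black_lead") / len(history)
    return "black_cast_image" if share > 0.5 else "default_image"


def rate_by_group(viewers: list[Viewer], image: str) -> dict[str, float]:
    """Fraction of each group shown `image` (group used for auditing only)."""
    counts: dict[str, tuple[int, int]] = {}
    for v in viewers:
        shown, total = counts.get(v.group, (0, 0))
        counts[v.group] = (shown + (pick_image(v.history) == image), total + 1)
    return {g: shown / total for g, (shown, total) in counts.items()}


# Made-up viewers; "a" and "e" have identical histories but different groups.
viewers = [
    Viewer("a", "Black", ["black_lead", "black_lead", "drama"]),
    Viewer("b", "Black", ["black_lead", "comedy", "black_lead"]),
    Viewer("c", "Black", ["drama", "comedy", "action"]),
    Viewer("d", "White", ["drama", "action", "comedy"]),
    Viewer("e", "White", ["black_lead", "black_lead", "drama"]),
    Viewer("f", "White", ["comedy", "drama", "action"]),
]
```

With this invented data, `rate_by_group(viewers, "black_cast_image")` gives 2/3 for Black viewers and 1/3 for White viewers, even though `pick_image` never looks at `group`.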

In fact, the algorithm does not even need to know the race of the actors, nor the race of the people in the promo images. It’s possible Netflix has that data and uses it in their algorithm, but it’s also possible that they don’t.

I don’t know what particular algorithm they use for this decision, but you want an algorithm that can identify segments of people and the segments of promo images that work best for them. If you have 10 people and 10 images, the algorithm might determine that persons 1, 4, and 7 are interested in images 3, 6, and 7. Maybe the algorithm identified anime fans and anime images, or maybe it identified Black viewers and images of Black actors. The algorithm doesn’t know. It doesn’t need to know.
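Here is a minimal sketch of that kind of segmentation, assuming a hypothetical click matrix for 10 people and 10 images, numbered from 1 as in the example. It uses a simple greedy grouping by Jaccard similarity of clicked-image sets as a stand-in for real co-clustering methods; it is not Netflix's algorithm. The output is just sets of numbers, with no idea what they represent.

```python
from collections import Counter


def jaccard(a: set[int], b: set[int]) -> float:
    """Overlap between two click sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def segment(
    clicks: dict[int, set[int]], threshold: float = 0.5
) -> list[tuple[set[int], set[int]]]:
    """Group people with similar clicks, then attach the images that a
    majority of each group clicked. Returns (people, images) pairs."""
    groups: list[set[int]] = []
    for person in sorted(clicks):
        for members in groups:
            seed = min(members)  # first person placed in this group
            if jaccard(clicks[person], clicks[seed]) >= threshold:
                members.add(person)
                break
        else:  # no similar group found: start a new one
            groups.append({person})
    segments = []
    for members in groups:
        counts = Counter(img for p in members for img in clicks[p])
        images = {img for img, n in counts.items() if 2 * n > len(members)}
        segments.append((members, images))
    return segments


# Hypothetical clicks: person -> images they clicked (1-indexed).
clicks = {
    1: {3, 6, 7}, 2: {1, 2}, 3: {8, 9}, 4: {3, 6, 7, 10}, 5: {1, 2, 5},
    6: {4, 8, 9}, 7: {3, 6}, 8: {1, 2}, 9: {8, 9, 10}, 10: {5},
}
segments = segment(clicks)
```

Here the first segment comes out as people {1, 4, 7} paired with images {3, 6, 7}. Nothing in that output says whether the segment is anime fans or Black viewers; someone would have to look at the actual people and images to find out.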

And this can happen without Netflix data scientists knowing it. You’d have to look at those viewers and those images to understand what the numbers represent. There may simply be too many images and categories to check them all. The easiest way to know is to look at demographic statistics. But oops, Netflix doesn’t collect that data!

“Neutral” choices can have disparate impact

Deliberately or not, Netflix chose to use an algorithm that shows a lot of clickbait. Presumably this is a problem that affects all users, but I’m going out on a limb to suppose that it disproportionately impacts certain minority groups, such as Black viewers or LGBT viewers. It’s just really hard to imagine that Netflix would show my brother a bunch of promo images that would mislead him into thinking a Mel Gibson film is actually an anime.

This is an example of a neutral choice that isn’t targeted at any group in particular, but nonetheless disproportionately impacts certain groups. We call that disparate impact.
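One hedged way to check for disparate impact is to compare how often each group gets the harmful outcome under the same policy. This sketch assumes a hypothetical audit log of (group, was_misleading) impressions; the function names and numbers are illustrative only. Note that it needs a group label on every impression, which is exactly the demographic data Netflix says it doesn't collect.

```python
from collections import defaultdict


def misleading_rates(log: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of each group's impressions that used misleading artwork.

    The group label is used only here, for auditing; the (hypothetical)
    recommender that produced the log never saw it."""
    shown: dict[str, int] = defaultdict(int)
    misled: dict[str, int] = defaultdict(int)
    for group, was_misleading in log:
        shown[group] += 1
        misled[group] += was_misleading  # True counts as 1
    return {g: misled[g] / shown[g] for g in shown}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Highest group rate divided by the lowest; 1.0 means equal impact."""
    highest, lowest = max(rates.values()), min(rates.values())
    if highest == 0:
        return 1.0  # nobody was misled, so there is no disparity
    return float("inf") if lowest == 0 else highest / lowest


# Hypothetical audit log: one clickbait policy applied to every viewer.
log = (
    [("LGBT", True)] * 3 + [("LGBT", False)] * 7
    + [("other", True)] * 1 + [("other", False)] * 9
)
rates = misleading_rates(log)
ratio = disparity_ratio(rates)
```

In this toy log, LGBT viewers see misleading artwork 30% of the time versus 10% for everyone else, a ratio of about 3, even though nobody wrote a rule targeting either group.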

Another neutral choice that would cause disparate impact is having no personalization at all. We’d all see the same set of popular movies, made to appeal to the average everyman, which is to say straight White people. Disparate impact is quite difficult to avoid.

I believe algorithms can make it better, but they won’t automatically do so.
