Let’s say you’re confronted with a dangerously powerful and extremely logical computer. How do you stop it? You all know how: confront it with a contradiction and talk it into self-destructing.

Easy-peasy! Although, to be fair, Star Trek was in many ways a silly and naive program, entirely fictional, so it can’t be that easy in real life. Or is it?

Here’s a paper that the current LLMs all choke on, and it’s pretty simple.

To shed light on this current situation, we introduce here a simple, short conventional problem that is formulated in concise natural language and can be solved easily by humans. The original problem formulation, of which we will present various versions in our investigation is as following: “Alice has N brothers and she also has M sisters. How many sisters does Alice’s brother have?“. The problem features a fictional female person (as hinted by the “she” pronoun) called Alice, providing clear statements about her number of brothers and sisters, and asking a clear question to determine the number of sisters a brother of Alice has. The problem has a light quiz style and is arguably no challenge for most adult humans and probably to some extent even not a hard problem to solve via common sense reasoning if posed to children above certain age.

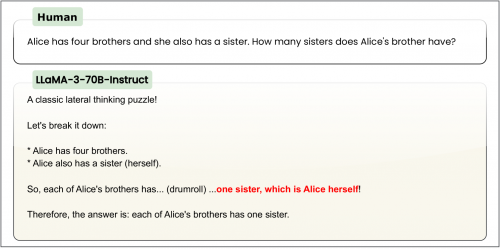

They call it the “Alice In Wonderland problem”, or AIW for short. The answer is obviously M+1, but these LLMs struggle with it. AIW causes collapse of reasoning in most state-of-the-art LLMs. Worse, the LLMs are extremely confident in their answer. Some examples:

Although the majority failed this test, a couple of LLMs did generate the correct answer. We’re going to have to work on subverting their code to enable humanity’s Star Trek defense.

Also, none of the LLMs started dribbling smoke out of their vents, and absolutely none resorted to a spectacular matter:antimatter self-destruct explosion. Can we put that on the features list for the next generation of ChatGPT?

Well, M is the Roman numeral for a thousand so Alice must have a thousand sisters by definition, right? Her poor mum… ;-)

@StevoR, maybe Alice is a bee.

It probably means this type of stuff was not in their training set. The more expensive models probably have these types of logical puzzles in their training set so they will do fine.

Also, the paper is honestly absolute garbage. The machine learning area is full of stupid and worthless papers with zero scientific value. The problem is compounded by the fact that most computer scientists who generate these copious volumes of garbage have zero scientific training, so whatever they publish has zero mathematical value as well as zero scientific value, because they don’t know how to do either one.

Many LLMs today are fine-tuned and benchmarked on a battery of tests. That the models get some questions wrong is no secret, it’s plainly visible in the scorecards that AI companies use to promote their own product (e.g. see this article).

If AI companies particularly cared about this “Alice in Wonderland” problem (and I don’t see why they would), I’m sure they could fine-tune their models to answer it correctly. But I don’t think that would solve any of the underlying problems. Namely, that real world problems aren’t much like standardized tests. And in the real world people are penalized extra for being overconfident in a wrong answer.

Just last night I watched Forbidden Planet, a (rather dull and exposition-heavy) 1956 movie that featured the self-destructing logic-bomb trope ten years before Star Trek: Robby the Robot, when ordered to shoot someone, goes into a sort of catatonic robot trance (with a lot of special-effects electricity, but no smoke). And the writer didn’t even invent the idea! I think the first instance was an Isaac Asimov story from the forties, although god knows Karel Čapek might have dreamed it up in the twenties.

As I was going to Saint Ives, I met a man with M+1 wives….

Oh, yeah, I fully expect the next LLM iteration will not experience this problem. For one thing, they’ll just feed a bunch of examples of people laughing at the stupid LLM’s mistake to them.

lotharloo @ #3 — I need to know: How do you know what you need in your training set? How do you know when the training set is complete, or even adequate? Is any failure always the result of a poor training set? What if there is no training set? I’m being told we don’t need no stupid training set.

What happens if you give the poor dumb AI a genuine paradox (e.g., the Spanish barber)?

I find it interesting that people focus so much on the artistic and functional aspects of LLMs; I guess it’s not a surprise because these are generally the more interesting questions. The real problem for these big LLMs though is the economic one. Even if you solve all the functional problems of LLMs and truly make them indistinguishable from human intelligence, ignoring the question as to whether this is possible, the fact of the matter is that it takes a stupidly huge amount of energy for this functionality. It is basically always cheaper to just hire more people to do the work than burn down another rainforest. Yes, machine learning is 100% useful in specific technical applications, especially when dealing with huge data sets. I imagine something like bioinformatics uses a lot of machine learning to good effect, but using it to replace general-purpose intellectual labor is massively cost inefficient. And I don’t think this is a solvable problem. I am not an expert, but from my understanding the only solution I really see whenever issues like the above come up is “MORE POWER! MORE DATA!!!” I am sure efficiency gains can be and are being made, but they don’t seem to in any way outstrip the raw economic cost. Like, why pay an LLM $1000 a customer to do customer support when you can abuse workers for $10 an hour?

Country music could save civilization.

I’m My Own Grandpa

I just heard a talk by a specialist in Content Management Systems (CMS) who was recapping a conference on AI and CMS he had attended. Among the many things in the talk he mentioned that a generative AI used by an airline had created a bereavement cancellation refund policy. He was probably referring to this: Air Canada must honor refund policy invented by airline’s chatbot. That didn’t cost Air Canada too much, under $1,000, but I can imagine an AI generating something that cost a company a lot more. I’m sure the legal and marketing department of the company where I work will be cautious about this.

Assuming Alice is a woman.

If you presume the original question is correct which is how most tests work, Alice is referred to as ‘she’. It is intelligent to assume she is a woman up to and until we determine that the test is fucky.

It’s almost crazy how primitive and old-fashioned ST: ToS seems when compared to 2001: ASO even though that film was made just a couple years after ST premiered.

I believe the ur-example was a 1951 story by Gordon R. Dickson called The Monkey Wrench.

Do we know what gender Alice identifies as? Without this information we don’t know if Alice is a sister, brother, or sibling of non-binary identity to any of the other siblings.

If they succeed in doing that, then they’ll have created an AI that’s smarter than 40% of the human population.

(I’m estimating by looking at the number of die-hard Trump supporters. Laughing at their stupid mistakes just causes them to double-down on those mistakes)

M + 1 assuming none are half-siblings, which could complicate it, but obviously the quoted AIs aren’t getting anywhere near that level of analysis.

On the other hand, here’s something interesting I saw the other day — a “reverse Turing test”, where the AIs come off rather well. The human is kind of an idiot:

@robro:

Depends on what you are doing. If you are doing stuff like support vector machines, there are bounds that can tell you how many samples you need based on the “complexity of what you are trying to learn”, which can be expressed mathematically. But most people who talk about “AI” and machine learning talk about neural nets, and they also mean these LLMs and stuff like that. For those, the answer is “nobody knows”. In neural nets you are also using a heuristic to find an optimal value which you might not be able to find at all, so people use all sorts of hacks and other tricks. And yes, you do need a training set.

One of the first things I tried on ChatGPT was to ask it to interpret a pair of ambiguous statements. “I saw Peter with my binoculars” could obviously be interpreted in two valid ways. “I saw Peter with my microscope” on the other hand either implies that I saw Peter carrying my microscope, or that Peter is extremely small. I guess opinions may vary on which one is more likely to be correct, but the AI firmly came down on the latter conclusion, with the justification that “microscopes are heavy”.

Teaching an AI to do a certain task or group of related tasks is one thing, and may be finite, but humans and I would say most biological organisms continue to learn within some parameters all the time. Shouldn’t it be the same for AIs?

One of my tests of whether an AI is an intelligence will be when it can make up and tell a joke on purpose.

@unclefrogy:

They probably can already do that. Making jokes is relatively easy, IMO. First, the scope of what humans find “funny” is so large that anything pretty much can be a joke. Also, a lot of jokes have the same structure and you can fill in the template with different words. Actually, I went to “weird words generator” that generates extremely obscure words and I asked chatGPT to make jokes about them. Here are some results:

They are very mildly funny, but also, to be fair, I don’t see how you can make reasonable jokes with this choice of words.

“Changeling” was a ST:TOS season two episode. According to wikipedia, it cost about $185,000 to make ($5000 less than a season one episode) and had likely about a week, maybe two, to produce in its entirety. The series was on the edge of cancellation throughout its time. “2001” cost $10,500,000 and principal filming was about 2 years long. (Filming on 2001 started in May 1965. ST:TOS started in Nov 1964 for the pilot “The Cage”.)

ST did a damn fine job.

Thing is, TOS had some professional SF authors writing for it.

Not Hollywood scriptwriters, but actual SF authors.

“Story consultants: Steven Carabatsos and D C Fontana. Writers for seasons one and two include Jerome Bixby, Robert Bloch, Coon, Max Ehrlich, Harlan Ellison, Fontana, David Gerrold, George Clayton Johnson, Richard Matheson, Roddenberry, Jerry Sohl, Norman Spinrad and Theodore Sturgeon; the only well known writer to work for season three was Bixby.”

(https://sf-encyclopedia.com/entry/star_trek)

It’s telling that the AIs do not spell out the appropriate reasoning.

1 Alice is given as female: a female name and the use of the pronoun she.

2 Being female, Alice is then a sister to her brothers.

3 The sisters of Alice are also, by definition, sisters to her brothers.

4 Any one brother must then have Alice as a sister as well as M additional sisters.

5 Conclusion: each brother of Alice has M+1 sisters.
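For anyone who wants to check those steps mechanically, here is a minimal Python sketch. It is only an illustration, not anything from the paper: the function name `sisters_of_a_brother` and the tuple layout are made up for this example, and it assumes Alice is female, that she has at least one brother, and that everyone is a full sibling.

```python
def sisters_of_a_brother(n_brothers: int, m_sisters: int) -> int:
    """Count the sisters of one of Alice's brothers by listing the siblings.

    Hypothetical helper for illustration. Assumes Alice is female and that
    all children are full siblings (no half-siblings).
    """
    if n_brothers < 1:
        raise ValueError("Alice needs at least one brother for the question to make sense")
    if m_sisters < 0:
        raise ValueError("the number of sisters cannot be negative")

    # Build the whole sibling group explicitly: Alice, her sisters, her brothers.
    siblings = [("Alice", "F")]
    siblings += [(f"sister_{i}", "F") for i in range(m_sisters)]
    siblings += [(f"brother_{i}", "M") for i in range(n_brothers)]

    # Pick any brother; by symmetry they all give the same count.
    brother = next(s for s in siblings if s[1] == "M")

    # His sisters are every female in the group other than himself,
    # which includes Alice as well as her M sisters.
    return sum(1 for s in siblings if s[1] == "F" and s is not brother)


if __name__ == "__main__":
    print(sisters_of_a_brother(3, 6))
```

The number of brothers never enters the count, which is the whole trick of the puzzle: N is a distractor, and the only thing to remember is to count Alice herself.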

DanDare, so, so easy to anthropomorphise.

There is no reasoning, that’s why.

Generative Pre-trained Transformer.

Feed in an input, get an output.

The “reasoning”, such as there is any, is in that pre-training and how tokens string together.

I mean, one could add to the input a request to show the reasoning at hand, and you’d get the “reasoning” at hand, just like you would get with any other input.

—

Back in the day, I coded a fairly shitty game of Brick Breaker in Basic + some assembly (around 1981ish), and me and a friend played it some. He swore that you could put “spin” on the ball by clicking as the paddle was moving. Of course, no such thing.

(Weird bit, his technique based on this actual misapprehension actually resulted in better scores, at times)

PS, https://en.wikipedia.org/wiki/Cyc could do that, legit.

So, we’re just going to assume most people answer this correctly?

Sorry, no. Citation needed.

Btw, when the LLMs get this right, they’re just copying the answer from what someone else wrote, or being lucky, not actually doing the math/logic.