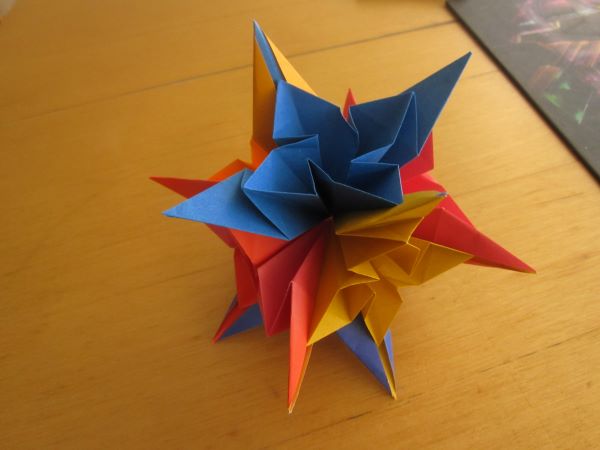

Ixora, designed by Meenakshi Mukerji

I’m saddened to hear that Meenakshi Mukerji recently died. I almost had an opportunity to meet her at a convention, but there was a pandemic and it never happened. I give her a lot of credit for getting me into modular origami, and many of my earliest models were from her books. I love her work. This is a simple model that I have folded many times; it can be found in *Ornamental Origami*.