Over the months, I’ve managed to accumulate a LOT of papers discussing p-values and their application. Rather than have them rot on my hard drive, I figured it was time for another index post.

Full disclosure: I’m not in favour of them. But I came to that by reading these papers, and seeing no effective counter-argument. So while this collection is biased against p-values, that’s no more a problem than a bias against the luminiferous aether or humour theory. And don’t worry, I’ll include a few defenders of p-values as well.

What’s a p-value?

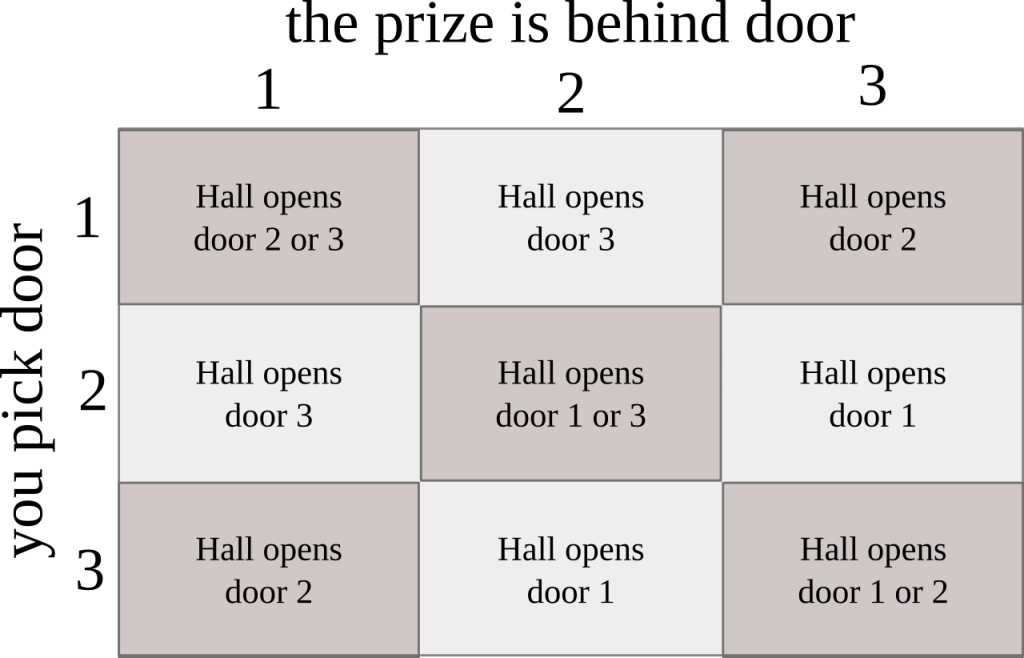

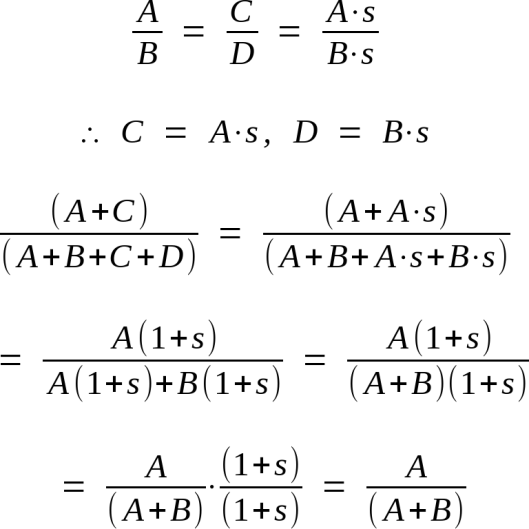

It’s frequently used in “null hypothesis significance testing,” or NHST to its friends. A null hypothesis is one you hope to refute, preferably a fairly established one that other people accept as true. That hypothesis will predict a range of observations, some more likely than others. A p-value is simply the probability of some observed event happening, plus the probability of all events more extreme, assuming the null hypothesis is true. You can then plug that value into the following logic:

- Event E, or an event more extreme, is unlikely to occur under the null hypothesis.

- Event E occurred.

- Ergo, the null hypothesis is false.
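To make the definition concrete, here is a minimal sketch in Python. The coin-flip scenario, the function name `binomial_p_value`, and the choice of a one-sided test are my own illustrative assumptions, not something taken from the papers below.

```python
from math import comb


def binomial_p_value(heads: int, flips: int, p_null: float = 0.5) -> float:
    """One-sided p-value: P(X >= heads) when X ~ Binomial(flips, p_null).

    This is the probability of the observed count plus the probability
    of every more extreme count, assuming the null hypothesis is true.
    """
    return sum(
        comb(flips, k) * p_null**k * (1 - p_null) ** (flips - k)
        for k in range(heads, flips + 1)
    )


# Hypothetical data: 16 heads in 20 flips of a supposedly fair coin.
p = binomial_p_value(16, 20)
print(f"p = {p:.4f}")  # p = 0.0059
```

Sixteen or more heads is rare under the fair-coin null, so NHST would reject it. Note what the number is not: it is not the probability that the coin is fair.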

They seem like a weird thing to get worked up about.

Significance testing is a cornerstone of modern science, and NHST is the most common form of it. A quick check of Google Scholar finds “p-value” about 3.8 million times, while its primary competitor, “Bayes Factor,” turns up about 250,000 times. At the same time, the p-value is poorly understood.

The P value is probably the most ubiquitous and at the same time, misunderstood, misinterpreted, and occasionally miscalculated index in all of biomedical research. In a recent survey of medical residents published in JAMA, 88% expressed fair to complete confidence in interpreting P values, yet only 62% of these could answer an elementary P-value interpretation question correctly. However, it is not just those statistics that testify to the difficulty in interpreting P values. In an exquisite irony, none of the answers offered for the P-value question was correct, as is explained later in this chapter.

Goodman, Steven. “A Dirty Dozen: Twelve P-Value Misconceptions.” In Seminars in Hematology, 45:135–40. Elsevier, 2008. http://www.sciencedirect.com/science/article/pii/S0037196308000620.

The consequence is an abundance of false positives in the scientific literature, leading to many failed replications and wasted resources.

Gotcha. So what do scientists think is wrong with them?

Well, th-

And make it quick, I don’t have a lot of time.

Right right, here are the top three papers I can recommend:

Null hypothesis significance testing (NHST) is arguably the most widely used approach to hypothesis evaluation among behavioral and social scientists. It is also very controversial. A major concern expressed by critics is that such testing is misunderstood by many of those who use it. Several other objections to its use have also been raised. In this article the author reviews and comments on the claimed misunderstandings as well as on other criticisms of the approach, and he notes arguments that have been advanced in support of NHST. Alternatives and supplements to NHST are considered, as are several related recommendations regarding the interpretation of experimental data. The concluding opinion is that NHST is easily misunderstood and misused but that when applied with good judgment it can be an effective aid to the interpretation of experimental data.

Nickerson, Raymond S. “Null Hypothesis Significance Testing: A Review of an Old and Continuing Controversy.” Psychological Methods 5, no. 2 (2000): 241.

After 4 decades of severe criticism, the ritual of null hypothesis significance testing (mechanical dichotomous decisions around a sacred .05 criterion) still persists. This article reviews the problems with this practice, including near universal misinterpretation of p as the probability that H₀ is false, the misinterpretation that its complement is the probability of successful replication, and the mistaken assumption that if one rejects H₀ one thereby affirms the theory that led to the test.

Cohen, Jacob. “The Earth Is Round (p < .05).” American Psychologist 49, no. 12 (1994): 997–1003. doi:10.1037/0003-066X.49.12.997.

This chapter examines eight of the most commonly voiced objections to reform of data analysis practices and shows each of them to be erroneous. The objections are: (a) Without significance tests we would not know whether a finding is real or just due to chance; (b) hypothesis testing would not be possible without significance tests; (c) the problem is not significance tests but failure to develop a tradition of replicating studies; (d) when studies have a large number of relationships, we need significance tests to identify those that are real and not just due to chance; (e) confidence intervals are themselves significance tests; (f) significance testing ensures objectivity in the interpretation of research data; (g) it is the misuse, not the use, of significance testing that is the problem; and (h) it is futile to reform data analysis methods, so why try?

Schmidt, Frank L., and J. E. Hunter. “Eight Common but False Objections to the Discontinuation of Significance Testing in the Analysis of Research Data.” What If There Were No Significance Tests, 1997, 37–64.

OK, I have a bit more time now. What else do you have?

Using a Bayesian significance test for a normal mean, James Berger and Thomas Sellke (1987, pp. 112–113) showed that for p values of .05, .01, and .001, respectively, the posterior probabilities of the null, Pr(H₀ | x), for n = 50 are .52, .22, and .034. For n = 100 the corresponding figures are .60, .27, and .045. Clearly these discrepancies between p and Pr(H₀ | x) are pronounced, and cast serious doubt on the use of p values as reasonable measures of evidence. In fact, Berger and Sellke (1987) demonstrated that data yielding a p value of .05 in testing a normal mean nevertheless resulted in a posterior probability of the null hypothesis of at least .30 for any objective (symmetric priors with equal prior weight given to H₀ and HA) prior distribution.

Hubbard, R., and R. M. Lindsay. “Why P Values Are Not a Useful Measure of Evidence in Statistical Significance Testing.” Theory & Psychology 18, no. 1 (February 1, 2008): 69–88. doi:10.1177/0959354307086923.
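Those posterior probabilities can be reproduced with a few lines of Python. This is a sketch under stated assumptions: a point null, equal prior weight on each hypothesis, and a normal prior under the alternative that is centred on the null value with the same variance as a single observation. That prior is my reading of the setup; I picked it because it gives back the quoted figures. The function name `posterior_null` is mine.

```python
from math import exp, sqrt
from statistics import NormalDist


def posterior_null(p_value: float, n: int, prior_null: float = 0.5) -> float:
    """Pr(H0 | x) for a two-sided test of a normal mean with known variance.

    Assumes theta ~ Normal(theta0, sigma^2) under the alternative, which
    gives the Bayes factor BF01 = sqrt(1 + n) * exp(-z^2/2 * n / (1 + n)).
    """
    z = NormalDist().inv_cdf(1 - p_value / 2)  # two-sided p -> |z|
    bf01 = sqrt(1 + n) * exp(-0.5 * z * z * n / (1 + n))
    posterior_odds = bf01 * prior_null / (1 - prior_null)
    return posterior_odds / (1 + posterior_odds)


for n in (50, 100):
    row = [round(posterior_null(p, n), 3) for p in (0.05, 0.01, 0.001)]
    print(f"n = {n}: {row}")
```

The output rounds to .52, .22, .034 for n = 50 and .60, .27, .045 for n = 100. A "significant" p = .05 leaves the null more likely than not under this prior.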

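Because p values dominate statistical analysis in psychology, it is important to ask what p says about replication. The answer to this question is “Surprisingly little.” In one simulation of 25 repetitions of a typical experiment, p varied from <.001 to .76, thus illustrating that p is a very unreliable measure. This article shows that, if an initial experiment results in two-tailed p = .05, there is an 80% chance the one-tailed p value from a replication will fall in the interval (.00008, .44), a 10% chance that p < .00008, and fully a 10% chance that p > .44. Remarkably, the interval, termed a p interval, is this wide however large the sample size. p is so unreliable and gives such dramatically vague information that it is a poor basis for inference.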

Cumming, Geoff. “Replication and p Intervals: p Values Predict the Future Only Vaguely, but Confidence Intervals Do Much Better.” Perspectives on Psychological Science 3, no. 4 (July 2008): 286–300. doi:10.1111/j.1745-6924.2008.00079.x.
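Here is a rough sketch of how a p interval of that width can arise. The key assumption, which I am stating loudly because it is mine and not quoted above, is that the z-score of a replication is normally distributed around the originally observed z with variance 2 (one unit for each experiment's sampling error). The function name `p_interval` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def p_interval(p_two_tailed: float, coverage: float = 0.80) -> tuple[float, float]:
    """Central interval for the one-tailed p-value of a replication."""
    z_obs = STD_NORMAL.inv_cdf(1 - p_two_tailed / 2)
    # Assumption: replication z ~ Normal(z_obs, sd = sqrt(2)).
    half_width = STD_NORMAL.inv_cdf(0.5 + coverage / 2) * sqrt(2)
    low_p = 1 - STD_NORMAL.cdf(z_obs + half_width)
    high_p = 1 - STD_NORMAL.cdf(z_obs - half_width)
    return low_p, high_p


low, high = p_interval(0.05)
print(f"80% p interval: ({low:.5f}, {high:.2f})")
```

Starting from p = .05, the upper end lands near the .44 in the abstract. Sample size never enters the calculation, which is why the interval stays this wide no matter how much data you collect.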

Simulations of repeated t-tests also illustrate the tendency of small samples to exaggerate effects. This can be shown by adding an additional dimension to the presentation of the data. It is clear how small samples are less likely to be sufficiently representative of the two tested populations to genuinely reflect the small but real difference between them. Those samples that are less representative may, by chance, result in a low P value. When a test has low power, a low P value will occur only when the sample drawn is relatively extreme. Drawing such a sample is unlikely, and such extreme values give an exaggerated impression of the difference between the original populations. This phenomenon, known as the ‘winner’s curse’, has been emphasized by others. If statistical power is augmented by taking more observations, the estimate of the difference between the populations becomes closer to, and centered on, the theoretical value of the effect size.

Halsey, Lewis G., Douglas Curran-Everett, Sarah L. Vowler, and Gordon B. Drummond. “The Fickle P Value Generates Irreproducible Results.” Nature Methods 12, no. 3 (March 2015): 179–85. doi:10.1038/nmeth.3288.
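The winner’s curse is easy to see in a toy simulation. This is a sketch, not the paper’s code: the true difference of 0.3, the group sizes, the known standard deviation of 1, and the use of a z-test in place of a t-test are all simplifying assumptions of mine, as is the function name.

```python
import random
from math import sqrt
from statistics import NormalDist, mean


def significant_estimates(true_diff, n_per_group, trials=2000, alpha=0.05, seed=1):
    """Return the estimated differences from the trials that reached p < alpha."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sqrt(2 / n_per_group)  # both groups have a known SD of 1
    kept = []
    for _ in range(trials):
        a = sum(rng.gauss(0.0, 1.0) for _ in range(n_per_group)) / n_per_group
        b = sum(rng.gauss(true_diff, 1.0) for _ in range(n_per_group)) / n_per_group
        diff = b - a
        if abs(diff) / se > z_crit:  # two-sided z-test
            kept.append(diff)
    return kept


TRUE_DIFF = 0.3  # hypothetical small but real effect
for n in (10, 200):
    kept = significant_estimates(TRUE_DIFF, n)
    avg = mean(abs(d) for d in kept)
    print(f"n = {n:3d}: {len(kept)} significant results, mean |estimate| = {avg:.2f}")
```

With 10 observations per group, only a small fraction of runs reach significance, and every one of them reports a difference several times larger than the true 0.3. With 200 per group, most runs are significant and the average estimate sits close to the real effect.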

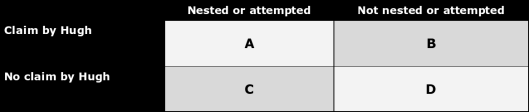

If you use p=0.05 to suggest that you have made a discovery, you will be wrong at least 30% of the time. If, as is often the case, experiments are underpowered, you will be wrong most of the time. This conclusion is demonstrated from several points of view. First, tree diagrams which show the close analogy with the screening test problem. Similar conclusions are drawn by repeated simulations of t-tests. These mimic what is done in real life, which makes the results more persuasive. The simulation method is used also to evaluate the extent to which effect sizes are over-estimated, especially in underpowered experiments. A script is supplied to allow the reader to do simulations themselves, with numbers appropriate for their own work. It is concluded that if you wish to keep your false discovery rate below 5%, you need to use a three-sigma rule, or to insist on p≤0.001. And never use the word ‘significant’.

Colquhoun, David. “An Investigation of the False Discovery Rate and the Misinterpretation of P-Values.” Royal Society Open Science 1, no. 3 (November 1, 2014): 140216. doi:10.1098/rsos.140216.
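The tree-diagram argument boils down to the same arithmetic as a screening test. In this sketch the 10% share of tested hypotheses that are actually true, and the two power values, are illustrative assumptions; plug in numbers appropriate for your own field.

```python
def false_discovery_rate(prior_real: float, power: float, alpha: float = 0.05) -> float:
    """Fraction of 'significant' results that are false positives."""
    false_positives = alpha * (1 - prior_real)  # nulls that pass the test anyway
    true_positives = power * prior_real  # real effects that get detected
    return false_positives / (false_positives + true_positives)


# Assumption: only 1 in 10 tested hypotheses describes a real effect.
print(f"well powered (0.8): {false_discovery_rate(0.1, 0.8):.2f}")  # 0.36
print(f"underpowered (0.2): {false_discovery_rate(0.1, 0.2):.2f}")  # 0.69
```

Even with respectable power, more than a third of the "discoveries" are false under these assumptions, and with low power the false positives outnumber the real effects.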

I was hoping for something more philosophical.

The idea that the P value can play both of these roles is based on a fallacy: that an event can be viewed simultaneously both from a long-run and a short-run perspective. In the long-run perspective, which is error-based and deductive, we group the observed result together with other outcomes that might have occurred in hypothetical repetitions of the experiment. In the “short run” perspective, which is evidential and inductive, we try to evaluate the meaning of the observed result from a single experiment. If we could combine these perspectives, it would mean that inductive ends (drawing scientific conclusions) could be served with purely deductive methods (objective probability calculations).

Goodman, Steven N. “Toward Evidence-Based Medical Statistics. 1: The P Value Fallacy.” Annals of Internal Medicine 130, no. 12 (1999): 995–1004.

Overemphasis on hypothesis testing–and the use of P values to dichotomise significant or non-significant results–has detracted from more useful approaches to interpreting study results, such as estimation and confidence intervals. In medical studies investigators are usually interested in determining the size of difference of a measured outcome between groups, rather than a simple indication of whether or not it is statistically significant. Confidence intervals present a range of values, on the basis of the sample data, in which the population value for such a difference may lie. Some methods of calculating confidence intervals for means and differences between means are given, with similar information for proportions. The paper also gives suggestions for graphical display. Confidence intervals, if appropriate to the type of study, should be used for major findings in both the main text of a paper and its abstract.

Gardner, Martin J., and Douglas G. Altman. “Confidence Intervals rather than P Values: Estimation rather than Hypothesis Testing.” BMJ 292, no. 6522 (1986): 746–50.
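For a sense of what reporting an estimate looks like in practice, here is a minimal sketch of a confidence interval for a difference between two means. It uses a large-sample normal approximation because Python’s standard library has no t-distribution quantiles; for small samples like the made-up ones below, a t-based interval would be wider. The data and the function name `diff_ci` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def diff_ci(group_a, group_b, level=0.95):
    """Normal-approximation CI for mean(group_b) - mean(group_a)."""
    diff = mean(group_b) - mean(group_a)
    se = sqrt(stdev(group_a) ** 2 / len(group_a) + stdev(group_b) ** 2 / len(group_b))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff - z * se, diff + z * se


# Made-up measurements for a control and a treatment group.
control = [1, 2, 3, 4, 5]
treated = [3, 4, 5, 6, 7]
low, high = diff_ci(control, treated)
print(f"difference = 2.00, 95% CI ({low:.2f}, {high:.2f})")
```

The interval reports both the size of the difference and how uncertain it is, which is more than a bare "p < .05" can tell you.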

What’s this “Neyman-Pearson” thing?

P-values were part of a method proposed by Ronald Fisher, as a means of assessing evidence. Even as the ink was barely dry on it, other people started poking holes in his work. Jerzy Neyman and Egon Pearson took some of Fisher’s ideas and came up with a new method, based on long-term prediction. Their method is superior, IMO, but rather than replacing Fisher’s approach it instead wound up being blended with it, ditching all the advantages to preserve the faults. This citation covers the historical background:

Huberty, Carl J. “Historical Origins of Statistical Testing Practices: The Treatment of Fisher versus Neyman-Pearson Views in Textbooks.” The Journal of Experimental Education 61, no. 4 (1993): 317–33.

The remainder help describe the differences between the two methods, and possible ways to “fix” their shortcomings.

The distinction between evidence (p’s) and error (α’s) is not trivial. Instead, it reflects the fundamental differences between Fisher’s ideas on significance testing and inductive inference, and Neyman-Pearson’s views on hypothesis testing and inductive behavior. The emphasis of the article is to expose this incompatibility, but we also briefly note a possible reconciliation.

Hubbard, Raymond, and M. J. Bayarri. “Confusion Over Measures of Evidence (p’s) Versus Errors (α’s) in Classical Statistical Testing.” The American Statistician 57, no. 3 (August 2003): 171–78. doi:10.1198/0003130031856.

The basic differences are these: Fisher attached an epistemic interpretation to a significant result, which referred to a particular experiment. Neyman rejected this view as inconsistent and attached a behavioral meaning to a significant result that did not refer to a particular experiment, but to repeated experiments. (Pearson found himself somewhere in between.)

Gigerenzer, Gerd. “The Superego, the Ego, and the Id in Statistical Reasoning.” A Handbook for Data Analysis in the Behavioral Sciences: Methodological Issues, 1993, 311–39.

This article presents a simple example designed to clarify many of the issues in these controversies. Along the way many of the fundamental ideas of testing from all three perspectives are illustrated. The conclusion is that Fisherian testing is not a competitor to Neyman-Pearson (NP) or Bayesian testing because it examines a different problem. As with Berger and Wolpert (1984), I conclude that Bayesian testing is preferable to NP testing as a procedure for deciding between alternative hypotheses.

Christensen, Ronald. “Testing Fisher, Neyman, Pearson, and Bayes.” The American Statistician 59, no. 2 (2005): 121–26.

C’mon, there aren’t any people defending the p-value?

Sure there are. They fall into two camps: “deniers,” a small group that insists there’s nothing wrong with p-values, and the much more common “fixers,” who propose making up for the shortcomings by augmenting NHST. Since a number of fixers have already been cited, I’ll just focus on the deniers here.

On the other hand, the propensity to misuse or misunderstand a tool should not necessarily lead us to prohibit its use. The theory of estimation is also often misunderstood. How many epidemiologists can explain the meaning of their 95% confidence interval? There are other simple concepts susceptible to fuzzy thinking. I once quizzed a class of epidemiology students and discovered that most had only a foggy notion of what is meant by the word “bias.” Should we then abandon all discussion of bias, and dumb down the field to the point where no subtleties need trouble us?

Weinberg, Clarice R. “It’s Time to Rehabilitate the P-Value.” Epidemiology 12, no. 3 (2001): 288–90.

The solution is simple and practiced quietly by many researchers—use P values descriptively, as one of many considerations to assess the meaning and value of epidemiologic research findings. We consider the full range of information provided by P values, from 0 to 1, recognizing that 0.04 and 0.06 are essentially the same, but that 0.20 and 0.80 are not. There are no discontinuities in the evidence at 0.05 or 0.01 or 0.001 and no good reason to dichotomize a continuous measure. We recognize that in the majority of reasonably large observational studies, systematic biases are of greater concern than random error as the leading obstacle to causal interpretation.

Savitz, David A. “Commentary: Reconciling Theory and Practice.” Epidemiology 24, no. 2 (March 2013): 212–14. doi:10.1097/EDE.0b013e318281e856.

The null hypothesis can be true because it is the hypothesis that errors are randomly distributed in data. Moreover, the null hypothesis is never used as a categorical proposition. Statistical significance means only that chance influences can be excluded as an explanation of data; it does not identify the nonchance factor responsible. The experimental conclusion is drawn with the inductive principle underlying the experimental design. A chain of deductive arguments gives rise to the theoretical conclusion via the experimental conclusion. The anomalous relationship between statistical significance and the effect size often used to criticize NHSTP is more apparent than real.

Hunter, John E. “Testing Significance Testing: A Flawed Defense.” Behavioral and Brain Sciences 21, no. 02 (April 1998): 204–204. doi:10.1017/S0140525X98331167.