A hot topic in psychology is the study of infants.

We had great difficulty finding a way to probe their minds. How do you communicate with something that cannot talk, after all? In the 1980s, scientists found a simple solution: if an infant is puzzled, they’ll stare at something longer than they would if they understood it.

For instance, let an infant watch as two dolls are placed behind a curtain, then pull the curtain away. Five-month-old infants will pay little attention if there are two dolls behind the drapes, but will take notice if one or three dolls are sitting there. This behaviour only makes sense if they have some basic counting skills built-in at birth.

Or, stage a puppet show instead. Have the puppets nicely pass a ball to each other for a while, only to have one steal the ball and run off-stage. Now set both puppets in front of the infant, each with a bowl of food in front of it, and encourage the child to take a treat. The vast majority of the time, infants will take from the bowl in front of the greedy puppet. Clearly, they wanted to punish this immoral muppet.[104]

But how can this be? Children that young don’t understand language, so they can’t have learned this from an adult. This sense of morality must be built-in. And who better to do the building-in than a god?

The earliest religions we know of seem to agree. Ma’at, the ancient Egyptian code of ethics, is a prime example.

Maat is right order in nature and society, as established by the act of creation, and hence means, according to the context, what is right, what is correct, law, order, justice and truth. This state of righteousness needs to be preserved or established, in great matters as in small. Maat is therefore not only right order but also the object of human activity. Maat is both the task which man sets himself and also, as righteousness, the promise and reward which await him in fulfilling it.

(Siegfried Morenz, “Egyptian Religion.” Cornell University Press, 1973. pg. 113)

The earliest written records of Ma’at date back to 2600 BCE. The Sumerians had a similar system involving “underworld judges” since at least 2900 BCE. This is as far back as we can reliably look, as these two civilizations were kind enough to write down their morals for us.

Moral Quandaries

Wait, wait, wait, we’re missing something here. Before we can properly discuss morality, we need to nail down what a moral is.

That seems simple enough, as we’re handed morals all the time via stories. In Shakespeare’s play “Macbeth,” Lady Macbeth is so devoted to her husband that she practically murders the king for him, thinking that because his becoming king had been prophesied, everything would turn out fine. It doesn’t; she kills herself out of guilt over the murder, and her husband’s head winds up on a pike. The moral of the story is: don’t kill people, even if it’ll get you a head.[105]

In part, then, a moral is a description of how to behave (or how not to behave) in a given situation. To be moral, or to be “good,” is to follow that description. But there’s another aspect to it as well: on the night of the murder, Macbeth sees a ghostly dagger, and others note strange behaviour from the animals and the weather. Back in the 1600s, that could only mean one thing: something bad or immoral was going to happen to the king, and the gods didn’t approve of it. Compare this with Macduff’s beheading of Macbeth near the end of the play; another king is killed, and yet this time nature doesn’t kick up a fuss, indirectly hinting that it approves. So morals aren’t simply rules you follow, but rules you should follow.

Should? According to whom, or what?

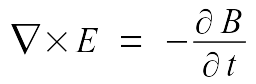

And in that word, we see exactly why the religious love bringing up morality. A moral must have some justification for it, presumably from something or someone greater than the entities that must live by that moral. I could declare that everyone should give me a portion of their earnings, because I’m just that awesome, but the justification for that moral dies along with me. There’s also a chance that I may be mistaken about my awesomeness, or inventing it in order to line my pockets. I could even change my mind! We need something more stable than an individual to anchor our morals to, and yet even human culture and society can change dramatically over time. An external, unchanging entity, such as a god, would be an ideal anchor.

There’s still one more axis to consider, though. During a royal banquet, the newly crowned Macbeth has his seat taken by the ghost of his former rival, Banquo. Never mind the action on stage; take a step back and ask yourself a more basic question: is this banquet moral?

That question seems terribly strange. Kings are expected to hold banquets from time to time, and are pretty much required to host one after their coronation. If someone has no choice in an action, how can morality enter into it?

Aha! We’ve finally clinched our definition: a moral is a description of how something should (or shouldn’t) behave in a certain situation, given multiple choices. Eating does not involve morality, since you have no choice in the matter. Your choice of what to eat is quite different, provided you have more than one option. Even then, if none of those options has been approved or disapproved in some fashion, then we’re not making a choice based on morality after all.

This definition opens up new possibilities, too. Suppose we were to walk into a deserted village, poking our heads through the empty doorways. We’re surprised to find that the interiors were kept remarkably clean while they were occupied, quite unlike every other village we’ve found from that time. We’ve got two-thirds of morality in place already: from how consistent this pattern is, we can be pretty confident there was a description of how to behave, and from this village’s neighbours we know they had multiple options. The only thing missing is a “should,” but again the consistency of this behaviour suggests the original occupiers of this village had one.

If you accept this, we can push back the first evidence of morality to the Çatalhöyük settlement in Turkey, which was occupied between 7500 and 5700 BCE.[106]

Can we go farther? Many archaeologists, including Klaus Schmidt, argue that a site in Turkey called Göbekli Tepe may be the first religious temple.

In the pits, standing stones, or pillars, are arranged in circles. Beyond, on the hillside, are four other rings of partially excavated pillars. Each ring has a roughly similar layout: in the center are two large stone T-shaped pillars encircled by slightly smaller stones facing inward. The tallest pillars tower 16 feet and, Schmidt says, weigh between seven and ten tons. As we walk among them, I see that some are blank, while others are elaborately carved: foxes, lions, scorpions and vultures abound, twisting and crawling on the pillars’ broad sides. […]

And partly because Schmidt has found no evidence that people permanently resided on the summit of Gobekli Tepe itself, he believes this was a place of worship on an unprecedented scale—humanity’s first “cathedral on a hill.”

(Andrew Curry, “Gobekli Tepe: The World’s First Temple?” Smithsonian magazine, November 2008.)

Again, we find hints of morality; almost all deities encourage their worship, implicitly approving of it, and yet we have the choice of not worshipping. If that place truly was a temple, then our evidence of morality begins at roughly 9500 BCE. To put that date in context, it’s only a thousand years after the last major ice age ended, right about when we learned how to farm, a thousand years before we invented numbers, six thousand before we discovered copper and writing, and eight thousand before Moses was given the 613 mitzvot[107] by YHWH, according to orthodox Judaism.

[104] “The Moral Life of Babies,” The New York Times Magazine, May 5th, 2010.

[105] Sorry. Oh, and while I have your attention: I’m going to be discussing key plot points for the next few paragraphs. Spoiler alert!

[106] http://www.catalhoyuk.com/library/goddess.html . Search for “clean.”

[107] Commandments of behaviour given to you by YHWH. Oddly enough, Christians recognize only ten commandments as absolute divine law. They do so despite sharing the same holy text as Jews, despite Jesus claiming that all of the old Jewish laws apply to Christians (Matthew 5:17-20), and despite Jesus himself explicitly naming only six (Matthew 19:16-19) or two (Matthew 22:37-40) commandments, all in violation of Jewish (Simon Glustrom, The Myth and Reality of Judaism, pp. 113–114) and Christian (James 2:9-12) tradition that every commandment is important. And even then, they ignore the one about dedicating your first-born to YHWH (Exodus 34:19-20). Go figure.