I drive a 2011 Honda Fit. It’s an ultra-reliable car, running without a hitch for 14 years now, not even a hiccup. The labels on some of the buttons on the dashboard are wearing off, but that’s the only flaw so far. I feel like this might well be the last car I ever own.

Except…the next generation of Hondas might tempt me to upgrade.

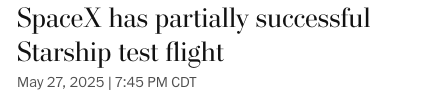

It’s a three-hour drive from my house to Minneapolis, and maybe a ballistic trajectory would make the trip quicker.

Also, not exploding is an important safety feature to me.