Every once in a while, I get some glib story from believers in the Singularity and transhumanism that all we have to do to upload a brain into a computer is make lots of really thin sections and reconstruct every single cell and every single connection, put that data into a machine with a sufficiently robust simulator that executes all the things that a living brain does, and presto! You’ve got a virtual simulation of the person! I’ve explained before how that overly trivializes and reduces the problem to an absurd degree, but guess what? Real scientists, not the ridiculous acolytes of Ray Kurzweil, have been working at this problem realistically. The results are interesting, but also reveal why this work has a long, long way to go.

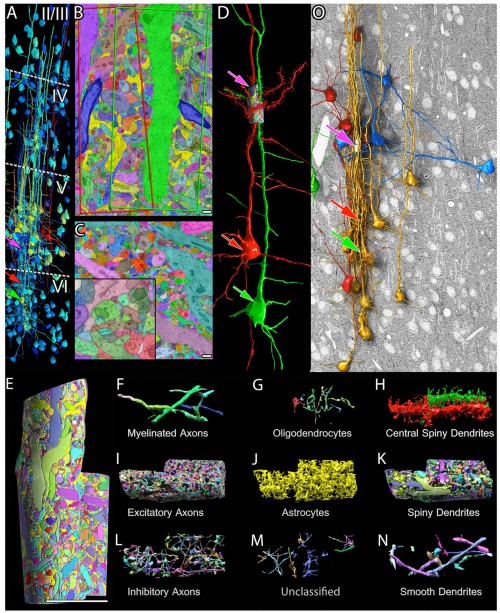

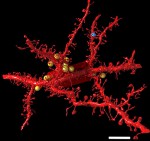

In a paper from Jeff Lichtman’s group, the many authors reported the results of taking many ultrathin sections of a tiny dot of tissue from mouse cortex, scanning them, and then making 3-D reconstructions. There was a time in my life when I was doing this sort of thing: long hours at the ultramicrotome, using glass knives to slice sequential sections from tissue embedded in an epoxy block, and then collecting them on delicate copper grids, a few at a time, to put on the electron microscope. One of the very cool things about this paper was reading about all the ways they automated this tedious process. It was impressive that they managed to get a complete record of 1500 µm³ of the brain, with a complete map of all the cells and synapses.

But hey, I don’t have to explain it: here’s a lovely video that leads you through the whole thing.

I have to squelch the fantasies of any Kurzweilians who are orgasming over this story. It was a colossal amount of work to map a very tiny fraction of a mouse brain, and it’s entirely a morphological study. This was a brain fixed with osmium tetroxide — it’s the same stuff I used to fix fish brains for EM 30 years ago. It’s a nasty toxin, but very effective at locking down membranes and proteins, saturating them with a high electron density material that is easily visualized with high contrast in the EM. But obviously, the chemistry of the tissue is radically changed, and there’s a lot of molecular-level information lost.

So the shape of the cellular processes is preserved, and we can see important features like vesicles and post-synaptic densities and connexins (although they didn’t find any gap junctions in this sample), but physiological and pharmacological information is only inferred.

But it’s beautiful! And being able to do a quantitative analysis of connectivity in a piece of the brain is fascinating.

(A) Cortical neuronal somata reconstruction to aid in cortical layer boundaries (dotted lines) based on cell number and size. Large neurons are labeled red; intermediate ones are labeled yellow; and small ones are labeled blue. The site of the saturated segmentation is in layer V (pink arrow). These two layer VI pyramidal cell somata (red and green arrows) give rise to the apical dendrites that form the core of the saturated cylinders. (B) A single section of the manually saturated reconstruction of the high-resolution data. The borders of the cylinders encompassing the “red” and “green” apical dendrites are outlined in this section as red and green quadrilaterals. This section runs through the center of the “green” apical dendrite. (C) A single section of a fully automated saturated reconstruction of the high-resolution data. Higher magnification view (lower left inset) shows 2D merge and split errors. (D) The two pyramidal cells (red and green arrows) whose apical dendrites lie in the centers of the saturated reconstructions. Dendritic spines reconstructed in the high-resolution image stack only.

It’s also because the authors have a realistic perspective on the magnitude of the problem.

Finally, given the many challenges we encountered and those that remain in doing saturated connectomics, we think it is fair to question whether the results justify the effort expended. What after all have we gained from all this high density reconstruction of such a small volume? In our view, aside from the realization that connectivity is not going to be easy to explain by looking at overlap of axons and dendrites (a central premise of the Human Brain Project), we think that this “omics” effort lays bare the magnitude of the problem confronting neuroscientists who seek to understand the brain. Although technologies, such as the ones described in this paper, seek to provide a more complete description of the complexity of a system, they do not necessarily make understanding the system any easier. Rather, this work challenges the notion that the only thing that stands in the way of fundamental mechanistic insights is lack of data. The numbers of different neurons interacting within each miniscule portion of the cortex is greater than the total number of different neurons in many behaving animals. Some may therefore read this work as a cautionary tale that the task is impossible. Our view is more sanguine; in the nascent field of connectomics there is no reason to stop doing it until the results are boring.

That’s beautiful, man.

Kasthuri N, Hayworth KJ, Berger DR, Schalek RL, Conchello JA, Knowles-Barley S, Lee D, Vázquez-Reina A, Kaynig V, Jones TR, Roberts M, Morgan JL, Tapia JC, Seung HS, Roncal WG, Vogelstein JT, Burns R, Sussman DL, Priebe CE, Pfister H, Lichtman JW. (2015) Saturated Reconstruction of a Volume of Neocortex. Cell 162(3):648-61. doi: 10.1016/j.cell.2015.06.054.

I just can’t get excited over the idea of a computer that thinks it’s me. I’d still be dead.

I agree that slicing up a brain is a ludicrous idea. But how about non-destructively observing each neuron, designing electronic components with the same input-output relationships and hooking them up in the same topology in the actual brain? Obviously you would start with very simple organisms and see if they reproduced any of the behavior of the real animal.

How do you propose to do that?

I predict that the caveats & qualifications stated by those researchers will be seized upon by some of the more scientifically literate members of the ghost-in-the-machine cult(s) as evidence that it can’t all be based on physics & chemistry, & there must be something transcendental/spiritual/whatever going on in human consciousness. Just as wrong as the upload-a-brain-to-a-computer cult, of course…

PZ, I’ll wait for the singularity and hope the AIs are clever enough to do it for me.

Or, somewhat more seriously, in the way these people are attempting to do it http://www.artificialbrains.com/openworm

Re: Aziraphale, #2, in addition to #3:

And how would you verify it worked? The “real behaviour” (in so far known) would be very much dependent on external stimuli, so even if you rebuilt the brain with relatively few errors, you’d still have to build a sensor network capable of recording the same stimuli in a comparable manner to connect to the sim. Otherwise even a realistic brainsim would probably fall over making who knows what of the data it’s picking up. And the same problem should also apply in reverse for any sort of controls the brain is supposed to have. Without that there’d be little behaviour to observe.

So with this approach I suspect you might end up spending even more time mimicking everything other than the brain before you get to test your setup. Or else spend a lot of time trying to unscramble everything or pray that the brainsim is smart enough to adapt to all the scrambled systems (possible, I suppose) and then still come up with behavioural patterns comparable to the original. Validation should be fun. Without it, you might just be able to say “it worked, interesting” or “didn’t work”, followed by a litany of possible causes without any idea which one might be the big issue.

No, you wouldn’t. Very simple organisms (i.e., the exceedingly vast majority of organisms) have only the one cell, so no neurons, synapses, etc.

Of course you mean very simple animals, and further the relatively simple animals from among those with a nervous system.

Here’s one. Rock on.

I love this bit best: there is no reason to stop doing it until the results are boring.

Wouldn’t extending life be vastly easier if we just kept the current brain alive for longer?

This is one of those times when the scientists desperately need the philosophers’ help. The very idea of scanning and reproducing a functional brain (no matter how) works perfectly (well, given a perfect scan), but only given a particular philosophical stance. From a mechanistic view, it works. From a contextualist view, you are looking at the wrong thing, and are nowhere near working.

Consciousness is something that, in practice, cannot be seen in a static slice; it *must* be seen across time, and across context, and may well necessarily depend on years or decades worth of that context. You cannot, for instance, see “plot” in one frame of a movie; you cannot see “personality” (or “consciousness” or “self” or any number of other such animals) in even the most detailed reconstruction of the static state of a brain.

I’ll stop here, before I write a comment 10 times longer than PZ’s post, but this is something that really irks me.

And you really don’t want to irk a cuttlefish.

Would it even be theoretically possible to interface a brain with a material/system/I dunno that can be influenced by it to the point of acting as new territory for patterning? In other words, give a brain new capacity while not fiddling around with the old? If it was expandable, one could, I suppose, continue to add new material on as the older died off, with no interruption of functioning.

I don’t know if something like this is even possible in theory, but it seems a hell of a lot more likely than trying to replicate something like that all at once.

You guys are harshing my Kurzweilian buzz!

I imagine it wouldn’t be much of a comfort to Singularitarians if it turns out that there’s no way to create a sufficient “map” for brain emulation without destructive methods of scanning it, although they’d still cling to hopes of “Ship of Theseus” style neuron and axon connection replacement. To be honest, I think we’re more likely to figure out some type of “medical immortality”/extended longevity for regular humans than to figure out how to emulate brains any time soon.

Oh well. I’m more interested in stuff that figures out how to send/receive stimuli in the human nervous system such that you can control input and output, rather than brain emulation. Imagine what that would mean for prosthetics, for example.

I agree with Cuttlefish.

Oh, you mean like this:

http://www.dailymail.co.uk/sciencetech/article-2879662/Watch-incredible-moment-double-amputee-uses-TWO-mind-controlled-prosthetic-arms-time.html

http://www.nytimes.com/2015/05/21/technology/a-bionic-approach-to-prosthetics-controlled-by-thought.html

http://www.rt.com/news/260657-mind-controlled-bionic-prosthetic/

I’ve often wondered if transhumanists grasp that the brain is not a closed system. Without a multitude of other body systems the brain is useless. Or will a computer just simulate a cardiopulmonary, renal, endocrine and every other system?

–I agree with Cuttlefish.–

Well, that’s only cos I’m right.

@#10 Brian Pansky–Yes, yes, a thousand times yes. Except, of course, no. Not just keeping the current *brain* alive, as the brain is necessary but not sufficient. So… yeah, tautology. The way to extend life is to keep organisms (or people, to narrow focus) alive longer.

The science of improving the world we actually have, as difficult as it may seem, is actually easier than the science of punting and hoping that future tech will save us. Or at least our brains. Which are independent of time and context, apparently, and can be uploaded. Or maybe cryogenically stored.

Yeah, no. Let’s make the world a better place. For all of us. It works, it can be done, and goddammit, it’s a better path than the fucking Singularity. And more, it works for more than just the people who can afford to upload their shit into some hard drive.

@19 Cuttlefish – I recall years ago reading a biting rebuke of Cryonic “life extension”, the crux of which was that the amount of tissue damage that would occur over the course of a few short years would, even at -124 C, render the “patient” hopeless to all but magic. I’m not qualified to speak for or against Cryonics, but I agree that preserving extant life is much more practical than reanimating the dead.

Cuttlefish @11: Have you heard of Giulio Tononi’s Integrated Information Theory? Haven’t done more than skim it myself yet, but it might be of interest.

From the abstract of the linked article:

The first thing that came to my mind is that complete physical simulation CANNOT be the easiest way to emulate a brain. It would be like trying to pirate software with an electron microscope and a box of Legos. Assuming that running a human mind on a computer is both possible and desirable, we’ll do it by actually understanding the brain and mind long before we have the means to brute-force it this way.

The second thing that came to mind is, why would we want a computer mind if it’s just going to faithfully copy every flaw and problem that our thinking has now? Is someone actually looking at humanity and deciding that the ideal future is “just like that, but faster”? Will my digital brain be taking digital prozac until the heat death of the universe? To put it mildly, I do not agree with this way of doing transhumanism.

@Robert B. @#23–

The box of legos is key.

The singularity people seem intent on ignoring the “embodied cognition” people; distributing the work among the various body parts (that is, the box of legos) is key; it matters tremendously what the legs are that the brain has to interact with. Not to mention (I mention) the heart, the lungs, the gut, the kidneys, the muscles, tendons, and all the other squishy stuff computers have a hard time with.

That box of legos could make a biped, a quadruped, or 6-8-10… or a 5-legged sea star… or some variant combining any of these.

Currently, my right leg is ailing me, and I might need a third leg (a cane) or fourth (crutches) or some manipulation of my current appendages (at minimum, some sort of brace). All of which would change my locomotion, and none of which would change my brain. Well, yeah, eventually my brain would change–as effect, not cause.

Or I could use that box of legos to construct an artificial leg.

Or just kill everybody, as we tentacled sorts are wont to do.

@ Cuttlefish: Yeah, I know, I took Philosophy of Mind in college and the thesis of my term paper was “An AI would have to be a robot,” as a response to the Chinese Room problem.

I was mentioning Legos as an example of a very inefficient medium in which to build, say, Adobe Photoshop. Similarly, I’m saying that a physical simulation of brain physiology is a very inefficient medium in which to build a mind. In theory all your objections about context and experience and embodiment and so on could be solved by adding more stuff to the simulation. In practice, that much technological power could have solved whatever problem you were trying to solve by brain uploading at least a hundred times, and then polished off weather control, economy design, and cancer for dessert.

@Rob Grigjanis

That paper looks like it’s doing what needs to be done in order to make a detailed theory of experience arising from mechanism, but unfortunately most of it reads like word salad to me :/

Also it has this part:

Is that their way of saying “no pun intended”?

Woops, I wrote Information Integration Theory when it should have been Integrated information theory.

It’s mostly computer nerds who assume the signalling in brains is nice and clean and predictable like it (sort of) is in computers.

I am sure that simulating a brain adequately would be insanely hard, but what about the parts of brain that assume/require inputs from parts of body? Would those be simulated too? Because, right now, I am aware that I have a bladder. And a stomach. And eyes. And skin. Would a brain in a box simulate all of those, too, or would I have “phantom skin” disorder and “phantom limb” pain on all of my limbs? And bowels? etc. The wish-fulfillment is strong in these people, but they clearly aren’t thinking things through. It’s not merely enough to simulate a brain, you have to simulate a reality that is compatible with that brain. We don’t have anything like a clue for how to do either of those and it may be that the simulated reality is a bigger problem.

This is completely outside of my areas of expertise, but it seems to me it would make a lot more sense to work on finding ways to either repair cells or replace them as they get too old to function properly. Mitosis already does that for most of our lifetimes, so it seems like it would be somewhat more plausible to find a way to continue that and eventually work on adding incremental changes to the new cells rather than going straight from a current brain to a computer.

That too is probably pretty far into speculation and science fiction, though, so I may just be missing something that would make that concept equally implausible.

Cuttlefish @11, you make some good points, but I’m not sure this is one of them. Presuming that a human being is a physical system, doesn’t it follow that if we could replicate its physical state at a particular time exactly the result would be an identical copy? Then it becomes a question of how much we need to replicate in order for it to be the same person. Of course a human being is the sum of his/her entire history, but that sum exists now only in the present.

If you took the computer you are using to read this message, sliced it very thin, and built a model, would it help you understand the program it was running if, in preparing the sections, you lost the ability to tell whether individual bits were “0” or “1” at the instant you froze the system? Of course such an activity would be a waste of time. The brain involves electrical activity moving along preferred pathways, which may change with time, so any model must reflect not just the connections but also the ease with which electrical activity will pass along them. But the sectioning method does nothing to record the electrical potentials at an instant when the brain was alive, or the “openness” of linked pathways to passing on the activity.

Even if the idea worked all you would have done is to model a tiny portion of an individual mouse’s brain – forgetting that every functioning brain will be “programmed” differently because it will have learnt about the environment through a different range of lifetime experiences.

I think the first step to actual mind uploading would be to build cellular prostheses, aka artificial neurons which can mimic the real ones well enough to eventually replace all of them in a natural brain. That would of course require insanely advanced nanotechnology and/or biotechnology. Then, if that works, you could try to add features to them in order to move the structure into a digital simulation, but at that point, would there still be a reason to actually do that?

Re #31, in addition to #32 (again..)

With the snapshot you’d also lose the direction in which the system (brain, body, …) was going at that instant. You might see, for example, the exact firing pattern of neurons at the very instant you took the snapshot, but you wouldn’t know if a given neuron was starting to fire or finishing. There’d be thousands of biological processes, which (I assume) are continuous rather than discrete. So looking at a cycle that has several similar states, you’d be unable to tell which of the similar states it is actually in, and so wouldn’t know which one comes next. You could guess for each process, but every wrong guess might well break your simulation.

My analogy for this would be a ball (brain) rolling down a slope (everything else: stimuli, body parts, …). You take a really detailed picture of the ball but nothing else. When you reconstruct the ball you can do so accurately. But without the slope, your simulation will have no idea in which direction it has to roll or if it should move at all. Likewise, your brainsim might just ‘sit there’ and collapse into the lowest energy state it can find (the ball deflates), rather than turning into a continuously running simulation – the ball rolling in the right direction at the right speed. It just doesn’t know where it should be going.

P.S.: Analogy 2: A picture of an aeroplane. The picture will give you no indication of what the plane is actually doing and how. If you magically pop a copy of the plane from that picture into reality it would just fall down because of the missing velocity, air flow across the wings, engine thrust, …

#32, #34

I’m coming to this from the point of view of a physicist. A complete specification of a physical system would classically include not only the position of every particle but also its momentum. In principle that determines what it’s going to do next. So it’s not like a snapshot of a ball, it’s like a snapshot that somehow encodes the ball’s velocity as well as its position.

Admittedly such a complete specification is very different from a crude slice-by-slice description, and one can reasonably doubt whether it will ever be possible.

If, instead, we were thinking of simulating each individual neuron in a brain, I don’t see why we couldn’t observe for long enough to know in what stage of its cycle each neuron was. And we are used to representing continuous processes in discrete forms, for instance encoding music or images digitally. Again I don’t know whether it will ever be practicable.

Blah! Blah! Blah!

Kurzweil is a kook who bilks his followers with very expensive seminars to promote what? Eternal life via machine brain interfaces? And by the way, if you consume all the myriad of supplements that I do, and pay the big bucks for my Singularity University, you too can live forever.

It’s all rubbish.

Cuttlecomment

“And you really don’t want to irk a cuttlefish.”

‘Cause if you do they’ll twerk at you!

Ewwwww!

… and possibly violate Heisenberg’s Uncertainty Principle in the process? ;)

Anyway, I have of course no idea how accurate these determinations would have to be to create a reasonable model. That’s where the discrete model may cause problems as well. By discretising all the values you have to introduce errors. How large those errors are depends on how much resolution (how many bits) you can afford to use for every piece of information.

Digitally processed images and music have all taken losses. I believe some audiophiles have very strong opinions on the topic of compression and file formats, and there are still people who prefer film over digital photography. It’s all a matter of how accurate you need (or want) to be.
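A rough illustration of the point about resolution, under simple assumptions: the snippet below uses a made-up test signal (a sine wave) and hypothetical helper names `quantize` and `max_error`, rounds each sample onto evenly spaced levels, and reports the worst-case rounding error for a few bit depths. It shows a general property of uniform quantization only, not anything about how a brain would actually have to be encoded.

```python
import math

def quantize(x, bits, lo=-1.0, hi=1.0):
    """Round x to the nearest of 2**bits evenly spaced levels in [lo, hi]."""
    levels = 2 ** bits - 1          # number of gaps between levels
    step = (hi - lo) / levels       # spacing between adjacent levels
    return lo + round((x - lo) / step) * step

def max_error(bits, samples=1000):
    """Largest absolute quantization error over one period of a sine wave."""
    worst = 0.0
    for i in range(samples):
        x = math.sin(2 * math.pi * i / samples)
        worst = max(worst, abs(x - quantize(x, bits)))
    return worst

# More bits means finer levels and a smaller worst-case error.
for bits in (4, 8, 12, 16):
    print(bits, max_error(bits))
```

Each extra bit roughly halves the spacing between levels, so the worst-case error shrinks by about half per bit, but for a continuous signal it does not vanish: you trade storage for accuracy, which is the trade-off described above.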

From your phrasing (“at a particular time”) I came to my “snapshot” which I took as an ‘infinitely short’ observation period. However, even if you use a ‘long’ observation period you’ll run into problems because you’re still missing essential data for your simulation. Namely all that external stuff. This might already be ‘encoded’ into all those particles at the moment they’re recorded. But once that moment passes you have no idea what all those externals are doing, what they might be feeding into that brain after you have finished collecting data.

Going back to my ball/slope analogy, a longer observation may tell you what the ball will do in the immediate future after you looked at it. Roll downhill at a particular speed, with an acceleration and a direction. But the slope is still missing, so you still can’t tell what the real ball will do in the long run. As soon as the slope changes or the ball hits a bump, the ballsim will no longer be predictive, or a realistic representation of what the real ball would do.

In the case of the brainscan this means you would end up with two very different people, if you got that far at all. That’s why context is so important. All the bits that attach to the brain, all the data that goes into it – the slope – must be there if you want the brain, as opposed to something that looks like a brain (if you’re lucky) and might briefly behave in an analogous fashion.

#38

Ah, Heisenberg! Yes, in view of quantum mechanics it may be that even a complete specification of the brain would not allow us to build a model that is guaranteed to be the same person in the future as the original is now.

But by the same token, the original himself is not guaranteed to be the same person in the future as he is now!

These are deep waters, and it’s clear that no-one should be planning to upload himself in this century, or even the next. Not to mention the well-known (in SF) issues – what happens if the upload is done twice? What human rights does the upload have?

@Cuttlefish: (11) Yes! Very well said! If you don’t object, I would like to copy your second paragraph & use it (with attribution, of course) in future explanations of my understanding of the nature of consciousness.

In two hundred years there will be three species remaining on planet Earth: humans, cockroaches, and algae. Among humans, there will be a slave class that eats the cockroaches and algae, and a master class that eats the slaves; and all of them will be Christian.

We need not worry about the Singularity.

–aziraphale@#31– “doesn’t it follow…”?

Thank you for illustrating my point! No, it does not follow! I mean, yes, under the philosophical assumptions of a mechanistic world view, it would follow. There, you can stop any system, and like a clockwork, examine the pieces to see what that system would be like at present, at ten minutes or six days in the past, or at some arbitrary time in the future. Everything about that system is contained in the clockwork; something that was manipulated at time A to have an effect at time B must necessarily be stored (in a clockwork, this might be a spring that is wound at time A and released at time B).

But… That is a requirement of the mechanistic model, not a requirement of the actual world. It is quite possible that a mechanistic model is accurate, but it is not *necessarily* true. If, say, one’s personality (or self, or consciousness, or whatever) is something we have inferred from observation over time (my previous example was the plot of a movie–something that must unfold over time), there is no *requirement* that it be stored and observable at any given moment. “Friendliness” is inferred from multiple observations of someone’s behavior; it is descriptive, not some causal spring driving the gears of behavior. We can manipulate environmental variables and change people’s behavior; this is trivially true. Logically, the causes of their behavior include the environment… but the mechanistic model requires those causes to be part of the person, so we infer some sort of mental (or brain state) representation of that environment. Your comment #31 presumes representation; again, it is possible that this is actually the case, but it is by no means necessarily so.

In my opinion, many of the problems of consciousness research (things like “the hard problem”) are the result of approaching it through a mechanistic philosophical stance. This stance has been exceedingly useful (especially so in physics, I should imagine), but it has seduced us into thinking it is the way the world works, instead of one of many possible models of how the world works.

Cuttlefish @#42:

I suppose I was proposing a mechanistic model. I don’t like the term – it sounds like clockwork. Are you proposing a dualistic model – that there is something present, over and above the material brain, body and environment, which constitutes or contributes to our minds? If so, what is that thing?

Of course in practice we infer “friendship” from behavior, not from details of the brain. Similarly a weatherman will often infer a storm from barometer readings, the shape and movement of clouds etc. But in neither case does that preclude that the ultimate causes are in the interaction of atoms. Including, of course, those interactions which we describe on the macro scale as previous acts of friendship.

aziraphale @31:

Shades of past discussions.

To replicate, you have to scan somehow. I’d be surprised if there was a way (even in principle) to do this without destroying the original. Would that be a bug, or a feature? :-)

If you are trying to model something and the model becomes very complex, you are probably asking the wrong question. If you are interested in the role of genes in evolution you don’t need to consider all the detailed chemistry underlying all the relevant processes involved in synthesising all the molecules involved. You only try to measure everything that might be involved if you don’t know what kind of model is involved. By examining a very, very small portion of a mouse brain in minute detail, the researchers have demonstrated that their approach is not a good way of trying to find out why humans are intelligent. It is perhaps best to go back to first principles and try a fundamentally different approach.

One should look to other disciplines to see how they tackled infinitely complex problems. Years ago physicists realised that there were far too many molecules in a flask to have a chance of measuring where every molecule was, which direction it was moving, and how fast it was going. In any case the Uncertainty Principle means that such knowledge is unachievable. They invented the idea of an “ideal gas” in an infinite container, and developed a model which can be used to predict how an ideal gas would behave. The value of the model is in discovering how real gases deviate from the ideal prediction. What is needed is a similar general model of how neurons store and process information – as this would help identify which areas of study are likely to be the most profitable.

I would suggest that mammalian brains are so complex, and the knowledge they contain varies so much from individual to individual, and with time over an individual animal’s lifetime, that a model which assumes that the brain contains an infinite number of identical, infinitely interconnected neurons is more realistic than any model which starts by trying to record every neuron and its numerous connections.

I am currently working on one such model, and on how it can store patterns and use them to make decisions. The starting point is mathematically so crude that many ten-year-old children could point out why it couldn’t possibly be relevant to human intelligence, and this “catastrophic weakness” may be why it has not been properly investigated. However, millions of years of evolution can result in surprising changes, and we know that, since the time of the dinosaurs, a small rodent-like mammal was able to morph into species as different as bats, whales and humans. Could such a dramatic morphing process be at work in the evolution of the human brain?

When I consider how my crude model might evolve, I find it can in theory display considerable logical capabilities. However, the amount an animal could learn in a lifetime puts a ceiling on the intelligence of animals without language. This ceiling can be breached because there is a major tipping point once language reaches a critical level, suggesting an explosion of perceived intelligence. The model also predicts the known weaknesses of the human brain: confirmation bias, unreliable long-term memory, and the tendency to follow charismatic leaders (possibly including imaginary Gods who make tempting promises to their followers) without question.

Re: aziraphale #39

Indeed, and this is where, at the latest, philosophy comes in, which is probably best left to cuttlefish. My very simplistic response would be that the original remains ‘himself’ because he has the complete system. Brain and body, all the inputs. The outside environment providing stimuli will have to change by necessity, but the hardware the brain uses to connect to that environment will (with exceptions) remain more or less the same. Besides, a baseline for comparison is needed so what else is there to use than the very thing you’re trying to copy?

If you were to stick your perfect copy of a brain into a perfect analogue of the hardware (perfectly connected) you might get pretty close. But that’s a lot of perfection to ask for and not very likely to happen.

Cuttlefish may ink me for this, but if we stick to the mechanistic we still have a host of problems. The biggest one, I think, is that we are terrible at predicting emergent properties (which Cuttlefish may have been thinking of). So if you wanted a really accurate model of a brain, you would have to observe it for an infinite amount of time, for all possible combinations of stimuli.* It’s impractical, to say the least, but pretty much the only way you could get literally all possible outcomes.

I suspect the brain is much too flexible and far too dependent on the outside to be modelled just with brain data. Not every rule regarding the brain’s response is hardcoded into the brain and there may not necessarily be a rule to govern every combination of inputs, so the snapshot of the brain would end up missing bits. Those bits would only arise out of the combination of stimuli fed through a combination of filters (i.e. the body). All of which constantly shift so that even if you tried you couldn’t derive enough rules to describe all of it. And whatever rules you made up might apply just once, when you first observed them, and never again.

*With the brain learning, you might also have to try every possible combination in every possible order. Which would mean an infinity^infinity set of observations, maybe? At last, a job with good security. Unless you miss the deadline.
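The footnote’s point about ordering can be made a little more concrete. Even with a finite, made-up catalogue of n distinct stimuli, the number of orders in which all of them can be presented is n!, which outruns any experimental schedule almost immediately. A minimal Python sketch; the stimulus counts are arbitrary illustrations, not estimates of anything a brain actually receives:

```python
import math

def orderings(n_stimuli: int) -> int:
    """Number of distinct orders in which n distinct stimuli can each be presented once."""
    return math.factorial(n_stimuli)

def ordered_sequences(n_stimuli: int, length: int) -> int:
    """Number of ordered sequences of `length` distinct stimuli drawn from n (no repeats)."""
    return math.perm(n_stimuli, length)

# Arbitrary catalogue sizes, chosen only to show how fast the count grows
for n in (5, 10, 20, 50):
    print(f"{n:>3} stimuli -> {orderings(n):.3e} orderings")
```

Allowing repeated stimuli, or sequences of unbounded length, only makes the count grow faster.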

—

P.S.: Where did #41 come from? So oddly specific. I get the algae – algae utilisation is a thing (to come) – but the rest…? And what happened to all those bacteria? Poor things, they deserved better.

aziraphale:

Of course, at least at a practical level we couldn’t beat the uncertainty principle (which doesn’t tell us much about reality, though), but it’s not obvious that what makes a person who they are depends on interactions at that scale or that level of detail. What counts as a “complete” specification of a physical system is also one of the things in dispute between different interpretations of quantum mechanics. You say it “may be” so, which I guess is appropriate, but there’s a whole lot underpinning that, which might lead you to think about it in various different ways.

Also, it sounds like you’re talking about having more than one of these, but any copy wouldn’t be the same person, in the sense of being that thing which has that perspective which generates that set of experiences. If they were identical at the relevant scales (don’t forget the quantum no cloning theorem, while we’re at it), there would be two identical people, not “the same person.”

If that happened to me without destroying me, there would be two CRs, each one having his own brain functioning in the same way at the moment the procedure is done, at which point their experiences immediately start to diverge. That’s because they can’t occupy the exact same chunk of spacetime, so, presumably, their environments won’t be identical for the rest of their lives either. Destroyed or not, we’re still talking about two different people (one of whom might be dead).

I don’t understand what that’s supposed to mean. Of course, the particles that I consist of are changing constantly, but there’s a degree of physical continuity from one time to the next. There are various “causal” interactions at work which tie together each little strand of my life, because what I am is not just a formal property that might be instantiated anywhere in the universe at any moment, regardless of what happened before. For instance, to make a really sharp dividing line, if you could destroy me and your friend could make an exact copy outside of my lightcone — which isn’t “the same” in the sense that those same particles are now at my spacelike-separated location, but which is “the same” in the sense that the person was formally identical in terms of positions/momenta — that would not suffice for it to be me. It’s not as if I, the person we started with, would experience myself in the place where we started and then my experiences would shift/move (perhaps instantly) to the new place where the copy is located. There would be some other person who exists over there, not me, who would have a set of memories, personality, etc., just like mine. Then it could be demonstrated to the copy that their thinking is radically deluded: they were created with those false memories of themselves existing in that other place, having a history they didn’t have, feeling/thinking ways they never felt/thought, and so forth.

What if it’s done once? I don’t see how you could avoid destroying the original. That means you die and somebody else begins to exist. Maybe that’s okay with the original, maybe not — but we can’t ask the copy what it wants, since it doesn’t exist yet. Since the second person (the copy) can feel harmed and be helped, they should be treated like any other person.

Cuttlefish:

I’ll be out of town most of the weekend, but I’ll put this out there while it’s on my mind…

I want to understand your complaints (or negative attitudes or apprehensions) about a “mechanistic world view,” which personally I’d just call reductionism. You might be saying two or more different things.

On the one hand, a physical system is in a particular state. You could call those the initial conditions. As time goes by, it’s in that state, lots of intermediate states, a final state, or whatever labels you want to attach to them. So, your complaint might be that you can’t know what the next state will be just by looking at the initial conditions, because you also need some dynamics. Physics has those too. Whether they’re deterministic or probabilistic, the idea is that you time-evolve the system using the dynamics to get to other states in the past or the future. If only we knew enough about the conditions and how the dynamics work, we would know how the physical universe does its thing. There aren’t any other things that violate those dynamics or which aren’t specified by the physical conditions, so any true thing you could say about a person’s brain or its functions (or society/history/economics, anything at all) reduces to physical statements about the fundamental conditions and dynamics. I would agree that looking at slices of a brain, as a more or less static object, doesn’t tell you how the thing works or that you know what’s going to happen next (or even the likelihood of many possible results).

However, you seem to have more general problems with reductionism. In that case, you’d be saying the conditions and dynamics still aren’t enough: not insufficient for us, but insufficient for the universe to do its job. The claim is that there’s some other thing not specified by any physical conditions, or that some things (physical or not) violate the dynamics in certain cases. That’s essentially what dualism says: there are souls, not just matter moving around, or a god occasionally performs miracles to change how the matter moves (or both).

Some people talk about emergentism this way, and I don’t understand in what sense anything “emerges” from fundamental physics, if it’s a violation of it or something that exists in addition to it. I would agree that practically and epistemically, we don’t know enough to do any such reduction for anything very significant at all. But that’s not a metaphysical statement about there being multiple different realms of existence or non-physical “forces” acting on those things. It’s just a statement that we can’t know and do enough to figure it out for ourselves, and in any case, fundamental physics doesn’t need to be our best way of approaching extremely complicated things like a person’s brain, or a society, an ecosystem, etc. We have other arts and sciences for studying those things, and even though all of it supervenes on physics somehow or another, the human activity of physics just isn’t going to be the most useful or efficient (or only) way to study most of that stuff.

I wish Heisenberg had named his principle something else, because people always misinterpret it. The widely-accepted models of QM do not say that the exact position and momentum of tiny particles are hidden or unmeasurable. QM says that what we think of as tiny particles are actually waves, and waves can’t have an exact position and momentum. You can have a wave with a single exact momentum, a sine wave, but then it’s spread out over all space and you can’t talk about its position. Or you can have a wave with a single exact position, a delta function, but to make that out of waves you have to combine every possible wavelength and you can’t talk about its momentum. Or you can have something in between, which is spread out over space and has a range or area as its “position”, and which has different parts moving in different ways and has a range as its “momentum.” But if you know the exact shape of something’s quantum wave function, and you know the same about its environment, you can model it to arbitrary precision.
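The wave picture can be checked numerically. The sketch below uses Python with NumPy, in units where ħ = 1 so the wavenumber k stands in for momentum. It builds Gaussian wave packets of different widths, Fourier-transforms them into their plane-wave components, and measures the spread in position and in k. Narrowing the packet in space widens it in k, and for a Gaussian the product of the two standard deviations stays at 1/2, the minimum allowed by the relation Δx·Δk ≥ 1/2. Grid size and widths are arbitrary choices for illustration.

```python
import numpy as np

def spreads(sigma_x: float, n: int = 4096, length: float = 100.0) -> tuple[float, float]:
    """Return (std in x, std in k) for a Gaussian wave packet whose |psi|^2 has std sigma_x."""
    x = np.linspace(-length / 2, length / 2, n, endpoint=False)
    dx = x[1] - x[0]
    # psi(x) chosen so that |psi|^2 is a normal distribution with standard deviation sigma_x
    psi = np.exp(-x**2 / (4 * sigma_x**2))
    prob_x = np.abs(psi) ** 2
    prob_x /= prob_x.sum()
    std_x = np.sqrt(np.sum(prob_x * x**2))  # mean position is 0 by symmetry

    # The discrete Fourier transform decomposes the packet into plane waves e^{ikx}
    phi = np.fft.fft(psi)
    k = 2 * np.pi * np.fft.fftfreq(n, d=dx)
    prob_k = np.abs(phi) ** 2
    prob_k /= prob_k.sum()
    std_k = np.sqrt(np.sum(prob_k * k**2))  # mean wavenumber is 0 as well
    return float(std_x), float(std_k)

for sigma in (0.5, 1.0, 2.0, 4.0):
    sx, sk = spreads(sigma)
    print(f"sigma_x={sx:.3f}  sigma_k={sk:.3f}  product={sx * sk:.4f}")
```

Non-Gaussian packets give a product larger than 1/2; the point is only that position spread and momentum spread trade off against each other as properties of the wave itself.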

The “uncertainty” comes from something else. First, the nature of this wave is such that predictions from it about future events can only be made statistically. It is a wave of probability, more or less. Also, and probably related, since these are the smallest things in the universe there’s not really anything we can poke them with to make a measurement that doesn’t change them drastically. A simulation-maker could easily live with the fact that very small particles can’t have well-defined position and momentum at the same time. Where they run into trouble is that there can be no such thing as a non-destructive test or even a one-look test of a quantum wave function. To see something’s quantum shape you not only have to break it, you have to break a statistically large number of copies of it. This is tricky when “it” is an individual brain.
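The point about needing many copies can be illustrated with a toy simulation. Assume, purely for illustration, a two-outcome system prepared so that outcome 1 occurs with probability 0.3. Any single measurement returns just 0 or 1; only by measuring many identically prepared copies does the estimate settle near 0.3, with an error that shrinks roughly like 1/√N. This is classical random sampling standing in for measurement outcomes; it does not simulate quantum mechanics itself.

```python
import random

def measure_copies(p_one: float, n_copies: int, rng: random.Random) -> float:
    """Measure n identically prepared copies once each; return the observed fraction of 1s.

    Each rng.random() draw stands in for one destructive measurement of a fresh copy.
    """
    ones = sum(1 for _ in range(n_copies) if rng.random() < p_one)
    return ones / n_copies

rng = random.Random(42)  # fixed seed so the run is reproducible
TRUE_P = 0.3             # hypothetical outcome probability, chosen only for illustration
for n in (1, 10, 100, 10_000, 1_000_000):
    est = measure_copies(TRUE_P, n, rng)
    print(f"{n:>9} copies -> estimated p = {est:.4f}")
```

With one copy the "estimate" can only be 0 or 1, which is the one-look problem in miniature.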

*facepalm* Italics tag should have been closed after “have.” And in the second-to-last sentence the word “it” should be cut the second time it appears.

Your interpretation says that. QM doesn’t say that.

I would hope it’s more than a probability, because saying I’m literally made of probabilities doesn’t make any sense. If it were less than that, I’m not sure if it’s better or worse because I can’t make sense of it in the first place. But if it were less, it does kind of seem like you’re saying there’s nothing physical at all.

Meh. QM isn’t in it. Whatever the relevant degrees of freedom are in the brain that encode things we care about, they’re stable in the face of thermal noise (and more radical disturbances, of course). If they weren’t, going to sleep would be morally equivalent to killing yourself.

“How do you propose to do that?”

Infect the brain with a genetically modified rabies virus – it travels across synapses, as far as I remember. Make sure that it mutates a little bit of its sequence each time it infects a new cell, leaving a trace of its progeny.

Then sequence everything and reconstruct the connectome from it.
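The proposal resembles lineage tracing with heritable barcodes. A toy Python model can show how the reconstruction would work, with everything about it hypothetical: the wiring diagram, the assumption that the virus adds exactly one recognisable mutation per newly infected cell, and perfect sequencing. It also exposes one built-in limitation: if each cell is infected only once, the recovered links form a tree along the routes the virus happened to take, not the full set of connections.

```python
import random
from collections import deque

def spread_virus(synapses: dict[int, list[int]], start: int,
                 rng: random.Random) -> dict[int, tuple[int, ...]]:
    """Spread a toy 'virus' over a wiring graph (hypothetical model).

    Each newly infected cell inherits its infector's barcode plus one fresh random mutation.
    """
    barcodes = {start: (rng.getrandbits(32),)}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        for partner in synapses.get(cell, []):
            if partner not in barcodes:  # a cell is only infected once
                barcodes[partner] = barcodes[cell] + (rng.getrandbits(32),)
                queue.append(partner)
    return barcodes

def reconstruct_edges(barcodes: dict[int, tuple[int, ...]]) -> set[tuple[int, int]]:
    """Recover infector -> infected pairs: a barcode's parent is itself minus the last mutation."""
    owner = {code: cell for cell, code in barcodes.items()}
    return {(owner[code[:-1]], cell) for cell, code in barcodes.items() if len(code) > 1}

# Hypothetical 5-cell wiring diagram (cell -> cells the virus can reach from it)
synapses = {0: [1, 2], 1: [2, 3], 2: [3], 3: [4], 4: [0]}
codes = spread_virus(synapses, start=0, rng=random.Random(1))
recovered = reconstruct_edges(codes)
true_edges = {(a, b) for a, targets in synapses.items() for b in targets}
print(sorted(recovered))
print(f"recovered {len(recovered)} of {len(true_edges)} connections")
```

On this toy graph the sketch recovers 4 of the 7 connections, so a real version would need some way for cells to record more than one infection event.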

aziraphale @#43–

Not a dualistic model–a functional contextualist model (at least, that’s the philosophical term). And no, I need nothing more than brain, body, & environment, although I would rather just say person and environment in interaction (de facto brain-body dualism is annoying). “Mind”, then, is less emergent property and more label for particular patterns of behavior (both public and private behavior, like thinking). Caused, rather than causal. Inferred from interactions with the environment (especially the social environment) which unfold over time.

Because we are labeling something that is extended in time, there is absolutely no requirement that that something be stored or represented in any given slice of that time (again, we cannot see “plot” in one frame of a movie).

Causation can happen because of mechanistic stuff, but also via selection (variation and selection by environment, both biological evolution and operant behavior); in both cases, things unfold over time (with, potentially, multiple environmental causes), though we may apply a single label to the unfolding processes. Again, the fact that we can assign a label in no way implies that there is an internal, causal representation at any given slice of time.

I’d have to object to the idea of creating or pursuing “singularity” (or whatever you want to call the “mechanization” of human consciousness) for reasons not mentioned yet, I think.

It’s an ‘Even if…’ sort of objection for me:

Even if all the technical problems were solved (laughable);

Even if the issue of what it means ‘to be’ was magically addressed to everyone’s liking (and I don’t think that will happen at any point between now and the sun expanding to engulf the world);

Even if one could exist in machine form, one would still have all the problems of entropy, decay, and, ultimately, the end. That end would be just as obliterating.

Long before coming to that end, one would be subject to all of the whims of the “living” (people not otherwise “uploaded”) as well as those of the fellow “dead” who, like you, would hypothetically continue having human experiences and interactions. Not all of those experiences would be positive or benign.

I think this ‘even if’ scenario underscores all of the problems of being that have been addressed already in that the Singularity is construed as some kind of “solution”, when in fact it is simply a deferral! And it isn’t just a deferral of death, but of living. That suggests a peculiar kind of ambiguous pathology, by my reckoning, in those who are most obsessed with the fantasy of living forever.

Cuttlefish@54, you seem to be saying that some aspect of personal identity worth caring about fails to satisfy the Markov property (and not just with respect to some lossy description of reality but with respect to reality itself). Do I have that right?

I confess I have difficulty imagining what that aspect of personal identity might be.
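For readers unfamiliar with the term: a process has the Markov property when the next state depends only on the current state, not on how the system got there. Whether a process looks Markov depends on what you count as "the state". A minimal Python illustration, using a deliberately simple deterministic rule chosen only for this example: with x(t+1) = x(t) + x(t−1), the current value alone does not fix the next one, but the pair (current, previous) does, so enlarging the state description restores the Markov property.

```python
def next_value(history: list[int]) -> int:
    """Hypothetical rule: the next value depends on the last two values, not just the last one."""
    return history[-1] + history[-2]

def step_pair(state: tuple[int, int]) -> tuple[int, int]:
    """The same rule written on an enlarged state (current, previous): now Markov."""
    current, previous = state
    return current + previous, current

# Two histories, both consistent with the rule, that end in the same current value 5
a, b = [2, 3, 5], [1, 4, 5]
print(next_value(a), next_value(b))  # same current value, different next values
# With the pair as the state, the next state is a function of the current state alone
print(step_pair((5, 3)), step_pair((5, 4)))
```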

Anyone curious about the level of complexity behind simulating the operations of all these neurons once you’ve mapped them and their connections could take a look at this online book from CUP:

Neuronal Dynamics online book

From single neurons to networks and models of cognition

Wulfram Gerstner, Werner M. Kistler, Richard Naud and Liam Paninski

tl;dr: Mapping the brain is just the first and smallest part of the work of doing a simulation.
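To give a flavour of what "simulation" means even at the simplest level, here is a minimal leaky integrate-and-fire neuron, one of the first models treated in that book, integrated with a plain forward Euler step. The parameter values (resting potential, threshold, membrane time constant, resistance, input currents) are illustrative assumptions, not measurements; real cortical neurons need much richer models, and a network needs one of these per cell plus a model for every synapse.

```python
def simulate_lif(i_input_na: float, t_ms: float = 200.0, dt_ms: float = 0.1) -> list[float]:
    """Leaky integrate-and-fire neuron: tau * dV/dt = -(V - V_rest) + R * I.

    Returns spike times in ms. All parameter values are illustrative assumptions.
    """
    v_rest, v_reset, v_threshold = -65.0, -65.0, -50.0  # mV
    tau_m = 10.0  # membrane time constant, ms
    r_m = 10.0    # membrane resistance, MOhm (MOhm * nA = mV)
    v = v_rest
    spikes: list[float] = []
    for step in range(int(round(t_ms / dt_ms))):
        dv = (-(v - v_rest) + r_m * i_input_na) / tau_m
        v += dv * dt_ms                       # forward Euler update
        if v >= v_threshold:                  # threshold crossing: record a spike, then reset
            spikes.append((step + 1) * dt_ms)
            v = v_reset
    return spikes

for current in (1.0, 2.0, 3.0):
    print(f"I = {current} nA -> {len(simulate_lif(current))} spikes in 200 ms")
```

With these numbers a 1 nA input settles 5 mV below threshold and never fires, while larger currents fire at increasing rates, which is about the limit of what this model can say.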

Cuttlefish:

But you’re talking as if that’s a feature (or a problem) of a “mechanistic” theory. Of course we can’t ignore the time dimension or otherwise dispense with dynamics, and I’ve never heard of any reductionists who say that we could/should/must do anything like that.

Now things are starting to go off the rails again. Natural selection, for example: that’s not “also” happening in addition to mechanistic stuff. It consists entirely of (highly-complicated) mechanistic stuff. I’m not sure what the talk of labels is supposed to be about, because I just mean what’s actually going on in the world.

Cuttlefish @#54:

Natural selection can be a purely mechanistic process. Robots with minimal initial programming have “learned” to walk, swim, etc., in ways that their designers didn’t predict. On one level of description they have learned something new. On a lower level they are, unarguably, nothing but machines.
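A minimal Python sketch of mechanical variation and selection, far simpler than those robot experiments: it is a (1+1) evolution strategy on a made-up target, not a model of any specific robot study. Candidates are mutated at random, and a mutant replaces its parent only if it scores at least as well. The loop contains no plan for reaching the target; the scoring function plays the role of the environment.

```python
import random

TARGET = [0.5, -0.2, 0.8]  # arbitrary illustrative "environment" optimum

def fitness(genome: list[float]) -> float:
    """Hypothetical environment: higher is better; the best possible score, 0, is at TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))

def evolve(generations: int, rng: random.Random, step: float = 0.1) -> tuple[list[float], float]:
    """(1+1) evolution strategy: random variation plus selection by the fitness function."""
    parent = [rng.uniform(-1, 1) for _ in TARGET]
    parent_score = fitness(parent)
    for _ in range(generations):
        child = [g + rng.gauss(0, step) for g in parent]  # variation
        child_score = fitness(child)
        if child_score >= parent_score:                   # selection
            parent, parent_score = child, child_score
    return parent, parent_score

best, score = evolve(2000, random.Random(7))
print(f"final score {score:.5f}, genome {[round(g, 3) for g in best]}")
```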

Amateur @#55: I personally would settle for a million years of extended life, even if the Sun were going to swallow the Earth in a few billion years’ time. Not that I’m going to get the chance.

That wasn’t really the substance of my critique. The desire and the obsession ignore what it means to be a body and be aware and feel; they ignore, too, the reasons people interact as bodies, for good or ill. Discussions of Singularity always seem to sidestep these in favour of paint-your-bicycle-shed esoterica.

We can discuss what it means to be machine-like and what it means to “be” for all of that million years, but the real issue is: Will someone just come around and unplug you right after you’ve been uploaded because you aren’t worth the system administration load? That is where the rubber meets the road, after all. The messiness of real life.

But, you can have those million years. At any rate, I suspect you’d seek an end to the monotony after five additional decades. There’s hardly enough irony to fill three score and ten, let alone five score — or 50,000 score!

Re: Aziraphale #59:

Not my area so I’m not familiar with examples, but it sounds like an argument against faithful brainsims. For one, if more complex systems really were fully predictable the designers shouldn’t have been surprised at all. They could either have said in advance what the robot would do or else at least laid odds – 20% it’ll do this, 50% that, rest the other thing – leaving no uncertainty.

Without knowing the details I might also speculate that this could be a demonstration of emergent properties as well, which would explain why the designers were unable to predict the behaviour of their own creations.

While the brain is a popular example for ’emergent properties’, these can crop up in virtually any system with a little complexity to it. Whether it’s biological, electrical, mechanical […] or a little bit of everything, unexpected behaviours can creep in at any time. As such they won’t be predictable from the basic rules of the system (e.g. component-based rules such as how logic gates, mechanical parts or an individual cell operate). To catch them in your brain simulation you’d have to draw very wide boundaries and still couldn’t be sure if you had everything to make a complete system.
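A standard toy example is Conway’s Game of Life. Its rules say only that a live cell survives with two or three live neighbours and a dead cell comes alive with exactly three; nothing in them mentions movement. Yet the five-cell "glider" below reappears every four generations shifted one cell diagonally. The glider is easy to discover by running the rules, but hard to see by reading them.

```python
from collections import Counter

def life_step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """One generation of Conway's Game of Life on an unbounded grid (live cells as (row, col))."""
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbours; survival with 2 or 3
    return {cell for cell, n in neighbour_counts.items() if n == 3 or (n == 2 and cell in live)}

glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
shifted = {(r + 1, c + 1) for r, c in glider}
print(state == shifted)  # the same shape, one row down and one column right
```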

Finally, what would really interest me is what would happen if you built a second robot of the same type and put it in the same environment / set it up with the same problem. Would it always arrive at the same solution? Or would duplicates come up with something else (or a variation on a theme)?

(Google is generous, but if you have specific examples in mind, links would be appreciated. The example I have now looked at, a small four-legged spider-like robot, unfortunately doesn’t make clear whether it arrives at the same solution every time or whether the group is using just that one example in public. There are several videos that can be found by googling ‘self-learning robot’. I’d rather not post links because I’m afraid the videos might be embedded instead.)

To catch them in your brain simulation you’d have to draw very wide boundaries and still couldn’t be sure if you had everything to make a complete system.

And, since our consciousness is tied to our bodies and senses, you’d find all kinds of weird new failure modes. Let’s imagine for a second (because I don’t think we know) that my “sense of time” passing is tied to my breathing. I’ve learned somehow that that’s how I count time passing; there’s some little ticker going “that’s probably been about a minute” and I can look and … sure enough: it’s about 2pm! What if another person learned how to do that based on their heartbeat? Or on the pressure in their bladder? In any case, if I were implemented as a “brain in a box”, that simulation would come unglued unless it had a simulation of ‘breathing’ (whatever that means) … Sure, it would be able to learn how to tell time’s passage another way, but then it wouldn’t be me anymore, it’d be something else. And that’s just time’s passage! I suspect there are significant proprioceptive feedback loops going all over the place, and they’d either need to be simulated, or edited out, or you’ve profoundly changed the simulation’s self.

Tl;dr: I am not me, if I can’t eat pizza.