When I was addressing this lunacy about how God exists because minds and mathematics are supernatural, I was also thinking about a related set of questions: biologically, how are numbers represented in the brain? How did this ability evolve? I knew there was some interesting work by Ramachandran on the representation of digits and numerical processing, coupled to his work on synesthesia (which is also about how we map abstract ideas on a biological substrate), but I was wondering how I can have a concept of something as abstract as a number — as I sit in my office, I can count the vertical slats in my window blinds, and see that there are 27 of them. How did I do that? Is there a register in my head that’s storing a tally as I counted them? Do I have a mental abacus that’s summing everything up?

And then I realized all the automatic associations with the number 27. It’s an odd number: where is that concept in my cortex? It’s 3³. It’s the atomic number of cobalt, the sum of the digits 2 and 7 is 9, the number of bones in the human hand, 2 times 7 is 14, 2⁷ is 128, my daughter’s age, 1927 was the year Philo Farnsworth first experimentally transmitted television pictures. It’s freakin’ weird if you think about it. 27 isn’t even a thing, even though we have a label and a symbol for it, and yet it’s all wrapped up in ideas and connections and causes sensations in my mind.
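The purely arithmetic associations in that list are easy to check mechanically. This is only a quick sketch; the dictionary keys are names made up for illustration, and the cultural associations (cobalt, hand bones, Farnsworth) are obviously not something arithmetic can verify.

```python
# Check the arithmetic associations with 27 mentioned above.
n = 27
digits = [int(d) for d in str(n)]  # [2, 7]

facts = {
    "is_odd": n % 2 == 1,                          # 27 is odd
    "is_three_cubed": 3 ** 3 == n,                 # 3 to the third power
    "digit_sum": sum(digits),                      # 2 + 7
    "digit_product": digits[0] * digits[1],        # 2 times 7
    "two_to_the_seventh": digits[0] ** digits[1],  # 2 to the seventh power
}

for name, value in facts.items():
    print(name, value)
```

None of this says anything about where those associations live in a cortex, of course; it only confirms that the numbers in the list hang together.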

And why do I have a representation of “27” in my head? It’s not as if this was ever useful to my distant ancestors — they didn’t need to understand that there were precisely 27 antelope over on that hillside, they just needed an awareness that there were many antelope, let’s go kill one and eat it. Or here are 27 mangoes; we don’t need to count them, we need to sort them by ripeness, or throw out the ones that are riddled with parasites. I don’t need a map of “27” to be able to survive. How did this ability evolve?

Really, I don’t take drugs, and I wasn’t sitting there stoned out of my head and contemplating 27. It’s a serious question. So I started searching the scientific literature, because that’s what one does. There has been a great deal of work done tangentially to the questions. Human babies can tell that 3 things is more than 2 things. An African Grey parrot has been taught to count. Neurons in the cortex have been speared with electrodes and found to respond to numbers of objects with differing degrees of activity. The problem with all that is that it doesn’t actually address the problem: I know we can count, I know there is brain activity involved, I can appreciate that being able to tell more from less is a useful ability, but none of it addresses the specific properties of this capacity called number. Worse, most of the literature seems muddled on the concept, and confuses a qualitative understanding of relative quantity for a precursor to advanced mathematics.

But then, after stumbling through papers that were rich on the details but vague on the concept, I found an excellent review by Rafael Núñez that brought a lot of clarity to the problem and summarized the ongoing debates. It also lays out explicitly what had been nagging me about all those other papers: they often leap from “here is a cool numerical capability of the brain” to “this capability is innate and evolved” without adequate justification.

Humans and other species have biologically endowed abilities for discriminating quantities. A widely accepted view sees such abilities as an evolved capacity specific for number and arithmetic. This view, however, is based on an implicit teleological rationale, builds on inaccurate conceptions of biological evolution, downplays human data from non-industrialized cultures, overinterprets results from trained animals, and is enabled by loose terminology that facilitates teleological argumentation. A distinction between quantical (e.g., quantity discrimination) and numerical (exact, symbolic) cognition is needed: quantical cognition provides biologically evolved preconditions for numerical cognition but it does not scale up to number and arithmetic, which require cultural mediation. The argument has implications for debates about the origins of other special capacities – geometry, music, art, and language.

The author also demonstrates that he actually understands some fundamental evolutionary principles, unlike the rather naive versions of evolution that I was recoiling from elsewhere (I’ll include an example later). He also recognizes the clear differences between estimating quantity and having a specific representation of number. He even coins a new word (sorta; it’s been used in other ways) to describe the prior ability, “quantical”.

Quantical: pertaining to quantity related cognition (e.g., subitizing) that is shared by many species and which provides BEPs for numerical cognition and arithmetic, but is itself not about number or arithmetic. Quantical processing seems to be about many sensorial dimensions other than number, and does not, by itself, scale up to produce number and arithmetic.

Oops. I have to unpack a few things there. Subitizing is the ability to immediately recognize a number without having to sequentially count the items; we can do this with a small number, typically around 4. Drop 3 coins on the floor, we can instantly subitize them and say “3!”. Drop 27, you’re going to have to scan through them and tally them up.

BEPs are biologically evolved preconditions.

Biologically evolved preconditions (BEPs): necessary conditions for the manifestation of a behavioral or cognitive ability which, although having evolved via natural selection, do not constitute precursors of such abilities (e.g., human balance mechanisms are BEPs for learning how to snowboard, but they are not precursors or proto-forms of it)

I think this is subtly different from an exaptation. Generally, but not necessarily, exaptations are novel properties that have a functional purpose that can be modified by evolution to have additional abilities; feathers for flight in birds are an exaptation of feathers for insulation in dinosaurs. Núñez is arguing that we have not evolved a native biological ability to do math, but that these BEPs are a kind of toolkit that can be extended cognitively and culturally to create math.

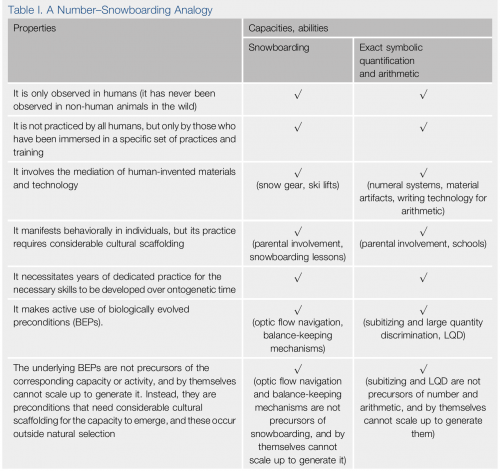

He mentions snowboarding as an example in that definition. No one is going to argue that snowboarding is an evolved ability because some people are really good at it, but for some reason we’re more willing to argue that the existence of good mathematicians means math has to be intrinsic. He carries this analogy forward; I found it useful to get a bigger picture of what he’s saying.

Other interesting data: numbers aren’t universal! If you look at non-industrialized cultures, some have limited numeral systems, sometimes only naming quantities in the subitizing range, and then modifying those with quantifiers equivalent to many. Comparing fMRIs of native English speakers carrying out a numerical task with native Chinese speakers (both groups having a thorough grasp of numbers) produces different results: “the neural circuits and brain regions that are recruited to sustain even the most fundamental aspects of exact symbolic number processing are crucially mediated by cultural factors, such as writing systems, educational organization, and enculturation.”

Núñez argues that many animal studies are over-interpreted. They’re difficult to do; it may require months of training in a testable task to get an experimental animal to respond in a measurable and specific way to a numerical task, so we’re actually looking at a plastic response to an environmental stimulus, which may be limited by the basic properties of the brain being tested, but isn’t actually there in an unconditioned animal. Such a result says that the ability is within the range of what the animal can do if it is specifically shaped by training, not that it is a built-in adaptation.

What we need is a more rigorous definition of what we mean by “number” and “numerical”, and he provides one.

Strangely, because this is one case where I agree with human exceptionalism, he argues that the last point is a signature of Homo sapiens, but…that it is not hard-coded into us, and that it may also be possible to teach non-humans how to do it. I have to add that all of those properties are hard-coded into computers, although they currently lack conscious awareness or intent, so being able to process numbers is not sufficient for intelligence, and an absence of the cultural substrate to enable numerical processing also does not imply a lack of intelligence.

The paper doesn’t exactly answer all of my questions, but at least it provides a clearer framework for thinking about them.

Up above, I said I’d give an example of bad evolutionary thinking from elsewhere in the literature. Conveniently, the journal Trends in Cognitive Sciences provides one: they link to a rebuttal by Andreas Nieder. It’s terrible and rather embarrassing. It’s not often that I get flashed by a naked Panglossian like this:

Our brain has been shaped by selection pressures during evolution. Therefore, its key faculties – in no way as trivial as snowboarding – are also products of evolution; by applying numbers in science and technology, we change the face of the earth and influence the course of evolution itself. The faculty for symbolic number cannot be conceived to simply ‘lie outside of natural selection’. **The functional manifestations of the brain need to be adaptive because they determine whether its carrier survives to pass on its genes.** Over generations, this modifies the genetic makeup of a population, and this also changes the basic building plan of the brains and in turn cognitive capabilities of the individuals of a population. The driving forces of evolution are variation and natural selection of genetically heritable information. This means that existing traits are replaced by new, derived traits. Traits may also shift their function when the original function becomes less important, a concept termed ‘exaptation’. In the number domain, existing brain components – originally developed to serve nonverbal quantity representations – may be used for the new purpose of number processing.

I don’t think snowboarding is trivial at all — there are a lot of cognitive and sensory and motor activities involved — but just focus on the part I put in bold. It’s absurd. It’s claiming that if you find any functional property of the brain at all, it had to have had an adaptive benefit and have led to enhanced reproduction. So, apparently, the ability to play Dungeons & Dragons was shaped by natural selection. Migraines are adaptive. The ability to watch Fox News is adaptive. This really is blatant ultra-adaptationism.

He also claims that “it has been recognized that numerical cognition, both nonsymbolically and symbolically, is rooted in our biological heritage as a product of evolution”. OK, I’ll take a look at that. He cites one of his own papers, “The neuronal code for number”, so I read that, too.

It’s a good paper, much better than the garbage he wrote in the rebuttal. It’s largely about “number neurons”, individual cells in the cortex that respond in a roughly quantitative way to visual presentations of number. You show a monkey a field with three dots in it, no matter what the size of the dots or their pattern, and you can find a neuron that responds maximally to that number. I can believe it, and I also think it’s an important early step in working out the underlying network behind number perception.

What it’s not is an evolutionary study, except in the sense that he has a strong preconception that if something exists in the brain, it had to have been produced by selection. All he’s doing in that sentence is affirming the consequent. It also does not address the explanation brought up by Núñez, that these are learned responses. With sufficiently detailed probing, you might be able to find a small network of neurons in my head that encode my wife’s phone number. That does not imply that I have a hard-wired faculty for remembering phone numbers, or even that one specific number, that was honed by generations of my ancestors foraging for 10-digit codes on the African savannah.

Nieder has done some valuable work, but Núñez is right — he’s overinterpreting it when he claims this is evidence that we have a native, evolved ability to comprehend numbers.

Núñez RE (2017) Is There Really an Evolved Capacity for Number? Trends in Cognitive Sciences 21(6):409–424.

Nieder A (2017) Number Faculty Is Rooted in Our Biological Heritage. Trends in Cognitive Sciences 21(6):403–404.

Nieder A (2016) The neuronal code for number. Nat Rev Neurosci 17(6):366–382.

That’s remarkable! “An accident with a contraceptive and a time machine”?

She didn’t acquire that maturity by accident, you know.

This may be naive, but is it possible that abstractions are the *only* thing the brain knows and we are looking at the problem from the wrong angle? It’s not that we figured out a way to reinterpret the real world in abstract concepts, but that we figured out how to deduce the real world from the impressions it makes on our abstract concepts.

https://www.amazon.com/Number-Sense-Creates-Mathematics-Revised/dp/0199753873/ref=sr_1_1?ie=UTF8&qid=1495298986&sr=8-1&keywords=the+number+sense

Admitting that there are deep thinkers out there – good ones, e.g. Max Tegmark – who do in fact believe in forms of Platonic idealisms, I’m pretty sure numbers are all of a piece of our other forms of phenomenal representation.

The universe has vast consistency and we are made of universe stuff. Our brains have largish consistency. And when our brains evolved to need representations of themselves in relation to the world (which representation is our “us”, ourselves) the consistency of such was part of the representation. When such representations achieved Turing Machine status, the capacity to represent such consistency as a thing apart from any instances of it became useful. Such are numbers, abstracted consistency apart from the things they are nominally attached to.

But out in the real world, they don’t exist. There is no “42” out there to be the answer to life, the universe and everything. There’s only l/tu/e and 42 is an abstraction that exists in our heads. Easy enough to generate in terms that any similarly abstracting entity will share … but it’s entirely dependent upon such entity having a similar abstracting schema to recognize our presumptive meaning of 42.

So numbers are strong attractors for Turing machines (which are themselves abstractions based upon our means of making abstractions) but there’s no guarantee, nor any particular reason to believe – and strong reason to believe otherwise – that they exist outside of abstracting entities and their representations.

— TWZ

I am reminded of the book “Babel-17” and how it identifies language as mediating our use of BEPs

Wow… I had no idea that you could get that philosophical, PZ! :-)

In my opinion, once someone has come up with a reasonable model of how the abstract concept of numbers and counting took root in the human mind, they can then rely on the general capability of the human brain to create, update and interlink categories in order to explain how all kinds of such associations pop into the mind.

I think there’s a sense in which it’s reasonable to say that a few numbers… 0, 1, e, and pi (or, pedantically, the quantities those labels correspond to) off the top of my head… “really exist.”

I’m very interested in the concept of cultural evolution – the idea that a bunch of our mental traits and abilities evolved after we began living in civilized societies, when our intensely warlike and competitive nature provided far more selection pressure than the old-fashioned “don’t get eaten by predators” pressure from mere biological evolution.

PZ is right that knowing what “27” means doesn’t do us much good as hunter-gatherers hunting antelopes. But what about if we’re living in a city-state, warring frequently with an opposing city-state for land and resources? If our war chief can say “I want to divide my army into five groups of four hundred soldiers each,” that will give us a tactical advantage if our enemies are using the “You guys go there and you other guys go over there” method of troop allocation.

Also, once we start developing mathematical concepts like multiplication and division, we can start having efficient and fair taxation. Taxation is necessary for a strong central government, and I suspect a strong central government would give our culture a huge advantage in spreading and conquering. So in that sense, yes, mathematics is a survival trait – because if we don’t have it, we’re more likely to be conquered and killed or enslaved by someone else who does.

I guess the vibe I’m getting from PZ’s sources is “something can be developed culturally, or be a product of evolution, but not both,” which I think is wrong. To me, the biggest problem with evo-psych is their over-focus on explaining behavior by pre-civilization evolutionary pressure on biological development of the brain, rather than explaining it by post-civilization pressure of competition for resources between cultural units in a constant state of war. I guess it’s the “Civ V” theory of evolution.

No mention of crows. disappointment. I thought I saw recently a “shocking” observation of a crow in an “intelligence” experiment doing something totally unanticipated by the experimental setup. Something about not only comparing two quantities of stacksBible chapter Revelations. That back then a “dozen” was often used synonymously for “many”, so a “dozen dozen” was used poetically to mean “a whole lotta …” (aka “more than you can count”).

shucks, just a side note. sharing a tidbit rattling around in this empty space where my brain usually is. (where’d it go…) ?

Or as the genius 2nd grader would say, “One, two, skip a few.”

re @10

oops middle section of that note vanished. let it fly, pointless to retrieve it. sorry for the mysterious note.

Our ancient ancestors learned to thrive by cooperation and sharing. Sharing begets quantification. Quantification begets numbers. That your mind can visualize numbers is no stretch. Scratch a line in the sand; hmmm, looks like a Roman Numeral one. Store that vision.

It’s not just about the eyes and brain abstractions. At the beginning, counting was likely a physical act using the fingers. That’s a fairly common starting place for children. Beyond simply counting to ten, it’s fairly easy to count up to fifty using two hands without having a name for every number. This is a useful skill if you need to know how many sheep you have to eat or need to give to the local potentate to keep your head on.

Math is the Devil’s work and numbers are hellish spawn from the bottom of the Tartarus.

I’m sure that is somewhere in the Bible.

A bit of intellectual lineage: Rafael Núñez was a student of George Lakoff, and you can see the influence of Lakoff in his ideas. (In fact, they wrote a book together–Where Mathematics Comes From–which I assume he’s drawing from here (I confess I haven’t read it).)

What I find fascinating are all of the precursors needed to have an idea of numbers such as pi–that observed reality can be divided into discrete objects, that those objects can be grouped together based on some salient characteristic(s), that those groups can be fluid (I have three apples, two oranges, and a tricycle, or I have five pieces of fruit and a toy, or I have six things, or I have two oranges and three non-oranges, or I have a macintosh, a winesap, and a fuji), that things that we normally perceive of as continuous can be divided into discrete units, such as centimeters, and that when we use those units to measure seemingly unrelated things we are describing a shared property. All that before we even get into division and ratios.

One thing that causes many of the problems in trying to comprehend *anything* about cognition and the neural processing behind it, whether it’s abstract thought processes or even the simple mathematics of counting seven coins, is that we truly have no idea how our brain functions.

Oh, we know neural processing in general, but the interactions between clusters of neurons, how signals are switched and processed into concepts and abstractions, how thought processes are actually conducted at the level of cellular clusters, and which cluster performs which particular processing are still not known. Functional MRI has contributed greatly, but we are still confounded by things like this case:

http://thelancet.com/journals/lancet/article/PIIS0140-6736(07)61127-1/fulltext

How can a person be a white collar worker, socially active and living an overall normal life, when so much of his brain has been obliterated? Per everything that we thought we knew, the man should have been in a persistent vegetative state at best.

We’re still learning how the brain operates, but we’re still quite far from understanding how processing occurs. Learning how the number seven is processed, both in contextual terms and as a discrete number, may well help to answer the question of how someone who is missing most of his thalamus manages to work as a civil servant, raise a family, and thrive.

For those who are confounded by the medical article, a more popular science version:

http://www.iflscience.com/brain/man-missing-most-of-his-brain-challenges-everything-we-thought-we-knew-about-consciousness/

I don’t think that’s exceptionally mysterious. The brain is a pattern recognition engine, when left to roam, it’ll seek all manner of similar and related patterns. That’d be handy for tiny hairless apes, with no exceptional running, jumping or hiding capabilities and exceptionally blunt, short teeth, as predators would be more easily recognized.

An example: I have always been able to tell a camouflaged man from the environment, despite the skill of the camouflaging. I first see a pair of eyes, then resolve the rest incrementally. I posit that that may be an evolutionary holdover from when we had a very high level of concern over predators wanting to make a quick lunch of our bodies. Noticing eye direction and fixation would also be phenomenally handy for social interaction, just as it is today. One just *knows* when someone is staring at oneself, despite how many other people may be in the room!

Perhaps not the 27 mangoes, but the 27 people in one’s group: noticing that one is suddenly missing, and the concern about predators that follows from it, would be rather important. That’d be important to herd animals, pack hunters and the mixture of the two, groups of humans.

A mother duck knows how many ducklings she is escorting. That can also give us a hint, as the physical size and hence, the number of neurons present in a bird brain isn’t exceptionally great. When one accounts for how many would be responsible for walking, swimming (OK, that’s adapted walking) and flying, a tantalizing hint may be present!

Just incidentally, there have been “idiot savants” (probably not the best term to use these days, but that’s what they were called at the time) who had the ability to subitize up to much higher quantities. (I sort of think one of them was able to go up into the hundreds, but I don’t have the reference in front of me.)

@#8, Azkyroth, B*Cos[F(u)]==Y

The problem with that is that if you even admit that the number 1 has some form of real-ish existence, then it is possible to construct all the others. (In fact, as soon as you admit any quantity has meaning, pretty much all of mathematics “exists”, because as soon as you say “ah, pi exists”, for example, then you have to admit that the unit 1 exists, because if you have 3.14159265… of something, then it implies that there is a unit of that something.)

Frankly, it’s much more likely that the universe is an approximation of mathematics, on a “deep” level, than that mathematics is an abstraction of the universe, as some anthropologists and biologists seem to want to claim.

The rules of the somewhat-tedious “game of life” imply every single possible state of every single possible arrangement of the grid, whether anyone diagrams them out or not, and the rules map one iteration to the next. These states are not made more “special” just because somebody sits down and doodles them out on a sheet of paper (or a computer, or whatever) — even if the rules include randomness, it merely adds possible connections between states, it does not mean that there is no map of states.

In fact, any set of mathematical rules operating on a collection of things implies a set of possible states, which do not become “more real” for having been drawn (or written, etc.). Therefore, if there just happened to be a set of mathematical rules which corresponded to a complete set of the laws of physics in play in our universe, then those rules would imply every possible state of the universe, and the connections between them. There is no need for anything “outside” to think about this to make them “more real” — and, obviously, these states would also include humans, who would be unable to tell whether they were in such a metaphysical construction or not…
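The claim that fixed rules map every state to exactly one successor, whether or not anyone draws it, can be made concrete. Here is a minimal sketch, assuming the commenter means Conway's Game of Life with its standard rules (a dead cell with exactly three live neighbours is born, a live cell with two or three survives). The `step` function and the `blinker` pattern are names chosen for illustration.

```python
from collections import Counter


def step(live: frozenset) -> frozenset:
    """Apply Conway's standard rules once to a set of live (x, y) cells."""
    # Count, for every cell adjacent to a live cell, how many live neighbours it has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return frozenset(
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    )


# A horizontal row of three cells (the "blinker") flips to a vertical
# column and back again, every time, with no one needing to watch.
blinker = frozenset({(0, 1), (1, 1), (2, 1)})
next_state = step(blinker)
print(sorted(next_state))
print(step(next_state) == blinker)
```

Because `step` is a pure function of the grid, the successor of any state is fixed by the rules alone, which is the sense of "implied" the comment relies on. Whether that settles anything about what is "real" is a separate argument.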

Hmm, PZ’s modern notion of 27 is very different from a Roman’s notion of 27, or a Babylonian’s notion of 27. It’s more a cultural artifact than anything related to neurons that respond to small quantities: my fifteen year-old knows it’s 3^3, a fact not known to my 12 year-old. My notion of 1001 is probably a bit richer than your average person’s.

Given some old civilizations had the decency to write things on clay tablets, we have a record that shows it took hundreds of years for some societies to develop the notion of ’27’ as distinct from ’27 barrels of oil.’ And that was after they figured out things like ‘5 fish tokens’ equals ‘5 fish token imprints.’ And they had been doing accounting and war before they even figured that out.

Most dead civilizations never got beyond addition and some simplified recipes for subtraction, ratios, etc. Even by the Italian Renaissance, double-entry bookkeeping was created to only use addition: subtraction was a rare skill and hard to checksum.

By World War 2, we had rooms full of people called ‘computers’ – they manipulated numbers. Your average ‘computer’ in some of those rooms was not asked to work with negative numbers: the rules were too subtle, so -6 times 24 would be punted to an advanced ‘computer’ person.

In summary, your notion of 27 has very little in common with neurons in your brain that respond to groups of 3.

Caleb Everett’s new book Numbers and the Making of Us: Counting and the Course of Human Cultures is also interesting on this topic.

Azkyroth:

That sounds totally unreasonable to me. What secret special sauce does a certain collection of numbers have, which all other numbers don’t, that is supposed to indicate that the ones in that collection “really exist”? If any number exists, then what kind of distinction or principle or rule or whatever would tell you that any other number doesn’t?

Let me suppose you’re somehow correct about those numbers or about any other specific collection you like. Does anything make the number (1+1+1+1) “reality-deficient,” or does it lack something that the existing numbers have? What if we consider (e^(1+1))/(pi-1), or what if we did any other sequence of mathematical operations with them? At some point, somehow, for no obvious reason, we’re no longer talking about reality? (If we ever were talking about reality.) Why should we believe that’s true?

Does a 7×7 identity matrix exist? After all, it’s just made up of lots of 0s, with 1s on the diagonal, so maybe you’d be happy with that. That sounds nice and simple. But then again, a matrix is not a number, so it’s not at all obvious what I’m supposed to conclude given the fact (if it’s a fact) that 1 and 0 exist. Maybe only 1×1 matrices exist (as boring as they are), or maybe it’s only those with various other properties (which may not be boring at all)…. But why should we think any of these considerations have anything to do with what’s real and what isn’t real?

What about the eigenvalues of some arbitrary matrix made of 1s and 0s (or pis or whatever existing numbers there are)? Now we’re back to talking about numbers again, but they’re derived through this possibly suspicious or non-reality-certifying process (although it’s just algebra), so what kind of metaphysical status do they have? How do we tell? What if certain eigenvalues happen to be 1 or 0 or pi or e? Then it’s a mixed bag of real and fictitious stuff, or something like that, or is it not even a bag? How could we go about determining any of this, by appealing to any other facts about “real” stuff? Or are you not using genuine facts to decide questions like that, and if not then what are you doing and why should we think it’s telling us the truth?

The philosopher Gottlob Frege wrote a very beautiful book called The Foundations of Arithmetic (Die Grundlagen der Arithmetik) which asks: WHAT, exactly, is a number? What, for instance, is the number three? Cutting to the chase, he ends up defining the number three as the set (or meta-set) of all sets which contain exactly three elements each. When we say “the number three” we are really referring to this meta-set. So the set of Rheinmaidens, and the set of kings who allegedly journeyed to Bethlehem to pay tribute to the baby Jesus, and the set of rings for the Elven Kings under the sky, and the set of ordinary phases of matter, and the set of blind mice whose tails were cut off by the farmer’s wife, are all elements of the meta-set which is the number three.

handsomemrtoad —

Or, under another definition of “three,” three is {{},{{}},{{},{{}}}}. Somehow, mathematics works fine based on that definition. (The details are mostly beyond me, though. I recognize that natural numbers behave the same under any of a number of different definitions, and work from there.)
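That nested-braces definition is the von Neumann construction, in which zero is the empty set and each successor is the previous number together with a set containing it. A small sketch of how it unfolds follows; the function names `von_neumann` and `show` are made up for this example.

```python
def von_neumann(n: int) -> frozenset:
    """Encode n as a von Neumann ordinal: 0 = {}, k + 1 = k ∪ {k}."""
    current = frozenset()
    for _ in range(n):
        # The successor contains everything in k, plus k itself.
        current = current | frozenset({current})
    return current


def show(s: frozenset) -> str:
    """Render nested frozensets in brace notation, smaller elements first."""
    return "{" + ",".join(show(e) for e in sorted(s, key=len)) + "}"


three = von_neumann(3)
print(show(three))  # {{},{{}},{{},{{}}}}
print(len(three))   # 3
```

Under this encoding the set standing for n has exactly n elements, which is part of why arithmetic built on it behaves the way we expect. It does nothing, though, to explain why numbers are useful for interpreting the world, which is the complaint in the next paragraph.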

I don’t think I’ve seen a definition of natural numbers (let alone numbers generally) which satisfies me both mathematically and practically. The formal mathematical definitions are plenty in order to be able to do math, but don’t help much in clarifying why numbers are useful in interpreting the world; and the non-mathematical definitions seem to slip into mush.

Would it be fair to say that the BEPs are selectable? If so, then when the cultural behaviour arises, does it possibly become an environmental context that enhances selection of the BEPs?

I wonder if this isn’t just a smaller part of a bigger question. That would, I think, be “how did brains develop the capacity to abstract?” That seems to be tied up with the development of language, since I think that abstraction requires symbolism. Or does it? If it is do we know that only human brains can symbolize?

Anyway, a good and thoughtful post from our gracious host.

We have been farming, which gave us the ability to live in “civilization”, for far too short a time to have changed very much from our hunter-gatherer selves. I am not discounting the fact of culture, nor that culture evolves, nor that cultures differ greatly. Whatever it is that allows us the use of numbers and subsequently mathematics has been there all the time; the question is, what is it?

From some TV programs I have been told that some other animals also seem to possess ideas of numbers of things.

I do not know how it could be tested to see if other animals had an abstract concept of numbers or the ability to think of numbers not connected with any objects but we certainly have that ability.

I may be going out on a limb here, but the ability to think of anything at all disconnected from its object, to think abstractly, seems to be a trait we alone possess, though I have no idea how that could be proved, let alone how we manage to do that in the first place.

uncle frogy

1 = “a thing.” A thing, and another thing, and another thing, is 1, and another 1, and another 1. “Three” is an abstraction.

0 = “noticeable absence of a thing”

e and pi are “real” in the sense of the relationship between physical quantities.

Jesus Fucking Christ, I’m sorry I tried to fucking contribute. Asshole.

Doing some more thinking on my comment in #24.

How would an ability to identify “quantity” be a selectable trait?

One possibility would be if it had the ability to allow harmful behaviour to be regulated.

Consider a squirrel gathering nuts to store for winter. Is it OK for it to just gather as many as it can until time runs out, or is there a downside to that? If there is a downside, then having an idea of the quantity “enough” would allow a squirrel to avoid expending the energy required to overstock, or perhaps to avoid holding out for a storage space that is “too big,” and rarer than one that is “just right.”

I don’t know if this is a feature of squirrel nut gathering in particular, but I think that is the type of situation that could make the ability to recognise quantity a selectable trait.

Azkyroth at 27 wrote:

Really? The text you quoted was not a belligerent attempt to shut you down. It was a statement of disagreement. You want to chuck in personal insults as part of your response now?

@26 unclefrogy

I guess it’s a question of hardware or software. It took millions of years for biological evolution to come up with a brain that was capable of “one two many” — an ability which seems to be something we share with corvids and other animals.

The adaptation that I think makes us unique is our capacity to communicate and copy ideas/memes rapidly amongst a group. Once we had that adaptation, cultural evolution took over — and then, it turned out that the same hardware that let us run the “one two many” software was also capable of running “You have a village of 500 individuals and sixty five cultivated hectares, which means you owe the king 27 bushels of rice.” And the software evolved from the first program to the second in a few short thousand years, which as you point out, is nothing in terms of biological evolution. For me, how that part worked is the more interesting question.

One, two, many, lots.

Speaking of the concept of numbers: I’m reminded of a time when my brother gave me a challenge. He said, “Define what an integer is.” It turned out to be surprisingly tricky.

The answer he told me consisted of two parts.

Part 1: The number “1” is an integer.

Part 2: Any integer, plus or minus an integer, is also an integer.
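To see how far those two parts get you, here is a rough Python sketch that starts from the set containing only 1 and keeps adding sums and differences until nothing new turns up. The cutoff `bound` is my own assumption, added only so the loop terminates; the function name `integers_up_to` is hypothetical.

```python
def integers_up_to(bound):
    """Close {1} under + and -, keeping only values with |x| <= bound."""
    found = {1}  # Part 1: the number 1 is an integer
    while True:
        # Part 2: any integer plus or minus an integer is also an integer.
        sums = {a + b for a in found for b in found}
        diffs = {a - b for a in found for b in found}
        new = {x for x in sums | diffs if abs(x) <= bound} - found
        if not new:
            return found
        found |= new


print(sorted(integers_up_to(3)))  # [-3, -2, -1, 0, 1, 2, 3]
```

Zero and the negatives fall out of the subtraction rule. Strictly speaking the two parts also need an unstated third clause, that nothing else counts as an integer, which is what "the smallest set closed under these rules" amounts to in the sketch.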

M just M @23

In my opinion, the Peano Postulates are darned near perfect for that. That’s just my opinion though.
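As a rough illustration of the Peano approach, here is a small Python sketch: a zero, a successor operation, and addition defined by recursion on the second argument. The class and function names (`Zero`, `Succ`, `add`, `from_int`, `to_int`) are my own, and the sketch leaves out the induction axiom, which has no direct counterpart in running code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zero:
    """The starting natural number."""


@dataclass(frozen=True)
class Succ:
    """The successor of another natural number."""
    pred: object


def add(a, b):
    """Addition by recursion: a + 0 = a, and a + S(b) = S(a + b)."""
    if isinstance(b, Zero):
        return a
    return Succ(add(a, b.pred))


def from_int(k):
    """Build the Peano numeral for a non-negative Python int."""
    n = Zero()
    for _ in range(k):
        n = Succ(n)
    return n


def to_int(n):
    """Count the successors wrapped around Zero."""
    count = 0
    while isinstance(n, Succ):
        n = n.pred
        count += 1
    return count


three = Succ(Succ(Succ(Zero())))
print(to_int(add(three, from_int(2))))  # 5
```

Nothing in the sketch says what a number "is"; it only says how numbers relate to one another, which is arguably the point of the postulates.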

One problem I have in general about “thinking how cognition works” is that it assumes cognition is more or less the same across people. What if it’s not? What if people we consider “more intuitive” have some thought processes that are quite different (let’s imagine they parallelize or multi-thread) from “more analytic” people (let’s imagine they do depth-first tree searches and make sure they are thinking acyclically)? Of course that’s a silly hypothetical, but if we accept the hypothesis that language is both the fabric of thought and the product of it, we could have very different cognitive systems manifesting as differences in vocabulary.

handsomemrtoad@#23:

he ends up defining the number three as the set (or meta-set) of all sets which contain exactly three elements each.

That sounds as circular as the Place Etoile. Three is three, oh, ok.

I’m not sure how to phrase this, but I feel like one requirement of a definition is that it includes something that references something that is not the thing under definition. For numbers that might be possible inductively. We could say “one” is a label for the number of things you have when you have a thing (points to a thing) and “two” is a thing and another thing. And “three” is “the number of things you have if you have two things and another thing.”

Marcus Ranum @#35.

No, not circular. He’s not trying to define the meaning of the word “three” using a definition which includes the word “three”–that WOULD be circular. He is trying to identify the referent of the NOUN-PHRASE “the number three” using the adjectival phrase “having three elements”. That is NOT circular.

(Clutches temples) Okay.

not exactly “belligerent” but certainly beyond simple disagreement.

All language–even deictics–is abstraction. “One” is an abstraction–it’s one of the ways languages “mean” or signify.

Whorf, a long time ago, noted a distinction between “real” and “imaginary” quantities (keeping in mind that numbers are also lexical items and therefore already abstractions). We can, potentially, see “three” cats, and Whorf claimed a realness for that use of numbers; but we cannot see “three days” unless we are speaking metaphorically and using such metaphors to calque an imaginary as a real (we should be skeptical of the terms here). Whorf then pointed out that some languages treat real and imaginary quantities the same (three years and three cats are constructed the same way), while other languages treat them differently. Whorf found this interesting.

Human exceptionalism? Not when it comes to snowboarding. Corvids do it too.

Oops. Sorry. In the preview I saw only the link!

re 31: I’m glad someone said it. Troll counting!

More seriously, good post by PZ. BEPs are a concept that I think is highly important (I think of it often as I watch people drive cars), but one I have never had a name for.

There are two different sorts of questions (along with many others) that you might ask.

1. What are numbers? Or to ask a more loaded question, how might we describe what type of thing a number is (if they’re things)?

2. How can we distinguish one number from another? If they’re not all indistinguishable, what makes one different from another? Or maybe you want to know, if there were some consistent and complete systematic treatment of the subject, what would it tell us about all numerical relationships? Perhaps we can’t exactly do that, but the best we can do, whatever that amounts to, is hopefully satisfying enough.

What was described above as Frege’s position looks like an attempt to answer both (maybe a failed one but an attempt nonetheless). A particular number is a particular meta-set, we’re told, or it’s a certain class of them or some such thing. That’s what it is to be a number. I doubt my concepts of “sets” are any clearer than they are for numbers, but that’s supposed to be helpful somehow. And that at least is one requirement for being a number; however, other things may fall under the same banner, so we’d need to do a little more work to get specifically to numbers, instead of more generally all of the things which qualify as sets.

How do you tell a number apart from a different number (if there are multiple numbers)? Well, you do that by examining the sets using this sort of set theory, and their differences (if any) are associated with various set-theoretic features that are not logically equivalent to one another. If they’re indiscernible in all relevant respects, then they’re identical. Sounds okay I guess, but of course the way I just put it is probably too sketchy to be very useful to anybody.

Anyway, the point is, these aren’t the same questions. Let’s make this a little more boring and a little bit easier. Suppose I want to know what tables and chairs are. You can tell me various things about tables and chairs, some of which may be wrong or not very well thought-out, while others may be more or less on the right track. A decent first-pass is to say they’re physical objects, certain configurations of matter in spacetime which look and act like the things we refer to when using the words “table” and “chair” appropriately, possessing certain physical properties or satisfying certain conditions, etc. Good enough.

However, it just won’t do, to instead characterize anyone’s concept of a table or chair, not unless we’re actually forced to go down that rabbit hole and be idealists or solipsists; and I don’t think there’s a need for any of that bullshit. That would not be a decent substitute at all, so if you stop there, I think you’re going to be really fucking lost. When thinking of tables and chairs, if that’s what you’re really doing, your ideas are about those physical objects — they don’t somehow constitute the same thing as the objects themselves. You can’t of course logically deduce anything like this; it’s just a fact that we have to take on board, if this line of thought will go anywhere productive and not lead us straight off a cliff.

So, starting from there, you’re not committed merely to the claim that your ideas of them exist, but also that those physical things exist. In other words, you’re also committed to all of the logical consequences of those things, not only what follows from you having an idea but everything that follows from those things being a real part of the furniture of the universe (excuse the pun). Tables and chairs exist, so we have to deal with that somehow, and we have to take them seriously as real, proper, mind-independent objects.

Those are of course “concrete” objects, and maybe you’re a little surprised that anybody could even ask whether there are such things (as opposed to our ideas). In a very straightforward way, they’re made of some kind of physical stuff located in spacetime, and they’re correctly described by physical laws detailing their motion (or properties like mass, charge, temperature, color, interactions with other objects via forces, and various other derived notions). They don’t just exist but exist somewhere at some time, it turns out they’re composed of smaller physical constituents like molecules, they have physical effects on other objects which also satisfy all of these criteria, you as a physical object can therefore interact with them and observe them, and so forth. That’s more or less how I’d describe what it is to be an object of that sort.

So, here’s a fairly simple question: is there anything which isn’t “concrete” in this sense? You might think the answer is obvious, maybe just by the definition of some English word or another, but for millennia some smart people have thought yes while others have thought no — and generally, they have no reason to give a shit about how you define words. So, that’s not going to help. Do you have a logically-sound, rock-solid way of getting one answer or another? How would that work? That’s an honest, non-rhetorical question — I don’t know how that would work. And you don’t get to just make up arbitrary criteria like “if I can kick it like a rock, then it exists and otherwise it doesn’t.” That sort of horseshit may be amusing sometimes, but it just won’t work as a serious proposal. Does this seem awfully, surprisingly, perhaps intractably difficult? Sure it seems that way, if you take it seriously. But why should that stop us? If you think you’ve got better things to do, then go do that, if you feel like it.

There’s another thing going on under the surface of these discussions, and it’s probably good to address that too. I’ll go straight to the point, since this comment is getting too long…. If there are any “non-concrete” things, abstractions or what have you (numbers may be the best example), that’s no reason for atheists/naturalists to be at all worried. That seems pretty obvious to me, but it’s worth saying. If that’s so, you’d merely need to come up with a somewhat richer metaphysical picture, one that doesn’t only have physical stuff moving around, if that’s really all you thought you needed to begin with. Big deal. This is not to say anybody has any easy options, but it would generally help to set aside your worries about theism and so forth, because at best that will just distract from the problem at hand, which is very much a separate problem.

I mean, we’re not talking about ghosts and gods and magical powers here. That simply isn’t the case. And you’d definitely be wrong to treat numbers as somehow the logical equivalent of ghosts, to think that they might constitute any sort of indirect evidence of ghosts, that the origin of numbers (if indeed they’re real) must somehow involve the activities or existence of ghosts (or the activities of anything else for that matter, people included), or that in any sense this fact would mean we need to tell ourselves a ghost story of some sort. (Same deal with gods, etc.) None of those conclusions would make any fucking sense, as seems fairly clear if you just think for even a moment about what they’re proposing. Of course, just saying that doesn’t get us far at all toward a legitimate answer. But you’ll be in a better position to evaluate these questions, if in the back of your mind you’re not assuming the genuine issues here revolve around any bullshit like that. They don’t.

OK, I’ll give the Corvids that one. But, I have them beat.

Never has a Corvid been observed to do that which I, a human, do: walk with a cane.

Ain’t much, considering, but it’s one singular item that they’re not capable of doing, since they also enjoy tool usage.

One thing I wanted to add at this point: Do sets exist? If not, then that would answer the question about numbers (according to Frege, as he’s represented in this thread at any rate). But if it’s just plain assumed that there are sets, then you’re still assuming the conclusion and have just pushed it back another step to a question about sets.

https://en.wikipedia.org/wiki/Yan_tan_tethera

Caleb Everett (son of Dan Everett, whose work with the Pirahã caused such an uproar in linguistics) has just published a book on “anumeric” societies, “Numbers and the Making of Us”. Here’s an article by him discussing this. His findings are more than startling. And I suspect they bear strongly on this discussion.

https://theconversation.com/anumeric-people-what-happens-when-a-language-has-no-words-for-numbers-75828

Would it matter if a child from a society without large numerical cognition could easily be taught numerical cognition? That is, if this is a specifically-selected trait, then those from cultures potentially shaped by competitive selection mediated by this trait should be much more adept than those from cultures in which only quantical discrimination is practiced, correct? Clearly not an easy/ethical experiment to perform, but perhaps there are data on less ethical versions that have already occurred that demonstrate the phenomenon’s plastic nature?

I’m thinking of something like Sequoyah’s Cherokee syllabary — abstract symbolic representation he devised as an adult despite (presumably) a lack of selection for that specific type of thinking. He trained Cherokee children to read this way, and I think this demonstrates literacy isn’t ‘selected’. If that’s correct thinking, maybe an analogous example for maths would also be helpful?

I actually can’t do this. Visualize numbers, that is. It’s why I can’t do math in my head. Eight is not a word meaning a number to me, it’s a five-letter word with the letters e, i, g, h and t. Even the number 8 is a drawing to me, it’s the infinity symbol sideways, not a symbol of a numerical quantity. So how do you add a picture to a picture or two words together and get a number? I’ve never been able to do this.

onion girl

have a ginormous *hug*

.

when I awake for no reason at 3:15AM, I treat 3:15AM as a number:

315

and I try to find the prime factors before 3:16

315 = 3x3x5x7 easypeasy

316 = 2x2x79

317 = gimme a minute … zzzzzzzz
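For the daytime version of this game, a minimal trial-division sketch in Python does the same job. The function name `prime_factors` is just an illustrative choice, and trial division is only one of several ways to factor small numbers like these.

```python
def prime_factors(n):
    """Return the prime factors of n (n >= 2) in ascending order."""
    factors = []
    d = 2
    while d * d <= n:
        # Divide out each factor completely before moving on.
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        # Whatever is left has no divisor up to its square root, so it is prime.
        factors.append(n)
    return factors


for clock in (315, 316, 317):
    print(clock, prime_factors(clock))
# 315 [3, 3, 5, 7]
# 316 [2, 2, 79]
# 317 [317]
```

So 317 is prime, which may be why it takes more than a minute: nothing up to 17 divides it.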

Just this week, a YouTube video by Vox appeared discussing colour, and why all cultures worldwide, whether isolated or connected by trade, discern and discriminate colours in the same order: black and white first, red, yellow and green, blue and then others. And mostly in the same 9-12 ranges of colours.

I suspect our ability to discriminate numbers is directly related to our digits and why we use base ten. I find it easy to visibly count by fives or tens and get accurate approximations for large groups.

Do people still take Frege seriously? Try Peirce or Jakobson, Austin too (avoid, of course, Searle). Sapir and Whorf had far more interesting things to say than Frege. See also Charles Taylor’s recent book The Language Animal.