Netflix’s algorithm engages in queerbaiting. Whenever we browse movies and TV shows, Netflix has a clear preference for showing promo images with attractive men looking meaningfully into each other’s eyes.

I think many of these shows actually do have some sort of same-sex relationship, but it’s incidental or on the margins. Others, I suspect, don’t have any queer content at all! And then there are some that I thought must be a trick, with hardly any queer content to speak of, but that, after some research, I think are actually fairly queer. Netflix’s tendency to show the most homoerotic marketing material regardless of actual content sure makes it difficult to distinguish.

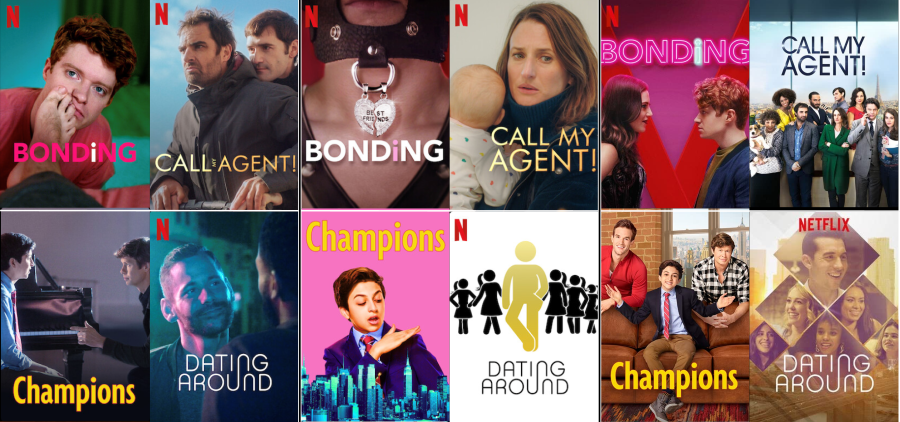

I’m very sorry but I’m going to have to show you some homoerotic imagery. Purely for scientific purposes, of course.

Click images for full size. Sorry I had to break it up into multiple parts to stay within file size restrictions. Please credit me if you use my collage.

To create this image, I screencapped a bunch of homoerotic promo images on the Netflix app, and compared them to the equivalent images on my (straight AFAIK) brother’s account, as well as the cover images on IMDB. I tried to exclude shows that I think actually do have significant focus on same-sex relationships, but that’s a subjective judgment, and one I had to make without actually watching any of them. The point is, it’s difficult to tell.

I’m not the first one to complain about Netflix’s misleading promo images. For example, Black users have complained about images that focus on Black minor characters, giving the misleading impression that they are more central to the film.

Now you might be thinking, Netflix is some evil megacorp acting in their own interests, against the interests of their users. But actually, in my opinion as a professional data scientist, I don’t think that’s what’s going on here. Netflix doesn’t stand to benefit from clickbait. They already have our subscription money; they don’t earn more by tricking subscribers. Their goal is to optimize user experience so that people don’t drop their subscriptions. I think clickbait is a problem even from Netflix’s perspective, and they just don’t have a good solution for it.

It’s impossible to say what’s really going on in Netflix without insider knowledge, but Netflix has discussed this very problem on their tech blog.

> We also carefully determine the label for each observation by looking at the quality of engagement to avoid learning a model that recommends “clickbait” images: ones that entice a member to start playing but ultimately result in low-quality engagement.

As the article explains, the first guardrail against clickbait is their team of artists and designers, who create a broad pool of images that are representative of the title. However, this is difficult to do at scale, and suffers from inherent subjectivity. And there’s a risk that the algorithm might just select the most clickbaity images in every pool, counteracting whatever wisdom the artist team may have had.

So to further avoid clickbait, their models are optimized for “quality plays”. This excludes, for example, a user starting to watch a film, and then stopping in the middle. This is a good way of dealing with the problem, but the devil is in the details. It’s easy to imagine that the algorithm that maximizes high quality plays will also have a high number of low quality plays. Does the algorithm get penalized for increasing low quality plays? How big is the penalty? How big ought the penalty to be?
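
To make the penalty question concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `ImageStats` fields, the counts, and the `score` formula are illustrative assumptions, not Netflix's actual objective. It only shows that which image "wins" can depend entirely on how heavily low quality plays are penalized.

```python
from dataclasses import dataclass


@dataclass
class ImageStats:
    """Hypothetical per-image counts; the fields and numbers are made up."""
    name: str
    impressions: int
    quality_plays: int       # e.g. the viewer watched most of the title
    low_quality_plays: int   # e.g. the viewer quit partway through


def score(stats: ImageStats, penalty: float) -> float:
    """Quality plays per impression, minus a penalty per low quality play."""
    return (stats.quality_plays - penalty * stats.low_quality_plays) / stats.impressions


def best_image(candidates: list[ImageStats], penalty: float) -> str:
    """Return the name of the candidate with the highest score."""
    return max(candidates, key=lambda s: score(s, penalty)).name


candidates = [
    ImageStats("clickbaity", impressions=1000, quality_plays=60, low_quality_plays=140),
    ImageStats("representative", impressions=1000, quality_plays=50, low_quality_plays=20),
]

# Print the winning image for a few assumed penalty values.
for penalty in (0.0, 0.05, 0.1, 0.5):
    print(penalty, best_image(candidates, penalty))
```

With no penalty, the clickbaity image wins because it produces more quality plays in absolute terms. In this toy data the choice flips once the penalty exceeds roughly 0.083, which is why the size of the penalty matters so much.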

And what about other potential negative effects of clickbait? For example, the excess of clickbait means I need to hover on each title for a while, maybe look it up on IMDB, to see what it’s actually about. Does Netflix’s algorithm get penalized for promoting hovering behavior? Maybe they can’t penalize such behavior, because hovering on a title may just as often indicate positive interest in that title!

Or what if the clickbait draws attention away from other more worthy titles? This could be exceedingly difficult to account for, because now you need to track behavior not just towards one title, but towards many.

There may also be limitations caused by finite amounts of data. While Netflix might have a lot of data overall, they need to make a promo artwork decision separately for every single title, and some of those titles might not get very many views. Anything we do to address clickbait would introduce more complexity into the model, and more data is required for the model to learn those complexities.
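
A rough back-of-the-envelope sketch of the data problem, assuming a simple binomial model and a made-up 5% play rate (both are simplifying assumptions for illustration): the uncertainty in an image's measured play rate shrinks only with the square root of the number of impressions, so comparisons on low-traffic titles are noisy.

```python
import math


def standard_error(rate: float, impressions: int) -> float:
    """Standard error of an observed rate under a binomial model."""
    return math.sqrt(rate * (1.0 - rate) / impressions)


# Hypothetical 5% play rate, measured on titles with different traffic.
for impressions in (100, 10_000, 1_000_000):
    se = standard_error(0.05, impressions)
    print(f"{impressions:>9} impressions: 5% +/- {100 * se:.2f} points")
```

At 100 impressions the standard error is about 2 percentage points, which is large compared to a 5% rate, so small differences between two candidate images would be hard to detect.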

There are many possible reasons Netflix might have failed to address their clickbait problem, including the possibility that it’s simply not very high on their priority list. Maybe it isn’t on their radar, or maybe they believe the problem of clickbait images is too small and difficult to bother with. Who knows? All I can say is that right now, queerbaiting is a dominant aspect of our Netflix experience.

I’m not sure how much the algorithm knows, but I did once notice that the promo/browsing pics on the Netflix account of a heterosexual friend of mine featured significantly more attractive women than my own, even for the exact same movies and shows.

Huh… I never realised Netflix adjusted their images to the audience…

It may however be “clickbait” as in “clickbait that works”, as opposed to “clickbait that generates clicks”. Who knows… perhaps their algorithm actually caught on to something ‘real’, in the sense that queer people tend to stick with their subscription more if presented with “promo images with attractive men looking meaningfully into each other’s eyes” (and the opposite for non-queer people).

Or it may just be clicks, and people haven’t realised the correlation between “clicks” and “continuing your sub” is quite weak.

@1 kremer

As for “how much the algorithm knows”, it’s usually something fairly simple such as:

– count how often you click on promos featuring attractive women

– if it’s more than average, then display more such promos; if less, display fewer
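
That rule could be sketched in Python roughly as follows. The function name, the `average_rate` baseline, and the fixed `step` are hypothetical, chosen only for illustration; nothing here is known to be Netflix's actual code.

```python
def adjust_share(clicks_on_tagged: int, total_clicks: int,
                 average_rate: float, current_share: float,
                 step: float = 0.1) -> float:
    """Nudge the share of promos with a given trait up or down.

    Compares this user's click rate on such promos with the average
    rate across all users, then moves the share by a fixed step,
    clamped to the range [0, 1].
    """
    if total_clicks == 0:
        return current_share  # no evidence yet, leave the share alone
    user_rate = clicks_on_tagged / total_clicks
    if user_rate > average_rate:
        return min(1.0, current_share + step)
    if user_rate < average_rate:
        return max(0.0, current_share - step)
    return current_share


# A user who clicks such promos 40% of the time, against a 25% average.
print(adjust_share(clicks_on_tagged=8, total_clicks=20,
                   average_rate=0.25, current_share=0.3))
```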

Great post, Siggy.

@robert79,

Yeah, it’s possible that Netflix has some internal analysis showing, for example, that low quality views don’t really impact subscriber retention. We don’t really know.

Another thing I don’t know is whether Netflix’s algorithm has any features that explicitly refer to “attractive women” or “men staring at each other”. It’s possible information like that is provided by the artists and designers who create the images. But even if Netflix doesn’t do that, the algorithm can naturally learn this behavior if it just finds a group of people who all tend to gravitate towards a certain group of images. The algorithm may not know that this group of people is gay, and may not know that the images are of men staring at each other; it just knows that viewers are watching shows.

Netflix once recommended me one of the Terminator movies, with a picture of Arnold Schwarzenegger looking like… well… Arnold Schwarzenegger, under the category of “movies with a strong female lead”. So I’m pretty sure the images are not human labelled 😀

Interesting. I spent a fair amount of time going through the pictures you provided and obviously they are not random. I wonder how that works when everyone in the household shares a password? If the main watchers are children, do the promos shown to other users feature what children would enjoy? (Disclaimer: I do not have Netflix so what I know is what I read about it in places like here.)

@K,

The recommendations and promo images appear to be chosen independently for all family members on an account. My brother doesn’t get recommended the same set of titles, so to screencap these images, I searched specific titles and looked to see what promo image was provided in the search.

On the Netflix page, you get recommendations for a bunch of categories, e.g. “LGBTQ stories” or “Movies with a strong female lead” or “Recent Arrivals”. Each category has a list of recommended titles. A single title can appear in multiple categories, and sometimes with different promo images. (Although I only saw multiple promo images for one of the titles in this list.) Promo images can also differ across platforms. For instance, in a browser the promo images are all in landscape rather than portrait format, and the algorithm may choose completely different images in that case.

Yeah, I’ve noticed that when I watch movies that feature Black characters and families (“42”, “Raising Dion”, “LA’s Finest”, whatever), I have an upswing in the pictures that feature Black characters for a while. DS9 features more Sisko than Kira, that sort of thing. But when I watch a string of shows or movies that feature young women leads (Enola Holmes, Gilmore Girls, Moxie) then the promo images feature women (of whatever race) more often, so Kira replaces Sisko on the DS9 icon.

And these seem to be statistical. Meaning that Kira **more often** replaces Sisko, but Sisko doesn’t go away entirely. (The reverse is true after I watch a season of Raising Dion.)

There is also some level of combination, so that on Sex Education Ncuti Gatwa will sometimes feature no matter what my recent watching habits, but he is most likely to be featured when I’ve been watching both “gay stuff” and “Black stuff”.

And, yeah, if things end up being “click baity”, I notice. And that has happened. But … counterpoint! … I don’t mind that the images are not a flood of white guys and 20-something women with long hair and makeup acting as cishet eye-candy. Cause that shit’s just annoying.

@Crip Dyke,

Haha, well personally, I spent a long time looking at that collage, partly because it was a neat finding, but also eye candy. Netflix sure knows how to choose images that look good, it’s just more of a question of what our goal is here.

Good observation. I’ve noticed how many of the promo screenshots don’t really reflect the movie itself. Thing is, they still get my personal predilections wrong, after all this time.

I got Netflix some years ago, then set up an account for my wife.

Over time, the selections on each account have diverged substantially, to the degree that there’s hardly any overlap.

Thing is, neither of us feels our particular preferences are successfully being catered to.

My personal experience, most times when I feel like NetFlixing, I spend 20 minutes or so browsing the categories, and then give up. Seen all the stuff that’s any good, and so very much is crap. Especially NetFlix “originals”, which are bloated, padded pabulum.

Basically, something over 9 out of 10 things I end up checking up are such that I quit the effort after a few minutes. Sometimes after a few fives of minutes, if there’s potential.

So.

My list has a shitload of things that don’t really match my predilections, purely because I checked out something in the faint hope it would be amenable to me.

You know https://en.wikipedia.org/wiki/Sturgeon%27s_law — well, more so for NetFlix.

The thing is, there’s just enough, and it’s there.

—

I don’t doubt that, over time, the algorithm will improve.

(That noted, it’s still the case that the most likely accurate weather forecast overall is “tomorrow will be like today”)

a more model-focused, less gender/orientation-focused thought:

based on the limited promo images above, comparing the ‘Our account’ images (for 2 users) to the IMDB ones (I guess these are for the general population):

‘Our account’ often

1) picks 2 people in one image, rather than an individual, a group, or an object

2) shows an emotionally intimate (gentle) interaction, rather than a physical one (more aggressive, IMO).

I wonder if Netflix has promo image preference indexes, based on

1) image theme preference (other animals, humans, objects, scenes, or abstract images)

2) population per image

3) interaction aggressiveness preference (no interaction, subtle, romantic/friendly, obvious physical attraction, violent, etc)

4) LGBTQIA preference

5) ethnicity/race preference

also, I wonder how they made those images

whether they design multiple promo images per show/movie and pick one to show based on user preference; or, in a more advanced way, they have image elements that they put together to create a promo picture, based on the image elements’ characteristics.

@bubble

The Netflix tech blog says that they have artists and designers select the initial set of images, and the machine learning model selects from among those.

I can only speculate, but it’s possible that the artists and designers add tags or descriptions to each image. For instance they might describe one of these images as “two men staring at each other”, and then the model might pick up that this tag is an important feature. This could cause a clickbait problem if the metadata doesn’t say anything about the relationship between the image and the content.

Alternatively, they might not have metadata, and it might just be selecting images purely based on the performance of each image.
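
As a minimal sketch of that second possibility, the selector below knows nothing about what an image depicts and only tracks how each one has performed. It uses epsilon-greedy selection, a standard textbook technique swapped in here purely for illustration; the class name, image ids, and play probabilities are all made up, and this is not a description of Netflix's actual model.

```python
import random


class EpsilonGreedySelector:
    """Pick among candidate images using only observed outcomes."""

    def __init__(self, image_ids, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {image_id: 0 for image_id in image_ids}
        self.successes = {image_id: 0 for image_id in image_ids}

    def rate(self, image_id):
        """Observed success rate (0.0 if the image was never shown)."""
        shown = self.shows[image_id]
        return self.successes[image_id] / shown if shown else 0.0

    def choose(self):
        """Explore at random with probability epsilon, else exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))
        return max(self.shows, key=self.rate)

    def record(self, image_id, success):
        """Log one impression and whether it led to a quality play."""
        self.shows[image_id] += 1
        self.successes[image_id] += int(success)


# Simulated viewers with made-up quality-play probabilities per image.
true_rates = {"two_men_gazing": 0.12, "ensemble_cast": 0.05, "landscape": 0.03}
selector = EpsilonGreedySelector(true_rates, epsilon=0.1, seed=42)
viewers = random.Random(7)
for _ in range(5000):
    image_id = selector.choose()
    selector.record(image_id, viewers.random() < true_rates[image_id])

print(selector.shows)  # how often each image ended up being shown
```

A selector like this would tend to favor whichever image performs best for a given audience without any tag saying what the image shows, which is one way the clickbait problem could arise even with no metadata at all.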