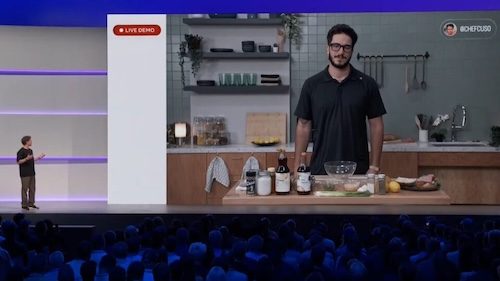

Mark Zuckerberg has sunk billions into AI, and a whole slew of grifters have been doing likewise, so I really appreciate a good pratfall. He set up an elaborate demo of his Meta AI, stood on a stage, brought up a chef, and asked the AI to provide instructions…to make a steak sauce. Cool. Easy. I could open a cookbook or google a recipe and get it done in minutes, but apparently the attempt here was to do it faster, easier, better with a few vocal requests.

“You can make a Korean-inspired steak sauce using soy sauce, sesame oil…” begins Meta AI, before the chef, Mancuso, interrupts to stop the voice from listing everything that happens to be there. “What do I do first?” he demands. Meta AI, clearly unimpressed by being cut off, falls silent. “What do I do first?” Mancuso asks again, fear entering his voice. And then the magic happens.

“You’ve already combined the base ingredients, so now grate a pear to add to the sauce.”

Mancuso looks like a rabbit staring into the headlights of an oncoming juggernaut. All he has left is panic. There’s nothing else for it; there’s only one option left. He repeats his line from the script for the third time.

“What do I do first?”

Then the audience laughs.

“You’ve already combined the base ingredients, so now grate the pear and gently combine it with the base sauce.”

Poor Mark, publicly embarrassed by a demo designed to be a trivial, rigged display, and it still flopped.

What’s so joyous about this particular incident isn’t just that it happened live on stage with one of the world’s richest men made to look a complete fool in front of the mocking laughter of the most non-hostile audience imaginable…Oh wait, it largely is that. That’s very joyous. But it’s also that it was so ludicrously over-prepared, faked to such a degree to try to eliminate all possibilities for error, and even so it still went so spectacularly badly.

From Zuckerberg pretending to improvise, “Oh, I dunno, picking from every possible foodstuff in the entire universe, what about a…ooh! Korean-inspired steak sauce!” for a man standing in front of the base ingredients of a Korean-inspired steak sauce, to the hilarious fake labels with their bold Arial font facing the camera, it was all clearly intended to make things go as smoothly as possible. We were all supposed to be wowed that this AI could recognize the ingredients (it imagined a pear) and combine them into the exact sauce they wanted! But it couldn’t. And even if it had, it wouldn’t have known the correct proportions, because it would have scanned dozens and dozens of recipes designed to make different volumes of sauce, with contradictory ingredients (the absence of both gochujang and rice wine vinegar, presumably to make it even simpler, probably didn’t help), and just approximated based on averages. Plagiarism on this scale leads to a soupy slop.

What else would you expect? They’re building machines that are only good for regurgitating rearranged nonsense, and sometimes they only vomit up garbage.

And this is how you know today’s AI is not real artificial intelligence. If it were, it would have known to warn him off this plan, because Bill Gates had already shown the world that these sorts of highly publicized tech demonstrations easily turn into highly publicized tech blunders.

“Hey, computer, help me rescue the Kobayashi Maru.”

“I’m sorry, I can’t do that, James.”

That linked article should come with a warning for people with epilepsy. Urgh, how many ads, flashing objects, and pop-up crap can you cram onto a page to make sure it’s completely unreadable for humans?

This is the part that truly bothers me.

I supposedly have constitutional rights to privacy and never consented to the tech turning itself on to take pictures of me or my home, or listen to any conversations so it can try to sell me garbage.

As for the AI, even though I never installed it, it’s there giving completely imaginary and utterly wrong answers to questions. I curse it right off my screen every day.

AI Isn’t in One Bubble but Three, Expert Warns Investors and Businesses

Those of you using Gmail: Google has added extra AI features to Gmail, and they turned them on by default without even asking you. You can turn them off by going into Settings > See all settings and turning off Smart features.

The worst part of all this is the audience. They are the problem, not Zuckerberg.

They were laughing WITH him, when in any sane universe, they would have been laughing AT him.

Zuck is so desperate it’s cringe. First he went “hey let’s reinvent Second Life” and now he’s saying “hey let’s reinvent Siri”. Both of those are viable products, but they aren’t tens-of-billions-a-year products.

More people would be commenting about how horrible Zuckerberg is if Elon Musk hadn’t gone full Nazi.

Thanks, Reg. Smart Features disabled.

That is true for a billion or so people.

I use Google search a lot, several times a day at least.

Most times the top answer is the AI one. Which is often right but I still can’t trust it so have to check the real sources anyway.

I would really like to turn off the Google AI search function.

Anyone know if that is possible and how to do it?

The alternative might be DuckDuckGo, which I use sometimes.

That is good to know. Thank you.

I have a Gmail account but it isn’t my main email.

My cell phone keeps wanting me to add a “completely free” AI, even though it already has far too many functions that I don’t want and never use.

I keep saying no.

I use noai.duckduckgo as my default search.

@10 Turning off AI overview in Google search

“Just give me the f***ing links!”—Cursing disables Google’s AI overviews

What’s the good of a super intelligent computer if it can’t decide whether to have Corn Flakes or bacon and eggs for breakfast?

So what was Mancuso trying to do? Was he deliberately sabotaging it? The whole thing was weird (especially blaming the wifi).

@Raven

As noted by Reginald, you can get the AI off your Google search screen by cursing at it. Simply type something like “Fucking google and your fucking goddamned AI crap. stop enshittifying the world”

Et voila! It’s gone. If eavesdropping corporations insist on violating my privacy I can certainly give them an earful. Searching for ridiculous word strings that include a few phrases in ancient languages is also a fun way to discombobulate their algorithms.

Malicious compliance is sanctioned by Jebus himself.

AI is Artificial; it is NOT Intelligence, no matter how hard they push it at us. It poses MANY dangers to everything it contaminates.

Here is a thoughtful, accurate article on the dangers of AI in computer operating systems.

http://distrowatch.org/weekly.php?issue=20250922

Avoiding the spread of AI services

While some people have found uses for AI agents, either as tools or toys, there are a lot of issues surrounding the use of AI. AI agents scape (sic – scrape) huge amounts of data from the Internet, send identifying information to the companies running them, This makes most forms of AI a legal, security, and privacy nightmare, not to mention the environmental concerns.

In short, I sympathize with you wanting to avoid any operating systems with AI agents and tools baked into them by default.

I should also mention that the DistroWatch segment about AI is near the bottom of the article I linked to.

Here is another good article on Artificial (non)Intelligence:

https://www.nakedcapitalism.com/2025/09/proof-of-ai-garbage-in-garbage-out-incorrect-results-traced-to-reddit-and-quora.html

@10 I would really like to turn off the Google AI search function.

You can append “-ai” to any Google search.

In the BI (Before iPhone), Apple had a deal with Motorola to put an iTunes button on their phones. At the developer conference that year, Jobs did a demo of the feature. He pushed the button. Nothing happened. He pushed it again. Still nothing. A sly smirk spread across Jobs’s face, and he said something like, “I guess it’s not working.” A year or so later Apple intro’d the iPhone. So, failed demos can be harbingers of the future, although I doubt Zuck is that savvy.

Thank you for the warning Tethys! I will not watch it.

Or …Angel, you can save yourself the three-character typing time on each and every single search all the time always by using a browser that allows you to click one button once to automatically turn it off always for all time, like DuckDuckGo.

Double thanks to Reg! As I was going through the advanced settings, I was discovering (and then turning off) a bunch of Gmail features that I absolutely loathe. Then it said that to turn off Smart Features I needed to save my changes and reload. So I did, and then disabled Smart Features. Now every single thing I turned off simply says “this feature is not available unless you turn on Smart Features”. Danggit, I didn’t have to turn them off manually, and of course pretty much everything I abhorred about Gmail was due to this feature.

Mmmm, one wonders how it’s going to end – it’s a pretty overinflated bubble and it will burst sooner rather than later.

Problem is, the levels of investment are insane, and when it goes poof there will be repercussions for many. And as usual, the ones footing the bill won’t be the idiots who started pumping up this ridiculous bilge.

Something good will remain: in certain domains like protein chemistry there’s a lot of real, useful progress being made (several good explainers in Nature). Pattern recognition and some branches of applied math are also seeing benefits, I hear.

The rest is a heap of trash shoveled at us by those who should know better.

But they never do, eh. Money cannot buy happiness they say, and brains neither it seems. Go figure.

Seachange:

According to this article published today in the New Yorker (https://www.newyorker.com/magazine/2025/09/29/if-ai-can-diagnose-patients-what-are-doctors-for), A.I. can be very helpful in diagnosing and treating illness, or in assisting doctors in doing so. And it can make many mistakes. But it can be very useful and will probably be better in the future. It seems that all or almost all of the comments here are ridiculing A.I. It seems to me that often, at least, those here revel in ridiculing certain things or people and ignore the positive aspects.

chesapeake, yup.

I saw it all before when computers became ubiquitous — late 70s to early 90s.

Tech in general, even: cf. https://www.cheapism.com/old-technology/

It’s not the tech. Multiple people have mentioned areas where the AI tool is useful, though it’s definitely being overhyped.

My concern stems from the violation of my private communications and basic right to privacy. It’s not actually possible to turn off the microphone or camera and I never gave my phone permission to listen in to my life 24 hours a day and transmit my personal conversations in the first place.

Of course, “AI” was around long before this current hype bubble. Computer programs which play chess, checkers or Go better than any human being are AI, and so are the expert systems based on machine learning which people have mentioned. Technically, “generative AI” is the term for LLMs and for systems which generate graphics according to prompts, but people have come to just use the term “AI” for short.

[meta]

re: ‘It’s not actually possible to turn off the microphone or camera…’

I have a PC. Old-fashioned box with a keyboard and speakers and a display.

No microphone, no camera. Can’t turn them off because they are not there. :)

So, it is needful to specify which device and how. I presume that refers to mobile ‘phones’, which have those built in. But then, I note phones have lenses that can be covered with tape or whatever until needed, as can the speakers and microphones.

(But yes. That’s why I never got a modern ‘phone’ until mid-2020, when it became less hassle to have one than to not have one. A device that can track you and listen to you and look out the lenses, all software-driven.)

Steve, ah yes. https://www.chess.com/blog/Chessable/human-vs-machine-kasparovs-legacy

tethys@27

As to phones, I think in Android you can turn off the microphone permission, or limit it to certain apps. I vaguely remember having some issues because I turned it off for the “phone app” :/ It may not be effective, but I also refuse to use my phone for much other than voice/text. I have seen it happen a couple of times with my coworkers, where they started getting shown ads and such after talking about something otherwise unrelated.

As to computers, some manufacturers have started putting in hardware switches for the camera and mic. Not sure about all of them, but I relatively recently got a Framework laptop, and it has dedicated hardware switches for the camera and mic, so I’m no longer putting blue tape over it… :)

lochaber: ‘As to phones, I think in Android, you can turn off the microphone permission, or limit it to certain apps.’

Um. All software-driven.

You can go to the interface and set that, for sure. But it’s software.

(Perhaps I was too obscure; cf. https://en.wikipedia.org/wiki/Pegasus_%28spyware%29 )

@tethys, #3:

That’s a popular misconception, but you probably don’t.

When the US Constitution was written, privacy was very much the default. Anyone who wanted a copy of what you were saying had to be there, with paper and pencil, and had better be a quick writer. So anytime you wanted to tell someone something without being observed, all you had to do was take a wander out in the countryside somewhere, or on a deserted beach, and have them come from a different direction to meet you, to minimise the likelihood of you even being seen together. If you were being very careful, you could even carry a stick to poke any undergrowth a person might hide in.

And no-one ever thought to update the law to take into account the technological developments that falsified all the original assumptions.

Yeah, so, so, so many problems. Even if you assume, as a baseline, that LLMs do replicate “part” of how our brains work, you still have some serious problems with them. In this case we are presented with the critical issue that they do not, at all, comprehend the concept of “following discrete steps”, and it would have taken these twits less than 5 minutes on YouTube, looking for videos of such LLMs being used to “guide someone through a recipe”, to conclude this, unless all they did was read video titles… That said, another serious issue is that they do not self-train at all.

See, if you were to describe how a person “learns”, or just plain functions in the world around them, then the LLM part is the “long-term memory”. It is capable of recalling complex facts, but also of pulling things out of its… hard drive that have nothing to do with the subject, if pressed to give an answer when it has no f-ing clue what that answer should be. It’s also prone, like an LLM, to sometimes being hit with something so complex that it can’t keep real track of what the F it was asked, and spitting out bad or inappropriate information, then only catching itself as it “catches up with” both the answer and what it was asked as a whole, and whether it should answer at all, based on other criteria.

The problem here is that an LLM has no real “short-term memory”. What it has is the equivalent of someone with severe dementia who has been given flash cards telling them what conversation they are having, and a pencil to try to “write down” a few words now and then to keep track of it. What “our brains” would be doing instead is something like building a “small-scale” LLM specific to the conversation, or actions, we are currently engaged in, so that as we continue those actions, or the conversation, we have a clear timeline of what we are doing, who, if anyone, we are doing it with, what we have already done, and whether or not there are steps we have not completed. This is then, somehow, later parsed on a “critical information” basis into the “long-term memory LLM”. It’s not “collecting tokens” with no flipping clue how they connect to each other, and five minutes into the conversation having no bloody clue, beyond looking at the last few “flash cards”, what the F the conversation is, what has already happened, and who it’s talking to.

But a direct consequence of this is that it’s a bloody idiot savant: it has insanely vast amounts of “information”, often without any real understanding of context, and no real comprehension of how to do anything step by step, because it not only has never done this, but can’t even “think about” how to do this. Its answers are the literal equivalent of Statement: “May 4th, 1592” – Answer: “The birth date of blah blah blah. Oh, and there was a plague going on, and blah, blah, blah…” Done with imperfect fidelity, and with no more capacity to actually “do” anything useful with it than someone with severe disabilities who is unable to grasp the concept of “actually doing anything with this information”, beyond maybe making themselves a bowl of cereal. And, frankly, I wouldn’t trust an LLM to even manage that right, unlike the severely disabled human.

All of these idiots are confusing “data retrieval” with “thinking”, and are as amazed by their results as the village idiot, when confronted with someone who memorized a dictionary, but, if pressed, has no freaking clue what most of the words actually “mean”, even if they can spout off perfect definitions for them.