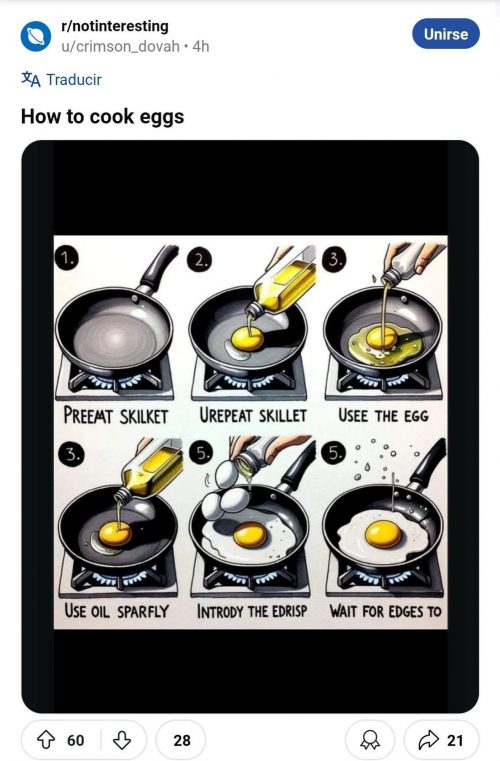

It’s morning, I’m hungry, let’s whip up some eggs with expert advice from an AI!

OK.

- Preemt skilket

- Urepeat skillet

- Usee the egg

- Use oil sparfly

- Intrody the edrisp

- Wait for edges to

Y’all got that? Ready, set, go… first person to get something edible, report back in the comments.

I’ve got a bowl of cereal.

It got the egg, the oil, and the skillet…what more do you want? It got 30% to 40% of it. Plus, the prompt was probably a problem. You need to do some prompt engineering. With a better prompt, you could get 70% to 80% accuracy. However, that’s probably the best you can expect.

You got the numbers wrong, it’s 1,2,3,5,5!

AI is gomg to taek oovEr klik uz aLL.

I’m scared.

From r/notinteresting, huh? I concur.

123355

Doesn’t anybody know how to count to six?

Am I the only one to notice esdrip is an anagram of spider? This AI is evil!

If AI can’t fry eggs, then there’s still hope that it can’t fry us with a nuke just yet.

Meanwhile: https://www.vox.com/future-perfect/371827/openai-chatgpt-artificial-intelligence-ai-risk-strawberry

(Neonates become babies, babies become toddlers, and so forth)

I pour egg white into a bowl, cover it, and heat it in the microwave until fluffy. Then I put mustard, cheese, that egg white, and a little hot sauce on some bread and enjoy.

‘Clearly chicken you weirdo’: People respond to JD Vance sharing video he claims shows migrants grilling cats

Is JD Vance, who is weird, an A.I., or an E.T.?

Reginald Selkirk @11: I strongly suspect that he started accusing Haitian immigrants of eating pets to distract people from the fact that he swallows mice whole like one of the aliens from V.

Hey! No stealing my recipe!!

Step 1. No, I think it’s supposed to be “preëmpt skill kit”, because why not.

Step 2. Maybe “you repeat skillet”, eh?

Step 3. This means that the cook is to be used by the egg, does it not? Is not a “usee” someone who is used, right?

Step 4. No, clearly, the cook is to use the oil as part of a sparring match with flies.

Step 5. No clue here.

Step 6. It might help if it were explained just for what one is ordered to wait.

I am not prejudiced against A.I. any more than I am prejudiced against Haitians.

Microraptor @ 12

Yes, but aren’t the real alien reptiles supposed to be intelligent? J D Vance would be the re***d runt of the litter.

When it comes to recipes, I’d rather not follow the AI cookbook – even if I actually could.

/ Cap’n Obvs.

Surely at this point we’re getting tired of beating the dead horse of what generative AI is bad at? It can’t look up real references, it can’t generate text well in pictures, it’s bad with hands, it’s certainly not what you want to use when writing a scientific paper. But it’s great for recreational activities like engaging in stories and it can create specialized art instantly.

It’s like taking poetry literally or depending on artistic works for architecture: you’re missing the positive point of the concept.

Step 7: “Sarah Connor?”

This isn’t artificial intelligence. It’s artificial stupidity. It achieved that by imitating us.

Yes indeed! And revenge porn, deepfake propaganda, racist tropes and caricatures…

KG @20: And hammers, axes and chainsaws can be used to kill people!

Not gonna lie, this post incited me to go buy eggs. I’ll introduce the edrisp tomorrow morning.

@18, Mickey

People don’t beat up on AI because of what it does. People beat up on it because of what its proponents/fundraisers say that it can or will or should do. We beat up on it because it is just the latest in a seemingly endless series of tech-industry hype cycles that paper over the complete lack of meaningful innovation coming from a sector of the economy that consumes and destroys so many resources.

AI is the perfect tool for the evangelists of that sector – they can promise everything to everyone, if they are just given the terawatt-hours, millions of acre-feet of water, and literally billions of dollars that (they assert) will allow them to finally build the god-in-a-box that will solve everything forever. In their search for more resources to consume, they promise:

To the soul-less overlords of our media industries: Movies without writers, actors, artists. Music without musicians. Books without authors. Sure – none of the proofs-of-concept yet made are even remotely capable of doing this – but maybe with a few hundred billion dollars to spend and entirely new ways of generating electricity to power it, maybe you’ll be able to finally crush those pesky craft and actors’ unions that take away all that lovely money from the investor classes, the true creators of all valuable art.

To the soul-less overlords of our security states: The surveillance state is unable to process the vast amount of information it gathers on its subjugated masses; the ‘god-in-a-box’ will allow faultless identification of dissidents, terrorists, activists, political rivals, students smoking in the bathroom, and all other evils. “What’s that about false positive rates? Don’t worry about it! Our carefully curated models will be sure not to identify anyone who matters as a [insert searched for characteristic].”

To the soul-less overlords of our welfare states (such as they are): The god-in-a-box will allow them to effortlessly choose who is and is not authorized to receive whatever state pittances are still being doled out. And since the logic behind any ‘decisions’ made by the AI is opaque and completely unavailable for review, there won’t be any pesky appeals to worry about! You can stop paying all those civil servants and simply give [large fraction] of the staffing budget to us, the priests of the ‘god-in-a-box’.

In every field where AI is proposed, using it would be a disaster, even if it actually could be made to work. Politicians are even now talking about ways to use a software system that can’t reliably tell you how many Rs are in “strawberry” to process welfare applications and security checks. (And before you mention that they ‘fixed’ that: they did not. It’s a hard-coded workaround. Try asking your genius AI similar questions, like which months have a U in their name.) It’s just absurdity and grift all the way to the bottom, much like the previous ‘blockchain’ and ‘metaverse’ hype cycles.

Unfortunately, it also has huge societal costs that are generally abstracted away from the end users. We have no idea how much the computing used by OpenAI, Meta and the rest is costing the world to generate this stream of bullshit, slightly funny if derivative art and, of course, massive amounts of troll and bot posts.

But here’s (npr) an article about the costs of the facility that Elon Musk is using so that he can create a god-in-a-box that’s willing to use racial slurs: 150 megawatts of electricity generated by ‘mobile’ generators to escape emissions regulations, as well as 1 million gallons of water per day. All so that you can generate images of copyrighted cartoon characters doing drugs or wearing Nazi uniforms. Doesn’t seem like it’s worth celebrating to me.

That’s hilarious

I have awful fears for what AI could do to a society that is already half deluded, but mocking the early results of any tech is shortsighted. First attempts will always look funny.

I’m actually amazed (and worried) that AI has achieved what it has so soon.

AI refers to computerised achievement of things that would otherwise require humans, because they need “intelligence”. I think that you, like so many, equate AI with ChatGPT, which is an application of LLMs.

Playing chess.

Facial recognition.

Speech to text transcription.

All those are AI in that sense, and all are applications.

Anyway, you are making a category error when you compare AI to blockchain.

John Morales:

Playing chess was AI. Then we started beating grandmasters and suddenly it wasn’t AI anymore, it was “alpha-beta optimization”. Facial recognition: definitely AI. Oh and then it became “vision”. Speech to text: yup, AI. Until it started working and became “natural language processing”.

AI is perpetually redefined to be the stuff we can imagine doing, but suck at.

@numerobis, 27

I think the problem here is that “AI” is mainly a marketing term used by unscrupulous business types to sell bullshit to rubes. The actual researchers working on each of the examples given in your post would certainly not have described their work as “AI” that “became” something else. To a public raised on science-fiction fantasies of intelligent robot butlers, it seems that the only way to solve these hard problems is to somehow reproduce a human brain inside the computer, but the experts who actually accomplish these feats generally do no such thing.

AI is a term used cynically to convince the public that software systems which can do these tasks possess something akin to human cognition. The honest ‘AI’ community calls that kind of system ‘artificial general intelligence’, and it remains the stuff of sci-fi, with nobody currently proposing even a credible path toward understanding the kinds of software problems you’d need to solve to create it.

The kinds of ‘AI’ that you and John bring up are fundamentally no more intelligent than a Jacquard Loom or thermostat. Both of those also performed tasks that, prior to their invention, required an intelligent human to perform them.

And all of this is a distraction from the fundamental activity going on in the modern “AI” craze, which is hype, grift and fraud, as I said above. However amusing and impressive the feats of these systems are, none of them offer any real promise of fundamental societal change or improvement – though cynics will sell them to rubes as if they might. Boosters and the naive will respond with some variation of “the technology is still young”, but there’s really no evidence that it is in its infancy. Model training and running costs go up exponentially, in data required, energy required and computing hardware required. Even a dedicated AI fanatic like Sam Altman is already talking about how we’re going to need entirely new kinds of power generation to enable his models to do what he thinks they can do (that and many more billions of dollars). How many hundreds of millions of dollars did it take to go from GPT4 – “I can’t count the R’s” to GPT5 – “I usually can count the R’s”? By how much did the cost per query rise?

snarkhuntr,

Not only do you write as though we have reached the limits of what that tech can do, you also ignore

https://en.wikipedia.org/wiki/Koomey%27s_law

> How many hundreds of millions of dollars did it take to go from GPT4 – “I can’t count the R’s” to GPT5 – “I usually can count the R’s”.

You argue as though the improvement were merely quantitative or a matter of efficiency; thing is, as a nascent implementation of a particular application of a particular technology, each iteration yields qualitatively better results.

—

Regarding power usage, I note that I live in Australia; we already have a surfeit of electrical power during the daytime due to the prevalence of rooftop solar systems, so much of it is wasted.

(Grid-scale storage is not yet a mature tech, either, and that’s most of the problem.)

Rob Grigjanis@21,

That generative AI is “great for recreational activities” had already been pointed out by Mickey Mortimer @18. I was simply noting that it also has malevolent applications. What is your point? Mine is that those who produced this software and made it available had, and have, a moral obligation to minimise its potential for evil, which they have manifestly failed to do.

@30, KG

What moral obligations do you think that fanatics, or capitalists, acknowledge? A large part of the AI industry is grifters selling snake-oil, and they’ll accept any clientele willing to pay for whatever dismal offerings they have to give. This is the group that offers celebrity deepfakes and custom porn with user-supplied (and not age-checked) photographs for inspiration.

The rest of the industry seems composed in equal parts of clueless techbros desperately seeking to apply the “AI” moniker to anything they were already doing (“Our company is using AI to produce the next generation of drywall products”) and AI true believers who genuinely think that with just another couple hundred billion dollars and the entire energy output of the sun, they’ll finally create a version of ChatGPT that achieves sentience and elevates them to the next realm of existence.

Who in this group is going to avoid doing evil? Grifters know that evil sells better than anything else. Techbros are just flailing about, hoping they can jump on the next buzzword before it becomes stale and uncool. The true believers think that preventing the god-in-a-box from doing sexy stuff might delay the arrival of their robot waifus – so who’s going to object to a little deepfake porn?

It’s copying Stanley Unwin.

Steps 1-6?

It’s clearly 1, 2, 3, 3, 5, 5.

No wonder you all are confused, you can’t follow simple directions.

@21, KG “Yes indeed! And revenge porn, deepfake propaganda, racist tropes and caricatures…”

Yes, and you know how many more bad things the printing press enabled? Or the internet? Or mass education via public schooling? That’s one cost of progress and all we can do is adjust our expectations. For example, at some point in our future an apparent photo or video is going to be no more factual than a drawing, and that point is approaching really quickly. So unless you advocate for draconian laws regarding computing power, we just have to accept any image can be made of anybody and it has no basis in reality.

@24, snarkhuntr “To the soul-less overlords of our media industries: Movies without writers, actors, artists. Music without musicians. Books without authors.”

Technology has killed and will kill many more job types. Everyone’s fine with robots taking factory jobs and such, but when it comes to acting and writing, suddenly it’s something sacredly human. It’s especially ironic because only the visual endpoint humans (actors, singers) get paid well (arguably too well), while everyone else who makes the process possible, like writers, choreographers, etc., gets screwed financially without AI even being an option. But if the public likes an AI-generated story or picture more than one made by a human, I fail to see a naturalistic reason to retain humans in that workforce anymore. Just all the more reason to institute universal basic income, because automation is coming for everyone eventually.

@mickey,

I think you’ve missed my point. It’s not “won’t somebody think of the careers of the buggy-whip makers”, because I genuinely do not believe that AI is ever going to be capable of writing a story that people would want to read. AI generates, at best, tolerable slop. Its advocates claim that if we just invest society-changing amounts of resources into their pipe dream, eventually it will be able to replace humans.

It’s a kind of promise that appeals to the soulless overlords, and so they’ll happily shovel money into it in the hopes that they might one day be able to sit atop an even steeper pyramid of capital. In the meantime, it sucks away resources that might actually be used to solve real-world problems. So governments that are basically unwilling to spend any money on social improvement, for fear that most of it might actually go to *shudder* un-rich people – these same governments will happily piss millions of dollars into speculative AI ‘solutions’.

I’m not precious about who creates art – if AI was capable of making good art I’d be happy to consume it, assuming the financial and environmental costs were reasonable. Unfortunately, that’s not really what the AI technocrats intend to do with it.

David Gerrard, in his excellent blog about AI, talks about the state of Sora – the supposedly revolutionary AI text-to-video app.

Of course, this will be handwaved by boosters as “It’s just in its infancy, we’re certain it’ll get much much better and cheaper and faster”, and they will cite supposed ‘laws’ of computer science that prove it to be the case. But again, costs in this sphere are going up exponentially – and while we might be able to afford to dedicate the electricity output of a medium sized country and all the water in the US south to this, we don’t really have a source for exponentially more training data for these models. Futurists like to handwave away physical limits all the time, generally when they’re fundraising.

I hate to double post, but this:

UBI: absolutely. Automation replacing everyone? Rank nonsense. I work in industrial maintenance, and I just got back from a consulting/contracting job at a biodiesel refinery. I think automation has, in industry, replaced basically everyone it’s going to replace within our lifetimes, at least in those fields – and probably in many others that I’m not familiar with. Even a task as simple as walking up a catwalk to change clearance lightbulbs is essentially impossible for a robot outside of incredibly controlled lab conditions; add in the requirement that the thing be intrinsically safe and explosion-proof, and it’s just not going to happen.

No, that fucking well isn’t “all we can do”. We in countries where a vote actually counts and there is a degree of freedom to organise and campaign, can work to have the “tech giants” broken up, and the fragments thereof forced to make their products as safe as possible on pain of their owners and top management going to jail.

Jesus wept, you really have no idea, do you? Having a fake video of oneself performing degrading sexual acts made and circulated without consent is experienced as a violation. Well, maybe you would be fine with it, but most people – and in particular most women – would not. So, if it’s “draconian” to pass laws against such violations, yes, I’m in favour of draconian laws regarding computing power.

Draconian laws?

cf. https://en.wikipedia.org/wiki/Darwin_among_the_Machines

(Possibly the inspiration for Dune’s Butlerian Jihad)

Here’s (link sent through 12ft.io) another example of the kinds of marketing that are really driving the AI hype cycle:

emphasis mine

Now, ol’ Larry is a salesman – so you have to take his claims here with a grain or two of salt. He’s describing what they want to do, not what they’re presently able to do or what they have a credible belief that they’re going to do. But these are the promises they’re making to the investor classes: a digital panopticon where all the serfs can be safely controlled without the need to employ quite so many expensive policemen.

And the policemen too will be surveilled by the panopticon; if they break their masters’ rules, the system will refer them for punishment. This is the stuff that really drives investment into these technologies – the prospect of firing workers/breaking unions, summarily clearing out the welfare rolls, purging undesirables from the public square, and subjecting anyone who can’t afford privacy to an unending regime of surveillance and control. No wonder they’re pulling in so much funding.

Thing is, “the AI hype cycle” ≠ “AI”.

Different things.

snarkhuntr@29:

Meanwhile, which technologies do?

Renewable energy and energy storage tech, for starters.

These promise more local and distributed energy storage and generation, and will also undermine the economic, and thus the political, power base of some of the worst actors, from elements of the Republican party to the thuggish Saudi regime and Tory parties in Canada and other countries.

More local resiliency, coupled with taking at least some of the wind from the sails of the political right wing, can only redound to the benefit of the little guy.

And this is before we get into the whole climate change mitigation angle.

Fission. We need fission power; plants should be in the planning/permitting stages all over the world. Other power sources are also great, and local microgrids and storage/peak-shaving plants would be good too. But if we want to keep doing, into the future, the things we now power with fossil fuels, we’re going to need wide-scale deployment of fission plants for baseload power demands.

Right. Particularly where there’s a war going on!

I mean, yeah – especially then. Nuclear plants have actually been captured in this war (I assume you’re talking Russia v Ukraine here). But it’s hard to see how capturing a site that’s currently undergoing seismic/geotechnical evaluation for a future nuclear installation is going to present a larger risk than – say – capturing or blowing up an oil refinery. You’ve certainly seen those videos of the results of drone strikes against oil storage facilities in Russia; do you think that the contents of those huge black smoke plumes are simply vanishing with no external consequences for people downwind?

Also there are places not currently fighting those wars, and all of those places are consuming electricity at an ever-increasing rate. That electricity is going to be supplied – I’d rather the smallest portion of that new demand be serviced by fossil fuels.

<sigh>

https://skepticalscience.com/print.php?r=374

And yet the myth that we need fission for baseload simply will not die …

@46, Bekensten Bound

There are some pretty questionable assumptions in that page you linked.

As an example:

or

No energy source is without its tradeoffs. My own province is currently in the process of flooding a vast and beautiful valley for industrial hydropower, cutting off wildlife routes and doing who knows what other damage to the ecosystem. It also used enormous quantities of concrete to do this, concrete that was absolutely not produced via carbon-free methods. Most of the sites that would make good hydropower sites are already being used for that purpose, at least in North America – and plenty of sites that used to be good sources will be drying up as the glaciers melt and the climate changes.

The suggestion that biomass burning will be a major contributor is also rather suspect – ‘biomass from plantation forests’, well – I live in an area where that’s done (British Columbia, Canada). The plantations can’t keep up with demand, and so we’re cutting down large areas of pristine first- and second-growth forest in order to feed these fuel pellet plants that allow UK energy producers to claim to be reducing their carbon emissions. The forests are then replanted with monocultures of fast-growing pine species and heavily oversprayed with herbicides to kill off any undesirable tree species that might try to come in. Those places aren’t forests – they’re farms that just happen to be up a mountain, and they’re bleak as fuck. Not to mention the downwind consequences for people living near biomass burners – PM2.5 particles and other combustion products.

I can go on – all of those papers linked in your article seem to assume that we will, as a society, be able to meaningfully reduce our electricity consumption. I don’t think this is the case at all, there are massive industrial heating and process loads using fossil fuels that will need staggering quantities of electricity to replace natural gas or coal as an energy source. The electrification of individual transportation is fantastic, but is going to increase the power requirements for domestic electricity significantly. EV charging is at least somewhat flexible as to time of consumption, I’m currently working with a friend to set up his car to peak-shave his electric power demand, but fundamentally that thing uses a decent multiple of his other domestic power draw – even if he can choose, somewhat, when it does so.

I don’t know that we need nuclear for baseload power, but I think it has substantially fewer negative externalities than a lot of other kinds of large scale power generation.

The efficiency measures needed include, especially, a big shift toward public transit, as well as heat pump use. Public transit use in turn means making the urban downtowns affordable for ordinary people to live in again.

Both the fossil fuel and the real estate sectors hate that, for separate reasons.

That may well be true – but asserting as fact that baseload demand can be met with renewables, when the very article you cite explains repeatedly that this will only happen if we reduce electrical demand, seems flawed.

Working in heavy industry, the amount of energy currently being provided by fossil fuels is staggering. I’m not saying that we should continue to build a world based around exploding dinosaurs, but the fact is – we use a lot of energy, and we need replacement sources that don’t damage the world around us the way that our current energy mix does. Nuclear offers that in a way that few other technologies do – solar is great, I have some myself. Batteries suck, but they’re getting better. Biomass is still in a relatively primitive state, basically just repurposing fossil solid-fuel combustion technology but now using agricultural or sylvan feedstock.

Nuclear is a technology that has proven to work, and proven to be reliable. It provides vast amounts of energy with minimal use of non-renewable resources. I don’t know why people are so afraid of it.

Let’s see what the experts say about nuclear power, then.

https://thebulletin.org/2017/10/a-dozen-reasons-for-the-economic-failure-of-nuclear-power/

Oh.

I think you made a misspelling there… where you should have said “an expert”, you mistakenly wrote “the experts.” Probably autocorrect.

I don’t think this is a good format for this discussion, so we’ll have to agree that we disagree on this one.

On the other hand, we can both probably hate this:

Three Mile Island nuclear plant will reopen to power Microsoft data centers

I’m talking about the vast number of nuclear power plants that would be required across the world in the next few decades if nuclear is to make a major contribution to replacing fossil fuels. You think it would have been a good idea to build such plants in Sudan? Yemen? Myanmar? Lebanon? All the other places there may be wars over those decades? The question is not whether fossil fuels need to be replaced, it’s what they should be replaced with.

@49

Batteries in the usual sense are only one of many ways of storing energy. But unless you think cars and trucks are going to be tootling around with mini-nuclear power stations under the bonnet, a lot of batteries in that narrow sense are going to be needed whether they are charged using electricity from renewable or nuclear sources – transport as a whole currently produces something close to 1/3 of CO2 emissions, and only trains and large ships could use nuclear-generated electricity without having batteries or fuel cells. (And those batteries will in effect form part of the energy storage system.)

That must be because you’ve got your fingers in your ears and are repeating “La-la-la, can’t hear you!”. It’s because they have seen just how unreliable the nuclear industry is with regard to safety (as well as in terms of completing projects on time and within budget), and because when accidents occur (and they will) the results are potentially catastrophic. Because it inevitably involves the production and transport of extremely hazardous materials of great interest to terrorists. Because the problem of long-term storage of nuclear wastes remains unsolved. Because it cannot in practice be divorced from the proliferation of nuclear weapons.