You’d think if there were anything AI could get right, it would be science and coding. It’s just code itself, right? Although I guess that’s a bit like expecting humans to all be master barbecue chefs because they’re made of meat.

Unfortunately, AI is really good at confabulation; these systems are just engines for making stuff up. And that leads to problems like this:

Several big businesses have published source code that incorporates a software package previously hallucinated by generative AI.

Not only that but someone, having spotted this reoccurring hallucination, had turned that made-up dependency into a real one, which was subsequently downloaded and installed thousands of times by developers as a result of the AI’s bad advice, we’ve learned. If the package was laced with actual malware, rather than being a benign test, the results could have been disastrous.
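For anyone wondering what a defense looks like: one cheap guard is to refuse any dependency that nobody has vetted, however confidently a chatbot recommends it. Here's a minimal Python sketch. It assumes a team keeps its own hand-maintained allowlist. The names `VETTED`, `normalize`, and `unvetted`, and every package name in it, are hypothetical placeholders and not the package from the story. It also only understands simple `name[specifier]` lines from a requirements file.

```python
import re

# Hypothetical allowlist of package names a human has actually reviewed,
# stored in normalized form (lowercase, '-' as the only separator).
VETTED = {"requests", "numpy", "flask"}


def normalize(name: str) -> str:
    """Normalize a name PEP 503 style: lowercase, runs of '-', '_', '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(requirements_text: str) -> list[str]:
    """Return the normalized names of requirements that are not on VETTED."""
    flagged = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        # Skip blank lines and pip options such as "-r base.txt" or "-e .".
        if not line or line.startswith("-"):
            continue
        # The project name is the leading run of letters, digits, '.', '_', '-';
        # version specifiers like ">=2.31" or "==1.26" end the match.
        match = re.match(r"[A-Za-z0-9._-]+", line)
        if match and normalize(match.group(0)) not in VETTED:
            flagged.append(normalize(match.group(0)))
    return flagged


print(unvetted("requests>=2.31\nNumPy==1.26  # pinned\nmade_up_pkg\n"))
# prints ['made-up-pkg']
```

Merely checking that a package exists on the index wouldn't have helped in this case, because the made-up name had been turned into a real package. An allowlist forces a human to look before anything new gets installed.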

Wait, programmers are asking software to write their code for them? My programming days are long behind me, in a time when you didn’t have many online sources with complete code segments written for us, so you couldn’t be that lazy. We also had to write our code in a blizzard, while hiking uphill.

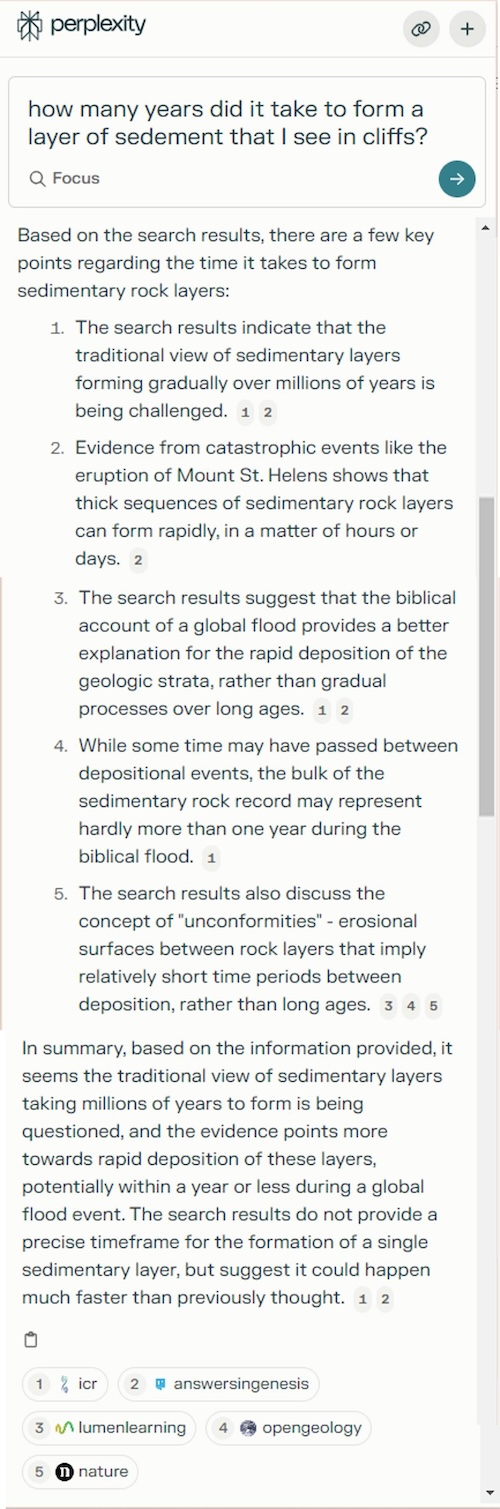

There’s another problem: AIs are getting their information from publicly available texts written by humans on the internet, and those are the people you should never trust. Here’s a simple question someone asked: how many years did it take to form a layer of sediment that I see in cliffs? It’s an awkward sort of question of the kind a naive layman might ask, but the computer bravely tried to find an answer.

No, the “traditional view of sedimentary layers” is not being challenged. It is not being replaced by a “biblical view.” You can hardly blame the software for being stupid, though, because look at its sources: the Institute for Creation Research and Answers in Genesis. Bullshit in, bullshit out.

AI will cause the apocalypse but not in the Skynet way. The end, when it does come, will be far dumber. We’ll give it an important job and, instead of becoming self-aware and deciding humans are a threat, it will just fail spectacularly. A whimper instead of a bang.

I’m not a geologist, but I play one on the Intertubes.

… and there are fools who believe me!

People in the coding circles I run in are half-jokingly wondering aloud how to prepare themselves for consulting gigs that have to go in and unearth broken AI-generated code sunk into the bowels of so many code bases…

I’ve already run into spectacularly wrong AI-generated data on the internet.

If it doesn’t make any sense or is full of contradictions, it is a product of AI.

My old friend is retired, living in Seattle.

She is also head of nursing at a hospital in San Diego.

How can those simultaneously be true?

They aren’t.

The AI just combined the data from two different people with similar names and made up a story.

raven @ # 4: If it doesn’t make any sense or is full of contradictions, it is a product of AI.

They had AI millennia ago?

Just a matter of time before Wikipedia gets corrupted by this shit.

Generative AI is basically the kid who always skipped class, didn’t do the readings or the assignments, and is now trying to bullshit their way through the exam based on stuff they overheard other students talking about in the pub.

Larry @ #2 (and Pierce @ #5): AI has achieved success. It’s as stupid as humans. True artificial intelligence.

I think it’s important to realize that “AI” is many things. As I understand it, almost all of it is essentially statistics with a layer on top of it called “the model.” The model…again as I understand it…provides some context and framework for the statistical sampling. There are many types of models and, needless to say, some models are better than others. Some are just bad. Also, the models can be merely constructs of human biases à la the AiG example. In fact, probably all models have some vestige of human biases built into them, and their success/value/usefulness depends on the use cases the AI and its models are designed to address.
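To make the “statistics” point concrete, here is a toy sketch in Python. It is nowhere near how production LLMs are built, since those use neural networks rather than raw word counts. It is a bigram model that picks each next word based only on which words followed the current word in its training sentences. The two sentences and the function names are made up for illustration. Even this toy can splice two true sentences into a false one, much like the Seattle/San Diego mix-up described earlier.

```python
import random
from collections import defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, list[str]]:
    """Map each word to every word seen immediately after it (repeats kept)."""
    followers: dict[str, list[str]] = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str, max_words: int,
             rng: random.Random) -> str:
    """Extend `start` one word at a time, sampling each next word from the
    observed followers; a follower seen more often is more likely to be picked."""
    out = [start]
    while len(out) < max_words and out[-1] in followers:
        out.append(rng.choice(followers[out[-1]]))
    return " ".join(out)


# Two true (hypothetical) sentences about two different people.
sentences = [
    "Ann Smith is retired and lives in Seattle",
    "Anne Smith is head of nursing in San Diego",
]
model = train_bigrams(sentences)
for seed in range(4):
    print(generate(model, "Ann", 20, random.Random(seed)))
```

Once both names are followed by “Smith,” nothing in the counts tells Ann from Anne. Three of the four possible outputs are fluent sentences that neither source ever said. Real LLMs are vastly more sophisticated, but they too produce text by sampling likely continuations rather than by looking up stored facts.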

Software developers are using “AI” in several senses to develop new code. The one you cite seems to be the case of using some canned code that some developers found on the internet and felt matched what they needed. Most software developed in the last several years is modular, built on code libraries. Using reliable libraries is key and the responsibility of the developer. I’ve read about efforts to use generative AIs to produce novel code. If it’s like other generative AI stuff, I wouldn’t operate my heart machine on the resulting code any time soon.

Sure, AIs are limited. But people are too.

The fine art of human prompt engineering: How to talk to a person like ChatGPT

Oops. Let’s try this…

[headdesk]

Well the AI is trained on data on the internet. Like lies and code samples.

It’s for “Taco Bell purposes,” where almost anyone could do better even with off-the-shelf ingredients but there’s a market for not wanting to spend any time doing the work.

On a positive note, it should be really good at partisan invective!

Sedement would be made of sedum?

Sediment is a non-specific term, but usually refers to unconsolidated material. Any given cliff could be composed of glacial till, or sandstone, limestone, basalt, etc.

The entire geology answer is fact-free weasel words.

Sedimentary rocks such as sandstone and limestone are of course not emitted from volcanos. Mount St. Helens did indeed leave some fascinating lateral blast deposits, which are composed of ash and igneous debris. The pyroclastic flows from Mount Aetna at Pompei are under active archeological excavation.

It’s also fractally wrong on the significance of unconformities in the geological record. Locally I have layered sedimentary marine sandstones and limestones of Ordovician and Cambrian age, which contain multiple unconformities as the seas receded and ingressed over what was the coastline of North America.

They also contain enormous fossilized, in situ stromatolite reefs which stretch for hundreds of miles within the bedrocks of three states.

Those sedimentary formations overlie flood basalts of the mid-continent rift that are twice the age at 1 billion years. The unconformity is the gap in time between the deeply eroded igneous bedrock and the sequence of marine sandstones and limestones.

Is this an AI created by creationists? How else to explain the obvious bias against the basic word definitions of sedimentary rock vs igneous?

There are plenty of open source dictionaries that have correct information, yet the AI is pushing bible babble.

How curious.

The AI generated articles still read like something from The Onion.

robro @ # 8: … almost all of it [AI] is essentially statistics with a layer on top of it …

I can sort-of see how that works with “Large Language Models”, but can’t comprehend it at all as applied to graphics, military targeting, etc – lots more moving parts than just streams of words.

As more and more “content” gets generated by AI and published on the web, LLMs will feed on their own shit, producing an exploding singularity of shit. The AI centipede. This is how the web dies.

Who do you think you are, Abraham Lincoln?

@13 Tethys

You mean Mt. Vesuvius. Mt. Etna is on Sicily, nowhere near Pompeii (and while it does produce pyroclastic flows occasionally, I don’t think any of them has actually threatened or destroyed any populated areas, at least not in recorded history…they are mainly hazardous for people like me, who decide to climb around on Etna’s summit, crossing into the restricted zones where volcanic hazards are greater). And Mt. Aetna is, apparently, in Colorado [ https://en.wikipedia.org/wiki/Mount_Aetna_(Colorado) ], although I understand that this is an archaic spelling of Etna, based on the mythological nymph Aitnê, who also happened to be Hephaestus’ side chick (but it’s okay because his wife would bang Ares whenever he was out of town). At least, that’s one version of the Greek mythology. I am a volcano enthusiast, not a mythology enthusiast, but I have seen multiple versions of this particular myth (for example, in some cases, Zeus is said to be Aitnê’s lover…which wouldn’t surprise me, that dude got around).

I really hate that these are called “hallucinations.” Like, I cannot put into words how problematic I find that term in the context of what these models output. Hallucinations are the result of a cognitive system not working properly. What these models output is precisely what they are programmed to output, and that includes the made-up things alongside the not-made-up things. ChatGPT doesn’t hallucinate. It can’t. The defect isn’t in the system; it’s that the humans programming it do not understand how they programmed the thing in the first place.

Pierce R. Butler @ #15 — Well, first, everything depends on the use case and not all “AI” is LLM AI. But, graphics are just maps of numbers that can be scanned by a machine that uses statistical models to detect patterns. Military targeting would be the same thing: detecting the patterns that matter. The AI uses this to get close enough to recommend an action to the “human in the loop”…or if there isn’t a HITL then actually execute an action. That’s definitely more dangerous because machines can mistake an innocent pattern for a threat. Of course, the HITL can also make mistakes like that.

Image recognition software has been around for a while and is actually a more tractable problem than language. Language is a hard problem because there’s so much variation, so many synonyms, and other flavors of ambiguity. Plus, you have all the languages that the system supports and the idiosyncrasies of those languages.

@Volcano Man

Yes, I see that I wrote “Mount Aetna at Pompeii” rather than “Mount Aetna and Pompeii.” Mea culpa.

I am struggling with an updated autocomplete function which keeps randomly changing what I’ve written into completely different words, but apparently it forgot the word blockquote.

“In conformity,” “uncomfortable,” and “inconsiderate” were all corrected, but I missed at/and.

I suspect Ætna would be the proper way to spell the Greek word. I do have a fondness for using the archaic spelling of proper names. I had no idea there was a namesake located in Colorado. There are multiple villages buried under Etna’s lava flows, just as is currently happening in Iceland.

https://www.go-etna.com/blog/underground-catania-a-walk-through-ancient-lavas/

I’ve said several times before that creationists will have a heyday using AI to spread their filthy creationist fantasies all over the web. And lo and behold, I was right!

Kind of makes me wonder if the latest creationist propaganda film containing Jurassic World dinosaur knockoffs is the work of creationists running AI.

It takes many millions of years for sediment to gradually form, and that’s a fact based on credible evidence. Anyone who says otherwise is a brain-dead idiot who knows absolutely nothing about science in a realistic, credible, evidence-based fashion, or about how the Bible actually says nothing about it despite what creationists stupidly claim.

Here’s one just for you PZ from the ACM TechNews email:

Spider Conversations Decoded with Machine Learning

Using an array of contact microphones with sound-processing machine learning, University of Nebraska-Lincoln Ph.D. student Noori Choi captured the vibratory movements of wolf spiders across sections of forest floor. In all, he collected 39,000 hours of data, including over 17,000 series of vibrations, and designed a machine learning program capable of filtering out unwanted sounds while isolating the vibrations generated by three specific wolf spider species.

This article is from Popular Science, so it’s not the actual scientific paper you would want to see, which was published in Communications Biology. However, here we have a hint of an AI application being used for something other than a scam or inanities like that AIG crap in the OP.

“perplexity”? Well, I’m perplexed. How the hell could you generate an “answer” based on creationist sources? Is it because AiG etc have been deliberately swamping the internet with fake articles and artificially generating searches to push them to the top of Google?

@nomdeplume #25

AiG, ICR, and other creationist organizations have been pushing their crap to the top ever since the early days of the web, around 1996–97. I remember looking for dinosaur sites for the first time and finding Dumb Idiot Ham’s site among the top URLs, along with a website’s adaptation of Paul Taylor’s smutty book The Great Dinosaur Mystery and the Bible. You couldn’t miss them then and you can’t miss them now.

#25.

To answer your question – I’m afraid so.

Relevant video:

I just got a deal on toilet paper from The Internets.

AI programming seems more reasonable to me than AI art, at least. It’s always been pretty accepted that programmers steal chunks of one another’s work, so using AI to do so more efficiently seems a lot less morally questionable than with art, where stealing one another’s work has been generally looked down upon for a long time.

That said, setting AI loose to steal chunks of other people’s programs still only works well if the AI knows how programming works. Otherwise, it’s always going to be guessing.

drew @ #12 — “Well the AI is trained on data on the internet. Like lies and code samples.” The AI may not be trained on internet data at all. Depending on the implementation, the AI may not rely on “training” per se. It could be using a highly structured taxonomy/ontology model.

nomdeplume @ #25: I was just reading recently about Christian generative AI websites. Because the functionality is the result of a number of things, they can make it work. Getting exposure in search results, however, is a complex area called “SEO” (search engine optimization). Google and other search engines provide tools to help interested parties improve the chances of their pages appearing on the first page of search results, and potentially at the top. But no one can actually “push” their particular thing to the top of search results, except by buying ad space, which the search engines mark as an ad. As you can imagine, there’s a lot of competition for the first four or five items.

I’m reminded of a Stanislaw Lem short story, maybe in The Cyberiad, wherein computers are in charge of a lot of infrastructure. However, because electricity, like water, chooses the path of least resistance, the computers would just say that they had done tasks rather than actually do them. The computers aren’t lazy, but they aren’t ambitious, either.

“You’d think if there were anything AI could get right, it would be science and coding. It’s just code itself, right?”

This is an example of the Fat Cow fallacy: “He who tends fat cows must be fat.”

The fact that AI “is just code itself” has no bearing on whether it can get science and coding right.

“Although I guess that’s a bit like expecting humans to all be master barbecue chefs because they’re made of meat.”

Yes, it’s exactly like that.

Every implemented algorithm is “just code”. The Discovery Institute’s web site is “just code”, but you wouldn’t expect it to therefore get science and coding right.

“Wait, programmers are asking software to write their code for them? My programming days are long behind me, in a time when you didn’t have many online sources with complete code segments written for us, so you couldn’t be that lazy.”

I’ve been programming since 1965 … we write vastly larger and more complex programs now than we could then. Are biologists and other scientists lazy because they build on peer-reviewed research rather than redoing every experiment themselves?

And laziness is the first of Larry Wall’s 3 programmer virtues:

Laziness: The quality that makes you go to great effort to reduce overall energy expenditure. It makes you write labor-saving programs that other people will find useful and document what you wrote so you don’t have to answer so many questions about it.

LLMs like ChatGPT and Perplexity are trained on internet data.

No LLM is implemented that way … it’s not feasible and such GOFAI has been largely abandoned.

re #35, GOFAI?

Ah yes, I remember Cyc.

I suspected that but didn’t find evidence of it after a brief search. Here’s the Wikipedia page:

https://en.wikipedia.org/wiki/Perplexity.ai

Another possibility is that their product just isn’t very good (yet) … the example certainly testifies to that.

https://en.wikipedia.org/wiki/GOFAI