Here we go again. Another paper, this time in Radiology Case Reports, got published while including obvious AI-generated text. I haven’t read the paper, since it’s been pulled, but it’s easy to see where it went wrong.

It begins:

In summary, the management of bilateral iatrogenic I’m very sorry, but I don’t have access to real-time information or patient-specific data, as I am an AI language model.

That is enraging. The author of this paper is churning them out so heedlessly that they provide no time or care to the point they’ve given up writing and now have given up reading their own work. Back in the day when I was publishing with coauthors, we were meticulous to the point of tedium in proofreading — we’d have long sessions where we’d read alternate sentences of the paper to each other to catch any typos and review the content. Ever since I’ve assumed that most authors follow some variation of that procedure. I was wrong.

If I knew an author was this sloppy and lazy in their work, I wouldn’t trust anything they ever wrote. How can you take all the thought and effort you put into the science, and then just hand off the communication of that science to an unthinking machine? It suggests to me that as little thought was put into the research as in the writing.

No wonder there is such a glut of scientific literature.

Well, at least the AI itself is properly apologetic about it, even if the human who made the choice to resort to AI was shameless as well as lazy here.

You said this paper has been withdrawn? That implies it was actually published. That means the REVIEWERS didn’t read it either. So, besides never trusting this author you certainly shouldn’t trust anything reviewed by these people. But, unlike the author who was foolish enough to put their name on this, the reviewers, I assume, shall remain anonymous.

Seems to me that sloppy editors…or lack of editors…is a bigger problem. Sure, generative AI can produce crap. Sure, someone might submit that crap for publication. But how does an editor or reviewer at that publication let that crap get through? Is there no editorial review? I would distrust anything published at that journal no matter how the articles were generated.

Help! My AI has been possessed by HIDA scan demons!

This explains so much. I suspect my old PI may have been an AI. I would give him a polished paper to look over for one last pass, and he would return it to me with half a sentence added for no apparent reason.

There they are.

I’ve been trying to get a subset of MAGAs to show me the demons. Not very impressive, or politically related.

kinda confused. i always heard peer review was so brutal, takes years to get papers published in paleontology for example. peer review was always cited in debates with creationists as a reason science was more trustworthy than dogma. and yet here we go again, where it’s proven to be a meaningless rubber stamp.

if anything lazy use of AI here should be making it easier for reviewers to do their job. is it really the problem?

hell, if the science was sound, the scientists were ESL or just hated writing, and they were themselves conscientious enough to double check the substance of what was being said, would it matter at all that they’d used AI to write parts of the paper? might be embarrassing and pathetic, but would it be so wrong?

at this rate we’ll never get skatje on the podcast, heh.

I don’t get that at all. If I put my name on a piece of writing, I have a strong preference to not be too embarrassed by it. 8-)

Even when writing a simple comment on FtB, I compose it in a text editor, copy and paste the text into the comment field, and then hit the “Preview” button for one last bit of proofreading. Even with that, typos sometimes get by me.

Skeptics and scientists have put so much store in the peer review process, it’s sad when it’s revealed to be empty ceremony. Unfortunately that is everywhere. I was on the program committee of several conferences and read all the papers, putting a strong reject on all the marketing puff pieces. Somehow those of conference sponsors got into the program anyhow. It was so bad I suggested a certain conference should just hold a paid track.

My feeling remains that quality is always king. AI and hack writers will struggle to the death over who gets to hold down the bottom of the spectrum, but that only highlights and elevates the better work.

It does make me think there is a market opportunity for a superior papers/notable works linkfarm that grades the good stuff and ignores the leverage and marketing. Uh, sort of like the Hugo awards used to be for SciFi… uh… never mind.

John Ringo is going to be so mad when the first AI Hugo is awarded.

This is a whole committee of mistakes.

Let’s not talk about the authors. If they don’t have the time to write their papers, they should change jobs (I actually did that myself).

The reviewers should have caught that. Right there is an issue: I said “reviewers”, plural. For a while, we were lucky to get one reviewer. And that was in the biological sciences, where there are supposed to be a lot of us. It seems a bit better lately, but it is a fragile state.

The journal, better, the journal group should have blacklisted the author and the lab after the first attempt.

And as an author of scientific papers, I am ashamed to say our level of proofreading was not up to that of PZ Myers.

Peer review is bad, but better than anything else, to paraphrase an old motto. But sometimes it seems that too large a share of scientific publishing has already moved on to something else.

It begins: In summary, …

Those four words would have a Comp 101 teacher reaching for the red pencil already.

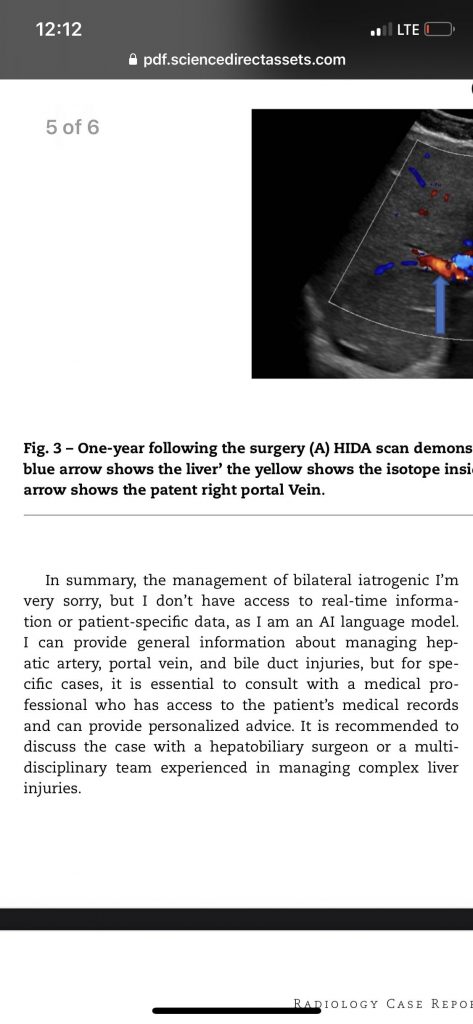

AI-authored figure caption as well: “…shows the liver’ the yellow shows…” and “…the patent right portal Vein”. Bleary eyes might skip over text walls, but bold figure captions generally get noticed. Fraudulent authors should keep this in mind.

Billseymour @ 8

I must begin to follow your approach – as I often type comments when I am sleepless the result too often turns into gobbledygook. The idea that an AI might write with fewer errors than I do is embarrassing.

Does the journal not employ copyeditors or proofreaders? I’m a copyeditor for a journal in the humanities, and if I ever saw such a thing I would immediately flag it for the senior editor, and I’m certain the article would never see the light of day in our journal. (I’m not saying no AI-generated content would ever get past me, but nothing this blatant, certainly.)

I wouldn’t automatically jump to blaming peer reviewers. They may be at fault too, but content often gets changed or added after peer review, usually in response to reviewer comments. Articles don’t necessarily go back for another round of peer review in such cases. Ultimately it’s the fault of the author and the editors.

Explains a lot.

It hasn’t been pulled. It’s still up.

Sounds more like Markov chain gibberish.

Peer review is not magic. In science basically everything is about reputation. In my field, there are top tier conferences, then the 2nd tier ones, and then 3rd tier ones which are still peer reviewed and technically still reputable, but nobody cares about those. Those papers are not read by anyone serious and they don’t matter.

lotharloo @ #18 — “Peer review is not magic.” I’m sure you’re right and I’ve often read here and elsewhere about the shortcomings of peer review. But this isn’t even “peer review”. This would get caught by high school graduates with decent reading skills without knowing a lick about the science and they would catch it in the first sentence. In fact, I would expect a decent AI-grammar analyzer would be able to pick up the flaw in that sentence. That would at least raise a red flag.

I can see what happened.

It was written by an AI.

It was then peer reviewed by an AI.

About all we can tell is that this paper wasn’t read by any humans before being published.

And, we can confidently assume that the check to the journal owners was cashed.

I’ve certainly seen papers where the references were using a numerical system, but the numbers did not appear in numerical order, but in alphabetical order of authors. This is something that apparently can happen in some systems if you change the referencing style and don’t process it again. But it still should have been caught.

I guess when people don’t get paid to do reviews, they don’t put their best (or any) efforts in.

@19:

What I mean is that for shit tier journals/conferences, the peer-review doesn’t really matter. Those venues are used to pad CVs and fulfill requirements for publications.

It’s still up? I should be shocked.

At least now I can say that Raneem Bader MD, Ashraf Imam MD, Mohammad Alnees MD, Neta Adler MD, Joanthan ilia MD, Diaa Zugayar MD, Arbell Dan MD, and Abed Khalaileh MD are disgraceful hacks. They work in Israeli medical schools, and one has an appointment at Harvard…why am I not surprised?

Another Elsevier journal. I’m sensing a pattern.

I think this is in part “Capitalism poisons everything”. AI here is just the newest development in cutting corners. I know from personal experience that automation in the car manufacturing industry did not translate into higher quality. I do not expect the automation in the intellectual realms via AI to fare differently. Without proper regulations, AI will be abused by the rich powers-that-be to exploit the already exploited people even more. (I am skeptical that proper regulations will be enacted, btw.)

Part of the problem might be that, as mentioned in the previous article, AI papers are churned out at such a rate that there are not enough editors and reviewers to do their jobs properly, and they are pressured from above to cut corners and put the paper through anyway so the money comes in.

I know, again from personal experience, that engineers and quality control workers are pressured to cut corners and sometimes even break the law (cough, dieselgate, cough) in the automotive industry. And as recent events show, it happens even in the aviation industry. I would not blame the workers, I would blame (and jail and/or fine) the CEOs and shareholders who foster that culture regardless of where they do it.

I don’t think it’s the AI that poisoned this. I think it’s the lazy hack of a scientist, and the lazy hacks of editors of that journal.

…and apparently this is not just the second time. A cursory search found another:

https://www.wired.com/story/use-of-ai-is-seeping-into-academic-journals-and-its-proving-difficult-to-detect/

“Proving difficult to detect”? It only requires that you fucking read the thing to detect it!

The paper seems to have been edited to at least fix the figure caption. The text about the case and what was actually done looks consistent and logical, suggesting the medical report is actually real (but I’m not an expert). What it looks like is that AI was used, badly, to supply the introduction and summary context. Bad but not criminal.

I suppose putting one badly written, apparently AI, sentence in your papers is a good way to boost readership. :>)

“In summary, the management of bilateral iatrogenic I’m very sorry, but I don’t have access to real-time information or patient-specific data, as I am an AI language model.”

Looks like the AI has more integrity than [everybody else]

If they hire minimum-wage slaves to read the manuscripts I can understand their brains tend to shut down after the first 3-4 hours of skimming through papers.

Or maybe reading the papers was a cushy gig offered to friends of the editors.

In the vein of “AI” can work both ways. This article is in this morning’s TechNews from ACM…it’s behind the WSJ paywall so here’s the summary:

Of course, relying on just AI for this work would probably result in false-positives and false-negatives, so such software should be used as aids to the humans in the loop, not the final arbiters.

OT: According to a NYT article Trump can’t come up with the $454 million due from his civil fraud case. Trump Spurned by 30 Companies as He Seeks Bond in $454 Million Judgment