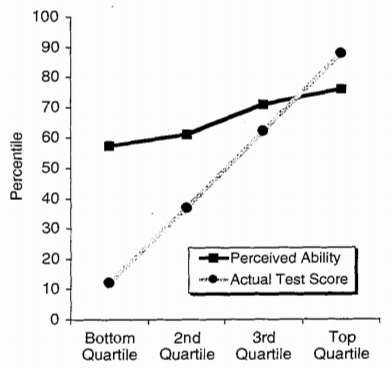

The Dunning-Kruger Effect states that people with the lowest competence tend to overrate their competence, but people with the highest competence tend to underrate themselves. This was shown in 1999 paper by Dunning and Kruger.1 Here’s one of the figures from the paper:

This figure shows results from a test on humor. People are scored based on how well their answers agree with those of professional comedians, and then they are asked to assess their own performance. There were similar results for tests on grammar and logic.

The Dunning-Kruger effect has entered popular wisdom, and is frequently brought up whenever people feel like they’re dealing with someone too stupid to know how stupid they are. But does the research actually mean what people think it means?

Before reading into this subject, I must admit that I had a major misconception. I thought that people’s self-assessment was actually anti-correlated with their competence. I thought someone who knew nothing would actually be more confident than someone who knew a lot. (This leads to an amusing dilemma: Should I choose to give myself a lower rating, because that would increase the posterior probability that I’m more competent?2)

But it is not true. People who know nothing are less confident than people who know a lot. People who know nothing are overconfident relative to their actual ability, but they are still not as confident as people who have high ability.

More self-aware, or just lucky?

Dunning and Kruger argue that the Dunning-Kruger effect arises because people with less competence are also worse at self-assessment. But there is, in fact, an alternative explanation.3

Let’s suppose that people are completely ignorant of their own ability. On average, everyone gives themselves the same rating, regardless of their actual ability. People tend to be overconfident in general, so let’s just say that people rate themselves at the 70th percentile. In this scenario, you would find that the people in the bottom 25% are very overconfident, rating themselves in the top 30%. In contrast, people in the top 25% would have much more accurate self-ratings (if anything, they would underrate themselves a bit).

But does that really mean that people with greater ability are better at assessing their own ability? Or are they just lucky that the guess they made–which is the same guess that everyone makes–happens to align with the correct answer? Perhaps it’s not that competent people are better at self-assessment, it’s that everyone is very confident in themselves, and only some people have the ability to match that confidence.
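Here is a minimal Python sketch of that scenario. It assumes, purely for illustration, that actual ability is spread evenly across percentiles and that every single person rates themselves at exactly the 70th percentile; the function name `quartile_gaps` and the population size are made up for this example.

```python
def quartile_gaps(n_people=1000, self_rating=70.0):
    """For each quartile of actual ability, return a pair:
    (mean actual percentile, self-rating minus mean actual percentile)."""
    # Assumption: actual percentiles are evenly spread between 0 and 100.
    actual = [(i + 0.5) * 100.0 / n_people for i in range(n_people)]
    quarter = n_people // 4
    results = []
    for q in range(4):
        group = actual[q * quarter:(q + 1) * quarter]
        mean_actual = sum(group) / len(group)
        # A positive gap means the group overrates itself.
        results.append((mean_actual, self_rating - mean_actual))
    return results


for q, (mean_actual, gap) in enumerate(quartile_gaps(), start=1):
    print(f"quartile {q}: actual {mean_actual:.1f}, self-rated 70.0, gap {gap:+.1f}")
```

The bottom quartile averages the 12.5th percentile and so overrates itself by 57.5 points, while the top quartile averages the 87.5th and is off by only 17.5 points, in the other direction. Nobody in this toy population has any self-insight at all, yet the pattern looks like the better performers are the better judges.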

There is also a subtler statistical argument being made. It is not merely that people are bad at assessing their own skills, it’s that the tests are also bad at assessing their skills. If the tests are completely unreliable, you expect that the test results will have no relation to people’s self-assessments.

In practice, people aren’t completely ignorant of their own ability, and the tests are at least somewhat reliable. As a result, people who perform better on the test are slightly more confident in themselves. Nonetheless, this interpretation casts doubt on the Dunning-Kruger effect.
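A rough simulation can show what this noisier picture might look like. This is only a sketch under assumptions of my own choosing: ability is normally distributed, the test score and the self-perception are each a mix of true ability and independent noise, and everyone’s self-perception is shifted upward by the same amount. The parameter names (`insight`, `reliability`, `overconfidence`) and their values are hypothetical, not estimates from any of the cited papers.

```python
import random
from statistics import NormalDist, mean


def simulate_quartiles(n_people=20000, insight=0.3, reliability=0.8,
                       overconfidence=0.5, seed=0):
    """Return the mean self-rated percentile for each quartile of test score.

    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    people = []
    for _ in range(n_people):
        ability = rng.gauss(0, 1)
        # Test score: partly true ability, partly noise (an imperfect test).
        score = reliability * ability + (1 - reliability ** 2) ** 0.5 * rng.gauss(0, 1)
        # Self-perception: only weakly tied to true ability.
        perceived = insight * ability + (1 - insight ** 2) ** 0.5 * rng.gauss(0, 1)
        # Shift everyone upward, then convert to a percentile scale.
        self_percentile = 100 * std_normal.cdf(perceived + overconfidence)
        people.append((score, self_percentile))
    people.sort(key=lambda p: p[0])  # rank people by measured performance
    quarter = n_people // 4
    return [mean(sp for _, sp in people[q * quarter:(q + 1) * quarter])
            for q in range(4)]


for q, avg in enumerate(simulate_quartiles(), start=1):
    print(f"test-score quartile {q}: mean self-rated percentile {avg:.1f}")
```

With these made-up settings, the mean self-rating should rise only gently from the bottom quartile to the top one, staying well above the bottom quartile’s true average (12.5) and below the top quartile’s (87.5). That is the same qualitative shape as the original figure, even though everyone in the simulation has exactly the same, limited degree of self-insight.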

Overconfidence is American

Which interpretation is correct? This could be tricky to discern, since both interpretations make similar predictions. Dunning and Kruger, for their part, reject the statistical argument, based on analysis of their data.4 However, I feel they have not sufficiently addressed the hypothesis that basically everyone is ignorant of their own ability.

One interesting fact I discovered is that self-ratings are culturally dependent. East Asians systematically underrate themselves, compared to USians. The researchers claim that this actually has some utility: USians tend to work harder when they think they’re good at something, whereas East Asians tend to see failure as an invitation to try harder.

If this is true, it implies that among East Asians, people with lower competence are more accurate in their self-assessments. This appears to directly contradict the Dunning-Kruger interpretation. But perhaps you shouldn’t take my argument too seriously, since I’m not backing it up with any data.

The next time you see the Dunning-Kruger effect used to mock someone’s intelligence, it would be wise to remember that this is a real area of psychology research, one which you may not know much about. Real research is often more muddled and complicated than people make it out to be in popular media, and this is no exception.

Every month I repost an article from my archives. This month, I had major issues with the article I wrote in 2015, so I ended up rewriting it substantially. So it’s not exactly a repost, but it’s taking the monthly repost slot.

1. Kruger, J. and Dunning, D. “Unskilled and Unaware of It: How Difficulties in Recognizing One’s Own Incompetence Lead to Inflated Self-Assessments”. Journal of Personality and Social Psychology 77 (6), 1121–34 (1999).

2. This dilemma is a form of Newcomb’s paradox. If you’re a one-boxer, you might prefer to rate your own ability in the way that maximizes the expectation value of your actual competence. If you’re a two-boxer, you might say that this isn’t a consideration, because how you rate yourself has no causal connection to your actual competence.

3. Ackerman, P. L. et al. “What We Really Know About Our Abilities and Our Knowledge”. Personality and Individual Differences 33, 587-605 (2002).

4. Ehrlinger, J. et al. “Why the Unskilled Are Unaware: Further Explorations of (Absent) Self-Insight Among the Incompetent”. Organizational Behavior and Human Decision Processes 105 (1), 98-121 (2008).

I think you’re missing out on discussions of mechanisms.

There is an obvious mechanism for understanding why someone with low competence would rate themselves slightly above the median:

If they don’t have the knowledge in the area to begin with, not only do they fail to recognize what they don’t know, but they also fail to recognize genuine expertise, because they can’t know that the expert is right about something. They have no right answer of their own to compare to the expert’s answer.

Not being able to recognize expertise, everyone around them appears equally qualified. As a non-exceptional person, they have no reason not to think of themselves as falling in the undifferentiated middle.

But then philosophy comes into play. I have some expertise in certain topics – feminism, anti-violence work, the effects of domestic and sexual violence on trans* persons, and law. In one area I might be near the peak of human expertise as it exists right now, certainly above the 95th percentile and probably well, well into the 99th (though how could I be sure without empirical research on who has expertise in trans* experiences of domestic & sexual violence and how much?). In the other areas, I’m no one special, but since the majority of people don’t study those topics much at all, I’m still going to be well above the median.

Okay. That’s fine as far as it goes. But let’s take my area of greatest (relative) expertise: if you’re someone who believes that trans* identity is fictional, then all my so-called expertise appears to be based on erroneous foundations. My hard-won knowledge is and must be entirely wrong to such a person, and whatever knowledge I think I have would be (from the perspective of that person) actually burdensome delusion. To that person, then, although I have genuine expertise, it appears that I am less knowledgeable than they.

This won’t apply to all experts, of course, but where strong philosophical stances are opposed to established facts (e.g. flat-earthers judging expertise in geology), one can make the case to oneself that one is very much above average.

Even when not dismissing true experts, when duffers compare their own expertise to other non-specialists, other known psychological effects can play a role in the evaluation. The availability heuristic, for example, distorts the importance of knowledge we easily remember. It is because we remember something that we deem it important, so when we compare our knowledge to others, if they remember the same number of things but not the same things, the availability heuristic encourages us to view our knowledge favorably, because while we get the same number of things right as our comparators, it seems to us that it is the more important things that we get right, while the things we get wrong (and others get right) are less important.

Thus known factors (e.g. lack of good information upon which to base a comparative judgement, well-studied heuristics that are known to mislead us) can explain the Dunning-Kruger effect fairly well, and lead to predictions that match what we see fairly well. They explain why all assessments are above average, but they also explain why we would see a gradually-increasing self-assessment. To wit: with more knowledge, one is able to dismiss a certain highly unqualified group as not comparable and beneath one in expertise, with the same factors as before affecting judgement about how one falls in the remaining group. Thus if the tendency is to place oneself at the 55th percentile of one’s own peers, then dismissing the bottom 10% of people and placing oneself at the 55th percentile of the rest places one at roughly the 59th or 60th percentile overall (10 + 0.55 × 90 = 59.5). The pattern continues as one becomes more and more expert and is thus able to dismiss more and more people as clearly not worthy of being considered peers.
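The arithmetic in that last step can be written out as a small sketch. It assumes the dismissed group sits entirely below everyone else and that the person places themselves at a fixed percentile within the remaining peers; the function name `overall_percentile` is just a label for this example.

```python
def overall_percentile(dismissed_fraction, percentile_among_peers=55.0):
    """Overall percentile for someone who dismisses the bottom
    `dismissed_fraction` of people as non-peers and then places
    themselves at `percentile_among_peers` within the rest."""
    if not 0.0 <= dismissed_fraction < 1.0:
        raise ValueError("dismissed_fraction must be in [0, 1)")
    # Everyone dismissed counts as below you, plus your share of the peers.
    return 100.0 * dismissed_fraction + percentile_among_peers * (1.0 - dismissed_fraction)


for dismissed in (0.0, 0.10, 0.25, 0.50):
    print(f"dismiss {dismissed:.0%}: overall percentile {overall_percentile(dismissed):.1f}")
```

Dismissing 10% gives 10 + 0.55 × 90 = 59.5, and dismissing half of everyone gives 50 + 0.55 × 50 = 77.5, so the self-rating climbs with expertise but much more slowly than actual standing does.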

Of course, some people will be particularly arrogant, and others will be particularly willing to embrace certain evidence (setting the curve, getting straight As, etc.) that, once one reaches a career pinnacle after meeting those milestones along the way, might justify assessing oneself as better than the 55th-60th percentile of one’s peers. On top of that, career specialization might drastically limit the number of people whom one would call peers. For both these arrogant persons and these rare elites, you might get them to self-rate in the 99th percentile, but there will be others who underrate themselves, and you can still see self-ratings top out at around the 75th percentile for most of the categories we see bandied about (e.g. “feminism” or “parenting”).

Crucially, that top rating would depend on how many people are considered peers. Expertise in “law” is actually quite broad. Law school gives you a great education in certain aspects, but a court reporter might easily know more about civil procedure than the vast majority of attorneys. Even trial attorneys aren’t in court every day, while the court reporter does nothing but record what’s happening in a court, when, & why. All day, every work-day. That kind of expertise can’t be dismissed. And yet, it’s also a knowledge that’s about what the procedure *is*, not what it once was or what it is likely to be in the future. Academics studying civ pro might well have a far deeper and better understanding even without being in court every day. If you ask such an expert where they rank, among all members of society, in their understanding of the uses and likelihood of success of ex parte motions in tort cases, they might have no trouble ranking themselves in the top 1% – and possibly very highly within that 1%. But this is likely to be related to one’s ability to dismiss quite a few more people as potential peers than is reasonable for broader categories of knowledge.

So when the time comes to rank one’s expertise in “law” (generally) and one is a civil procedure expert and professor at a law school, does one include lawyers that don’t know anything about civ pro, being contract specialists or PIL specialists? Of course. But what about cops – do they know some things about criminal law that the civ pro professor doesn’t? Probably, but how much?

If you ask that civ pro professor about where they stack up in knowledge of civ pro, they might actually rate themselves very high – there aren’t that many court reporters anyway, and some of them are going to do their jobs more or less by rote without picking up nearly as much about the law as others do. But as soon as you make it about “the law” more generally, certainty plummets. Other people approach the law differently and study different aspects. Without knowledge of those different disciplines, it’s hard to say how much one doesn’t know. So suddenly millions of cops join the comparator group just because you can’t say for sure they’re not in the comparator group. If one is fairly average in a larger comparator group one falls much closer to the mean than if one can narrow the comparator group while still seeing oneself as average within it.

In sum, I think the known explanations for the DK effect render that explanation much more likely than the incompetent testing explanation, but I’ve probably gone on too long, so I’ll stop here.

@Crip Dyke,

The Dunning-Kruger effect definitely seems to have a solid theoretical mechanism, but without direct evidence showing a connection between the theory and the observations, I might deride it as a just-so story. There’s also the question of why it apparently isn’t cross-cultural (possibly–I haven’t looked into the study of East Asians). Are East Asians not equally vulnerable to the availability heuristic?

Some of the mechanism you describe is also overlapping with the alternative interpretation that I described. “Lack of good information upon which to base a comparative judgement” is pretty much what I was saying. The thing is, people with higher aptitude don’t necessarily have good information on which to base a comparative judgment either, for a lot of the reasons you discuss.

I don’t think we need to worry about the issue where, e.g., “the law” is a rather broad and ill-defined topic. From what I saw of the studies on the Dunning-Kruger effect, they always give specific tests to people, and ask them to rate their own performance on that particular test. On the other hand, I’m not sure whom the test subjects were told to treat as their “peers”. Perhaps these were college students, and they were told to compare themselves to their classmates? (I don’t have journal access anymore so it’s not easy for me to check.)

Oh, sure. I don’t know much about DK. I was simply asserting that plausible causative factors exist that have already been studied, not that I know for sure which ones apply and to what degree.

I’m not sure what the mechanisms are for the alternative hypothesis, or whether I really understand the alternative hypothesis. And I’m not sure if those mechanisms would produce the function of estimated ability that we actually observe (starting a bit over average and climbing slower than actual ability climbs). That makes the original hypothesis seem more facially plausible to me, but you’re absolutely right that without testing it remains a just-so story.

Separately:

That’s a great question. I’ll have to look into it in my spare time someday.