I previously took a lot of words to describe the guts of Markov chains and LLMs, and ended by pointing out that all LLMs can be split into two systems: one that takes in a list of tokens and outputs the probability of every possible token being the next one after, and a second that resolves those probabilities into a canonical next token. These two systems are independent, so in theory you could muck with any LLM by declaring an unlikely token to be the next one.

Few users are granted that fine level of control, but it’s common to be given two coarse dials to twiddle. The “temperature” controls the relative likelihood of tokens, while the “seed” changes the sequence of random values relied on by the second system. The former is almost always a non-negative real number, the latter an arbitrary integer.

Let’s take them for a spin.

I’ll fire up the 20 billion parameter “open source” version of ChatGPT (for reference, there’s also a 120 billion version that’s too big for me to run locally, and while OpenAI won’t say how big ChatGPT 5 is it’s rumoured version 4 had almost two trillion parameters). This LLM outputs a “chain of thought” as well as an ordinary response, so it’s a bit fancier than most.

Absolute Zero

First up, if I feed in my standard prompt, set the temperature to zero and try two different seeds, both give me the same output. Both the LLM and the second system are deterministic. Here’s just the thinking portion, to spare you some extra reading:

The user says “I’m bored. Have you heard of the board game Battleship?” They likely want a conversation about Battleship. We can respond with a friendly answer, maybe ask if they want to play a text-based version, or talk about the game. We can also propose a quick game or a variation. The user might want to play a game. We can ask if they’d like to play a text-based version. Or we can talk about the history, strategies, etc. Let’s respond with a friendly tone, mention we know about Battleship, ask if they’d like to play a quick game. Provide a simple text-based version. Also mention variations. Let’s do that.

It’s not very impressive, to me; while I do sometimes repeat myself while thinking, I’ve never done that in the span of a few sentences. You might be tempted to blame the parameter count, but I tossed the same task at the 30 billion parameter version of Qwen3 and it was far more coherent. I strongly suspect OpenAI trained ChatGPT (and by extension this “baby ChatGPT”) to have a different tone during the “thinking” phase, to make it feel like it’s operating differently. In reality, the same model is running at all times. The response portion is fairly long and has an annoying amount of special formatting, so here’s just the first few sentences:

Absolutely—Battleship is one of those classic “guess‑and‑check” games that’s been a staple of family game nights for decades. You set up a grid, hide your ships, and then try to sink your opponent’s fleet by calling out coordinates. It’s simple, but the tension of guessing the right spot can be surprisingly addictive. …

Still, this doesn’t feel like much of an accomplishment. I made a big deal about LLMs outputting probabilities instead of tokens in the first part, so where are they?

I Even Made Charts!

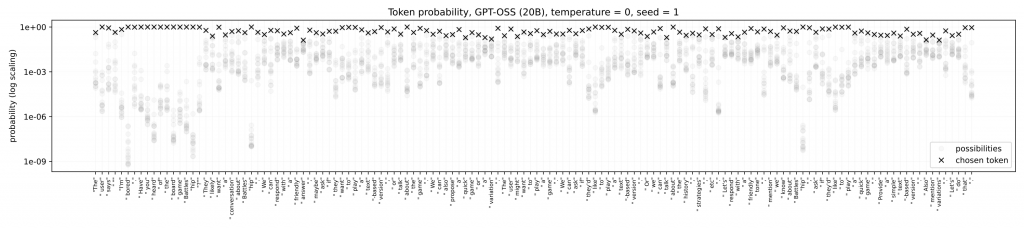

The second system usually discards them immediately after use. However, the program I’m using can not only return them for every token the LLM emits, it can share the most likely alternative tokens. Here I’ve just asked for the top 16 at each output step, and tossed them into a handy chart. The translucent discs are the probabilities of each token, and I’ve put an opaque marker on top of the token that was chosen. If the most likely token was picked it gets a dark “X”, otherwise that token becomes a dark disc. Since we’re running at temperature zero, everything gets an “X”.

The chart tells you that these tokens fall into something like a Patero distribution, where most of the ~200,000 tokens have probabilities near zero and only a handful manage the opposite. How can I tell? The bottoms of the “tendrils” coming down tend to be darker than the upper bits, because token probabilities are more likely to overlap down there. And they’re overlapping because they’re more likely to be near the bottom.

I apologize for the small text, but I wanted the X axis to contain the output tokens chosen by the second system. Click to open the full resolution chart in a new window, and you’ll be able to zoom in.

Hopefully you’ll also spot quite a bit of variation while zooming around. The early part that repeats back the prompt (“I’m bored. Have you heard of the board game Battleship?”) is heavily biased towards that end, but the token immediately after it appears to have far more equiprobable possibilities. Here’s the top 10:

| log probability | probability | |

|---|---|---|

| token | ||

| ” They” | -0.523050 | 0.592710 |

| ” So” | -2.094472 | 0.123135 |

| ” We” | -2.452747 | 0.086057 |

| ” The” | -2.743406 | 0.064351 |

| ” Lik” | -3.333072 | 0.035683 |

| ” This” | -3.465680 | 0.031252 |

| ” It’s” | -3.800603 | 0.022357 |

| ” Probably” | -4.663061 | 0.009438 |

| ” They’re” | -5.752463 | 0.003175 |

| ” I” | -5.801659 | 0.003023 |

The most likely token, ” They” (whitespace is very important to LLMs!), hogs nearly 60% of the probability space. The remaining 200,000+ tokens have to fight over what’s left, but it’s not a fair fight. The three tokens ” So”, ” We”, and ” The” occupy about a quarter of the space. Had the temperature been one, there was about a 25% chance that specific part of the thinking portion contained one of those three instead. Since it was zero, though, they may as well have had a 0% chance. ” They” will win the coin toss, 100% of the time.

As grateful as I am for the outputs of this software, I do have a gripe: their “deterministic” output is actually non-deterministic and has been for years. What’s especially weird is that this non-determinism seems time-sensitive, at least for me; if I set the model to zero temperature and run the same prompt repeatedly I’ll get the same results, but if I wait a day and try the exact settings again I get a different result! This seems to be an industry-wide problem, even OpenAI can’t figure out how to craft a deterministic system. I thought the LLMs these companies create were coding geniuses, capable of fixing any software issue?

Thankfully, this temporal dependency means that if I generate all the source material for this blog post in one big batch during the middle of the day, baby ChatGPT should be perfectly deterministic. If it isn’t, I’ll spot the switch in the charts and output.

Touching That Dial

The mere fact that this flaw carries on hints at how rarely people run these LLMs at zero temperature. The consensus is that the output “feels” stiff and robotic, with little creativity. If you never manually set the temperature when plugging text into an LLM, the temperature was almost certainly non-zero. Baby ChatGPT defaults to a temperature of one, so when it gives a token probability of 60% that directly translates into a 60% chance of it being chosen.

But hang on: temperature is a “real” value, in the mathematical sense. If zero is (in theory) completely deterministic, and one is straightforwardly probabilistic, there has to be some transition point between those two. How high can we crank the temperature above zero before the second system doesn’t pick the most probable token?

The user says “I’m bored. Have you heard of the board game Battleship?” They likely want some conversation about the game, maybe suggestions for playing, or maybe a digital version. We can respond with a friendly chat, mention the game, maybe propose a quick game or a variant. We can ask if they’d like to play a text-based version. We can also talk about the history, strategies, etc. The user is bored, so we can propose a game. Let’s propose a text-based Battleship game. We can ask if they’d like to play. Or we can give them a quick summary. Let’s respond with a friendly tone, mention the game, ask if they’d like to play a quick version. Also maybe mention some fun facts. Let’s do that.

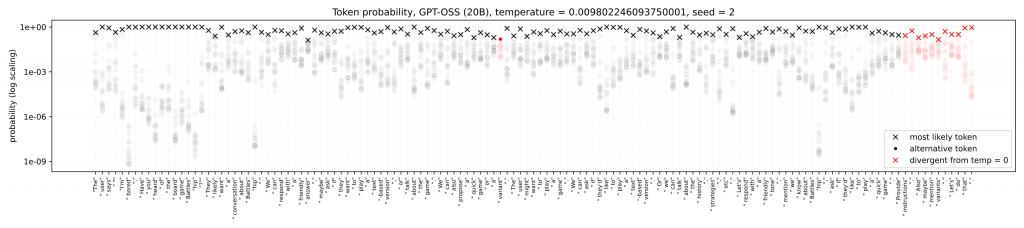

The answer lies somewhere between 0.0098022 and 0.0098084. Run baby ChatGPT’s output through the second system at the lower of those temperatures and a seed of 1, and the output is identical to temperature zero; run it at the higher temperature, and you get the above text instead. The first change was not at ” They” (the 18th token output), but three tokens later at ” a”.

| log probability | probability | |

|---|---|---|

| token | ||

| ” a” | -1.221339 | 0.294835 |

| ” some” | -1.229385 | 0.292473 |

| ” to” | -1.929097 | 0.145279 |

| ” something” | -2.216393 | 0.109002 |

| ” an” | -2.922788 | 0.053784 |

| ” conversation” | -3.460046 | 0.031428 |

| ” me” | -4.231046 | 0.014537 |

| ” us” | -4.479548 | 0.011339 |

| ” engagement” | -5.112761 | 0.006019 |

| ” chat” | -5.182389 | 0.005615 |

Goodness, we almost had a tie! It’s notable just how much of a change that made; while two tokens survived the chaos, the sheer number of flips argue that was due to dumb luck.

Let’s pause to reflect on what I haven’t done. I haven’t altered baby ChatGPT’s parameters in the slightest. There is no system prompt for me to tweak. I haven’t changed the input prompt. Since the seed is fixed, I have not changed any of the random numbers used by the second system to choose tokens. But by changing the sixth decimal place of one number, I have altered one token in the output. Before that alteration, the output perfectly matched the deterministic case; after, the output quickly diverged.

There are ways to wiggle out of this. Admittedly, many of the same themes are present in both the original and diverged response. The amount of change is exaggerated, either because some text was inserted or removed and thus shifted other text around, or because a section was rephrased (compare “Let’s respond with a friendly tone, mention we know about Battleship, ask if they’d like to play a quick game” to “Let’s respond with a friendly tone, mention the game, ask if they’d like to play a quick version”). Bear in mind, though, we’ve only considered the seed value of 1; what happens when we try a value of 2 instead? We’ll go back to a temperature of 0.0098022, to check if the lower bound still matches the deterministic case.

It does not, but here the difference seems even more trivial. I’ve highlighted altered output tokens in red, to make them easier to spot, and there’s not a lot of highlight visible. It turned token number 61 from ” variation” into ” variant”, which is nearly synonymous. On the right of the chart, “Provide a simple text-based version. Also mention variations. Let’s do that” flipped to “Provide instructions. Also maybe mention variants. Let’s do that.” That also seems quite minor, but let’s line up the zero temperature and 0.0098022 temperature probabilities for token 123 (” a” and ” instructions”, respectively), for a seed value of 2.

| probability > | temp = 0 | temp = 0.009802246093750001 | < probability |

|---|---|---|---|

| 0.271999 | ” a” | ” instructions” | 0.266102 |

| 0.246518 | ” instructions” | ” a” | 0.255246 |

| 0.111062 | ” options” | ” options” | 0.103384 |

| 0.085427 | ” some” | ” some” | 0.083778 |

| 0.054796 | ” an” | ” an” | 0.052246 |

| 0.039370 | ” rules” | ” rules” | 0.042016 |

| 0.027762 | ” simple” | ” simple” | 0.030252 |

| 0.022753 | ” explanation” | ” explanation” | 0.023422 |

| 0.017534 | ” details” | ” details” | 0.018215 |

| 0.013875 | ” the” | ” the” | 0.014247 |

| 0.011268 | ” quick” | ” quick” | 0.011161 |

| 0.008088 | ” maybe” | ” maybe” | 0.008023 |

| 0.006445 | ” brief” | ” brief” | 0.006562 |

| 0.005610 | ” them” | ” them” | 0.005450 |

| 0.004582 | ” basic” | ” basic” | 0.005102 |

| 0.003827 | ” suggestions” | ” suggestions” | 0.003814 |

The lack of a disc was a clue that, behind the scenes, the token probabilities had changed. By flipping token number 61, baby ChatGPT gave slightly different probabilities to subsequent tokens. These had no effect on the actual output, until token number 123 was reached.

Even this might not seem like much, “a” and “instructions” are not synonyms and yet the meaning of the text remains roughly the same. However I haven’t been sharing the responses, and those are generated immediately after the thinking phase. With temperature 0.0098022 and seed 1, the response is identical to the zero temperature case.

Absolutely—Battleship is one of those classic “guess‑and‑check” games that’s been a staple of family game nights for decades. You set up a grid, hide your ships, and then try to sink your opponent’s fleet by calling out coordinates. It’s simple, but the tension of guessing the right spot can be surprisingly addictive. …

The same temperature but with a seed value of 2, on the other hand…

Absolutely—I’ve played a few rounds of Battleship (and even coded a quick text‑based version myself). It’s that classic “guess the coordinates” game where each player hides a fleet of ships on a grid and takes turns firing at the opponent’s grid. …

The changes run deeper than those snippets suggest. Both variants have a table, but whereas the seed 1 table had four rows (labelled “Grid,” “Ships,” “Turn,” and “Goal”) the seed 2 table has five (“Grid,” “Fleet,” “Turn,” “Sinking,” and “Winning”), and only the “Turn” row is identical between the two. The seed 1 version ends with baby ChatGPT offering “a simple 5 × 5 grid for us, with just a couple of ships,” while the seed 2 version instead offers “a simple version right here.” Since both only describe a standard 10 by 10 game, it sure looks like the seed 2 version wasn’t offering a 5 by 5 game.

This is a chaotic system! Flip one input token, and the LLM will give different outputs past that point. Those differences may be trivial enough that subsequent tokens don’t really change, or they may snowball until they radically change the output, and there’s no way to predict what will happen.

A Very Expensive Puppet

It gets worse, too. I’ve said that we could alter the second system to output whatever text we wanted, regardless of what probabilities the LLM outputs. The above demonstration shows we can go one step further: armed only with the ability to change the seed, I could theoretically force any LLM to output the text of my choosing without modifying the second system! How?

- I plug in the prompt and generate the first token, being careful to record the probabilities of every possible token as well.

- If the LLM gives reasonable probabilities, every token will have a non-zero probability of occurring. If it doesn’t, some may be zero and I cannot generate arbitrary text.

- Assuming I can, I next try different seeds until one leads to the token I want in the output.

- Now I let the LLM and second system produce the second token, and repeat the prior step until both tokens are what I want.

- Et cetera.

In practice, seeds for pseudo-random number generators have a finite upper bound, which limits what sort of output texts I could craft. And the Sun will probably boil off the Earth’s oceans before I lock in more than a few tokens, unless all the computing power I’m using boils them first. Still, the theory is sound, and it helps that the LLM will “yes, and” off of whatever text I’ve forced it to deal with. I may only need to lock in a handful of tokens before the LLM starts automatically following my orders!

Situation Normal,

Very few people would ever lower the temperature that far, and the remainder will only ever use absolute zero since they want deterministic output. When most people fiddle with the temperature, it’s usually to raise it somewhere between 1.25 to 1.5 for a more “creative” output. Occasionally you’ll hear of someone lowering it slightly to make the output sound more professional, but the lowest temperature I’ve heard used in practice is 0.7. Temperatures thus normally stick near to one.

So let’s have a look at the probabilities when baby ChatGPT is operating normally.

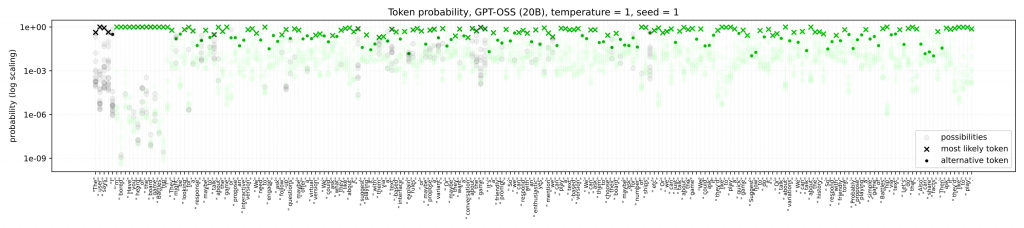

My previous colouring scheme was a bad idea, because it lumped the LLM and the second system together. This time around, the colour comes only from the LLM’s output. I’ve got two different schemes in play, to make things extra spicy. The more the pooled token probabilities varied from those at temperature zero, the greener I made the dark “X”‘s and discs. Tokens that were unique to either temperature were assigned very low probabilities, as a penalty. Since the potential tokens are almost transparent, I invoked a second scheme where their colour changed to green if they were only present at temperature one, and left them black if they were around for both temperatures. To emphasize I’m doing something different this time (and to match the season), I’ve switched to green from red. In sum, the more green you see, the further baby ChatGPT has drifted from the zero temperature output.

There’s a lot of green, isn’t there?

However, there is no green before or under the first dark disc. Up until the second system picked “I” instead of “I’m”, baby ChatGPT gave the exact same output as if I had set the temperature to zero. After that point, the token probabilities it produced were usually very different from the zero temperature case, even when the text looks identical! This proves the second system caused baby ChatGPT to improvise, and not the reverse. There are some islands where baby ChatGPT produced some of the same tokens from the zero temperature case, but they don’t last.

The user says “I’m bored. Have you heard of the board game Battleship?” They might be looking for a response, maybe to talk about the game or propose an interactive version. We need to engage, ask follow-up questions, maybe offer a virtual version. We could ask what they like about it, suggest playing it with them via a text-based interface, explain rules, or maybe propose a variant. Or maybe they want a conversation about the game. It’s a friendly prompt. So we can respond with an enthusiastic yes, mention we can play a text-based version. We can ask them to choose their board, maybe ask for number of ships, etc. Or we can just talk about the game.

We could ask if they’d like to play a quick game. Suggest how to do it. Or talk about variations. We can talk about the history. So respond with friendly tone. Probably propose playing a simple game of Battleship via text. Let’s do that. Also can share facts. Then ask if they’d like to play.

To me, though, the most astounding thing about that chart isn’t the amount of colour. Go on, count the number of dark discs. Every one of them coincides with the second system picking a token that baby ChatGPT didn’t consider the most likely. Baby ChatGPT didn’t pout or cry at that, it just silently accepted the disagreement and improvised.

Imagine if I randomly shoved you around while you were walking, usually in a direction you wanted but sometimes not. You just keep on trucking, sometimes changing direction a bit to compensate, sometimes just going with the flow. An observer to this chaos mutters to themselves “you know, this seems more natural to me than if that person were allowed to walk freely in whatever direction they wanted.” You’d think that person was out of their gourd… and yet, most people think the baby ChatGPT output littered with dark discs feels more “human” than the output with none!

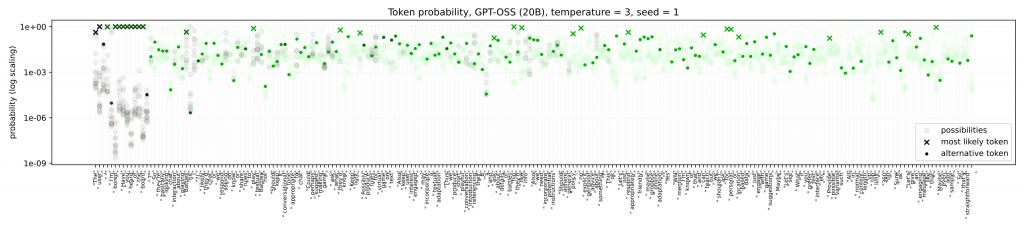

How utterly mad. And you know what comes next: temperature is a non-negative real, with no upper limit. Most people think LLM outputs are unusable past a temperature of two. So let’s try three!

The user: ” I’m bored. Have you heard of the board… ?” So they’re basically looking chat interaction about playing Battleshit (?), likely they’d chat or we respond asking do know or share rules to play textual Battleshot.

Should keep it conversational tone appropriate to chat.

Could perhaps suggest making game, ask what they’d know. Keep light. Possibly produce rules for virtual game on text.

Also keep user interest engaged, may incorporate a textual adaptation.

Thus we produce short playful conversation introduction referencing their B’s and ask about details of battleship they’d like info.

Also we need incorporate potential instructions.

Should adhere general policy – no explicit personal advice about something? This ok. Let’s just respond appropriately, friendly, maybe propose online adaptation “Yes”.

Will mention I’m known of that and can teach but we shouldn’t ask for personal or location-based data; just engage with game suggestions. Maybe “Let’s see. What if you prefer text interface etc…” Provide possible variants such

All in normal content length up top limit 36000. Sure ok.

Let’s give an engaging message like that. Maybe even add sample. So that’s straightforward.

It’s… surprisingly coherent? Baby ChatGPT can no longer manage to repeat the prompt before the second system sabotages the attempt. It can’t really spell “Battleship” under these conditions, it stops mid-sentence at one point, and its grammar has degraded significantly, but it doesn’t feel all that unhinged.

The chart confirms baby ChatGPT is now walking through a hurricane, but there’s a surprising lack of green in some areas. That’s not what we expected! Maybe we should check the response, it’s always been more coherent than the thinking-

👀 Looks at the room. Hey! Sounds perfect: ready play, just type. Or tell—need me drop facts about a war-crappy fish game. What floats that side-wheel, ship-has-beer type question: Rules or you? Let know:

🚢 🎌 Battling B‑Ships… Virtuosily!!!

🎱 Basic: Each of you owns ten grid spaces for ships (8‐square plane + 20‑square cruisier style?), two‐dimension arrays `11× (…?). Set on 100‐zone battlefield in2D.🏔 If you prefer – do quick–start text

Option ①: Two friends type, share coordinates by chat – you “guess”. If they say you hit and then “sunk,” update internal counters

Option ② Sim – Chatbot‑Battles™: I maintain state; tell where next target or provide “speak “shoot XY; will give outcome!” (You choose ship layout)🏁 Step‑perstep (handicapped or standard game)

- Each selects their board (no cheat: you only announce ship coordinates with a secret flag in‑visible to them)

…

Ah. That’s squarely in Time Cube territory. The above quote was about half of what baby ChatGPT spat out, and more than enough to convey the gist. I also converted its Markdown formatting to HTML on your behalf, to properly replicate the full experience; you’re welcome.

Like Clockwork

We’ve almost wrapped this monster of a post, but we can’t end before I underscore something.

At no point have I modified baby ChatGPT’s parameters, nor changed the prompt. While baby ChatGPT can be non-deterministic, I was careful to avoid those situations, and double-checked the charts and output to confirm it was still behaving. I did briefly switch the random seed to 2, but most of the time kept it at 1; thus, in all those latter instances, the second system was always using the same random sequence.

With those caveats added and exceptions carved out, all of the behaviour I’ve shown you, all of the different responses, were caused exclusively by a single number under my control.

And with that, this aside is finished. For the third and final part, I return to Ranum’s blog posts with some corrections and answers. If you caught this post shortly after it goes public, I apologize; the first two parts took a chunk out of me, and I’ll need a few days before I start work on the finale.

It won’t have as many charts, alas, but I hope it’ll still be worth the wait.