That’s an unholy trinity if ever I saw one: Bostrom, Musk, Galton. They’re all united by a terrible, simplistic understanding of genetics and a self-serving philosophy that reinforces their confidence in bad ideas. They are longtermists. Émile Torres explains what that is and why it is bad… although you already knew it had to be bad because of its proponents.

> As I have previously written, longtermism is arguably the most influential ideology that few members of the general public have ever heard about. Longtermists have directly influenced reports from the secretary-general of the United Nations; a longtermist is currently running the RAND Corporation; they have the ears of billionaires like Musk; and the so-called Effective Altruism community, which gave rise to the longtermist ideology, has a mind-boggling $46.1 billion in committed funding. Longtermism is everywhere behind the scenes — it has a huge following in the tech sector — and champions of this view are increasingly pulling the strings of both major world governments and the business elite.

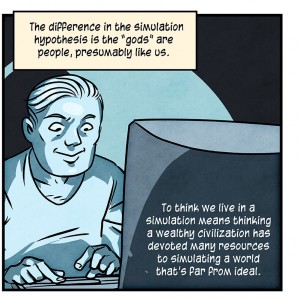

> But what is longtermism? I have tried to answer that in other articles, and will continue to do so in future ones. A brief description here will have to suffice: Longtermism is a quasi-religious worldview, influenced by transhumanism and utilitarian ethics, which asserts that there could be so many digital people living in vast computer simulations millions or billions of years in the future that one of our most important moral obligations today is to take actions that ensure as many of these digital people come into existence as possible.

> In practical terms, that means we must do whatever it takes to survive long enough to colonize space, convert planets into giant computer simulations and create unfathomable numbers of simulated beings. How many simulated beings could there be? According to Nick Bostrom — the Father of longtermism and director of the Future of Humanity Institute — there could be at least 10⁵⁸ digital people in the future, or a 1 followed by 58 zeros. Others have put forward similar estimates, although as Bostrom wrote in 2003, “what matters … is not the exact numbers but the fact that they are huge.”

They are masters of the silly hypothetical: these are the kind of people who spawned the concept of Roko’s Basilisk, “that an all-powerful artificial intelligence from the future might retroactively punish those who did not help bring about its existence”. It’s “the needs of the many outweigh the needs of the few”, where the “many” are padded with 10⁵⁸ hypothetical, imaginary people, and you are expected to serve them (or rather, the technocrat billionaire priests who represent them) because they outvote you now.

The longtermists are terrified of something called existential risk, which is anything that they fear would interfere with that progression towards 10⁵⁸ hardworking capitalist lackeys toiling for their vision of a Randian paradise. It’s their boogeyman, and it doesn’t have to actually exist. It is enough that they can imagine it, and that imagining alone justifies, in their minds, taking actions here and now, in the real world, to stop their hypothetical obstacle. Anything fits this paradigm, no matter how absurd.

> For longtermists, there is nothing worse than succumbing to an existential risk: That would be the ultimate tragedy, since it would keep us from plundering our “cosmic endowment” — resources like stars, planets, asteroids and energy — which many longtermists see as integral to fulfilling our “longterm potential” in the universe.

> What sorts of catastrophes would instantiate an existential risk? The obvious ones are nuclear war, global pandemics and runaway climate change. But Bostrom also takes seriously the idea that we already live in a giant computer simulation that could get shut down at any moment (yet another idea that Musk seems to have gotten from Bostrom). Bostrom further lists “dysgenic pressures” as an existential risk, whereby less “intellectually talented” people (those with “lower IQs”) outbreed people with superior intellects.

Dysgenic pressures, the low-IQ rabble outbreeding the superior stock… where have I heard this before? Oh, yeah:

> This is, of course, straight out of the handbook of eugenics, which should be unsurprising: the term “transhumanism” was popularized in the 20th century by Julian Huxley, who from 1959 to 1962 was the president of the British Eugenics Society. In other words, transhumanism is the child of eugenics, an updated version of the belief that we should use science and technology to improve the “human stock.”

I like the idea of transhumanism, and I think it’s almost inevitable. Of course humanity will change! We are changing! What I don’t like is the idea that we can force that change into a direction of our choosing, or that certain individuals know what direction is best for all of us.

Among the other proponents of this nightmare vision of the future is Robin Hanson, who takes his colonizer status seriously: “Hanson’s plan is to take some contemporary hunter-gatherers — whose populations have been decimated by industrial civilization — and stuff them into bunkers with instructions to rebuild industrial civilization in the event that ours collapses.” Nick Beckstead is another; he argues that “saving lives in poor countries may have significantly smaller ripple effects than saving and improving lives in rich countries, … it now seems more plausible to me that saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal.”

Or William MacAskill, who thinks that “if scientists with Einstein-level research abilities were cloned and trained from an early age, or if human beings were genetically engineered to have greater research abilities, this could compensate for having fewer people overall and thereby sustain technological progress.”

Just clone Einstein! Why didn’t anyone else think of that?

Maybe because it is naive, stupid, and ignorant.

MacAskill’s latest book has received a totally uncritical review in the Guardian. He’s a philosopher, but you’ll be relieved to know that he has come up with a way to end the pandemic.

> The good news is that with the threat of an engineered pandemic, which he says is rapidly increasing, he believes there are specific steps that can be taken to avoid a breakout.

> “One partial solution I’m excited about is called far ultraviolet C radiation,” he says. “We know that ultraviolet light sterilises the surfaces it hits, but most ultraviolet light harms humans as well. However, there’s a narrow-spectrum far UVC specific type that seems to be safe for humans while still having sterilising properties.”

> The cost for a far UVC lightbulb at the moment is about $1,000 (£820) per bulb. But he suggests that with research and development and philanthropic funding, it could come down to $10 or even $1 and could then be made part of building codes. He runs through the scenario with a breezy kind of optimism, but one founded on science-based pragmatism.

You know, UVC, at 200–280 nm, is the most energetic form of UV radiation. We don’t get much of it here on planet Earth because it is quickly absorbed by any molecule it touches; it’s busy converting oxygen to ozone as it enters the atmosphere. So sure, yeah, it’s germicidal, and maybe it’s relatively safe for humans because it cooks the outer, dead layers of your epidermis and is absorbed before it can zap living tissue layers. But I don’t think it’s practical (so much for “science-based pragmatism”) in, for instance, a classroom. We’re just going to let our kiddos bask in UV radiation for 6 hours a day? How do you know that’s going to be safe in the long term, longtermist?

Quacks have a “breezy kind of optimism”, too, but it’s not a selling point for their nostrums.

If you aren’t convinced yet that longtermism/effective altruism is a poisoned chalice of horrific consequences, look who else likes this idea:

> One can begin to see why Elon Musk is a fan of longtermism, or why leading “new atheist” Sam Harris contributed an enthusiastic blurb for MacAskill’s book. As noted elsewhere, Harris is a staunch defender of “Western civilization,” believes that “We are at war with Islam,” has promoted the race science of Charles Murray — including the argument that Black people are less intelligent than white people because of genetic evolution — and has buddied up with far-right figures like Douglas Murray, whose books include “The Strange Death of Europe: Immigration, Identity, Islam.”

Yeah, NO.