We are currently being inundated with polls and it is easy to become overwhelmed, with the risk of whiplash as one poll jerks you one way to be followed immediately by another in a different direction. The media will highlight any new poll, especially if it is a surprising outlier, and even more so if it supports their desired narrative of a close race, thus whipping up interest in following the news.

One of the side benefits of elections is the increased attention paid to statistics. I find it a fascinating subject, though I am not an expert, and following the polls gives me a reason to brush up on my knowledge.

The individual polls usually report margins of error given by 100/√N, where N is the sample size, expressed in percentage points. This means that there is a 95% probability that the true figure lies within plus or minus the margin of error, which reflects (in part) the uncertainty due to the fact that each time they run the survey, a different group of people is sampled. Because polls tend to use sample sizes of the order of 1000, this usually gives a margin of error of about 3.2%. This can cause fairly dramatic swings from one poll to the next, even when done by the same polling outfit using the same methodology. With different polls using different methodologies the swings can be even greater.
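To see where the 100/√N rule of thumb comes from, here is a minimal sketch that compares it with the standard textbook formula for a proportion from a simple random sample. The factor 1.96 (for 95% confidence) and the worst-case share p = 0.5 are the usual textbook assumptions and are not taken from any particular pollster; the function names are just for illustration.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Textbook 95% margin of error, in percentage points, for a share p
    estimated from a simple random sample of size n (assumed, idealized)."""
    return 100 * z * math.sqrt(p * (1 - p) / n)

def rule_of_thumb(n):
    """The 100/sqrt(N) shortcut quoted above, in percentage points."""
    return 100 / math.sqrt(n)

# Compare the two for a few sample sizes.
for n in (500, 1000, 3500):
    print(n, round(margin_of_error(n), 2), round(rule_of_thumb(n), 2))
```

The shortcut is always slightly larger than the textbook value, because it effectively rounds 1.96 × 0.5 = 0.98 up to 1.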

Because of these fluctuations, I tend to pay more attention to poll aggregators like Sam Wang at the Princeton Election Consortium, Nate Silver at FiveThirtyEight, Drew Linzer at Votamatic, and Pollster. They average out (using different methodologies) the results of the different polls. This tends to reduce the size of the swings and allows the ‘house effects’ of the individual polls to cancel each other out somewhat, giving a more accurate picture of the state of play.
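The effect of averaging can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: every poll is a simple random sample of 1000 people from the same population, the true share is a made-up 50%, and house effects are not modeled at all. It is not the method used by any of the aggregators named above.

```python
import random
import statistics

def simulate_poll(true_share, n, rng):
    """Observed share (in %) from one simple random sample of n voters."""
    hits = sum(rng.random() < true_share for _ in range(n))
    return 100 * hits / n

def spread(values):
    """Standard deviation of a list of poll results, in percentage points."""
    return statistics.pstdev(values)

rng = random.Random(2012)   # fixed seed so the run is repeatable
TRUE_SHARE = 0.50           # hypothetical true support
N = 1000                    # respondents per poll
POLLS_PER_AVERAGE = 10      # polls combined by the toy aggregator
TRIALS = 200

# Results of single polls versus simple averages of ten polls.
single = [simulate_poll(TRUE_SHARE, N, rng) for _ in range(TRIALS)]
averaged = [
    statistics.mean([simulate_poll(TRUE_SHARE, N, rng)
                     for _ in range(POLLS_PER_AVERAGE)])
    for _ in range(TRIALS)
]

print("spread of single polls:", round(spread(single), 2))
print("spread of 10-poll averages:", round(spread(averaged), 2))
```

Under these assumptions the averages scatter much less than the individual polls, which is the sense in which aggregation damps the swings.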

I particularly like the first three of those because they are done by number crunchers who use algorithms that are fixed once and for all (except for minor tweaks) and they then let the chips fall where they may. Furthermore, these people are all genuinely data driven. While they have their own personal preferences for whom they would like to see win (and it seems like Obama for all three) you can tell that they also take pride in their professionalism and their models, and all of them are highly unlikely to secretly place their thumbs on the scale to get a desired result.

So what have these polls shown us so far?

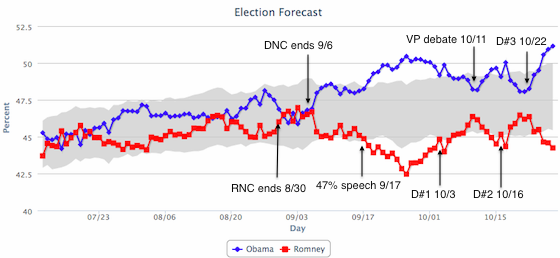

All three of them showed a steady Obama lead after the conventions were over, followed by a sharp drop in his support from October 4 to October 12, supporting the media narrative that Obama’s poor performance in the first debate on October 3 caused that decline, which was then stemmed by Joe Biden’s performance on October 12.

There is one poll, however, that is different from the rest and which I also watch with interest. That is the RAND American Life Panel. In this poll the same people are sampled over and over to see what changes occur over time. They started back in July with a population of 3,500, which means a margin of error of 1.7%. This poll is a seven-day rolling average, which means that 500 people are polled each day and the responses on any given day are added to those of the 3,000 people questioned over the previous six days. So any change in the reported average on any given day from the previous day is caused by the change in the views of those 500 people sampled that day from what they thought seven days earlier. The sample is weighted in the usual way to match the population in factors such as sex, age category, race-ethnicity, education, household size, and family income. (You can see more details of the methodology here.)
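The claim about the rolling average can be checked with a toy calculation. The sketch below assumes seven equal-sized daily groups and ignores the demographic weighting; the daily figures are invented purely for illustration and are not RAND data.

```python
from collections import deque

def rolling_average(daily_means, window=7):
    """Rolling average over the last `window` days, assuming every daily
    group is the same size. Returns one value per day once the window is full."""
    recent = deque(maxlen=window)
    averages = []
    for value in daily_means:
        recent.append(value)
        if len(recent) == window:
            averages.append(sum(recent) / window)
    return averages

# Hypothetical daily support (%) among each day's group of respondents.
# The eighth entry is the first day's group, interviewed again a week later.
daily = [48.0, 47.0, 49.0, 48.5, 47.5, 48.0, 49.0, 49.4]

avg = rolling_average(daily)
change = avg[1] - avg[0]               # day-to-day move in the reported figure
expected = (daily[7] - daily[0]) / 7   # shift in the re-interviewed group, spread over 7

print(round(change, 3), round(expected, 3))
```

The two numbers agree: the only thing that moves the reported figure from one day to the next is how that day's group has changed since it was last interviewed, diluted by a factor of seven.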

The survey asks the same three questions each day:

What is the percent chance that you will vote in the Presidential election?

What is the percent chance that you will vote for Obama, Romney, someone else?

What is the percent chance that Obama, Romney, someone else will win?

Note that by asking people to estimate the probability of their voting, and weighting their vote accordingly, it incorporates such things as enthusiasm. So it can measure the effect of a voter who gets disenchanted with his or her chosen candidate for some reason but still plans to vote for him.
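One plausible way to combine the first two questions is to weight each respondent's stated preference by their stated chance of voting. The sketch below is an assumption about how such weighting could work, not RAND's published formula, and the three respondents are invented.

```python
def weighted_share(responses, candidate):
    """Support (%) for `candidate`, weighting each respondent's stated
    percent chance of choosing them by their percent chance of voting."""
    numerator = sum(r["p_vote"] / 100 * r["p_choice"][candidate] / 100
                    for r in responses)
    denominator = sum(r["p_vote"] / 100 for r in responses)
    return 100 * numerator / denominator

# Hypothetical respondents: chance of voting, and chance of voting for each candidate.
panel = [
    {"p_vote": 100, "p_choice": {"Obama": 100, "Romney": 0}},
    {"p_vote": 50,  "p_choice": {"Obama": 0,   "Romney": 100}},
    {"p_vote": 100, "p_choice": {"Obama": 40,  "Romney": 60}},
]
before = weighted_share(panel, "Obama")

# The first respondent becomes less enthusiastic but does not change preference.
panel[0]["p_vote"] = 60
after = weighted_share(panel, "Obama")

print(round(before, 1), round(after, 1))
```

In this toy example the candidate's share falls even though nobody switched sides, which is the kind of enthusiasm effect described above.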

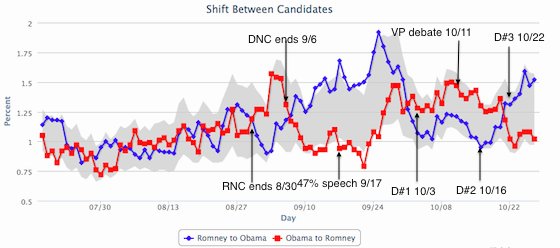

What this poll enables one to do is get a better handle on what changes in voting, if any, are caused by specific events. I give below the results of the survey released yesterday, along with the dates marked for those we are told are significant political events. Recall that it takes seven days after an event for all 3,500 people to get sampled.

Note that Obama started taking the lead immediately after the Democratic National Convention ended and has kept it since. The overall total for each candidate may not be accurate due to sampling and other effects (in fact the RAND result is very much an outlier in terms of the level of support for Obama and should be taken with caution) but what is interesting is to see the changes of this group over time. The RAND survey also tracks what percent of the group switched their votes from one candidate to the other.

Debate #1 was broadcast as a ‘game changer’ but we see that it occurred at the mid-point of a rising trend for Romney that had already begun more than a week earlier, with a steady rise in the two-week period starting from his nadir on September 26 when he had just 42.5% to when he had his peak of 46.4% October 10. Linzer also saw signs of a rise in Romney’s fortunes beginning before the debate. But what happened around September 26 that could be the cause of the best period in Romney’s fortunes? Nothing notable happened as far as I can tell. It seems like Romney’s 47% speech also came in the middle of a period of steady decline in his fortunes, and not prior to it.

The idea that the vice-presidential debate stopped Romney’s rise gets some support from this data. Obama is supposed to have stomped on Romney in debate #2 but Romney started gaining again a little after that. Romney’s fortunes started declining again after debate #3.

Looking at these results, I wonder if the ‘big’ events that the media focus on have only marginal effects on actual changes in people’s voting decisions, which may be more influenced by underlying factors that are not easy to detect. The media may be focusing on surface waves and missing the deep but slow-moving ocean currents that really cause the overall shifts.

The RAND survey method was developed in the UK and this is the first time it has been used for a US election and thus it does not have a track record here. But it is an interesting method that sheds some light on changes in voting patterns and I am curious to see after the election is over how well its overall predictions turn out.

While we care about the outcome, we nerds also see major political events as tests of mathematical models. That’s just the way we roll.

This was good to read. I have been confident Obama would win until last Tuesday when I started getting a weird sense of dread.

I just want this to be over.

margins of error given by 100/√N, where N is the sample size

—

-- Isn’t SE = SD/√N?

-- Further, the probability that the true mean is within a particular CI is either 0 or 1. All one can say is that 95% of such intervals will contain the true mean.

I wonder about the RAND poll: does it have some sort of, for lack of a better term, test-taker’s bias?

If I were in a situation where I knew I was going to be called every week and asked my opinions on things, it would undoubtedly pressure me to actually take the time to understand those things. It’s just human nature to want to look smart and well-informed.

Both campaigns lie quite a bit, but I believe that Romney has depended much more strongly on misinformation and confusing the voter. Thus, if RAND causes its participants to voluntarily become better informed than they would otherwise, it might cause them to end up favoring Obama more on average.

It’s an ad hoc hypothesis, so take it with a grain of salt.

This is a breath of fresh air amid the stale, smokey, clubhouse atmosphere that is our mainstream media coverage. Thank you, sir.

This is a possibility. We don’t have an answer since this is the first time it has been done. I think (hope) that the people who run this poll do a lot of deep post-election analysis to tease out such things. They obviously cannot do it while it is going on for fear of contaminating their results.

The 7/11 coffee method has been proven accurate, at least for the last couple of elections anyway.

It’s likely just pareidolia and confirmation bias, or just overfitting, but when I look at the RAND plots of switchers, it looks like the labeled “major events” may have significant effect, but mostly time-delayed—the maximum effect is several days to a week after the event. And not just the maximum cumulative effect, but the maximum day-to-day change.

If that’s true, I can think of several reasons it might be so.

I am curious. I notice that you and a few others use ‘OM’ as part of your identifier. What does it mean?

Other people can confirm or correct this for me, but I’m pretty sure it means they won an “Order of the Molly” over at PZ’s site. I’ve also seen “FCD” appended to a few commenters’ IDs and the best explanation I’ve seen for that is “friend of Charles Darwin.”

Right on both counts. I put the OM there mostly to make my handle more specific, having been repeatedly confused with some other Pauls, a Dave W. at Ophelia’s place, and even another Paul W. who was around for a while a few years ago over at ScienceBlogs.