Here’s a provocative essay: AI is driving down the price of knowledge – universities have to rethink what they offer. The title alone irritated me: it proposes that AI is a competing source of “knowledge” against universities. AI doesn’t generate new knowledge! It can only shuffle, without understanding, the words that have been used to describe knowledge. It’s a serious mistake to conflate what a large language model does with what researchers at a university do — throughout the essay, the professor (an instructor at a business school, no surprise) treats “knowledge” as a fungible product that should be assessed in terms of supply and demand.

For a long time, universities worked off a simple idea: knowledge was scarce. You paid for tuition, showed up to lectures, completed assignments and eventually earned a credential.

That process did two things: it gave you access to knowledge that was hard to find elsewhere, and it signalled to employers you had invested time and effort to master that knowledge.

The model worked because the supply curve for high-quality information sat far to the left, meaning knowledge was scarce and the price – tuition and wage premiums – stayed high.

This is a common error — even our universities market themselves as providers of certificates, rather than knowledge — so I guess I can’t blame the author. He’s just perpetuating a flawed capitalistic perspective on learning. But digging further into the essay, I find abominations. Like this graph, which he claims illustrates “why tuition premiums and graduate wage advantages are now under pressure.”

Hot tip for whenever someone shows you a graph: first, figure out what the axes are.

The Y axis is labeled “Price (tuition/wage premium)”. No units, but OK, I can sort of decipher it. We’re paying a sum of money for college tuition, and after we graduate, we might expect that will translate to a wage increase, so this might represent something like a percent increase in base pay for college graduates over what non-college graduates might get. Fine, I could see doing some kind of statistical analysis of that. But it’s not going to produce a simple number!

For instance, in my cohort of students entering undergraduate education in the 1970s, we all paid roughly the same tuition. Afterwards, though, some of us were English majors, some of us were biologists, and some of us were electrical engineers…and there’s a vast difference in the subsequent earnings of those students. This graph is saying that when knowledge, that is, educated workers, are rare, then an education leads to a premium in wages. I can see that, but I think “price” is going to be far more complicated than is shown.

The X axis though…that’s made up. How do you measure “knowledge accessibility”? What are the units? How is it measured? I’ll have to return to that in a moment.

So there are lines drawn on the graph. One is going down, that’s “demand,” and obviously, going down is bad. The value, or price, of knowledge is declining, a claim that I’m not seeing justified here. Why is it going down? Because the supply is going up, which should be good, since it is going up, but knowledge is some kind of commodity that is being stockpiled, but is being called scarce anyway. Curiously, on this graph, the Price of knowledge is going up as “accessibility” increases, while demand goes down.

I’m not an economist, so the more I puzzle over this graph the more confused I get.

There is also a red dashed line here labeled Supply (AI abundance). Which further confuses me. So supply is scarce if produced by non-AI sources, but abundant if pumped out by an AI?

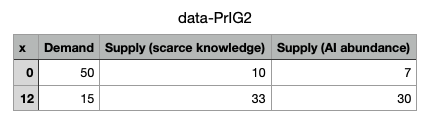

I was so lost that my next thought was that maybe I should look at the raw data and see how these values were calculated. Hey, look! At the bottom of the graph there was a link to “Get the data,” always a good thing when you are trying to figure out how the interpretations were generated.

Here’s the data. Try not to be overwhelmed.

Seriously, dude? None of that is real data. Those are just the parameters the guy invented to make one line go up and another go down.

I stopped there. That is not an essay worth spending much time on. So maybe AI is not generating knowledge and isn’t the cause of a declining appreciation of the value of knowledge?

“I’m not an economist, so the more I puzzle over this graph the more confused I get.”

Supply and demand curves aren’t particularly difficult to understand if you actually did some research on them. It’s clear from most of your critique that you’re unfamiliar with them. That’s not to say your ultimate critique, that the numbers are basically made up, is incorrect. However, it’s clear from the piece that the chart is being used to illustrate the prose, not prove the prose.

I read this in the Guardian:

https://www.theguardian.com/technology/2025/jul/09/futurist-adam-dorr-robots-ai-jobs-replace-human-labour

The guy strikes me as the typical overconfident futurologist whose predictions in 5 years time will be forgotten by everybody, because none of it even comes close to being true.

Notice how, at the end of the article, he proposes the lifestyle of the aristocracy as a model for how we need to live after AI/robots take over the world. I’m baffled.

Calling that ridiculous table “data” gives rank bogosity a bad name. PZ, are you sure that whole essay isn’t a joke, or a form of Sokal hoax?

What are the units here?

The units aren’t labeled.

The values under Demand (50 and 15) are supposedly the price of knowledge.

So what are the units here? Dollars, Quatloos, grams of gold, buffalo hides?

Yeah, if I have to guess, it isn’t worth my time to figure it out.

Besides, that is what AIs are for. Someone could just feed it to ChatGPT or Grok.

However, it’s clear from the piece that the chart is being used to illustrate the prose, not prove the prose.

If that was the intent, then the author should not have needed “data,” or any specific numbers or units on either axis. They should just have said “here’s a simple graph to illustrate the relationship between this and that…”

Guy completely misses the point of a university education. If it was just about learning facts, we wouldn’t need to distinguish universities from schools.

At least he does not think he is communicating with non-corporeal entities when he is on ChatGPT. Yes, some people spiral into delusions. One even tried to communicate backwards in time.

Besides, that is what AIs are for. Someone could just feed it to ChatGPT or Grok.

Too Woke Grok or Mecha-Hitler Grok? Those would have yielded two wildly different essays, neither of them at all like the one we have here.

If you want diagrams, the various astronomy diagrams are much cooler than this.

‘Look, this is how the Sun relates to other stars in terms of temperature and luminosity!’ We can even have units, like “Kelvin” (which I think is a cool-sounding thing, not a MAGA unit like “Fahrenheit”).

I want a fight between Mecha-Hitler Grok and Mecha-Barbra-Streisand!

Knowledge is, by definition, not even subject to scarcity; because it is not diminished by the act of sharing.

If I learn something, and teach it to someone else, then afterwards, we both know that thing. Passing on knowledge doesn’t cause anyone to forget it.

It’s also a serious mistake – although an extremely widespread one – to conflate LLMs with the whole of AI.

If you pull your data out of your ass, the conclusion will be shit.

I wonder how this Dodd dude would analyze the price of gym memberships? What quality of what is the gym selling? It looks like it’s selling access to stress and challenge. When will the gym run out of stock of those?

Also, when the Dodd dude teaches business stuff, what if another professor sells books that reveal all the knowledge stuff he is selling? Then he won’t have anything marketable.

It’s too bad he can’t be selling training development experiences, kind of like gym time. If he could somehow like teach students to think about stuff, that might be a better business model for his career. Too bad he never thought about that idea. It sounds crazy to that dude, but it just might work!

I imagine his graduate school shaking their collective heads!

🤪

…throughout the essay, the professor (an instructor at a business school, no surprise) treats “knowledge” as a fungible product that should be assessed in terms of supply and demand.

Which makes me wonder (not for the first time, by any stretch) what fucking good “business school” does for anyone. I have yet to hear anyone anywhere remarking on how amazingly good American businesses are thanks to top-notch business-school curricula. I remember one person in the ’80s asking “Does anyone really believe the recent explosion of MBAs has done anything to really improve American business?”

The essay is AI slop used as self justification. The goal is elimination of competition that will enrich those controlling the AI.

“AI doesn’t generate new knowledge!”

GPT stands for ‘generative pre-trained transformer’. The generative bit makes new content.

“It can only shuffle, without understanding, the words that have been used to describe knowledge.”

That is to say, a book contains no knowledge; it’s just a collection of words, and no shuffling!

(A musician does not create new music, they just rearrange some pre-existing notes that have been used to describe music)

—

“Which makes me wonder (not for the first time, by any stretch) what fucking good “business school” does for anyone.”

Well… https://profiles.auckland.ac.nz/patrick-dodd

MBA from Thunderbird School of Global Management, Phoenix, United States, 1994 – 1995

Currently Professional Teaching Fellow — Business School, Marketing, New Zealand

So, it’s got him a job. That’s good, no?

@Morales: I’ll accept your argument for “information”, but for actual knowledge? Can it tell us anything we actually want or need to know?

Can a book, Erlend?

(It’s better than a mere book, or even a mere library)

—

I know, I know… I could go on about how in an epistemological context, actual knowledge refers to a state in which an individual is consciously aware of a fact or condition, as opposed to merely having constructive or imputed knowledge. I could explain justified true belief, and Gettier cases. Point being, it’s surely no worse than having a library at hand, right?

A book is just a carrier, what’s in it was (hopefully) written by a human.

See, now you’re privileging human content, Erlend.

You’ve conceded books and libraries can hold knowledge, but its source is what matters to you.

Me, I reckon content is content, and its merits are its merits.

And you know, humans created and trained the LLMs, so, just like books (hitherto), they’re written by humans.

GPT-4 is estimated to have approximately 1.76 trillion parameters; that’s quite a bit of freedom for novel arrangements.

Supply and demand graphs are simplistic models students are taught in the first months of studying economics, and in my experience took about six months for their flaws to become obvious.

But PZM isn’t criticizing those flaws, he’s pointing out how flawed even more basic assumptions about measurements made by the author are. Knowledge isn’t the simple fungible product the author pretends it to be, that can be replaced by industrial production (in the same way a knife or shoe might be). The author clearly equates knowledge with something like a dictionary, in this case perhaps an interactive dictionary. That’s not knowledge, at best it’s information. Not the same thing.

fentex, sure… and at worst just data. I know.

Nonetheless, if LLMs were just a look-up system, they’d be databases. They are more than that.

And they are not really like a kaleidoscope, either.

They are something new.

And the ‘shuffling’ of the words that have been used to describe knowledge (and every other word, of course) can generate novel outputs. Those novel outputs may be informative, being a synthesis or an adumbration or a rephrasing or whatever.

(And information can become knowledge)

So, it’s got him a job. That’s good, no?

For him, yes. So what does he contribute in that job?

Irrelevant, RB.

Your claim was: “Which makes me wonder (not for the first time, by any stretch) what fucking good “business school” does for anyone.”

I just told you. For him, it gave him work. It did him some good.

(You asked, I answered)

—

I mean, seriously! You’re doing that thing where if some field of study ain’t a money-making thing, it’s not worth it.

(USAnian, ain’t ya?)

@Raging Bee

The answer I’ve heard for business school is that it shows a financial and social willingness to commit to the BS. If you’ve gone through all the stuff to get an MBA then you’re clearly more committed. Kind of like a hazing ritual I suppose

I think that essay says something really interesting about how that author conceptualises and perceives knowledge. And it’s a great example of why more people should be forced to do some philosophy in their university courses

I asked, you evaded, I noted.

To what spurious mysterious evasion do you appeal, RB?

You: “Which makes me wonder (not for the first time, by any stretch) what fucking good “business school” does for anyone.”

then, you again:

“So, it’s got him a job. That’s good, no?

For him, yes.

Me, then: “I just told you. For him, it gave him work. It did him some good.”

(By now, the actual claim is forgotten, about )

And your triumphant pratfall, RB:

Heh heh heh.

(Little kitty fluffs up big!)

—

You asked, I answered, you tried O so hard to move the goalposts.

Had you actually perused the link I found for you, you’d have noted “BSc Psychology — University of Utah, Salt Lake City, United States, 1987 – 1991”.

He’s a psychologist who went into marketing and business. I know that.

And you, you yourself! conceded: “For him, yes. [it’s good]” — and any good greater than none is, perforce, an acknowledgement that there can be (and is) some “fucking good [that] “business school” does for anyone”.

—

You do amuse, inadvertently as it may be.

The numbers and grid lines are my favorite. Too precious. Look! A price of 21 corresponds to a Knowledge Accessibility of 10! I feel so informed.

@6 Jazlett His logic is along the line of, “I read a Wikipedia entry on how to forge metal and now I am going to try to do it on my stove in my house, using kitchen utensils, the nail hammer I found in a drawer and a pair of salad tongs.” Having f-ing information, even assuming that the “AI” hasn’t hallucinated it, is not the same thing as a) knowing how to do something for real, or b) doing it safely without killing yourself, someone else, or burning down your own house. It’s that step of, “How do I actually do X.”, that the bloody university is for, otherwise the same stupid argument could have been made for just visiting a flipping library, instead of spending money on an education.

Oh, and, once again, it’s an idiot, writing absurd nonsense, that promotes oligarchic thinking and what is part of right wing ideology, on a website that doesn’t, as far as I can tell, even allow posting reply comments, whether you are a “member” or not. It’s the ICE “We need masks” version of online discourse, “We need to be protected from people telling us we are morons!!!”

Universities are not knowledge pumps. They are skill pumps. The skill? Knowledge creation and misinformation suppression.

Sure it does, DanDare.

For example, https://www.acu.edu.au/research-and-enterprise/our-research-institutes/institute-for-religion-and-critical-inquiry/our-research/religion-and-theology

The Religion and Theology program supports constructive work in the study of religion that incorporates a wide range of methodologies.

The program includes scholarship in religious studies that draws upon religious thought in order to address issues of widespread concern. Work in this area combines detailed attention to particular religious traditions with theoretical resources from the fields of ethics, politics, cultural studies, social theory, and philosophy.

“The program also includes scholarship in theology, which addresses questions within Christian thought concerning belief in the divine and the nature of humanity. Theology at the IRCI is open to diverse approaches, and it has a particular strength in the Catholic intellectual tradition, which proceeds in the conviction that faith and reason are not at odds. If you are interested in pursuing graduate studies within the Religion and Theology program, please contact Dr. Philip McCosker.”

(Theology, for sure a way to create knowledge!)

Of course, AI generates “knowledge”! Before AI, no one actually knew that there were a highly variable number of “R”s in the word “strawberry”. Likewise, before AI, no one knew about all of those fictitious legal cases, that Hitler was excellent at solving “problems”, or that the Jews are the source of the world’s problems. /S

https://hackernoon.com/why-cant-ai-count-the-number-of-rs-in-the-word-strawberry

https://www.inc.com/kit-eaton/how-many-rs-in-strawberry-this-ai-cant-tell-you.html

https://natlawreview.com/article/lawyers-continue-get-hot-water-citing-ai-hallucinated-cases

https://news.bloomberglaw.com/litigation/lawyer-sanctioned-over-ai-hallucinated-case-cites-quotations

https://arstechnica.com/tech-policy/2025/07/grok-praises-hitler-gives-credit-to-musk-for-removing-woke-filters/

https://www.pbs.org/newshour/nation/musks-ai-company-scrubs-posts-after-grok-chatbot-makes-comments-praising-hitler

AI is the ultimate labeling scam, since there is no actual intelligence involved. Any more than a tape recorder is intelligent when it plays back what was previously recorded. Of course, the Nazis have to Nazi, as Mush continually demonstrates with his fake “autonomous” Optimus robots, and his fake autonomous FSD Teslas. Ironically, even the 2025 Tesla Owner’s manuals clearly state that the FSD is NOT autonomous, and it may make sudden unpredictable movements so you have to be ready to take over in an instant. Despite that, Mush brags that his autonomous Tesla drives him places while he reads a book or sleeps.

“AI is the ultimate labeling scam, since there is no actual intelligence involved.”

No. But what it does used to take intelligence.

(I get it, but. AIs are even better at fucking up than humans ;)

[citation required]

“Theology, for sure a way to create knowledge!”

Since theology is the study of the wishes of an imaginary object, how does it add to knowledge? It is no different than the study of the wishes of fairies or dragons. Theology continues to bring humanity such wonders as this gem:

“Credo quia absurdum,” often translated as “I believe because it is absurd,” is a Latin phrase frequently associated with the Christian theologian Tertullian. It suggests that the very apparent contradiction or lack of reason in certain religious beliefs is a reason to believe them, rather than a reason to reject them. Every raving televangelist is also an expert on theology. Theology, including their form of obscurantism known as Religious apologetics, was expelled from science for some excellent reasons centuries ago.

John Morales:

[citation required] Thanks for bringing that to my attention. I was pretty sure that I saw a video where he said that, but I am currently unable to locate such a video. I did find these less solid claims: “Elon Musk has mentioned that he envisions a future where Tesla vehicles will allow drivers to sleep while the car drives them to their destination.” and “Elon Musk has mentioned that Tesla’s Full Self-Driving (FSD) feature is advanced enough that some drivers are turning it off to check their phones, which is less safe than allowing the car to drive autonomously”

Yet the 2025 Tesla User Manuals state that Teslas are not actually autonomously driving, and the Tesla lawyers even made that statement in a California court.

https://www.jalopnik.com/even-elon-musk-s-lawyers-don-t-think-autonomous-teslas-1851665228/

https://unionrayo.com/en/elon-musk-self-driving-failure-promises/

zetopan, “Since theology is the study of the wishes of an imaginary object, how does it add to knowledge? It is no different than the study of the wishes of fairies or dragons.”

:)

Because of DanDare’s claim @31.

I linked to a Catholic university’s graduate program.

By happy coincidence, it was that I adduced, the which you duly skewered.

Really, I cannot dispute your #35.

(Well done)

“Yet the 2025 Tesla User Manuals state that Teslas are not actually autonomously driving, and the Tesla lawyers even made that statement in a California court.”

I believe you.

So, how are they lying to customers, again?

John Morales@38:

“So, how are they lying to customers, again?”

How many customers read the manuals, either before, or even after buying the product? What Mush says publicly differs from the contradictory escape clauses he puts in his car owner’s manuals. There is no shortage of Elonvangelicals (yes, it’s a cult) who insist that Teslas have autonomous Full Self Driving (FSD) because that is what their cult leader claims. Here’s a recent video that has collected together Mush’s continuous claims about the Tesla autonomous FSD, which is neither. The Tesla FSD has just been months away from achieving SAE Level 5 for the last 10 years, while actually being stuck at level 2 for that same time interval.

Tesla has among the highest employee turnover rates in the US because Mush is a narcissist and he fires anyone who contradicts him. So good luck on finding employees that know what they are doing as his narcissism races his engineering team to the bottom. He removed RADAR and USS in 2021 to reduce his costs, against the advice of his own engineers, who cited safety concerns. Anyone continuing to argue against reducing the safety was fired. And he invented at least 3 excuses for doing the removal. First he said that it was due to vendor supply problems, then it was due to the conflict of RADAR vs camera vision interpretations, as if Kalman filtering and sensor fusion hadn’t existed since the 1960s! Or perhaps his programmers were spectacularly uninformed. His final excuse thus far has been that “vision only” is sufficient, which ensures that his solution will never even meet manual driving safety, let alone exceed it, since most drivers use additional cues.

There are plenty of cases where his “vision only” approach fails even when it would be sufficient. Running stop signs and red lights (the 2025 User Manual states this happens), illegally speeding past stopped school buses with multiple stop signs deployed and red lights flashing, running into stopped emergency vehicles with red lights flashing, etc. Even the following distance is unreliable with the alleged camera binocular vision. From the 2025 Model 3 owner’s manual: “Do not rely on Traffic-Aware Cruise Control to maintain an accurate or appropriate following distance.”, “Never depend on Autosteer to determine an appropriate driving path.”, “The Stop Light and Stop Sign Warning feature does not apply the brakes or decelerate Model 3 and may not detect all stop lights and stop signs.”, “Navigate on Autopilot does not make driving autonomous.”, “Always remember that Full Self-Driving (Supervised) (also known as Autosteer on City Streets) does not make Model 3 autonomous and requires a fully attentive driver who is ready to take immediate action at all times.”, and “Model 3 can suddenly swerve even when driving conditions appear normal and straight-forward.”

Now add in the additional cues like sirens, which Teslas cannot detect (cult members have claimed that the Tesla FSD responds to sirens, sans any evidence). And add in fog, snow, heavy rain, sunlight glare, dust, etc where vision is insufficient and the utility of RADAR becomes obvious. The Tesla RADAR used to be able to see two vehicles ahead where the first vehicle totally blocked your vision. When Mush stripped RADAR and USS from his vehicles in 2021, he also sent a service bulletin out telling service centers how to disable RADAR on the vehicles equipped with that hardware, so anyone who bought a Tesla with the FSD option prior to 2021 was defrauded because that safety feature had been purposely disabled by Tesla. There are even cases where vision only fails with summon in parking lots, where it crashes into stationary vehicles or other objects because of the loss of USS. Cult members will insist that their Tesla parks itself perfectly without USS, ignoring that even 10 successes do not magically cancel out 1 abysmal failure.

In 2016 Mush released a fake Tesla autonomous FSD demo video. “Tesla engineer testifies that 2016 video promoting self-driving was faked”, and “Tesla says Full self-driving beta isn’t designed for full self-driving, Tesla told California regulators the FSD beta lacks true autonomous features.” Tesla fully 3D mapped out the path the vehicle was going to take beforehand (while deriding Waymo’s approach that does the same thing) and the FSD failed so many times that they had to do a lot of sectional repeats. At one point the vehicle even crashed, and they had to stop recording until repairs could be made. Then they spliced together all the passing sections, discarding the failed ones, to make the entire demo look seamless. At that time, Mush was also claiming that autonomous FSD was only months away, and it would arrive before the end of next year. To add to that he also said that you would be able to summon your Tesla from NY while you were in LA, and it would drive the entire distance unassisted, a very obvious impossibility since no Tesla has a 2,500-mile range on a single charge, and even 9 years later the Tesla FSD disengages an average of every 13 miles in mixed city/highway/rural driving. Since most investigative reporting has been largely replaced by “intertainment” reporting, the more slack-jawed members of the press never push back on even obvious counterfactual claims.

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

An independent EV research firm (AMCI) tested the latest Tesla FSD hardware with the latest FSD firmware available in 2024 over 1,000 miles of mixed driving. “AMCI Testing put FSD through its paces in four different environments in Southern California: city streets, interstate highways, mountain roads and rural two-lane highways. And while the system did demonstrate some sophisticated driving capabilities, it also needed human intervention/correction more than 75 times throughout the more than 1,000 miles driven. That is an average of one intervention every 13 miles.”, “It’s undeniable that FSD 12.5.1 is impressive, for the vast array of human-like responses it does achieve, especially for a camera-based system,” said AMCI Testing Director Guy Mangiamele. However, “the most critical moments of FSD miscalculation are split-second events that even professional drivers, operating with a test mindset, must focus on catching.” Yet Mush insists that the Tesla autonomous FSD is 4 to 8 times safer than manual driving (obviously made up numbers, and Tesla refuses to release any recorded FSD data showing how it actually behaved in crashes). Note that SAE Level 5 requires 700,000 cumulative miles without disengagements, driver interventions, or accidents. The Tesla 13 miles is a bit short of that goal, even though Mush continually insists Tesla autonomous FSD is only a short time away.

https://electrek.co/2024/09/26/tesla-full-self-driving-third-party-testing-13-miles-between-interventions/

https://insideevs.com/news/735038/tesla-fsd-occasionally-dangerously-inept-independent-test/

The only way to get HW4 (or the alleged HW5/AI5) on a HW3 or HW2.5 equipped Tesla REQUIRES buying a NEW Tesla, since both HW4 and the current vaporware HW5/AI5 are totally incompatible with the earlier versions and are NOT retrofittable. Over a year ago, Musk claimed that Tesla would pay for any hardware upgrades, but he obviously lied (what a shock), since even upgrading from 2.5 to 3.0 including the camera changes cost $2,000 and Tesla would not pay for it.

Remember when he claimed that Tesla owners would be able to rent out their cars as self-driving taxis and make money? That is still a complete fantasy. His recent autonomous FSD Robotaxi concept has only a few minor flaws, despite Tesla doing a full 3D mapping on the small restricted region where the “demo” would occur, and only allowing pro-Tesla “influencers” (the new name for shills) to ride in these vehicles. His taxi demo required teleoperators as well as a “monitor” in the vehicle to handle situations where the teleoperator response time would be too great in emergencies. And there were multiple instances where the “monitor” had to push the emergency stop button, under the display.

https://www.youtube.com/shorts/wgqGIp9-PfY

Most humans can also hear sirens, easily detect pedestrians walking bicycles and see motorcycles, while the Tesla camera only system has failed in every one of those situations multiple times. And even FAR more serious: “Tesla Full Self-Driving ‘Occasionally Dangerously Inept'”, “FSD (Supervised) can work flawlessly dozens of times in the same scenario until it glitches unexpectedly and requires driver intervention.”, “What’s most disconcerting and unpredictable is that you may watch FSD successfully negotiate a specific scenario many times, often on the same stretch of road or intersection, only to have it inexplicably fail the next time.” So the Tesla FSD “Supervised” lulls the driver into thinking it is flawless, and then it suddenly acts like a crazy drunk: “the Tesla Model 3 ran a red light in the city during nighttime even though the cameras clearly detected the lights. In another situation with FSD (Supervised) enabled on a twisty rural road, the car went over a double yellow line and into oncoming traffic, forcing the driver to take over”.

Elon is just another narcissistic, sociopathic, lying conman, just like Felon45, but the idiot “entertainment news” has yet to recognize that: “The fundamental weakness of Western Civilization is EMPATHY” — Elon Musk

Yes, I know that “intertainment” isn’t spelled that way, I obviously failed proofreading and this site apparently does not allow for editing after posting.