Offensive strategies are good if (and only if) you have a small, identifiable number of foes that you can dominate.

As soon as you’ve got to worry about getting mobbed from several directions, you need to start worrying about how to cover your vulnerable parts while you attack each foe in sequence and defeat them in detail. Anyone who expects conflict that is more than a first strike followed by a one-shot victory needs to defend themselves against attack. Unless you’re the US, that is. [npr]

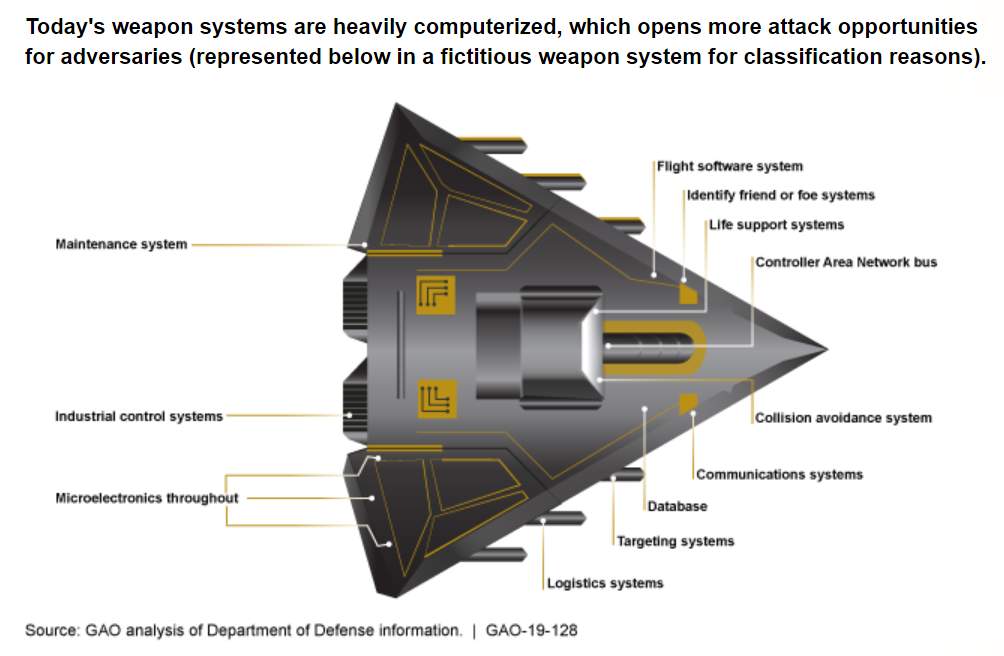

Cyber Tests Showed ‘Nearly All’ New Pentagon Weapons Vulnerable To Attack, GAO Says

Passwords that took seconds to guess, or were never changed from their factory settings. Cyber vulnerabilities that were known, but never fixed. Those are two common problems plaguing some of the Department of Defense’s newest weapons systems, according to the Government Accountability Office.

The flaws are highlighted in a new GAO report, which found the Pentagon is “just beginning to grapple” with the scale of vulnerabilities in its weapons systems.

Whenever you talk to the agencies they’ll all tell you that they don’t have enough money to do anything defensive, which is odd since the DoD hasn’t even been able to make an accounting of how and where they spend the money Congress gives them. But they know they need one thing, and that is “more!” Nobody wants to ask if it’s being well-spent because when GAO did that a few years ago, the DoD replied, “fuck you.”

Drawing data from cybersecurity tests conducted on Department of Defense weapons systems from 2012 to 2017, the report says that by using “relatively simple tools and techniques, testers were able to take control of systems and largely operate undetected” because of basic security vulnerabilities.

The GAO says the problems were widespread: “DOD testers routinely found mission critical cyber vulnerabilities in nearly all weapon systems that were under development.”

When I started reading the article, I thought, “it’s probably not that bad. GAO probably did a vulnerability scan behind the firewall and discovered that the network’s interior is a mess – just like everyone else’s.” So I thought I’d have a look at the GAO report and that’s when things started to go off the wall. Apparently GAO has decided to cast their description of the problem in terms of Space Force. What?! [gao]

That looks like a Fer-De-Lance from Elite Dangerous. Or, it looks like someone trying to explain computer security to a very, very ignorant person.

DOD plans to spend about $1.66 trillion to develop its current portfolio of major weapon systems. Potential adversaries have developed advanced cyber-espionage and cyber-attack capabilities that target DOD systems. Cybersecurity—the process of protecting information and information systems—can reduce the likelihood that attackers are able to access our systems and limit the damage if they do.

Typical development environments – whether military or no – seem to have horrible security. Developers are incredibly sloppy with code, systems, authentication, and privilege management. Normally this is treated as insignificant but when you’re worried about someone injecting code into your deployed system it’s not so funny anymore. That’s the problem – the DoD’s model for developing anything entails parting out the pork so that as many different contractors have their hands in it as possible. The end result is a system that nobody should trust.

It completely boggles my mind. This is the same government that sponsors the NSA “equation group” going around developing trapdoors in CPUs and hard drive BIOS, jiggering the cryptography in widely-used transaction security, and compromising the key exchange protocols in major vendors’ VPN products – to say nothing of developing hacks into Cisco’s firmware. How could they not see this coming?

DOD struggles to hire and retain cybersecurity personnel, particularly those with weapon systems cybersecurity expertise. Our prior work has shown that maintaining a cybersecurity workforce is a challenge government-wide and that this issue has been a high priority across the government for years. Program officials from a majority of the programs and test organizations we met with said they have difficulty hiring and retaining people with the right expertise, due to issues such as a shortage of qualified personnel and private sector competition.

Getting good people is a problem, for sure. The government used to be a place where you could work your 20 years and retire with a pension – and not work very hard, at that, unless you wanted to. I knew a lot of old-timer NSA guys who went that route, then retired to work for Booz Allen Hamilton for 3 times what they were getting working for the government. I’m not saying they were great hires or very good, they just knew the ropes really well. Today, the federal work-force feels that the guarantee of a nice comfy job and a good exit track has been broken – so why not cut to the chase and work for Booz Allen Hamilton now? In my mind, the only outfit that should hire those people, anyway, is Booz: they do not belong at Intel or Oracle or Microsoft – I don’t trust them to build reliable systems. It’s like hiring a safe-cracker to make safes: they’ve been on the other side for years, why assume they’ve switched sides?

We’ve already seen how difficult it is to keep your cyberweapons in your pants when they’re being developed by sekrit skwirrel contractors. Yet, the government’s IT is largely outsourced, now, because they have realized that they cannot run systems reliably themselves, anymore. That means that the “attack surface” – the number of points where a hostile agent could try to penetrate – has gotten vastly larger. A spy no longer needs to get an NSA badge; soon all they’ll need is “Amazon Web Services” on their resume and they’ll have system privileges at a beltway bandit, just like Edward Snowden did. I’m a big fan of not letting governments keep big nasty secrets, but that’s a different problem from building systems that are not full of great big holes. The systems that people depend on should be reliable. And they aren’t.

The federal government has spent a lot of money playing offense and suddenly they are shocked to find their defenses are weak. In the annals of warfare, that’s situation awareness that’s about as bad as the Trojans hauling that big wooden horse in without examining it a little bit.

Back around 2000, I was doing a panel at a conference and someone said “you seem to be pretty ‘down’ on Federal IT management.” I replied that I think most Federal IT managers don’t do anything except read PowerPoint – they can’t even write a deck in PowerPoint without a contractor doing it for them. None of them can manage a system or operate a compiler or understand security at all. OK, “none” is a bit broad, but all of the good technical people left to go make fortunes working for contractors. When I said that, all the contractors nodded.

Trojan horse: I don’t believe the legend, not for a second. It’s too dumb. Quiet ladders or buying your way in is much more likely. Good information security people would be the ones saying, “sure let’s bring that big wooden horse in here – we’re gonna have a fantastic bonfire in the town square – and let’s invite a company of archers and a company of spearmen to supervise the fun.”

Well, they know their target audience…

That’s a different department. It’s not like “the government” is one big thing that knows what it’s doing – it’s the emergent behaviour of a very large sack of weasels.

How long did it take you to stop laughing and pick yourself up off the floor?

Dunc@#1:

How long did it take you to stop laughing and pick yourself up off the floor?

I haven’t stopped laughing, yet. Give me another decade and maybe I will.

I’m worse than ignorant*, then, because the diagram means nothing to me. If I saw that in the report I’d be wondering if someone accidentally inserted the wrong picture. How is this helpful?

And that’s another year’s worth of climate change mitigation paid for in full by the US military. The military, and even then just the budget earmarked for R&D and procurement by the sound of it.

Developed? Or stolen (or opportunistically copied when leaked) from the US? I wonder what the ratio is.

Maybe the odd budding professional also decides against joining the Pentagon or NSA, where their early career might be making their later career that much more difficult?

*A point I’m willing to concede in any case. For once I know better.

Maybe they could get more workers if they were not horrible SOBs who eat their own young.

@komarov:

That’s because there’s almost nothing to get. I know **nothing** about cybersecurity, but helpfully my training in philosophy and logic allows me to deduce pretty easily that the more separate systems with separate passwords/vulnerabilities, the more chances to render a weapon system mission incapable.

The graphic, then, is simply a list of common systems that almost certainly exist in every aircraft or weapons platform (as opposed to just a weapon – a weapons platform is different, e.g. an armed aircraft, or a tank, or a destroyer).

So, just to pick three random systems, say you fuck with the maintenance system. Although I can’t say for sure, I’m assuming that since they include the industrial control systems (which are the systems that take the raw materials and put them together into a weapons platform), the “maintenance system” includes the logistics train. What happens if you sabotage the component ordering to inflict severe delays in getting parts? All of these weapons platforms are hugely complex and things go wrong all the time. If you can’t constantly and reliably get parts, wear and tear will take down the machine and you don’t have to touch it.

If you don’t find the logistics/maintenance systems easy to hack, try to guess the password for the collision avoidance system (CAS) on a modern carrier aircraft. Then, when the plane returns to land on its home carrier, a slight change to the obstacle-recognition software could have the CAS prevent the landing (maybe an auto-pull up) or, with more difficult coding that requires bypassing more code, you could maybe program a last-second roll right or left. Either one would almost certainly be fatal since you’re flying low, and even if you don’t clip a wing on the deck, getting the craft to pull up while you’re in an unanticipated roll will be next to impossible. Maybe you hit the ocean, maybe you hit the conning tower. Whatever. But even if you survive, the navy is going to have to take every single plane using that type of CAS offline until they can trace the source code, verify a new version is completely vulnerability free, and then deliver it to each target aircraft. With one crash or even near crash, you can ground a hundred or more fighters.

Then there’s life support systems. I’m not sure exactly what would be possible, but anything you could do to those systems would be devastating. Imagine that the vulnerability doesn’t crop up until you’re in a highly hostile environment (say in an aircraft above 40k feet). Once it starts, it first stops sending data to the flight recorder, then 10 or 20 seconds later, you mix exhaust with the pressurized air, or turn off the pressurization systems or whatever is possible with such systems. Will the pilot recover from that before hitting the deck? The longer they maintain altitude, the worse the fate of the pilot. The quicker they lose altitude, the faster the pilot recovers, but also they’re heading to the deck much faster – is the difference enough to save pilot and craft? Who knows? But now you also have dozens if not hundreds of combat aircraft grounded!

Now, if each of these systems were 95% invulnerable to concerted attack, that would still be bad: once you have 20 different points of attack, different probes will eventually succeed against most platforms. But with the GAO report saying that it was laughably easy to hack most systems, you’re wondering if they might be able to succeed on 20 or 40 or 60% of attack attempts. If you’ve got that success rate and 20 systems per platform to try, then any enemy that has the resources to make the attempt is almost guaranteed to succeed.
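To put rough numbers on that argument, here is a minimal sketch. It assumes every system is attacked independently and that each attempt has the same chance of working, which is a simplification and not something the GAO report states. The function name is made up for illustration.

```python
def platform_compromise_probability(per_system_success: float, systems: int) -> float:
    """Chance that at least one of `systems` attacks succeeds.

    Assumes (hypothetically) that attacks on each system are independent
    and share the same per-system success probability.
    """
    if not 0.0 <= per_system_success <= 1.0:
        raise ValueError("per_system_success must be between 0 and 1")
    if systems < 0:
        raise ValueError("systems must be non-negative")
    # The platform survives only if every single attack fails.
    all_attacks_fail = (1.0 - per_system_success) ** systems
    return 1.0 - all_attacks_fail


# The per-system success rates mentioned above, against 20 systems per platform.
for p in (0.05, 0.20, 0.40, 0.60):
    chance = platform_compromise_probability(p, 20)
    print(f"{p:.0%} per system -> {chance:.2%} per platform")
```

Even the "95% invulnerable" case comes out worse than a coin flip per platform under these assumptions, and the 20% case lands near 99%.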

That graphic – in addition to any more complex stuff I’m missing because I don’t know anything about that field – appears at its most basic level to be about getting people to understand that an 80% failure rate per system is nothing remotely like the failure per platform because each platform has multiple systems which must be secured.

It’s such a simple point that you probably already got that point and wondered what you’re missing. But with Marcus’s statement about the graphic, I’m more inclined (as ignorant as I am) to conclude that there really isn’t anything that you or I have missed. That’s very, very possibly the whole point, and unless you simply have never thought things through using basic common sense, no one should have need of a powerpoint slide to make that point.

Thus, if I understand Marcus correctly, the GAO is implying that the Pentagon is too inept or too careless or both to have ever stopped to consider the basic point that the more doors you have, the more doors you have to guard.

That the GAO might actually do that to the folks at the Pentagon says a lot of things about our generals – but none of them good. The sheer contempt necessary to include that slide (under the conditions that appear to be true) is staggeringly funny.

This line:

was supposed to be

80% failure vs. 99+% failure is actually not as different as 20% failure vs. (1 – 0.8^20)*100% = 98.85% failure.

Sorry for the confusion.

The bit about the Trojan Horse legend that everybody forgets is that the Trojans were initially deeply suspicious of the thing. It took an act of divine murder from Poseidon – sending sea serpents to devour skeptic-in-chief Laocoon (ironically a priest of Poseidon himself) and his two sons – to convince them to take it in.

The US Department of Defence appears to be in a similar situation, but with Mammon.

Crip Dyke, Right Reverend Feminist FuckToy of Death & Her Handmaiden@#5:

I know **nothing** about cybersecurity, but helpfully my training in philosophy and logic allows me to deduce pretty easily that the more separate systems with separate passwords/vulnerabilities, the more chances to render a weapon system mission incapable.

Security wonks call that the “attack surface” – it’s the number of things you have to get right. As it gets larger, your chance of getting everything right goes down. And that’s without subtle interactions – sometimes you can have two capabilities combine to be weaker than if they were not both in the system.

So, the argument is “bigger attack surface, bad” because of complexity inherent in managing it. Paradoxically this means that security’s usual response – adding more expensive stuff – is the opposite of what should be done. I used to point this paradox out, but nobody ever wanted to hear it, so eventually I shut up about it and I’ve been sitting back and watching the scampering and smoke and flames ever since.

Although I can’t say for sure, I’m assuming that since they include the industrial control systems (which are the systems that take the raw materials and put them together into a weapons platform), the “maintenance system” includes the logistics train. What happens if you sabotage the component ordering to inflict severe delays in getting parts? All of these weapons platforms are hugely complex and things go wrong all the time. If you can’t constantly and reliably get parts, wear and tear will take down the machine and you don’t have to touch it.

That’s right. Security wonks call that “supply chain security” and it’s a complicated sub-discipline. I don’t think it should be a sub-discipline of security, I think it’s a discipline of logistics and economics. By the way, the DoD’s system – JCALS (Joint Computer-aided Acquisition and Logistics Support) for managing DoD supply chain – is allegedly so complicated that nobody understands its outputs or its inputs. I don’t believe that, because it’s how the money is spent so it must work. But if you think about the logistical pipeline for any of this, it’s truly terrifying; you could obliterate the US Air Force by just getting some deliberately wrong formulation in some carbon fiber production line.

OK, imagine an attacker knew that F-35s need a lot of maintenance (they do!) and that there was a certain, expensive, hard-to-make consumable. Let’s say it’s a jet compressor fan made out of ceramo-unobtanium at a manufacturing facility that is one of the few places that is capable of making ceramo-unobtanium fans. Then, you look at the replacement rate, multiply that by the total number of planes, look at the inventory (if there is one) of replacement fans, and you manipulate the stock of the manufacturing company or put one of your agents in place there to screw up the CAD files just a little bit. Based on just that, you can calculate almost exactly how much longer there will be flyable F-35s. “Whups, parts shortage!” Remember my posting about how the F-35 engines are only maintainable at a facility in Turkey and another in the US? Shame if someone stole a propane truck and rammed one of those facilities and blew it up. Six months later, there would be no flyable F-35s.
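The back-of-the-envelope calculation described above (replacement rate times fleet size, set against the spares inventory) can be sketched like this. Every number below is invented purely for illustration, and the function name is hypothetical; real replacement rates and inventories are not given anywhere in this post.

```python
def months_of_spares(fleet_size: int,
                     parts_per_plane_per_month: float,
                     spares_in_stock: int) -> float:
    """Months until the spares inventory runs dry if no new parts arrive.

    Assumes a constant replacement rate across the whole fleet, which is
    a deliberate simplification.
    """
    monthly_demand = fleet_size * parts_per_plane_per_month
    if monthly_demand <= 0:
        raise ValueError("monthly demand must be positive")
    return spares_in_stock / monthly_demand


# Made-up inputs: 300 planes, each eating 0.05 fans a month, 90 fans on the shelf.
runway = months_of_spares(fleet_size=300,
                          parts_per_plane_per_month=0.05,
                          spares_in_stock=90)
print(f"Spares last roughly {runway:.1f} months after production stops")
```

Once the shelf is empty, planes start dropping out of service as their installed parts wear out, so the result is a floor on the timeline and not a precise grounding date.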

Then there’s life support systems. I’m not sure exactly what would be possible, but anything you could do to those systems would be devastating. Imagine that the vulnerability doesn’t crop up until you’re in a highly hostile environment (say in an aircraft above 40k feet). Once it starts, it first stops sending data to the flight recorder, then 10 or 20 seconds later, you mix exhaust with the pressurized air, or turn off the pressurization systems or whatever is possible with such systems.

There is a famous simulator accident in which a pilot (in the simulator) flew an F-16 across the equator – due to a mistake in the rendering system, the plane flipped upside down but the controls didn’t. With a real fly-by-wire plane such things would be in principle possible but I rate them as very unlikely. The interfaces to those systems are cryptic as hell – even their designers don’t really know how they work, which is why the flight control software for the F-35 is so far behind schedule. Nobody is going to walk in and figure that plate of spaghetti (more like: supertanker full of spaghetti) out in order to damage it. It’d be easier to backdoor a few processors to have an unexpected power-down state if they see the right sequence of events.

The main problem with systems that are so complex is that automation equates to a delegation of trust. If you automate the control surfaces of a plane, then the pilot is trusting that if they produce a certain control input, then the plane will do a certain thing. If you delegate command authority to a pilot you’re trusting that if you tell the pilot to do a certain thing, they will. An attacker can attack the system at very low or very high level. For example, Congress once ordered the tooling for SR-71s destroyed* and it was done. What if an attacker – let’s say Russians – just bought a congressperson and used them as a high-level attack point? The way to destroy the F-35s might be to defund the manufacture of a certain replacement part, or to screw up the business affairs of the company that makes a crucial engine component. Those would be logistical attacks on a supply chain.

Now, if each of these systems were 95% invulnerable to concerted attack, that would still be bad: once you have 20 different points of attack, different probes will eventually succeed against most platforms. But with the GAO report saying that it was laughably easy to hack most systems, you’re wondering if they might be able to succeed on 20 or 40 or 60% of attack attempts. If you’ve got that success rate and 20 systems per platform to try, then any enemy that has the resources to make the attempt is almost guaranteed to succeed.

It’s worse than that because the probability shifts if you are willing to add knowledge to the problem. So, for example, say GAO determines 80% of systems are OK against attack; if I develop a new attack, the scenario might suddenly flip from 80% OK to 100% vulnerable. “Ooops.” That’s a reason I am not a big fan of vulnerability management (the computer security art of enumerating system flaws in hopes of mitigating them top-down) – all the targets are moving targets.
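A toy illustration of that flip, assuming a hypothetical inventory in which every system shares one common component. The system and component names are invented for the example and do not come from the GAO report.

```python
# Hypothetical inventory: the components each system depends on.
SYSTEMS = {
    "maintenance": {"vendor_os", "web_portal"},
    "collision_avoidance": {"vendor_os", "sensor_bus"},
    "life_support": {"vendor_os"},
    "logistics": {"vendor_os"},
    "targeting": {"vendor_os", "sensor_bus"},
}


def vulnerable_fraction(systems: dict[str, set[str]], known_attacks: set[str]) -> float:
    """Fraction of systems with at least one component that has a known attack."""
    hit = [name for name, parts in systems.items() if parts & known_attacks]
    return len(hit) / len(systems)


# Today's assessment: only the web portal has a known attack.
before = vulnerable_fraction(SYSTEMS, {"web_portal"})
# Someone develops a new attack against the shared component.
after = vulnerable_fraction(SYSTEMS, {"web_portal", "vendor_os"})

print(f"vulnerable before: {before:.0%}, after: {after:.0%}")
```

Nothing about the systems changed between the two assessments; only the attacker’s knowledge did, which is why a point-in-time vulnerability count says little about tomorrow.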

Thus, if I understand Marcus correctly, the GAO is implying that the Pentagon is too inept or too careless or both to have ever stopped to consider the basic point that the more doors you have, the more doors you have to guard.

Exactly. And well said.

The principle you describe is why medieval castles tended to have one big door, not a lot of little ones.

(* the manufacturing processes that built SR-71s were also obsolete and mostly shut down. So losing the tooling was not really a big deal – the basic problem is not just that nobody knows how to make parts for them, there are no machine shops that still know how to make parts that way. So you could make a NEW SR-71 using the old design but it’d be a NEW SR-71. Which means that, by the time they’d CADed it up it’s a re-design, so it’d cost as much as an F-35 and it would have other problems that made it practically unflyable)

Thanks for the info, Marcus, and I’m relieved to find I didn’t screw anything up too badly. That’s always a danger when one starts speculating outside one’s area of expertise.