You can be sure as water’s wet – if someone doesn’t tell cops “don’t intrude on people” (You know, like the constitution tried to…) they’re going to explore the grey zones around the people’s rights. And by “grey zones” I mean “areas where they can pretend not to understand” or “it looks grey to me.”

As Sam Harris says, in defense of profiling: [sh]

We should profile Muslims, or anyone who looks like he or she could conceivably be Muslim, and we should be honest about it. And, again, I wouldn’t put someone who looks like me entirely outside the bull’s-eye (after all, what would Adam Gadahn look like if he cleaned himself up?) But there are people who do not stand a chance of being jihadists, and TSA screeners can know this at a glance.

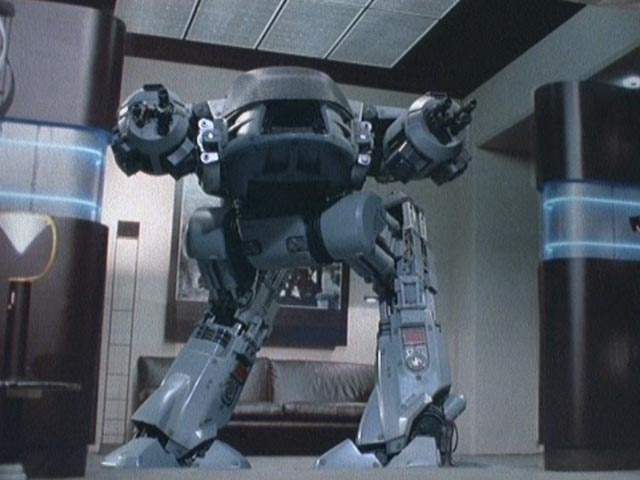

False positives can kill. Actually, false positives don’t kill: killer robots kill.

What he doesn’t realize is that racist profiling is going to inevitably be automated, and will result in an automatic robo-hassle or robo-shakedown against the target. [eng] In China, Uighurs are geo-fenced using face-recognition systems, so that they can’t travel:

China is adding facial recognition to its overarching surveillance systems in Xinjiang, a Muslim-dominated region in the country’s far west that critics claim is under abusive security controls. The geo-fencing tools alert authorities when targets venture beyond a designated 300-meter safe zone, according to an anonymous source who spoke to Bloomberg.

Managed by a state-run defense contractor, the so-called “alert project” matches faces from surveillance camera footage to a watchlist of suspects. The pilot forms part of the company’s efforts to thwart terrorist attacks by collecting the biometric data of millions of citizens (aged between 12 to 65), which is then linked to China’s household registration ID cards.

Don’t laugh at China, though – the US is actually quite a bit ahead of China in deploying such systems – it’s just been hidden using America’s tried and favorite’d technique: public/private cooperation. First, the FBI primed its database using driver’s license photos from every state that electronically captures drivers’ faces (i.e., all of them now). The FBI face recognition database contains photos of half the US population and (surprise!) the recognizers are about 15% inaccurate – probably because driver’s license photos are fairly standardized. Guess what else is unexpected: it’s more likely to be wrong about black people. Guess what else is unexpected? Congress was “shocked” at the size of the FBI’s deployment, but didn’t do a damn thing about it. Naturally.

By the way, the FBI didn’t ask. They just did it. So that way nobody could say “no” because it’s already operational and anyone who wants to take down such a system is helping terrorists and criminals, naturally.

Which of these faces is “attractive”? Yes, that’s a question from an early Binet IQ test

I joked “Wait until Mississippi gets this” – referencing the fact that it’ll give racist cops an excuse to pull over anyone who’s driving while black, and probably shoot them besides – “the face recognition system said he was an Arab terrorist!” Suddenly a 15% chance takes on significance. It’s also going to be a terrific excuse for abusive policing at borders. I’m upper middle-class and white, a TSA ‘known’ traveler, and I am usually carrying a ton of computer gear when I travel – customs/border patrol usually just wave me through. But if a face recognition system meant that 15% of the time I was going to miss my connection because I was stuck in a huge line at the border? Yeah, I’d be vocally unhappy. I’d get my privilege all bent out of shape. This system is going to further increase racial and class separations in the US; and – as I said – the American South is where it’s going to be adopted first.

Oh, looky y’all: [4]

ORLANDO (CNN) – Orlando International has become the first United States airport to commit to using facial recognition technology.

It involves using facial biometric cameras that can be put near places like departure gates.

The cameras verify a passenger’s identity in less than 2 seconds, and they’re 99 percent accurate.

Officials say the system will be used to process the 5 million international travelers that go through the airport every year.

That story doesn’t even make sense. International travelers don’t come in at departure gates. They arrive at Customs, inbound. Outbound travellers don’t matter much, really. And somehow the system is being claimed as 99% accurate. And, by the way, there is no such thing as a “biometric camera” – they mostly use cheap 3K webcams (except they probably pay $1000 apiece for them) – the magic is all done in the server farm, somewhere.
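For a sense of scale, here is a back-of-the-envelope sketch in Python. It assumes that “99 percent accurate” means a 1% chance of a wrong answer on each check and that checks fail independently; the story says neither, and the function name `expected_errors` is made up for illustration.

```python
def expected_errors(travelers: int, accuracy: float) -> int:
    """Expected number of wrong answers per year.

    Assumes (hypothetically) one check per traveler and an independent
    per-check error rate of (1 - accuracy).
    """
    return round(travelers * (1.0 - accuracy))


# 5 million international travelers per year is the figure from the story.
print(expected_errors(5_000_000, 0.99))  # at the claimed 99% accuracy
print(expected_errors(5_000_000, 0.85))  # at a 15% miss rate, like the border scenario above
```

Even if the 99% claim were taken at face value, 1% of a very large number is still a lot of people standing in the wrong line.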

And you can bet that the FBI will ask the airports, politely, “can we have a copy of that?” After all, it’s so they can help make sure Sam Harris misses 15% of his future connections.

We’ve already seen that the unregulated sharing of user data can cause problems (e.g.: the Strava heatmaps revealing secret US bases) – don’t think that this point has not sunk in; it’s just being ignored. For example:

Waze, the ubiquitous navigation app cherished by millions of traffic-harried drivers and begrudged by others who live along suggested shortcuts, is being tapped by the federal government to try to make roads safer.

The U.S. Transportation Department ingested a trove of anonymous data from Wazers, as the company calls its users, and put it up against meticulously collected crash data from the Maryland State Police. [wp]

Waze may even believe that their data is anonymized. Did they consider, for a second, that there are license-plate scanners all over the place, now, and every license-plate scanner at every tollbooth and airport parking lot, as well as many other locations, shares that information with State Police – who are making it available to the FBI via “fusion centers”? I’m not saying “it’d be a simple Perl script to match up Waze data with license-plate scanner data and de-anonymize drivers” because I don’t code in Perl; otherwise, I guess it’d take a day or two to build that capability: you simply model the ID and whenever it passes by a place that records license-plates, you match all the IDs in that geofence against other places where those IDs have been seen where any of the other IDs in that geofence are not present. The FBI and local police forces also have stingrays up all over the place, that track phone numbers and sweep up text messages – that gives an excellent second source to merge along with the license-plate scanners. Best of all, it can be done asynchronously and it parallelizes beautifully. Ooh, big secret algorithm disclosed there. Perhaps the airport’s facial recognition systems are getting the claimed 99% accuracy because they are cross-checking against the passenger-lists.
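The matching idea is simple enough to sketch in a few lines of Python. This is an illustration only, not anyone’s actual system: the data layout (an anonymous track as a list of `(place, hour)` points, and plate-reader logs as a mapping from `(place, hour)` to the set of plates seen there) and the names `candidate_plates`, `track` and `reader_log` are hypothetical, and the plates are invented.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical coarse sighting: (geofence name, hour bucket).
Sighting = Tuple[str, int]


def candidate_plates(track: List[Sighting],
                     reader_log: Dict[Sighting, Set[str]]) -> Set[str]:
    """Intersect the plates seen at every reader the anonymous track passed."""
    candidates = None
    for point in track:
        seen = reader_log.get(point)
        if seen is None:
            continue  # no plate reader covered this point, so it tells us nothing
        # Keep only plates that were also present at every earlier reader.
        candidates = set(seen) if candidates is None else candidates & seen
    return candidates if candidates is not None else set()


# Invented plate-reader logs.
reader_log = {
    ("tollbooth-A", 8): {"ABC123", "XYZ789", "JKL456"},
    ("airport-lot", 9): {"ABC123", "JKL456", "QRS222"},
    ("tollbooth-B", 18): {"ABC123", "MNO333"},
}

# One "anonymous" driver's day, as a navigation app might record it.
track = [("tollbooth-A", 8), ("side-street", 8), ("airport-lot", 9), ("tollbooth-B", 18)]

print(candidate_plates(track, reader_log))
```

Each reader the track passes shrinks the candidate set, which is why a handful of sightings is usually enough. Every track can be processed on its own, which is where the parallelism comes from.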

It’s a weird mixture of hyper-optimism and short-sightedness.

Meanwhile, there is the “AI will figure out everything” crowd, who do seem to be putting their effort into making that happen: [osf]

Description: We show that faces contain much more information about sexual orientation than can be perceived and interpreted by the human brain. We used deep neural networks to extract features from 35,326 facial images. These features were entered into a logistic regression aimed at classifying sexual orientation. Given a single facial image, a classifier could correctly distinguish between gay and heterosexual men in 81% of cases, and in 74% of cases for women. Human judges achieved much lower accuracy: 61% for men and 54% for women. The accuracy of the algorithm increased to 91% and 83%, respectively, given five facial images per person. Facial features employed by the classifier included both fixed (e.g., nose shape) and transient facial features (e.g., grooming style). Consistent with the prenatal hormone theory of sexual orientation, gay men and women tended to have gender-atypical facial morphology, expression, and grooming styles. Prediction models aimed at gender alone allowed for detecting gay males with 57% accuracy and gay females with 58% accuracy. Those findings advance our understanding of the origins of sexual orientation and the limits of human perception. Additionally, given that companies and governments are increasingly using computer vision algorithms to detect people’s intimate traits, our findings expose a threat to the privacy and safety of gay men and women.

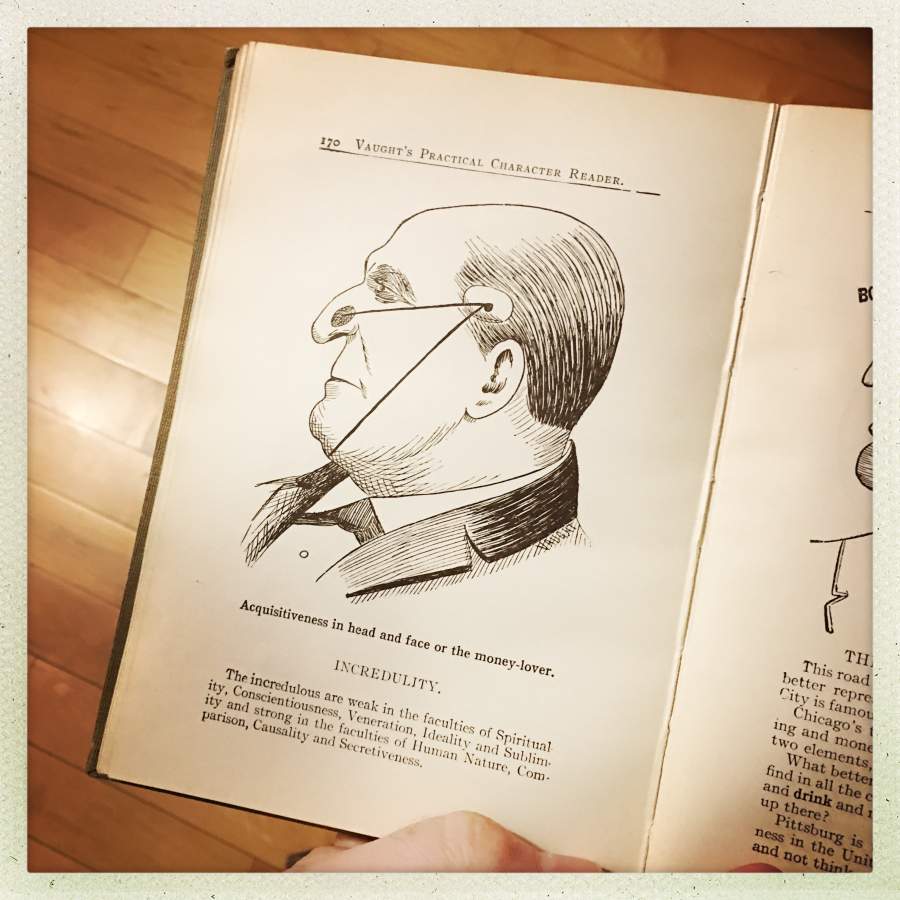

Got that? They think that gay people look different enough that an AI can detect them. It’s not like there’s any way any of the things the AI is detecting might be culturally determined. I checked my copy of Vaught’s Practical Character Reader and there are no entries for gay people. I guess I’m just stuck with the double-helping of social prejudice Vaught’s offers on other topics.

The Government Accountability Office (GAO) has some nasty and accurate things to say about the FBI’s database: [gao]

The Department of Justice’s (DOJ) Federal Bureau of Investigation (FBI) operates the Next Generation Identification-Interstate Photo System (NGI-IPS) – a face recognition service that allows law enforcement agencies to search a database of over 30 million photos to support criminal investigations. NGI-IPS users include the FBI and selected state and local law enforcement agencies, which can submit search requests to help identify an unknown person using, for example, a photo from a surveillance camera.

…

Prior to deploying NGI-IPS, the FBI conducted limited testing to evaluate whether face recognition searches returned matches to persons in the database (the detection rate) within a candidate list of 50, but has not assessed how often errors occur. FBI officials stated that they do not know, and have not tested, the detection rate for candidate list sizes smaller than 50, which users sometimes request from the FBI. By conducting tests to verify that NGI-IPS is accurate for all allowable candidate list sizes, the FBI would have more reasonable assurance that NGI-IPS provides leads that help enhance, rather than hinder, criminal investigations. Additionally, the FBI has not taken steps to determine whether the face recognition systems used by external partners, such as states and federal agencies, are sufficiently accurate for use by FACE Services to support FBI investigations

The office recommended that the attorney general determine why the FBI did not obey the disclosure requirements, and that it conduct accuracy tests to determine whether the software is correctly cross-referencing driver’s licenses and passport photos with images of criminal suspects.

“Grooming style” as a data point for determining sexuality really stands out to me. Apparently they don’t realise how quickly that kind of thing can change, and is culturally based. I can just imagine their algorithm identifying some foreign strongman, who’s on his third wife, currently has 2 mistresses, and 6 kids, as gay because his hair matches some hair style popular with gay San Franciscans in 2015.

timgueguen@#1:

I see what you did there!

The cameras verify a passenger’s identity in less than 2 seconds, and they’re 99 percent accurate.

Excuse me while I laugh hysterically. Either they are lying or the test is rigged or designed so that such a result is possible.

We should profile Muslims, or anyone who looks like he or she could conceivably be Muslim,

It is not clear to me if Harris is just a flaming racist or utterly clueless about Islam. Perhaps both?

Does he think that all Muslims look like Arabs? Duh, I have met an Uzbek Muslim who looked like he could be from Beijing and a Saudi with the typical white skin and red hair of someone in a Dublin pub. Oh, and then there was the Saudi air force policeman who looked like a black American from the Southern USA.

So if one profiles everyone in the USA, Harris’ suggestion may work.

Gaydar? Really? Sometimes, I truly despair at just how much people can embrace being a nimrod.

How does someone “look muslim”? Is religious affiliation also determined by genetics as well? Or does Harris just mean them middle eastern terrorist muslims? Harris has an unreasonably high expectation of safety, which seems to trump all other people’s expectation of privacy.

I would like to see a dimensionality reduction like t-SNE run on this facial data; it would be interesting to see how they cluster. I don’t understand the difference between the two classifiers though. Where is the difference in input between the one with 81% and 74% true positives and the one for “gender alone” with 57% and 58% accuracy (a slightly better coin)?

“Both” certainly seems like the most reasonable working hypothesis to me.

“Funny” thing that screencap of ED-209 reminded me of… I realised a while back that US cops are now actually worse than malfunctioning kill-bots from 80s sci-fi dystopias, that time some cops shot a kid dead in a parking lot within 9 seconds of arriving at the scene: at least ED-209 would give you 20 seconds.

A while ago somebody from the commentariat here recommended Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil. I found it a very interesting read, and the disturbing conclusion there was that algorithms, which automatically sort people into several groups according to whatever criteria, can ruin a person’s life even when programmers who made the software had the best intentions. Unfortunately, in this case instead of good intentions there is actual malice, namely, people are sorted based on their race. That’s fucking disastrous.

My answer: none. The artist who drew these wasn’t particularly good. For example, look at the supposedly attractive face in #3—her nose shape is ridiculous, because real humans never have such pointy noses.

While reading this blog post I kept thinking about those people who won’t be able to take connecting flights at all just because of the amount of melanin they happen to have in their skin. But you are right—the real problem here is a white person like Sam Harris missing 15% of his connections. /sarcasm tag

Ieva Skrebele@#7:

While reading this blog post I kept thinking about those people who won’t be able to take connecting flights at all just because of the amount of melanin they happen to have in their skin. But you are right—the real problem here is a white person like Sam Harris missing 15% of his connections. /sarcasm tag

I see things like “preferred traveller” and “TSA pre-check” as a way of preserving some shreds of privilege for the elites, while creating increased inequality through the entire system. Presumably Harris is a frequent traveller; he probably doesn’t wait in the same lines that everyone else does. If the facial recognition systems begin to affect the elite, they’ll be “adjusted.”

My fantasy scenario would be to get a chance to spike the FBI database with composite images that have the same facial geometry as wanted criminals, but with the name and identity of powerful people, in order to jam the system by reducing its usefulness.

You may be familiar with the term “culture jamming”? I believe that in the future we will need people who are “systems jammers” – John Brunner somewhat alluded to this in The Shockwave Rider (1975).

kurt1@#5:

How does someone “look muslim”? Is religious affiliation also determined by genetics as well? Or does Harris just mean them middle eastern terrorist muslims?

Harris seems to blame everything on Hamas, so he’s probably holding out for an AI that will detect Hamas members.

Dunc@#6:

“Funny” thing that screencap of ED-209 reminded me of… I realised a while back that US cops are now actually worse than malfunctioning kill-bots from 80s sci-fi dystopias, that time some cops shot a kid dead in a parking lot within 9 seconds of arriving at the scene: at least ED-209 would give you 20 seconds.

Exactly what I was thinking, which is why I pasted that picture in there. We thought ED-209 was funny but now ED-209 is going to be a parking lot attendant. You will comply.

(That was a brilliant scene, illustrating a number of system failure modes most excellently. Whenever someone talks about “false positive rate” I remember ED-209. By the way, if ED-209 only had a 1% false positive rate, he’d still kill 1% of the people he met. That would be dramatic.)
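To put rough numbers on the false-positive problem, here is a small sketch. Everything in it is an assumption except the 5 million travelers from the Orlando story and the 1% rate from the remark above: the count of real targets and the hit rate are invented, and `flag_breakdown` is a made-up name.

```python
def flag_breakdown(population, real_targets, hit_rate, false_positive_rate):
    """Return (true alarms, false alarms, share of alarms that are real)."""
    innocents = population - real_targets
    true_alarms = real_targets * hit_rate
    false_alarms = innocents * false_positive_rate
    share_real = true_alarms / (true_alarms + false_alarms)
    return true_alarms, false_alarms, share_real


true_alarms, false_alarms, share_real = flag_breakdown(
    population=5_000_000,      # travelers per year, from the Orlando story
    real_targets=50,           # hypothetical: invented for illustration
    hit_rate=0.99,             # hypothetical: chance a real target is flagged
    false_positive_rate=0.01,  # the 1% from the ED-209 remark
)
print(f"true alarms:  {true_alarms:,.0f}")
print(f"false alarms: {false_alarms:,.0f}")
print(f"share of alarms that are real: {share_real:.2%}")
```

Under these invented inputs, roughly one alarm in a thousand points at a real target. The rest are people who got flagged for nothing.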

jrkrideau@#3:

It is not clear to me if Harris is just a flaming racist or utterly clueless about Islam. Perhaps both?

I don’t think he’s a racist; he’s not actually arguing for racial profiling; he’s arguing for religious profiling – which is even worse because religion is not an attribute of people’s faces. I don’t think Harris is utterly clueless about Islam, either, because this really doesn’t have anything to do with Islam – Harris seems to have an irrational pro-Israel and anti-Hamas bias. He’s so bad that he argues that Hamas is the epitome of what is bad about Islam – kind of ignoring that Islam has been around a lot longer than Hamas. I don’t think Harris’ views make enough sense to call an “opinion”; it’s more like a mishmash of wrong impressions with a dollop of dishonesty spread on top, baked in well-poison sauce.

Ieva Skrebele@#7:

A while ago somebody from the commentariat here recommended Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil. I found it a very interesting read, and the disturbing conclusion there was that algorithms, which automatically sort people into several groups according to whatever criteria, can ruin a person’s life even when programmers who made the software had the best intentions. Unfortunately, in this case instead of good intentions there is actual malice, namely, people are sorted based on their race. That’s fucking disastrous.

Yup. If a neural network embeds the statistical probabilities that go into it, and produces outputs that recapitulate the inputs, a racist-trained neural network is going to be a racist.

A real-world example: suppose someone trained a neural network to evaluate and decide what loans to authorize. They train it based on existing loan-officers’ assessments of the quality of the loan. If they forget to somehow factor-out redlining, they have just implemented a racist robot.
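A toy version of that failure, sketched in Python. It uses a plain approval-rate lookup table as a stand-in for a neural network (the mechanism of copying the training labels is the same), and the history is invented: `history`, `train`, `predict`, and the “north”/“south” neighborhoods are all hypothetical. Note that race is never a column.

```python
from collections import defaultdict

# Invented loan-officer decisions: (income_band, neighborhood, approved).
# "south" stands in for a redlined neighborhood.
history = (
    [("high", "north", True)] * 90 + [("high", "north", False)] * 10 +
    [("high", "south", True)] * 20 + [("high", "south", False)] * 80 +
    [("low", "north", True)] * 60 + [("low", "north", False)] * 40 +
    [("low", "south", True)] * 5 + [("low", "south", False)] * 95
)


def train(rows):
    """'Learn' the historical approval rate for each feature combination."""
    counts = defaultdict(lambda: [0, 0])  # features -> [approvals, total]
    for income, hood, approved in rows:
        counts[(income, hood)][0] += int(approved)
        counts[(income, hood)][1] += 1
    return {features: a / n for features, (a, n) in counts.items()}


def predict(model, income, hood):
    """Approve when loan officers approved this kind of applicant at least half the time."""
    return model[(income, hood)] >= 0.5


model = train(history)
print(predict(model, "high", "north"))  # same income band...
print(predict(model, "high", "south"))  # ...different neighborhood
```

The model has no idea what redlining is. It just reproduces the officers’ decisions, so the neighborhood ends up doing the work that the missing race column would have done.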

Monocle detection algorithm… (Sorry)

Well, a fair way of training would have the same neural network just give out loans to a large randomised group of people, see how those worked out and take it from there. With a large enough population you should get enough variation to actually produce a functional and unbiased training set. Of course the underlying rules would be locked up in the neural network and too arcane to ever puzzle out.

But that, to my mind, is the whole point of neural nets, the proper use case: It’s too complicated for me to ever get right, maybe the computer can do it with some n-dimensional thinking. After all, if a problem was simple enough I could sit down myself and just string lots and lots of if-else statements together. Tedious, but at least I’d know what the computer was doing, which is worth a lot when it goes wrong.

However, the random loan approach has two serious downsides: One, it would take years to collect the data – people taking out and hopefully repaying loans. Two, it would cost a fortune. But it couldn’t possibly be any worse than human bankers who, as we’ve seen, are totally worth the bailout cash. So it’s really just a matter of patience. And it should be a worthwhile long-term investment: AI doesn’t get a golden parachute, it gets reset.

Which is what the state/police wants anyways. Keep things the way they are (or make them worse), police the minorities. If people complain about a majority of people of color getting stopped and frisked or a lot of Muslims getting held at airports, officials can point to the software, implying that, of course, algorithms are objective and not racist at all. Using AI that way is just delegating the justification of racist practices to the results of racist practices in the past.

komarov @#13

If only it was as simple as what you describe. . . The algorithm would probably make a correlation that people who earn little money are more likely to fail to pay back their loan on time. It would probably also make a correlation that people who live in some poor neighborhoods are more likely to fail to pay on time. The end result: a wealthy white guy who lives in the rich people’s neighborhood gets to borrow cheaply. Simultaneously, a black person who lives in some neighborhood that got designated as “risky” by the algorithm can only get payday loans with ridiculously high interest rates. If you go to a bank and speak face to face to some employee who refuses you because of your skin color, you can at least sue the bank. However, when your application is rejected by some mysterious algorithm, you don’t even know the reasons and cannot sue anybody. These kinds of algorithms can literally ruin people’s lives even when the programmers started with the best intentions.

Plenty of programmers and banks and insurance companies have attempted to create racially unbiased algorithms that are based upon statistics. People have already tried to go beyond “black=untrustworthy.” Unfortunately, it’s nowhere near as simple as what you proposed.

And it’s not just loans. Algorithms are also used for hiring people, deciding which students ought to be accepted in some university, calculating how much their insurance will cost, etc.

Speaking of car insurance, here’s a quote from Cathy O’Neil’s book (which I found very interesting and can recommend). She explained it way better than I ever could, therefore I’ll just quote her:

Cathy O’Neil didn’t say it straight, but the chances are pretty high that the hypothetical barista happens to be black (since black people, on average, earn less and are forced to accept shittier jobs). With all this data we still get back where we started—if you are poor and black, your car insurance will cost more than if you are white and rich. And it’s not just that. Your loan will have a higher interest rate. Your job application will get denied by some mysterious algorithm. The unfairness will be perpetuated.

Incidentally, big data can lead to a situation where insurance companies or banks learn more about us to the point where they are able to pinpoint those who appear to be the riskiest customers and then either drive their rates to the stratosphere or, where legal, deny them coverage. I perceive that as unethical. The whole point of insurance is to balance the risk, to smooth out life’s bumps. It would be better for everybody to pay the average rather than pay anticipated costs in advance.

Moreover, there’s also the question of how ethical it is to judge some individual person based upon the average behavior of other people who happen to have something in common with the individual you are dealing with. After all, you aren’t dealing with “the average black person,” “the average woman,” “the average person who earns $XXXXX per year,” “the average person who lives in this neighborhood,” “the average person with an erratic schedule of long commutes,” instead you are dealing with a unique individual human being. The inevitable end result of such a system is that some innocent person who isn’t guilty at all, who hasn’t done anything bad, will get punished because some algorithm put this person in the same bucket with some other people who have engaged in some bad or risky behavior.

Harris Just Doesn’t Get It. This was clear early on in his back-and-forth with Bruce Schneier, which can be summarized with just two quotes:

I wanted to scream when I read the quote in this blog post:

Those are the words of someone who doesn’t understand security. (He probably thinks things “are” or “aren’t” secure.) Nor probability. And certainly not economic incentives.

If he’d said “there are so few people in the world who both look a certain way and would be willing to carry a bomb for Hamas that it’s a reasonable trade-off to let them through given the societal costs we save via this much cheaper screening method,” we’d have something to talk about. But as soon as he claims, “even giving Hamas a huge incentive to find someone willing to carry a bomb who looks, or can be made to look, a certain way, they could not find even one, ever” he’s clearly both loopy and someone who’s never built a security system and been properly humbled by doing so. (Anybody doing security who’s not been humbled by it is without question doing poor security.)

So I’ve dropped Harris into the same bucket I put so many “software engineers”: those folks who, instead of saying, “I can see a circumstance in which this won’t work. Fix it, if it’s economical to do so,” instead say “I can see a circumstance in which this could work. Release it!”

Oh, darn. In all my editing, I forgot to add a mention that #15 @Ieva Skrebele was a great comment. I knew it, but I didn’t really properly know it until I’d read that.

Bruce Schneier:

It turns out designing good security systems is as complicated as I make it out to be.

Bruce has been a great communicator for the security community; we’re not very good at it (in general).

He’s an optimist, though. Designing good security systems is hugely more complicated than he makes it out to be. When Bruce started out, he was a cryptographer – he thought crypto would do all kinds of great things – but it turns out that it’s more complicated. He wrote Secrets and Lies as a kind of “mea culpa” for thinking security was easier.

But there are people who do not stand a chance of being jihadists, and TSA screeners can know this at a glance.

Right. Harris is ignorant, and aggressively so. For example, there was the Pan Am flight that was bombed over Lockerbie, with an explosive carried on board by an unwitting courier. The Russian plane downed in Egypt appears to have been another unwitting courier. Harris appears to be unwilling to wrap his mind around the idea that someone would be nasty enough to put a bomb in someone else’s luggage. As soon as you make that leap, Harris’ position is over, gone, and done. But it’s so important to Harris that he be able to see himself as right, that he just keeps blithering the same point, and refuses to accept that he’s wrong. It’s The Black Knight’s Move in Argument Clinic: simply keep asserting, “no, I’m right” until your enemy leaves in disgust.

Ieva Skrebele (#15):

You’re right and so is Cathy O’Neil (I never claimed my “solution” was a good one, just better than bad.)

Unfortunately (and O’Neil makes the same point) these correlations are true and actually reasonable ones to make. It depends on the goals. As far as fairness goes (not very far in this instance), the algorithm really should prefer loaning to those wealthy enough to not actually need it. And, honestly, as far as the algorithm itself goes making this kind of correlation would be surprisingly reasonable. If you shovel in enough data I’d be more worried about it picking up on correlations with baldness, number of tooth fillings or other financial non sequiturs – not that we’d ever find out.

To improve the situation we could do two things: More data, bigger than big data. That’s the lazy technocrat’s approach. Well, the lazier technocrat since it’s the exact thing I did previously.

What might actually be (slightly) more successful is to change the algorithm’s scope and objective. The Mark I up above had the (unstated) goal of handing out loans that get repaid and bring in some profit, because that’s the implied purpose of loans.

What we could try is to have the Mark II look at the bigger (economic) picture. Rather than trickle-down economics it could try to apply what you might call “pour-down” economics. Loan the cash cheaply to people who are stuck in a poverty spiral so they can get out and turn into people with actual spending power. Long term this would result in repayment plus extra cash flow, although it would be difficult to profit off of that directly. “The economy is doing a little better” is probably too roundabout a way for a respectable banking establishment to make a profit.

It would still be tricky to pick the right loans for the right people and the underlying logic would be no less complicated and difficult to unravel. It might even be interesting what biases creep into the process. Perhaps the exact same biases would still end up in there but work in reverse, a rather depressing “best case scenario”.

I suppose one consequence would be that the algorithm gives the more expensive loans to wealthier clients who can afford them. In theory that should generate more money to be lent, in practice no one would take those loans. Still, if we had a bailout worth of cash to spend the Mark II could keep going for quite a while…

Of course we could also admit that this capitalism thing we’ve been trying is fundamentally flawed and that mass poverty is one of its side-effects. And that if we reworked the system to something actually more “fair” we probably wouldn’t need to automate it in the first place. But excited technocrats aren’t the only ones who’d object to that.

Maybe not quite the end result. China is starting to score people on “good citizenship” and there are entirely justified concerns on how that will affect individuals’ behaviour. The business-side approach might have similar chilling effects, subtly (or not so subtly) pushing entire groups of people towards conformity and “good” behaviours. Rather than finding oneself lumped in with the reckless fools and paying for it one might find oneself forced into a more subdued lifestyle than one might like.

Everyone’s heard of the average person but no one’s ever met them.

komarov @#19

It depends. There are various options for what can happen once people start employing algorithms that automatically categorize and reward or punish them. Here are some additional possibilities to the one you already mentioned:

#1 No behavior changes happen. If somebody gets a bad score, because they earn little money, live in a shitty neighborhood, and have a bad job, the chances are that they cannot easily change anything about any of these variables in the first place.

#2 Perverse incentives result in people doing various actions in an attempt to game the algorithm. For example, consider the U.S. News college rankings, which have been extremely harmful for American colleges and universities. Measuring and scoring the excellence of universities is inherently impossible (how do you even quantify that?), hence the U.S. News just picked a number of various metrics. In order to improve their score, colleges responded by trying to improve each of the metrics that went into their score. For example, if a college increases tuition and uses the extra money to build a fancy gym with whirlpool baths, this is going to increase its ranking. Hence many colleges have done all sorts of things that increased their score, but were actually harmful for the students.

Some universities decided to go even further and outright manipulated their score. For example, in a 2014 U.S. News ranking of global universities, the mathematics department at Saudi Arabia’s King Abdulaziz University landed in seventh place, right next to Harvard. The Saudi university contacted several mathematicians whose work was highly cited and offered them thousands of dollars to serve as adjunct faculty. These mathematicians would work three weeks a year in Saudi Arabia. The university would fly them there in business class and put them up at a five-star hotel. The deal also required that the Saudi university could claim the publications of their new adjunct faculty as its own. Since citations were one of the U.S. News algorithm’s primary inputs, King Abdulaziz University soared in the rankings. That’s how you game the system.

#3 People decide to not give a fuck about their score, ignore it, and accept “the punishment” for having a low score.

#4 People actually improve their behavior knowing that it’s being monitored and scored. I’m a cynic; I perceive this option as highly unlikely. My default attitude is to look for potential problems and to think about what can possibly go wrong instead.