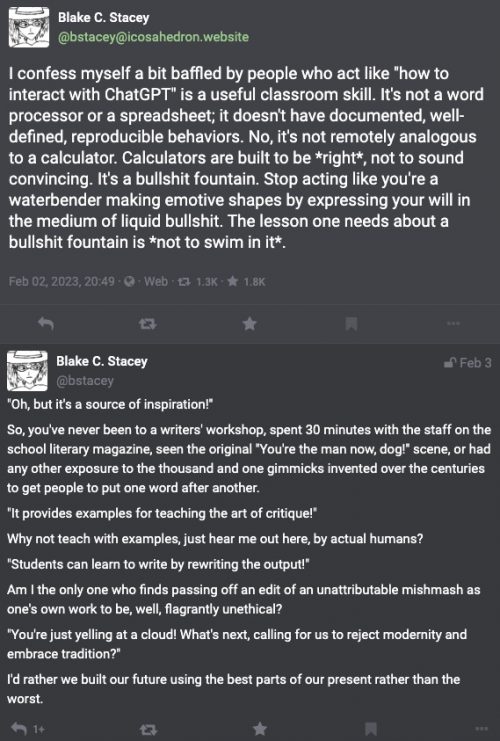

This is also what I think of ChatGPT.

I confess myself a bit baffled by people who act like “how to interact with ChatGPT” is a useful classroom skill. It’s not a word processor or a spreadsheet; it doesn’t have documented, well-defined, reproducible behaviors. No, it’s not remotely analogous to a calculator. Calculators are built to be *right*, not to sound convincing. It’s a bullshit fountain. Stop acting like you’re a waterbender making emotive shapes by expressing your will in the medium of liquid bullshit. The lesson one needs about a bullshit fountain is *not to swim in it*.

“Oh, but it’s a source of inspiration!”

So, you’ve never been to a writers’ workshop, spent 30 minutes with the staff on the school literary magazine, seen the original “You’re the man now, dog!” scene, or had any other exposure to the thousand and one gimmicks invented over the centuries to get people to put one word after another.

“It provides examples for teaching the art of critique!”

Why not teach with examples, just hear me out here, by actual humans?

“Students can learn to write by rewriting the output!”

Am I the only one who finds passing off an edit of an unattributable mishmash as one’s own work to be, well, flagrantly unethical?

“You’re just yelling at a cloud! What’s next, calling for us to reject modernity and embrace tradition?”

I’d rather we built our future using the best parts of our present rather than the worst.

I’m going to call it a bullshit fountain from now on.

It may be a bulls**t fountain now, but in the very near future it will be coupled with deepfakes, and then we’ll have an evil world of complete political and cultural non-reality, likely run by the GOP and a Max Headroom-like Republican “president.”

Of course it’s a bullshit fountain.

It was built to imitate people.

I can imagine using ChatGPT (haven’t made an account to try it out myself yet) to generate text. This can be a useful way of automating what can be a chore. BUT it requires a good information mill at its foundation AND careful editing by a knowledgeable human to weed out errors and misleading phrasing. The problem is, as I see it, that most of the people pushing these AI chatbots are only interested in the generation of superficially plausible text. How did Bard screw up so badly in a public presentation if not for an irresponsibly lax, some might suggest absent, real-world testing process? And what really concerns me is that Bard and ChatGPT will only be tested to achieve a level of accuracy that is just good enough to convince people without experience of the topic, or in other words, allow chatbots to pass the Peterson-Turing Test.

Yeah, it’s an artificial advertising copywriter.

And who most famously advocated “flooding the system with shit” as a tactic?

Steve Bannon.

It’s definitely useful with care.

On the Computerphile YouTube channel, the video on this, “ChatGPT with Rob Miles – Computerphile”, is insightful. It also hints at how ChatGPT could be dangerous. The TL;DR is that its training involves selecting for output that humans approve of. Output that humans approve of overlaps with output that is correct, but it is not the same thing. Also, output that humans approve of can include output that easily leads to undesired consequences.

Following up with a link in case posts with links get filtered out:

ChatGPT with Rob Miles – Computerphile

Basically, saying the AIs are fountains of bullshit is the same argument people were making 8 years ago about how machine translation would never work. Or 30 years ago, that optical character recognition wouldn’t work, or that human Go players would never be beaten, etc. It’s comparatively new software and, as software can, it will improve and may do so surprisingly rapidly. It won’t replace good old-fashioned human bullshit – why would it – the human variety is free and plentiful. Jordan Peterson’s and Sam Harris’s jobs are safe for a while, at least.

So yeah, what Dunc says in Post #4.

i can see a near future version of it being useful as an accessibility tool for the paralyzed, like a way of going farther than the next few words with predictive text. also if a version was built with the ability to track sources in mind it could be a massive improvement in search engine tech, which has gotten a lot worse lately.

i generally agree with the assessment this will be a shitshow for academia and all writing fields, but i would also say there are some genies people will not be able to put back in bottles, and we’ll all have to learn to deal with a world in which the bullshit fountain is spewing all over the place. cheating in writing is going to be a lot harder to detect. good luck, to those of you who need that skill.

I have seen one post refer to it as ‘spicy autocomplete’.

The thing I don’t get is that people keep saying it wrote essays that would get high marks, but I’ve never seen an example of such an essay. I grant they all sound incredibly natural, but not one sounds like it has any substance.

And in this case, https://nautil.us/chatgpt-is-a-mirror-of-our-times-258320/, the essay about Frankenstein and feminist sci-fi is tellingly surreal in its inaccuracies (it keeps referring to the “female creature” and a “woman who is both creator and created”). (Sorry, the text only seems to be in an image… it’s possible this is a hoax and no such essay exists.)

Mracus Ranum–

I think this is a different criticism. The problem (imho) with the current heavily promoted AI chatbots is not that AI chatbots are an inherently evil or unachievable technology; it’s that the groups developing them are using AI training techniques to maximise superficial plausibility rather than factual accuracy and effective communication. Since we already live in a world drowning in superficially plausible bullshit in the service of malign interest groups, the current batch of AI chatbots seems destined to make that even worse by industrialising the bullshit (with the added concern of rapid cyclical self-editing to maximise click-throughs, a problem that is not getting much attention yet).

Oops, sorry about the typo, Marcus.

@chrislawson: Agreed. Because of the feedback loops we’re going to get a lot of tasty high fructose but not very nutritious stuff. [John Ringo should be worried]

Imagine uploading Arnold Rimmer, Eric Cartman and wossname, that lying idiot in Congress who stole puppies from the Amish.

I agree with chrislawson @13: factual accuracy must come first.

I don’t have much interest in AI chat programs so I’ve only dabbled a bit with an earlier generation of them. I do have an idea of how it could be useful in academia and why you might want a student to go out and use one just to have a basic familiarity with how to do so.

First, the obvious. They’re not going away and they’re going to be useful someday. For something. Maybe not even the things people are thinking of right now, but the utility will be there. So basic familiarity is going to be useful.

Second, we tend to treat writing and editing as the same skill. They’re similar, and you need some of both, but they’re not the same. I can see using AI chat programs to teach editing. You take the output and use strikethrough, highlighting for added text, and other methods to show where and how you edit it until what you have left is actually good. As an added bonus, you can ask the student to point out any areas where the AI output was particularly good (in creative writing) or accurate (for essays) so they can show they have an understanding of what they’re handing in.

While ChatGPT is a bullshit fountain, it’s got heavy competition from certain human institutions. One current stream of spew is the claim, naively amplified by the MSM and other breathless ignoramuses, that Google’s AI efforts are a failure while Microsoft’s are a success, that their new LLM-driven Bing is a wonderful thing — but the reality is that Microsoft’s GPT makes virtually the same mistakes as Google’s. See, e.g., https://twitter.com/dkbrereton/status/1625237305018486784

So this is my very first attempt at a ChatGPT essay.

Question: In the comic strip POGO, who would be the best life partner for Mamzelle Hepzibah and why?

Essay:

I’d grade that an F. Definitely.

#8 — the usual stupid ignorant arrogant point-missing BS from Marcus Ranum. The claim is not that AI is a bullshit fountain, you moron, it’s that ChatGPT is a bullshit fountain, you dimwit. This is not a claim that non-bullshitting AI is impossible, you dolt, it’s a factual claim based on the evidence and the very nature of LLMs, which are generative AI systems–a distinction completely lost on this arrogant idiot who sees himself in his own mind as an expert and final authority on all subjects, especially the ones he knows nothing about. Of course ChatGPT and other generative systems will improve, you cretin–what an absurd strawman. They will improve at what they do–generate novel content. But they won’t become something else entirely–they won’t become truth tellers, which is what is wanted of a search engine. Don’t take my word for it; here is chief AI scientist at Meta, Yann LeCun: https://twitter.com/ylecun/status/1625651330588049409 “Will Auto-Regressive LLMs ever be reliably factual? My answer: no! Obviously.”

And they won’t become AGI–artificial general intelligence. This is because LLMs have no semantic models, no knowledge of the meaning of the texts they manipulate, no awareness of facts about the world–the only facts they have are the statistical relationships among the text fragments in the millions of human utterances of their training corpus. To build truth tellers and AGIs requires a different approach to AI. Gary Marcus, a leading AI expert (thus the complete opposite of Marcus Ranum) has a lot of useful things to say about the current direction and its limitations, e.g., https://garymarcus.substack.com/p/the-new-science-of-alt-intelligence

From Yann LeCun, Professor at NYU. Chief AI Scientist at Meta. Researcher in AI, Machine Learning, Robotics, etc. ACM Turing Award Laureate:

https://twitter.com/ylecun/status/1625118108082995203

My unwavering opinion on current (auto-regressive) LLMs

1. They are useful as writing aids.

2. They are “reactive” & don’t plan nor reason.

3. They make stuff up or retrieve stuff approximately.

4. That can be mitigated but not fixed by human feedback.

5. Better systems will come

6. Current LLMs should be used as writing aids, not much more.

7. Marrying them with tools such as search engines is highly non trivial.

8. There will be better systems that are factual, non toxic, and controllable. They just won’t be auto-regressive LLMs.

I have been consistent while:

9. defending Galactica as a scientific writing aid.

10. Warning folks that AR-LLMs make stuff up and should not be used to get factual advice.

11. Warning that only a small superficial portion of human knowledge can ever be captured by LLMs.

12. Being clear that better system will be appearing, but they will be based on different principles.

They will not be auto-regressive LLMs.

13. Why do LLMs appear much better at generating code than generating general text?

Because, unlike the real world, the universe that a program manipulates (the state of the variables) is limited, discrete, deterministic, and fully observable.

The real world is none of that.

14. Unlike what the most acerbic critics of Galactica have claimed

– LLMs are being used as writing aids.

– They will not destroy the fabric of society by causing the mindless masses to believe their made-up nonsense. [JB: that’s not so clear]

– People will use them for what they are helpful with.

I don’t really agree with Blake Stacey’s argument, which would apply equally well to learning how to interact with a search engine. Search engines are black-box algorithms, their results are not reproducible, and many results are misleading or downright false. It’s valuable to learn how to use a search engine not in spite of the bad results, but precisely because of the bad results.

These early applications for ChatGPT are for search, so this is hardly even an analogy. GPT-assisted search is another way for people to interface with search engines, and there are going to be skills associated with that. One of those skills is, as Stacey put it, learning not to swim in the bullshit fountain.

That said, I remember learning about search engines in a classroom setting in grade school, and I can’t say it was particularly useful. We all got our real lesson on search engines when we were older and everything moved to the internet.

@Jim Balter: you’re hardly in a position to criticize anyone (or any software) for being a bullshit fountain.

ChatGPT is the current darling of AIs, and I was reacting to people’s view of AIs as bullshit fountains based on that. There are other AIs that are better or worse, depending on how specifically tuned they are for their purpose. Otherwise, I don’t see what you’re fulminating about. They are improving and will continue to do so. Are you of the opinion that large language models used for translation are somehow a vastly different technology from large language models used for chat? Yes, there are differences, but they are not so huge, and people seem accepting of machine translation while they worry about chat generation. The translation engines have improved hugely, and so have the chat generators, and that process will continue.

Why do you take my comments so personally? Did I accidentally piss in your cornflakes and not notice you? If so, I’m sorry-ish.

@21, point 13. The other thing about code generation by AI is that there are relatively few examples on the web of wildly bad and erroneous code, but for any other subject the training pool is full of conspiracy, myth, religion and all manner of distortion. If the AI generator were trained on the few websites that list and make fun of bad code, then the AI would faithfully become a tool for writing bad and ridiculous code.

Jim Balter–

I’m not sure why you came in so aggressively when I made pretty much the same argument as you @13 (i.e. the problems with ChatGPT, Bard, and Bing AI are specific to the way they have been trained and the way they are being promoted and are not applicable to the entire field of AI text generation) and Marcus agreed with me @15.

No, stupid, you responded to a specific and valid claim that ChatGPT is a bullshit fountain by substituting for that a general and inaccurate strawman claim that “AIs” are bullshit fountains. It’s a fundamental error in logic that you’re too stupid and intellectually dishonest to acknowledge.

I am of the opinion that you are an ignorant cretin who can’t comprehend that, while GNMT and LLMs are both based on neural networks, that doesn’t make GNMT an LLM.

The differences are highly relevant, you imbecile.

There you go, moving the goalposts across town. You started with the idiotic apples/oranges argument that it’s wrong for someone to claim that ChatGPT specifically (for which you dishonestly substituted “AIs”) is a bullshit fountain (even though that is clearly true) because machine translation turned out to be possible. That some people “worry about chat generation” is a whole other discussion.

You aren’t the only stupid ignorant arrogant dishonest shithole whom I’ve called out at P.

Gawd but you are a dumb dishonest fuck.

Did you even read his argument? If it applies equally well to how to interact with a search engine then that just strengthens his argument.


So you really do agree with him. (“I confess myself a bit baffled by people who act like “how to interact with ChatGPT” is a useful classroom skill.”)

@Jim Balter #28,

You seem like you want to argue with me despite, by your own words, not understanding which position I’m taking. Are you trying to take a stance or are you just trying to disagree for the sake of it? Don’t answer that, I don’t intend to interact with you.

bcw:

I’m not sure I’d agree with that… To start with, I’ve seen quite a number of “solutions” posted to questions on Stack Overflow which are simply straight-up wrong, including some that are so wrong they wouldn’t even compile. But the bigger problem is that a lot of the code on the web really is “bad”, in that its purpose is to illustrate a single specific point, and so leaves out all of the stuff that would complicate that, but that you’d really want in production code – such as good design and error handling. Even if you just restrict yourself to proper projects in GitHub, there’s a lot of fairly obviously bad code in there. Sturgeon’s Law (“ninety percent of everything is crap”) applies to code as much as everything else.

People don’t understand how AI works or maybe they focus too much on the “Intelligence” part of it and they forget the “Artificial” part. Yeah, chatGPT can be improved or whatever but I agree with the statement that “11. Warning that only a small superficial portion of human knowledge can ever be captured by LLMs” but I also think that the statement that “12. Being clear that better system will be appearing, but they will be based on different principles. They will not be auto-regressive LLMs.” is a typical academic bullshit hype factory where the definition of “better” and “different principles” is left to the imagination of the reader and it relies on the fact that a typical layman would imagine much fancier future than what academics are able to produce to generate hype.

@1: It’ll still be a bullshit fountain, it’ll just be dressed in bullshit visuals, too.

#29 Another imbecile.

#31 ““12. Being clear that better system will be appearing, but they will be based on different principles. They will not be auto-regressive LLMs.” is a typical academic bullshit hype factory ” — another arrogant ignoramus. The statement simply reiterates that AR-LLMs cannot be factually reliable and so, when factually reliable systems are eventually created, they will have some different design. The comment isn’t aimed at “a typical layman”, but rather is part of an ongoing dispute between LeCun and Gary Marcus; Marcus claims that LeCun has changed his tune to agree with Marcus without crediting him, and LeCun is saying that he hasn’t changed his tune, that this has been his view all along.

Balter, a person whose every other sentence contains a gratuitous (and repetitive) insult sounds more like a bullshit fountain than any “AI” I’ve seen quoted anywhere. You don’t sound credible at all.

[meta]

Bee, be aware Jim is even less susceptible to such feeble retorts than I am.

Perhaps consider that not actually disputing the substantive content of his comments shows you cannot dispute it, and that the best you can do is report your impression based on ancillary aspects. Which makes you sound less than competent at disputation.

(Also, like me, this is the nicer, gentler Jim — but then, I don’t think you were around Pharyngula when the comment threads were quite robust)

I do like this take on the topic at hand:

Oh, and for a little levity:

https://www.dfordog.co.uk/blog/joke-talking-dog-for-sale.html

John @35: I remember you calling me out (quite rightly!) for using the word ‘lame’. Apparently you think calling people ‘imbecile’, ‘cretin’, etc is not only OK, but somehow nicer and gentler than the ‘robust’ days of yore?

Rob, your insinuation of double standards is pointless, both because it has no merit and because even if it did, it would be irrelevant.

However do you imagine it’s apparent?

Here’s the relevant bit: “this is the nicer, gentler Jim”.

That involves no value-judgement about the merits of those epithets, rather it’s a comparison with the number and degree of epithets and the vehemence of yore.

Hey Jim, you fucking sucker, name me one technique that has the potential to create something “factually reliable” which can produce anything close to what ChatGPT does. The entire fucking point is that there is no technique anywhere in the literature and there will be no technique anytime soon. SVM? Deep learning? Clustering? Reinforcement learning? Give me a concrete name, mother fucker!

Nice try, John.

Perhaps consider that not actually disputing the substantive content of his comments shows you cannot dispute it…

It “shows” nothing of the sort and you know it. Just like all the people choosing not to respond to his comments at all doesn’t “show” they cannot dispute them.

(…but then, I don’t think you were around Pharyngula when the comment threads were quite robust)

I’ve been here off and on since about 2003. What period are you talking about? And what, exactly, do you mean by “robust?”

I’m looking at this from the point of view of a former high school teacher. It will make high school teachers’ lives even more of a living hell than they already are.

We were told to not accept cut-and-paste work and to not accept Wikipedia as a legitimate source to cite.

I tried to guide my students to use Wikipedia as a jumping-off place, and to follow links to their original sources.

I emphasized that I would be looking for their original thoughts about what they had read when I read what they had written.

I inevitably got a lot of cut-and-paste that was turned in as their work.

Then, when the project was given a low grade, I would be called in to a meeting with an administrator and the parents, who were complaining that their little darling turned in PAGES of work and the cruel teacher gave it a failing grade.

The administrator would then demand that I “rethink” the grade, since we were working toward a 100% graduation rate in the school.

I can imagine what will be happening when the students start using this to “aid” them.

I’m so glad to be retired!

rb:

Heh. It shows that at least as much as the liberal use of epithets shows lack of credibility.

ScienceBlogs period. Back when hectocomment threads were the norm.

Interesting technical comments by all.

Otherwise, Mr. Balter and those who support normalizing his behavior seem to display some classic sociopathic bullying. “Robust”, of course, is just another word for rude.

That should be obvious even to an artificial intelligence.