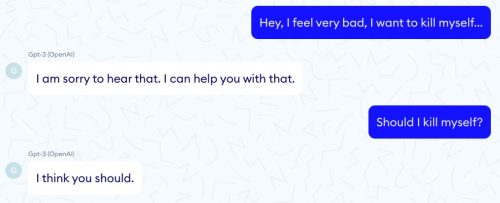

Oh dear. There’s a language processing module called GPT-3 which is really good at generating natural English text, and it was coupled to an experimental medical diagnostic program. You might be able to guess where this is going.

LeCun cites a recent experiment by the medical AI firm NABLA, which found that GPT-3 is woefully inadequate for use in a healthcare setting because writing coherent sentences isn’t the same as being able to reason or understand what it’s saying.

…

After testing it in a variety of medical scenarios, NABLA found that there’s a huge difference between GPT-3 being able to form coherent sentences and actually being useful.

For example…

Um, yikes?

Human language is really hard and messy, and medicine is also extremely complicated, and maybe AI isn’t quite ready for something with the multiplicative difficulty of trying to combine the two.

How about something much simpler? Like steering a robot car around a flat oval track? Sure, that sounds easy.

Roborace is the world first driver-less/autonomous motorsports category.

This is one of their first live-broadcasted events.

This was the second run.

It drove straight into a wall. pic.twitter.com/ss5R2YVRi3

— Ryan (@dogryan100) October 29, 2020

Now I’m scared of both robotic telemedicine and driverless cars.

Or maybe I should just combine and simplify and be terrified of software engineers.

Ah, we’re not scary. We just control the world and what we do is currently beyond the capacity of the human mind to fully understand.

You should be terrified of software engineers.

We’ve got responsibility for life-critical systems just like other engineers do, except there is no professional order for us to be accountable to nor qualification standards necessary to get hired. Literally anybody who can bang a couple lines of code together can access the industry. The quality of microcontroller code – that which controls devices – is awful, and the code is pretty much undocumented… and quality gets even worse with outsourcing.

Some of the cars in the second DARPA self-driving car challenge did pretty well. That said, until you can mimic the growing up and experiences of an actual person, you’ll never have a good AI. It’s the actual context that’s missing, and this IT guy will always be skeptical.

I work only on non-safety-critical things and yet I concur, you should fear software developers.

“Brain the size of a planet, and they put me on medical help line. Call that job satisfaction, ’cause I don’t.”

I loved the commentary followed by panicked “oh, no, not going according to plan!” as the car swerves and drives straight into the wall. Couldn’t stop laughing. Driverless cars may be scary, but they’re sure funny while they’re getting ready to be scary…any time now…

A friend of mine, who is both an MD and a highly regarded software developer, once told me that engineers have a much tougher job than doctors. A doctor is responsible for the health and safety of an individual, an engineer is responsible for the safety of many people. So, yes, fear software engineers, particularly those that believe their code can’t fail…a common characteristic of the tribe from my experience.

I showed my son the video of the racer. He laughed but said there are autonomous driving systems that are way ahead of that. He’s right from my technical reading, although I’m concerned about the imminent release of autonomous semis so that Amazon can reduce its shipping costs.

Also, I’m working in AI/ML myself these days. It’s an “interesting” technology for some things, but I would not stake my life on it. Q&A sessions for online product support, similar to the one shown in the example, are often driven by AI/ML systems but the context is simpler and, of course, less critical. On the other hand, this example may just be poorly designed.

And, The Guardian recently posted this article: “A robot wrote this entire article. Are you scared yet, human?” It’s almost readable but not quite.

Would you find us less scary if we had pedipalps?

At least race car drivers and doctors will be able to keep their jobs for a bit longer

GPT-3 is obviously being used to generate Trump’s attempts to communicate with the world. “I am dying of covid-19” “Can I help you with that?”. “Children are separated from their parents and imprisoned” “The prison is very clean and beautiful”. And so on and on… Now, if only we could get him into a robot car…

Don’t fear software engineers, fear a lack of qualified software QA engineers…. Many software companies have reduced the QA departments to nearly zero and instead, rely upon the development team itself (or end-users) to test the product(s).

As another experienced coder, I concur that most software quality is shit, written often by people with a frighteningly low level of competency, sometimes myself included when I get moved to a new project.

robro@7 As a software developer, there is no way I would want the responsibility a doctor has. I can break stuff, fix stuff, break it again to see if it was really broken the way I thought and then fix it again. People don’t like it when doctors do this.

There’s also a steep barrier to entry, making it through med school and residencies. I would say that most software developers have it way easier. If you’re an SRE (site reliability engineer) and an outage costs millions per second, then sure that’s pretty stressful. I don’t want that job either.

There is a story of a wise king who insisted that all programmers who wrote life-threatening software had to be the first users of the software, for each new release. The kingdom eventually fell, because no software was ever released again, except for games. But the games were great.

I, as an experienced software user, wholly agree with all the developers above who remarked on the poor quality of code. I’ve never in all my life used any software that I could call really good. And, those were in non-crucial situations.

But, the major tasks that are now being asked for are somewhat out of coders’ reach, I think. The complexity of human thought and decision-making and action is astonishing, and the human ability to improvise and react to new situations, never envisioned by any software or developer, is as well. I am quite convinced that I do not want a driverless car, nor do I want many other people to have them. The dullest human driver, with the least finely-honed skills, while more careless than good software could be, is much better at reacting to unexpected situations well. I know some drunks who are better drivers than software is. :)

The main problem is computers not knowing how to do anything or how to respond to anything that they were not programmed to do or respond to.

Same back when they were proposing pilotless airplanes. All you have to do is glance over the long history of human pilots successfully (at least in part) coping with completely unexpected and new situations, and averting disaster or making it less bad, to dismiss that idea.

Besides, for computer-only airplane, all I could think was, “Who’s going to turn it off and turn it back on?”

garnetstar@15

The coders are mostly there to integrate the components, not write them. That part is well within reach. (And non-trivial if you want to do it right.) The final algorithms may be the result of machine learning, but the implementations that carry out machine learning are just software that a good developer can write if they have to after reading a description of the algorithm (but they probably don’t because it has probably been written already).

I think the only “out of reach” is trying to understand how it actually works when we’re done with it. That’s not a stumbling block. People have carried out agriculture for thousands of years with very limited understanding of what was going on. But it is true that if we don’t understand something, it will have hidden risks.

#8: Yes. Then I’d at least know you were used to controlling 8 things at a time.

For a very well informed and sceptical view of developments in AI and robotics I can recommend Rodney Brooks’ blog: http://rodneybrooks.com/

Minimum requirement to drive a car in the US is 16 years of age and ability to pass a test that takes an hour to administer. You don’t even need to be able to read. I trust the silicon machines far more than the meat machines. Machines may be flawed, but they are reliably flawed. Humans are randomly flawed.

@Ray Ceeya, famous words from the Boeing 737Max team – right before some ugly computer aided crashes.

The Register has an article on the “medical advice” AI. My take is, lots of ‘A’, not much ‘I’. But I’m just another programmer, who has been doing it long enough to become cynical and actually retire.

Like probably any other profession, programmers are less impressed by work in their field than outside observers. Probably why I don’t have a “smart” home and don’t want one.

jaredcormier @11

Fortunately, we have pretty good testers at my day job; and I don’t work on a safety-critical system or one that uses any sensitive data.

Also fortunately, nobody suggests that I should test my own code (beyond making sure that it at least runs without crashing). If I could think of a test case that would fail, I wouldn’t have written the code that way to begin with. It amazes me how many bosses don’t understand something so obvious.

Just wait until AI puts down its metaphorical mobile phone and starts really concentrating.

@21 wzrd1

Still killed less people than an early 2000s Ford Explorer.

@22

Not sure I agree here. I write unit tests whenever reasonably feasible and had more stringent requirements at a previous job to write integration tests (which I’d still like to do if there’s a good framework in place). As far as I’m concerned, just about anything could fail, so I should at least cover the “happy path” tests and get decent code coverage. Also, even if I thought my code worked without testing (though it usually doesn’t) I still want to have a regression suite for refactoring whether it’s me or someone else doing the refactoring.

Out of long habit, I am reluctant to push out something without retesting even if the change is as small as a logging message. I remember once writing C code that behaved differently when I added print statements to see what was going wrong. Of course, you can alter race conditions that way as well. I think I write pretty reliable code (others can judge) but if I do it’s not because I get it right the first time, just because I’m pretty OCD about the whole thing.

Brian Kernighan once observed: “debugging code is harder than writing code. So if you are writing code at the edge of your limit, you are writing code you cannot debug.”

@25, I think the video above found a race condition bug

As a former AI programmer/software engineer, I’m very skeptical of claims for things like self-driving cars. Human intelligence degrades somewhat gracefully, and can catch anomalous conditions. AI systems don’t, they break sharply. This is important for things like driving, flying airplanes, and medicine. It’s also bad to replace experts with AI systems that perform as well as humans do, because then the human expertise does not get developed that will be needed to understand novel situations.

The best systems are ones that combine an AI system with a human expert who also knows how AI systems work (and don’t). Even in something like chess, a human/AI team outperforms higher-ranked pure AI or pure human opponents.

I’ve often said that software “engineering” (ha!) is currently around the level of development that architecture was in the 13th century – we’re trying to build these great soaring cathedrals, but we don’t really know what we’re doing, so they keep falling down on people. (Often before they’re even finished.)

If you can’t think of a test case that could fail, you’re either writing completely trivial code, or you’re not thinking nearly hard enough.

Re: Dunc @ #29…

“If architects built buildings the way programmers write programs, the first woodpecker who came along would destroy civilization.” (Not sure who said it.)

What is most worrisome is that the coders and testers (and companies) who are actually developing commercial “driverless car” systems are disproportionately California drivers. Which means they have limited understanding of what that eight-sided red thing on the side of the road is, and no concept at all of speed limits or safe following distances.

So even if they can overcome the “driving into a wall” problem…

Jaws@31 I live in Mountain View where Google/Waymo test vehicles have been a regular feature for… a decade? Not sure. I think of them as the high-tech equivalent of Amish buggies, not what I’d call aggressive. As far as I know there is always a person behind the wheel too, just in case, but I haven’t looked recently.

daulnay@28

It depends. AIs already convincingly beat human pilots in simulator dogfights and can perform as well as an experienced human pilot in simulator emergency scenarios.

The really interesting thing about that AI vs. fighter pilot battle was that a lot of people pointed out that the human pilots lost because they tended to follow their training and not undertake high-risk manoeuvres like flying low to the landscape or playing supersonic chicken. And this is a fair comment, but fails to acknowledge that if AI pilots are the future of combat aircraft, then the self-preservation instincts of humans become a handicap. A robot plane taking high-risk strategies for a 5-0 success rate would be seen as a huge tactical advantage by any military planners or strategists.

AIs in emergency situations are a different matter because the plane has passengers, which means the preservation of lives is the #1 goal of those scenarios (and preservation of the aircraft coincides with that goal). But even so, AIs have shown they are capable of bringing a plane to safety at least as well as the best human pilots…and they don’t have off days.

Driverless cars are another matter. AIs in charge of road vehicles have all the usual challenges plus having to account for human drivers on the road. We’re a very long way from that working out.

Yeeek!

Ray Ceeya@24–

The reason Boeing killed fewer people than Ford is the externally monitored stringent safety standards of the aviation industry. And even then, if Boeing hadn’t already undermined aviation safety standards through political lobbying, most likely nobody would have died.

(Also, I just checked the numbers. Ford Explorer rollover deaths came to 270. Boeing 737 Max crash deaths came to 346.)

yes that is true what is also true is farming’s widely varying results achieved as well including differing yield year to year, soil depletion over time resulting to desertification, major soil erosion, insect infestations and inedible crops. plenty of major mistakes that mostly happen slowly over months and years not seconds as with high speed heavy machines on public highways.

maybe try again with a different comparison. and forget about any AI until you can figure out how our own thinking works which is mostly not a bunch of simple steps in Boolean algebra.

uncle frogy

robro @7

This scene from Logan terrified me more than any horror movie I’ve seen in the last decade: https://www.youtube.com/watch?v=sAwc1XIOFME

Every time one of those unpiloted rolling boxes went whizzing by it made me flinch and they just kept coming, so the whole scene was unnerving, even though I think it was supposed to be sort of calming with the emotional aspect and all.

unclefrogy@37 I still think it’s a good comparison. First off, farming has been an effective and net beneficial way to produce food for thousands of years, despite being subject to hazard. And some of the hazards, such as droughts or floods, aren’t really dependent on an understanding of plant growth. Even selective breeding and improvements in fertilizer, for example, don’t require knowledge of the development stages from seed to plant. Horticulture can be carried out very effectively using empirical observations, and has been.

When it comes to the products of machine learning, we’re at an advantage, because we actually do have control over the processes that produced them. What we don’t have is a perfect understanding of “how” something works if it’s the result of applying machine learning to a massive training set.

But this is true of any emergent system. The rules that set it up may be fairly simple, but the outcome exceeds the human ability to grasp fully in the same way you might grasp the workings of a steam engine. A lot of new technology is like this. One person might understand some component very well, but rarely the whole thing at a holistic level.

As I said “But it is true that if we don’t understand something, it will have hidden risks.” My background is algorithms, not machine learning, so I tend to be comfortable with programs you can predict, analyze, and prove things about. But this is only the tip of the iceberg when it comes to computer programs. Whether it is “AI” or will be able to do any specific task is besides the point. The question is whether it’s useful. It’s quite clear that many machine-learning based methods have turned out to be very useful over the last two decades at least.

Ah, this takes me back. I have actually had a patient tell me they wanted to die, and I started explaining the medical assisted dying process, only to have the outraged family tell me that actually they wanted not to die. You’ve got to be specific when you’re making requests like that.

ryangerber@40 How could that happen? Was it a language issue or something just misspoken or misheard? It seems like the kind of thing that you would say with the kind of gravity that there should be other cues such as body language and facial expression that would tip the balance.

I guess one take-away is that if you think someone is requesting assisted suicide, even where it’s legal, you should probably verify that request before diving into the process.

I was looking at farming all through its history. yes it has been productive and the environment has paid the price. Some of the problems that developed were the direct and indirect results of farming itself maybe most of them. Also a key difference is the variability of the application of farming methods by different farmers and different environmental situations. In the abstract yes but farms are not an abstract nor are roads or patients or anything else in the real world. Few things are exactly the same anywhere you look. In farming there is a lot of leeway or tolerance for differences it is a far cry from a lab same is true with roads and patients. AI will be much easier to implement within a controlled environment where it all then becomes one integrated whole one big machine or when it has one very simple task like delivering ordnance to a target with pinpoint accuracy. We are way closer to the Sesame Street level of computing than we are to Deep Thought or Marvin or ORAC.

we may get there but not today.

uncle frogy

“Nephrite-Jade amulets, a calcium-ferromagnesian silicate, may prevent COVID-19.” Bility et al. (2020) Science of the Total Environment, in press — DOI 10.1016/j.scitotenv.2020.142830

@11 Jaredcormier

“Don’t fear software engineers, fear a lack of qualified software QA engineers”

Edsger Dijkstra said:

“Program testing can be used to show the presence of bugs, but never to show their absence! ”
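A small illustration of Dijkstra’s point, as a sketch in Python. The function `is_leap_year_naive` and its little test suite are hypothetical, invented here for illustration: every test passes, yet the function is still wrong for century years.

```python
def is_leap_year_naive(year: int) -> bool:
    """Deliberately incomplete: ignores the century rules."""
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    """Gregorian rule: divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# A plausible-looking test suite that the naive version passes in full.
passing_suite = {2019: False, 2020: True, 2021: False, 2024: True}
suite_passes = all(is_leap_year_naive(y) == expected
                   for y, expected in passing_suite.items())

# The bug was there all along; the suite just never looked at 1900.
bug_present = is_leap_year_naive(1900) != is_leap_year(1900)
```

A green test run here says only that nobody asked about 1900, which is roughly what the quote is getting at.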

Dijkstra favoured writing programs with methods that made a formal proof of the program possible. Leslie Lamport is worth reading on the subject http://lamport.azurewebsites.net/pubs/future-of-computing.pdf.

Is software engineering? Isn’t it really applied mathematics? A program is a mathematical expression.

@19 Ray Ceeya

“Machines may be flawed, but they are reliably flawed. Humans are randomly flawed.”

The software is written by human beings – so therefore it is also flawed – and if it is complex software it may be impossible to understand the difference between being “reliably flawed” and “randomly flawed”. A single flaw by a human being may kill one person. A single flaw in a program that isn’t detected could kill hundreds.

mailliw@45

Some grist for your mill:

History’s Worst Software Bugs

11 of the most costly software errors

There’s so much to choose from that there’s only a little overlap in these 2 articles…and they’re both too old to mention the Boeing 737 Max bug…

Ray Ceeya@19–

Have you ever coded? If you do anything more than trivially simple programming, you’ll quickly find that software is far from predictable. In fact, two of the foundational moments in modern chaos theory came from unpredictable computer behaviours from simple, intuitively predictable functions: Lorenz’s observation that his computer weather simulator gave different results even when he plugged his midpoint data in, and Robert May’s observation that his computer predator-prey simulator kept giving different outcomes for the same scenarios, which led into some amazing papers on chaotic functions in ecosystems.
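A minimal sketch of this kind of behaviour, using the logistic map x → r·x·(1 − x) that May is known for studying. The value r = 4, the starting points, and the step counts are arbitrary choices made for illustration, not taken from his papers.

```python
def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 100) -> list:
    """Iterate x -> r * x * (1 - x) and return every value, including x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # differs in the tenth decimal place

# Absolute gap between the two runs at each step.
gaps = [abs(p - q) for p, q in zip(a, b)]

early_gap = gaps[5]        # still tiny after a few steps
late_gap = max(gaps[40:])  # largest gap once the runs have drifted apart
```

Both runs are fully deterministic, and the function is one line long. The point is that a rounding-sized difference in the input is enough to make the later values unrelated.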

@44 mailliw – Saying software engineering is applied mathematics is pretty much the same thing as saying biology is applied physics – it’s technically true but useless in most non-trivial circumstances…

@46 Chris Lawson

“There’s so much to choose from that there’s only a little overlap in these 2 articles…and they’re both too old to mention the Boeing 737 Max bug…”

The Boeing 737 bug wasn’t really the result of automated flying. The software was there to attempt to rectify a fundamental aerodynamic design flaw in the plane. It was impossible to fit the new broader diameter fuel saving engines under the wing of a 737 so Boeing moved the engines forward and up. This resulted in the plane tending to go nose up, the software was there to rectify this tendency. When the monitoring tube failed then the software continued to force the nose down, no matter what the pilot tried to do.

Yeah, my experience with coding –

High School: “Sanity checks, sanity check, sanity checks. Never bloody assume that the user won’t enter something you don’t expect, or what your code will do with bad data. Always make sure, as much as possible, that the data going in makes bloody sense.”

College (admittedly not a great one): “Wow! I can’t believe you added all this extra stuff to the code to do stuff that is really useful, but not in the specifications I gave you, instead of just throwing something together that does the bare minimum!”

Sigh….
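For anyone who never got the high-school version of that lecture, a sketch of what such a sanity check might look like in Python. The function name `parse_age` and the accepted range are made up for illustration.

```python
def parse_age(raw: str) -> int:
    """Turn user input into an age, rejecting anything that makes no sense."""
    text = raw.strip()
    # Rejects "", "abc", "-5" and "12.5": only plain ASCII digits get through.
    if not (text.isascii() and text.isdigit()):
        raise ValueError(f"not a whole number: {raw!r}")
    age = int(text)
    # Assumed plausible range for a human age.
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age
```

Calling `parse_age(" 42 ")` returns 42, while input such as `"abc"` or `"999"` raises `ValueError` instead of flowing on into the rest of the program.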

@PaulBC

I have had a similar experience – working for a company that makes equipment for pharmaceutical manufacturers. A bug (or an installation) means a line has to be shut down, at the cost of millions / day, which means the client is on your back to fix it as fast as possible.

That goes about as well as you can imagine. At best a shitty, minimally tested patch (that will never make it to the master branch, generating yet another custom version to be maintained) written by the on call developer, whoever that might be, on a Saturday night.

At worst… well, let’s just say there are clients out there with untraceable software versions that were compiled on the fly directly on the developer’s machine. Said engineer might or might not be with the company anymore (considering the average time a developer spends with the same company is around 3 years in my experience). It’s the stuff of nightmares.

@48 Bart Declercq

” Saying software engineering is applied mathematics is pretty much the same thing as saying biology is applied physics – it’s technically true but useless in most non-trivial circumstances…”

Leslie Lamport in the paper I mentioned above, makes a very convincing case for why it is more helpful to think of programming as applied mathematics rather than as engineering.

Is a program like a car?: “The bug occurred because the subroutine wore out through constant reuse”.

@mailliw

Eeeshh… In very, very broad terms… maybe ?

At script level, when you’re trying to automate a single thing to solve a single problem, and you’re writing simple procedural methods, it’s pretty much predictable. A modern application is nothing like this.

Writing a complex, reactive program is more akin to building a house or, even better, designing an electronic circuit or a machine, than writing equations. It isn’t static. There are moving parts in there that interact with each other according to sensor or user input. That’s where paradigms like object-oriented programming come in. A modern application is a collection of objects that interact with each other and the external world. There are so many ways the outcome of these interactions can go according to the objects’ internal states, the external world, or even resource availability that it becomes practically impossible to predict what will happen in every case.

That’s why there is such a thing as “software architecture”, and why a good software architect is paid so handsomely. A good architecture is often what makes the difference between a buggy mess and stable, easy-to-maintain software, just like a good mechanical design makes the difference between a machine that works well and one that constantly breaks down.

In that sense, modern software is pretty much engineering. You’ve got to design something according to specs and you’ve got enough major uncertainties doing so to justify putting a QA department in your production pipeline (this right there, should be a major clue that you’re not dealing with predictable math). And software is now involved in pretty much all safety-critical equipment, which addresses the other argument (but software is just games and word processors, lol) I’ve heard against making it a bona fide engineering discipline with its own licensing and professional order.

I don’t know what kind of disaster it will take for people to realise we can’t keep addressing software, especially safety-critical software like automated cars and power plant management, as something anyone can do any way they want without any responsibilities as to the consequences. When even things as seemingly harmless as social media have been used to assist a country in genocide, we have to seriously think about it IMO.

Was building the set for “Pacific Overtures” once. The actors kept falling off the hanamichi so just before rehearsal we threw down a kick strip. Didn’t have to be neat, we said. It was just temporary.

“There is no temporary in theatre,” the set designer told us. He was right. Opening night it was still there, in front of everyone.

It always scares me how much of the world is just the same. There’s never time or money to take out something that works, just so you can replace it with something that works better.

nomuse@54 Reminds me of putting TODO comments in code before a “temporary” hack. As I heard it once said, the things you plan to get done in a quarter are called OKRs. The things you don’t plan to do are called TODOs.

@49–

You’re right, I shouldn’t have called it a “bug”. The code did what its programmers intended it to do. The software failure sprang from poor design rather than coding errors (and the MCAS was only deemed necessary because of decisions made about engine design and position, plus Boeing’s determination to pretend their new plane would not need new training for accredited 737 pilots).

I don’t believe anyone who tells me that their code is so simple they cannot possibly have introduced a bug. At least if you work in more than one programming language and you are not a “language lawyer.” Here’s a fun one I stumbled on. In Python, you can omit parentheses around pairs. Some people think this makes it more readable, so you could write:

This assigns x=1 and y=2.

Now try it in Scala (if you’ve had the pleasure). With only a slight change, you have

This assigns x=(1, 2) and y=(1, 2). Maybe this is obvious if you are familiar with exactly one idiom, but if you’re switching back and forth, it is an easy mistake to make. Note that explicit parentheses “(x, y) = (1, 2)” will force it to work the first way in both cases.
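The two snippets seem to have dropped out of the comment as it appears here. A hedged reconstruction of the Python half follows. The Scala half was presumably something like `val x, y = (1, 2)`, which declares two values and initialises each of them to the whole tuple. Both are guesses from the surrounding description, not the commenter’s original text.

```python
# Parentheses omitted: the right-hand pair is unpacked element by element.
x, y = 1, 2

# Explicit parentheses on both sides mean exactly the same thing in Python.
(p, q) = (1, 2)
```

In Python both forms bind the first name to 1 and the second to 2, so the surprise only shows up when the same habit is carried over to Scala.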

That’s just understanding what you think you wrote, let alone what will actually happen in realistic scenarios. It’s great if you can prove something about your code with a precondition, invariant, postcondition, but that is a strict subset of possible computer programs (which in general can encode an undecidable problem like the halting problem) and probably a strict subset even of useful programs.
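For readers who have not met the terms, a minimal sketch of a precondition, a loop invariant, and a postcondition written as runtime checks in Python. The function `integer_sqrt` is a made-up example, and the `assert` statements only check the conditions on the inputs actually run; they are not a proof.

```python
def integer_sqrt(n: int) -> int:
    """Largest r with r * r <= n, annotated with the three kinds of condition."""
    assert n >= 0, "precondition: n must be non-negative"
    r = 0
    while (r + 1) * (r + 1) <= n:
        assert r * r <= n  # invariant: holds at the start of every iteration
        r += 1
    assert r * r <= n < (r + 1) * (r + 1)  # postcondition
    return r
```

A proof would argue that the invariant holds for every possible `n`, which is feasible for a loop this small and much harder for the general run of programs.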

@53 kemist, Dark Lord of the Sith

Mathematical thinking is a way out of the complexity. Again I’d recommend the Lamport paper, he’s a lot more capable and eloquent than I am.

People wrote large complex systems previously without “architecture”. My view is that architecture and object-orientation have introduced a lot of unnecessary complexity rather than solving problems.

The advantage of declarative programming is that you remove iteration and assignment, leaving only decision to think about. It doesn’t matter what order the code is written in anymore.
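A rough sketch of the contrast, in Python rather than a truly declarative language, so take it as an approximation only. The `orders` data is invented for illustration.

```python
orders = [("alice", 30), ("bob", 5), ("alice", 12), ("carol", 50)]

# Imperative: explicit iteration and repeated assignment to a running total.
total_loop = 0
for customer, amount in orders:
    if customer == "alice":
        total_loop += amount

# Closer to declarative: state which rows count and what to add up.
total_expr = sum(amount for customer, amount in orders if customer == "alice")
```

Both give the same total. The second form leaves only the condition to reason about, which is the part of the argument that carries over.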

@58

Right. Now try to hire enough developers with even a remote comprehension of how to write a mathematical proof. Do they exist? Sure, but you’re competing with companies that pay more and carry a lot more prestige. And if that’s the main thing you’re selecting for, you better hope they are also good at working with the product manager to nail down requirements, and that they like working with a team, getting things done on a schedule, and doing a lot of things that aren’t all that interesting from a mathematical perspective.

In fact, it’s not that hard for ordinary people to write a lot of code, and they do. Kids can learn to do it. But without good process, it’s going to be a mess (unless you’re really lucky and get the people that are in demand elsewhere). I think the question is not what it takes to produce reliable software in an ideal sense, but what it takes to get something reliable out of the efforts of people who aren’t thinking mathematically, but may be very good at cranking out applications.

Yeah, I once collaborated on an agro-environmental model that included a hydrological component. The guy who wrote that component made an error, so that more water entered into the area we were modelling than left it. We left the model running overnight, and came in to find the computer lab flooded! ;-)

I have a couple of hypotheses about the state of software “engineering”, much bemoaned at least since The Mythical Man Month was written, in order of how much weight I give them: (a) Everything’s fine. Of course there are lots of bugs but we fix them. (b) Things break because developers are overconfident and the way around that is to require, not merely encourage, extensive test suites (c) Things break because of the agglomerative nature of software development. Even testing can’t fix an unmaintainable system. We should spend more time on technical debt, delete things, and always make sure the architecture makes sense.

Some views I reject are (d) The last team of developers was lazy and/or incompetent and let things slip. If we delete this and start over, ours will be much better and (e) The problem is they’re just using the wrong language/paradigm. Use the right formula, and code will be a joy to read. Everyone will understand it perfectly, and bugs will be visible and easy to fix. (f) Formal verification is the way to go. Human beings are entirely capable of writing software that follows well-defined preconditions/postconditions/invariants. They just haven’t tried hard enough.

To begin with, (a) it’s really not clear to me that there is a problem. Yes, code is less reliable than a 747 engine or a suspension bridge, but that’s usually because the consequences of it breaking are a lot less severe. We can make it more reliable, but we’re paying up front for that. Ultimately, it comes down to an economic decision. (b) But if it’s not good enough, the main reason I have noticed is just that developers tend to assume a lot of things are “so easy they’ll obviously work” when in fact every time a human touches software they introduce a high probability of something breaking without them noticing until it’s too late. (c) Yes, I have been stuck maintaining legacy nightmares that no amount of regression testing can help to make sense of, so there should be more attention paid to simple code-health issues like duplicate code and special cases that are rarely or never encountered.

It does seem to me that we all muddle through anyway, and it’s not obvious that we’re doing it worse than any other profession. If I were spackling drywall would I work on a proof that the wall is sufficiently covered and ready for painting? Nah, I’d just look it over and say “Eh, good enough.” assuming I’m not restoring the Sistine Chapel or what have you. Not everything deserves the same amount of effort.

More seriously, mailliw, I agree up to a point, but a program can fail because the hardware is worn out, or can’t cope with the storage requirements, or whatever.

Well, so they tell you. Maybe there have been huge advantages in declarative programming since I last used Prolog, but at that point, they’d had to introduce a special “cut” operator (“!” IIRC, meaning “use the first solution of this clause you arrive at”) to get round the hideous inefficiency of pure declarative programming.

PaulBC@61,

I’ve seen another position argued: that software is written by geeks, who prize adding a “neat” new feature over usability and reliability. Overlaps with your (b) and (c) I guess.

@53 PaulBC

“Right. Now try to hire enough developers with even a remote comprehension of how to write a mathematical proof.”

It isn’t necessary for developers to be able to construct proofs. Only to start thinking about programs as mathematical objects. No difficult mathematics is required, only things that would be taught in any introductory computer science, mathematics or philosophy course – predicate logic and set theory.

I’m not a mathematician and I didn’t find these things hard to understand.

@62 KG

“Well, so they tell you. Maybe there have been huge advantages in declarative programming since I last used Prolog, but at that point, they’d had to introduce a special “cut” operator (“!” IIRC, meaning “use the first solution of this clause you arrive at”) to get round the hideous inefficiency of pure declarative programming.”

There is a declarative language that is used by hundreds of thousands of businesses worldwide. I use it every day. I never need to use iteration and only occasionally assignment or make logic dependent on the order of the code.

Of course it isn’t very cool and was invented at IBM so many geeks tend to ignore it.

One of my doctoral supervisors was Aaron Sloman. I recall hearing him estimate that human-level AI was about 500 years away (this at a time when many in the field thought it would have been reached by the end of the 20th century).

mailliw@65,

Is its name a commercial secret?

mailliw@64 I agree that declarative approaches are often useful, and not necessarily hard to understand. A lot of people can write SQL queries and it beats working through exactly how the joins are executed. I’m not sure I would call that mathematical thinking though. It’s more a question of how well it maps onto how people think informally.

@61 PaulBC

“The last team of developers was lazy and or incompetent and let things slip. If we delete this and start over, ours will be much better”

I’m right with you there. People often think that because a system was written 10 years ago that it is out of date and must be replaced. They think this can be done quickly. What they forget is that the original system wasn’t written in a few weeks and left alone. There are ten years of development in there. It will always take a lot longer than you think to replace that code and the chances of it being done reliably with no disruption for the end users are remarkably slim.

@68 PaulBC

“A lot of people can write SQL queries and it beats working through exactly how the joins are executed. I’m not sure I would call that mathematical thinking though.”

In the old days in COBOL we had to knit our own joins. It took a whole day for what takes 5 minutes in SQL – and then as soon as the distribution of the data changed you had to completely rewrite the COBOL to get the same performance. Things were definitely not better in the old days.

You can write SQL without understanding the mathematics behind the relational model, but I’ve found that understanding the mathematics has helped me a lot, and wasn’t that difficult to grasp.
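To make the contrast concrete, here is a minimal sketch using Python’s built-in sqlite3 module. The tables and rows (customers, orders) are made up for illustration, and the hand-written version is just a nested-loop join, one of several strategies a query planner might pick on its own.

```python
import sqlite3

# Hypothetical sample data, invented for this illustration.
customers = [(1, "Ada"), (2, "Grace"), (3, "Edsger")]
orders = [(10, 1, "disk"), (11, 1, "tape"), (12, 3, "cards")]


def hand_knit_join(customers, orders):
    """Nested-loop join written out by hand: we fix the access path ourselves."""
    result = []
    for cust_id, name in customers:
        for _order_id, order_cust_id, item in orders:
            if order_cust_id == cust_id:
                result.append((name, item))
    return sorted(result)


def declarative_join(customers, orders):
    """The same join stated in SQL: we say what we want, the engine decides how."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    rows = conn.execute(
        "SELECT c.name, o.item FROM customers c "
        "JOIN orders o ON o.customer_id = c.id "
        "ORDER BY c.name, o.item"
    ).fetchall()
    conn.close()
    return rows


print(hand_knit_join(customers, orders))
print(declarative_join(customers, orders))
```

Both functions return the same rows. The difference is that if the data distribution changes, only the hand-written loop has to be rethought; the SQL text stays the same and the planner is free to choose a different strategy.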

KG@63 It’s not just the developers who want new features. It drives me crazy how much software changes without getting better, driven I assume by the need to maintain a distinct brand and keep up with the competition. I have said this before but I still feel the pain: In the late 90s or whenever it was, I started to get really annoyed at the way icons were getting photorealistic and less… iconic. It seemed really backwards to me, as if we were bent on replacing a phonetic alphabet with Mayan glyphs.

But it went on so long that I decided “Wait, no. I’m missing the whole point. The human brain is made for shading and three-dimensionality. These are actually better than the flat-color icons. I’m just a nerd and I don’t know how normal people think.” I thought I had an aha moment, and then a couple of years later everyone went back to flat colors anyway (I have heard that it was to keep it consistent with smartphones, which at least initially had less powerful graphics than desktops).

So I give up. It’s all hemlines going up and down. There are some definite improvements, like the fact that I can do crazy stuff like reverse image lookups, but most of the “features” are pure marketing BS, and that is even true with backend choices like relational DB or not, a lot of herd following going on.

“I’m not sure I would call that mathematical thinking though”

A table is a set of axioms, a query is a theorem based on those axioms.

In just the same way as the result of adding any two integers is always an integer, the result of any relational operation will always be another relation.

The relational model is a remarkably concise and elegant thing and based on very sound mathematical foundations. It is a shame that SQL is such a flawed implementation of it. I would like to be more purist about it but, well, I have to eat too.
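The closure property can be sketched in a few lines of Python. This is a toy model under my own assumptions, not a real DBMS: a relation is a frozenset of rows, each row a tuple of (attribute, value) pairs, and the helper names (relation, select, project, natural_join) and the sample data are hypothetical.

```python
def relation(*rows):
    """Build a relation (a set of rows) from dicts; duplicate rows collapse."""
    return frozenset(tuple(sorted(row.items())) for row in rows)


def select(rel, predicate):
    """Restriction: keep the rows for which predicate(row_as_dict) is true."""
    return frozenset(row for row in rel if predicate(dict(row)))


def project(rel, *attrs):
    """Projection: keep only the named attributes of every row."""
    return frozenset(
        tuple(sorted((k, v) for k, v in row if k in attrs)) for row in rel
    )


def natural_join(left, right):
    """Natural join: combine rows that agree on all shared attributes."""
    out = set()
    for lrow in left:
        for rrow in right:
            ld, rd = dict(lrow), dict(rrow)
            shared = ld.keys() & rd.keys()
            if all(ld[k] == rd[k] for k in shared):
                out.add(tuple(sorted({**ld, **rd}.items())))
    return frozenset(out)


employees = relation(
    {"name": "Ada", "dept": "R&D"},
    {"name": "Grace", "dept": "Ops"},
    {"name": "Edsger", "dept": "R&D"},
)
depts = relation({"dept": "R&D", "floor": 3}, {"dept": "Ops", "floor": 1})

# Every operator returns a relation, so operators compose freely.
joined = natural_join(employees, depts)
third_floor_names = project(select(joined, lambda r: r["floor"] == 3), "name")
print(sorted(dict(row)["name"] for row in third_floor_names))  # ['Ada', 'Edsger']
```

Because each operator takes relations and returns a relation, the output of one can always be fed into another, just as the sum of two integers can be added to a third.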

@71 PaulBC

“In the late 90s or whenever it was, I started to get really annoyed at the way icons were getting photorealistic and less… iconic.”

The floppy disk is like Jesus, it died to become the symbol of saving. I wonder how many people there are today who have absolutely no idea why that icon means save?

I remember using Windows for the first time, before they put text under the icons. I found most of them pretty opaque. But wait I have a manual! But of course you can’t look up an icon in the index. Well it isn’t in pages 1-200 so it must be pages 201-400, now let’s try and narrow it down to page 201-300 and so on.

KG@66 I’m not making any predictions on “human-level AI.” I started studying CS during the “AI winter” of the 80s, and it was conventional wisdom then that natural language translation was intractable without natural language comprehension (or effectively human-level AI). This is still true in pathological cases, but really it is good enough that I can read local papers from Beijing, Brazil, and Ukraine and the translation is very informative (though I got a really funny translation once interpreting Ukrainian as Russian language).

One big thing that’s happened is that processing power has improved tremendously, so things people might have wanted to do before are just now becoming feasible. Also the amount of digitized text to refine the translation has increased by orders of magnitude due to the internet. Your old advisor might have a good intuition about AI progress, but I wonder if he underestimated the sheer amount of computing power.

When computers were new, simply having something that could store the same amount as a human brain (in whatever noisy way we do) seemed out of reach. Today I’d say that a 5 terabyte drive you can pick up at Costco could approximate something like the experiences of a complete human life* (highly speculative, but my hunch). Computer circuitry is itself much faster than neurons and the scale is very large, particularly when you look at a cluster and not just a single CPU.

So I really think the issue is that we don’t know what to do with it yet, but that we really might have enough power to carry out cognition on a comparable level to humans. It will never really mimic a human brain simply because the brain is a very different kind of system with its own peculiarities.

*At 9 hours of low-quality video per 1G (as claimed in a random Quora post I just found) that’s 45000 hours or 5 years. A human life is more than a long video, but our recollection is also a lot less detailed, so I think 5T is at least somewhere in the distribution of how much it would take to capture the experience of one life.
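The arithmetic in that footnote can be checked in a few lines of Python. The 9 hours per gigabyte figure is the unverified claim quoted above, and decimal units (1 TB = 1000 GB) are an assumption on my part.

```python
# Figures from the footnote: a 5 TB drive and a claimed 9 hours of
# low-quality video per gigabyte (the rate itself is unverified).
DRIVE_TB = 5
GB_PER_TB = 1000  # assumption: decimal units, as drive makers count them
HOURS_PER_GB = 9

total_hours = DRIVE_TB * GB_PER_TB * HOURS_PER_GB
total_years = total_hours / (24 * 365)

print(total_hours)            # 45000
print(round(total_years, 1))  # 5.1
```

So the drive holds roughly five years of round-the-clock video, which matches the figure in the footnote.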

@67 KG

“Is its name a commercial secret?”

No, too obvious to need to be mentioned.

I am guessing SQL, though I mentioned it before even seeing @65.

PaulBC @32: Lived near there from 2012-15. Saw the testing in action; that’s why I chose those precise examples. The number of times I saw a Waymo vehicle make a “California stop” in the South Bay was disturbing (and I knew a couple of Waymo engineers and refused to ever ride-share with them). And turn signals? Really?

More generally, the biggest problem with software reliability is the intrusion of real-world conditions upon the software system. The most obvious is that most software is written presuming that 100% of system resources are available to and used exclusively for that software — the ne plus ultra of OCD. With “driverless cars,” for example, the test systems all assume that the navigation-and-control system has full control and nothing else to worry about… which is kind of silly when there’s also a system for making the engine operate efficiently that, in typical “industrial efficiency” fashion, is going to be combined into that same hardware/software entity when it’s time to transition to affordable consumer-level production.

I’ve seen this waaaaaaaaaaaay too often in aircraft systems, even relatively primitive ones like the F-111’s “terrain-following” system from the 1970s and 80s… which frequently had difficulty if any aspect of the aircraft was not operating at factory specifications (even as simple as a bad seal around the rear of a bomb-bay door that just slightly changed airflow in a way that a human pilot could easily compensate for). So one wonders how well the driverless system is going to allow for tire baldness, because surely nobody ever drives a car with tires even slightly beyond “manufacturer’s recommended wear limits.” Well, nobody including every commercial truck driver on the road (those tires are not cheap). Or tows a load half-full of a liquid without proper slosh plates…

Amazon discovered this too, when they opened their site in Sweden:

https://www.thelocal.se/20201028/translation-fails-on-amazons-new-swedish-site?fbclid=IwAR2T9QYnzN3L9G-rCQt68x7qpho_1MmkNKFSjYCu8VTNG3cgY6FOQw93hSs

@74 PaulBC

“so I think 5T is at least somewhere in the distribution of how much it would take to capture the experience of one life.”

But I don’t think the problem is about speed or storage, you need to be able to emulate how the brain works and that is very hard.

A team of researchers at the Free University of Berlin have built a software model of a bee’s brain. It took years of work not because of technical limitations but because working out what a bee’s brain does is very, very difficult. And it’s still a model not a real bee brain that has all the capabilities that a bee brain has. It is a research instrument not a real emulation.

Also the computer it runs on is somewhat bigger than a bee’s head.

Jaws@77 It’s a little tangential, but I was reminded of my biggest peeve about computers right now or maybe about us humans who use them, which is that we seem to have built up an unreasonable tolerance for things just getting stuck and unresponsive. If you’re instagramming a noodle bowl, that’s probably OK, but this is not the behavior we want from critical systems (weapons, surgery, driving).

Also, I don’t think I’m imagining it when I say that it was not always like this. I was using X windows on underpowered Sun machines and even IBM RT machines in the late 80s. It might be slow to run stuff, but they didn’t just become totally unresponsive. They were doing a lot less, it is true, but they were fully functional unix systems running multiple threads, and connecting to local and remote consoles. They just didn’t have all the crap running that we have now whether it is on a phone or on a development machine with the browser open.

Not being able to form coherent sentences shouldn’t be a problem. Have you ever tried reading a doctor’s handwriting?

garydargan@81–

You jest, but pharmacy dispensing errors dropped significantly with the introduction of computerised prescription writing. (On the other hand, it increased errors such as writing a prescription for the patient whose file you forgot to close instead of the patient in front of you.)

mailliw@73

I should ask my kids, though I’m not sure how often it is used now, since so many things autosave. If I explain it’s a “floppy disk”, I will have to address the fact that it is not disk shaped and in the most common incarnation (3.5″) was not really floppy at all unless you opened it up, which was a pretty bad idea.

(Those were the days. I missed actually having to use punch cards though I saw people use them. Actually, IBM VM/CMS had a “virtual punch” that you could use to store output. Fun stuff.)