I’ll always enjoy seeing Steven Pinker, Sam Harris and the epidemic of annoying white male intellectuals ragged on — and generally, any member of the Intellectual Dork Web deserves a thorough savaging. This one is good because it documents specific examples of Pinker and Harris being bad scholars, and shows how their fellow travelers flock to defend even their more egregious errors. I have to agree with its conclusions.

The point is that the entire IDW movement is annoying. It’s really, really annoying — its champions misrepresent positions without their (mostly white male) audience knowing, and then proceed to “embarrass” the opposition. They embrace unsupported claims when it suits their narrative. They facilely dismiss good critiques as “hit jobs” and level ad hominem attacks to undercut criticism. And they refuse — they will always refuse, it’s what overconfident white men do — to admit making mistakes when they’re obviously wrong. I am annoyed, like Robinson, mostly because I expected so much better from the most popular “intellectuals” of our time.

“Intellectuals” seems to be acquiring a new meaning here in the 21st century. It refers to well-off white people who use their illusion of academic prestige to defend 18th century ideas against all reason, as long as they bolster the status quo.

Crawl back into the bushes, you poseurs.

I resent that they have stolen the name of one of my favourite comic publishers:

https://www.idwpublishing.com/

This sounds interesting, I’m reading the various articles linked there. But, at the first paragraphs of “A Detailed Critique of One Section of Steven Pinker’s Chapter “Existential Threats” in Enlightenment Now”, I see that he writes:

And I’m wondering, was “The Better Angles …” really a good book or another poorly researched pile of nonsense that people with no expertise in the area like? I’m leaning towards the latter though.

It’s one thing to stubbornly refuse to acknowledge a mistake that bears on some core feature of your beliefs or ideology. People are, for good reason, deeply invested in their core ideologies and even if we should abandon them after certain mistakes are made apparent, it is a lot of cognitive work to replace a fallacious ideology with a more coherent one and people just don’t have the time. It’s not the most intellectual thing to do, but people are people and I’m willing to give people the benefit of the doubt about why they won’t abandon core beliefs no matter how badly flawed they are (though I still hold them responsible for those beliefs as long as they hold them).

However, it is another thing entirely to double-down on a careless error that bears no real weight on your overall argument or position. Pinker mischaracterized people in ways that don’t really affect his larger claims and he refuses to acknowledge that. It’s lazy, it’s stubborn, and it is, as the author suggests, about as characteristically “overconfident white man” as you can get.

Intellectual Dork Web, indeed.

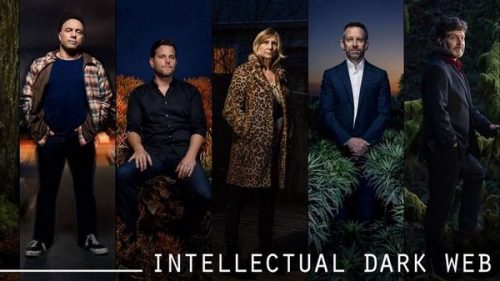

Is that… Joe Rogan? He’s supposed to be part of the INTELLECTUAL Dark Web? Ooohhh… kay.

LOL… Joe Rogan. Okay.

Who are #2 and #5?

Oh, these guys are fucking adorable! Apologists for capitalism, privilege and the patriarchy pretending their Just-So stories are courageous, subversive manifestos of a skeptical counter-culture.

All else aside, and granting that, per the article PZ referenced, Pinker seems to have gotten an expert on AI risk backwards: what is the actual existential risk of AI, outside of HAL and the Terminator coming to fruition? Pinker punctures some speculative AI bubbles in Enlightenment Now. I would have assumed, given PZ’s opinions of Musk and Kurzweil, he would have some sympathy for Pinker, as a cognitive scientist, taking AI hype down a few pegs.

Pinker acknowledged the downside to automation where human workers are at risk, but downplays that. As dialectic, Pinker’s opinions on such stuff serve as counterpoints to hype the other way.

But yeah there are some issues with how Pinker fields criticism and how his mostly uncritical bulldog weighs in.

#2 is Dave Rubin. No idea who #5 is.

Since PZ seriously dissed Laura Nyro, here’s another lyric that wasn’t written by Jerry Fisher:

Hands off the man, the flim flam man.

His mind is up his sleeve and his talk is make believe.

Oh lord, the man’s a fraud, he’s flim flam man. He’s so cagey, he’s a flim flam man.

Hands off the man, flim flam man.

He’s the one in the Trojan horse making out like he’s Santa Claus.

Oh lord, the man’s a fraud, he’s a flim flam man. He’s a fox, he’s a flim flam man.

. . .

His mind is up his sleeve and his talk is make believe.

Oh lord, the man’s a fraud, he’s flim flam man. He’s so cagey, he’s an artist.

He’s a fox, he’s a flim flam man.

Don’t believe him, he’s a flim flam. Ole road runner, he’s a flim flam.

@ Lotharloo#2

I read The Better Angles. It was needlessly obtuse.

#5 is Bret Weinstein. Professor of biology during the Evergreen farrago and has been dining out on that story ever since (and now gets to opine on the biological roots of fascism and other such stuff whenever someone needs to explain why feminists are angry (without asking them, of course) or whatever this week).

By the way, the curious composition of Sam Harris’ picture makes it look like he’s wearing plant themed clown pants.

I do think the risk from AI is greatly over-hyped, because I think AI researchers have grossly underestimated the complexity of achieving human-like, self-aware intelligence. However, how you arrive at your conclusion matters, Pinker truly fucked a duck to twist the work to fit his conclusion, and we shouldn’t accept an answer simply because it agrees with ours.

Actually I think self-awareness is beside the point. If an AI programmed to make paper clips destroys the planet in order to manufacture unlimited quantities of paper clips, it doesn’t matter if it is self-aware, or does it because it “wants to” or in any sense knows what it is doing. All that matters is the output; what’s inside the black box is not the point. There are other reasons why the probability of anything like that happening is trivial, but sure, AIs could do damage, and they wouldn’t need “human like” intelligence. Isaac Asimov anticipated the problem and came up with the Three Laws of Robotics. Fundamental to their operating system is command one, never harm a human or allow a human to come to harm. Command two is obey humans. So I can order it to kill you to make paper clips but it won’t do it. Of course, in some of his stories things didn’t work out as one would hope . . .

The AI problem is probably more about things like resume sorting software that conceals racial and sexist biases under the covers.

Meanwhile, on that photo caption, isn’t it supposed to read “Intellectual Dork Web”?

One of them — Pinker, I think? — recently made a tweet pretending that the embarrassing name “Intellectual Dark Web” was coined by their detractors to discredit them.

I’d say that’s a good sign — when they’re trying to jettison their self-chosen label, it suggests that the label’s (and therefore the movement’s) public perception has become negative. The same happened with “alt-right”, originally a euphemism that was supposed to make them sound modern and iconoclastic, now so toxic that right-wingers are trying to establish the term “alt-left” specifically as a smear against leftists.

@ David Klopotoski #4 and Saad #5 It’s the dark web so you gotta have at least one guy in there dealing drugs.

I agree. I think it will be a long time before we see anything like this, and there will be many steps along the way giving ample time to debate the ethics and dangers of true self-aware AI. For one, we still have to deal with the far more imminent upheaval of millions of white collar jobs being lost through advances in AI-driven automation. For example, websites that employ people to vet product reviews submitted by consumers are already experimenting with using advanced machine learning algorithms instead as a way to cut costs.

I tend to be an optimist when it comes to self-aware AI, should it ever happen, but I will freely admit I haven’t done the legwork to vociferously defend my case, and actually, I doubt it’s even possible to draw a definitive conclusion either way at this point.

Yep, that is the key point. Pinker is as financially and emotionally invested in his own thesis as Alex Jones is in his New World Order conspiracy theories, to the point where he believes he must be right.

Totally bizarre that Rubin is considered to be an intellectual of any kind. His only claim to fame is that he’s a (former) liberal who has discovered he can make a good living by creating a platform where far-right personalities can whine about SJWs while promoting their offensive ideology without fear of being challenged in any way. Basically, he’s the IDW’s pet liberal, and none other than Dennis Prager has called him that, to his face, not that Rubin cares, of course.

Even Joe Rogan on his worst days could run rings around Dave Rubin intellectually, he’s that bad.

Delusions of adequacy.

True, at least in most cases (but one of my thesis supervisors, Aaron Sloman, back in the late 1970s when a lot of his peers thought it would be achieved in a few decades, thought 500 years was nearer the mark), but that’s not where the risk comes from; it’s the use by the powerful of specialist capabilities that fall well short of that – face recognition, monitoring of text and speech for possibly “subversive” content, modelling and analysis of responses to advertising/propaganda, production of “deepfakes”, and others no-one has thought of yet.

After reading more, I’m sorry but I’m with Pinker. Phil Torres had only bullshit arguments, which are either irrelevant or appeals to authority. Deep learning is over-hyped as fuck; there is some success in some areas, but as per usual people love to make grand predictions. Even legit researchers such as Christian Szegedy make baseless predictions that they can solve “mathematics and computer science”, not exactly in those terms but to that effect.

Are you saying that people complaining that Pinker has twisted their own words to mean the exact opposite of what they actually intended is a fallacious appeal to authority? Because I tend to think that people can be legitimately regarded as valid authorities as to the meanings of their own words…

@23 Dunc

Don’t think that’s what lotharloo is saying. Phil Torres’s ‘bullshit arguments’ are more along the lines of: Pinker is wrong because he’s been photographed with a known pedophile (Jeffrey Epstein) and he’s on good terms with other people accused of being sexual harassers and/or rapists. Also he’s wrong because he disagrees with Phil Torres.

@24 chris61: Well, there is some of that in there, but it’s not by any means all of it. There’s also quite a lot of stuff like:

Is that a “bullshit argument”?

lotharloo@22,

Could you please specify what writing by Torres you are referring to? Is it

a) The Salon article PZ links to: “Steven Pinker, Sam Harris and the epidemic of annoying white male intellectuals”.

b) The Salon article linked from (a): “Steven Pinker’s fake enlightenment: His book is full of misleading claims and false assertions” in which Torres attacks Pinker’s Enlightenment Now.

c) The extended critique of Enlightenment Now linked to from (b): A Detailed Critique of One Section of Steven Pinker’s Chapter “Existential Threats” in Enlightenment Now.

d) Something else.

I’ve read (a) and (b), and neither justifies your description.

The article PZ links to does contain such criticisms, but if they are “arguments” they are arguments for the position implied by the title: “Steven Pinker, Sam Harris and the epidemic of annoying white male intellectuals” (i.e., that there is an epidemic of annoying white male intellectuals, including Pinker and Harris). It also contains examples of Pinker misusing others’ words to mean the opposite of what they meant.

@25 Dunc

It might be. I haven’t read Pinker’s book so I can’t be certain, but what ‘might’ be happening is that Pinker uses some other people’s observations or facts to support his own conclusions. That would not be unreasonable unless Pinker specifically states that the people in question agree with him. Nor would it be unreasonable that people would be annoyed to have their own words used to support someone else’s conclusions.

You should say “I don’t know” or “no response.” Because nobody disputed the mere possibility of it.

Is that what you need? Okay.

Those are the ingredients you wanted:

(1) Pinker is “skeptical of AI risk.”

(2) He counted Russell as if he agreed with him about that, but he does not agree.

And then, more of the same…. Pinker “doubled down” in response (my emphasis):

As if the part he was just talking about wasn’t doing exactly that…. “The rest” means other parts, which he had not discussed before this (concerning for example his characterization of Russell).

It would be one thing if it “strikes” him that Russell should buy his arguments and thus agree. But that’s just Pinker selling his thesis again, not responding adequately to the criticism given about him misrepresenting the guy.

Even if he simply didn’t want to acknowledge the mistake (as bad as that would be), because he knew he had no good response, he could’ve said nothing. Instead, the opening of the next paragraph implies once again that Russell does (not “should” but “does”) agree with him.

@ consciousness razor

Well, those details do make it sound as if Pinker exhibited bad scholarship in that book.

Has anyone here actually read the section(s) in Pinker’s book where he addresses AI and its purported risks toward human existence? I’ve been given the impression he had untoward relations toward waterfowl, but based on what? Mischaracterizing Russell? Schadenfreude?

In one paragraph in a much larger section devoted to AI risk Pinker wrote: “When we put aside fantasies like foom, digital megalomania, instant omniscience, and perfect control of every molecule in the universe, artificial intelligence is like any other technology. It is developed incrementally, designed to satisfy multiple conditions, tested before it is implemented, and constantly tweaked for efficacy and safety (chapter 12). As the AI expert Stuart Russell puts it, “No one in civil engineering talks about ‘building bridges that don’t fall down.’ They just call it ‘building bridges.’” Likewise, he notes, AI that is beneficial rather than dangerous is simply AI.” Not the only thing Pinker says about AI in the book.

hemidactylus:

Not I; but then, why should I care what he thinks? Hardly an expert about this, he.

He does? What a knob.

(And what is this fantastic “foom”?)

AGI does not actually exist, so his claims about what it actually is are just his own fantasy.

What an idiot, then, since that entails that at least some AI is in fact dangerous.

Sure, give us some more such stupidities. I’m quite ready to sneer even more.

@31- John

“Foom” isn’t something Pinker just pulled out of his arse. It, to me, sounds like that scariest of moments when Skynet becomes suddenly (on our timescale) self-aware and decides to extinct us all just because. Or from: https://rationalwiki.org/wiki/Eliezer_Yudkowsky

“for “recursively self-improving Artificial Intelligence engendered singularity.”

We are frickin doomed. But doesn’t matter because the simulation aspect. From: https://docs.wixstatic.com/ugd/d9aaad_8b76c6c86f314d0288161ae8a47a9821.pdf

Torres:

“Perhaps the only risk discussed in the literature that could aptly be described as “exotic” is the possibility that we live in a computer simulation and it gets shut down. Yet even this scenario is based on a serious philosophical argument—the “simulation argument,” of which one aspect is the “simulation hypothesis”—that has not yet been refuted, at least to the satisfaction of many philosophers.”

And that’s that. It’s a serious argument. We’re in a simulation so why worry about “risk”? We are facilitating the next round where our foomy creations simulate a new universe with its own Freethoughtblogs, maybe last Thursday.

Seriously though, the whole simulation thing seems inspired by a movie trilogy inspired by a French pomo guy who thought in more mundane terms about reality mediated by tv, adverts, and ironically movies. And in a reality mediated by the web, gurus like Musk signify their unquestionable status as such by pontificating about…wait for it…simulated realities. Let that sink in. Baudrillard’s localized arguments for simulation co-opted ironically to sell the idea of a matrix universe. You cannot make this shit up.

Not that Pinker would have truck with French pomo. But that’s just funny as hell.

As is this: https://www.cnbc.com/2018/03/01/elon-musk-responds-to-harvard-professor-steven-pinkers-a-i-comments.html

“ “Now I don’t think [Musk] stays up at night worrying that someone is going to program into a Tesla, ‘Take me to the airport the quickest way possible,’ and the car is just going to make a beeline across sidewalks and parks, mowing people down and uprooting trees because that’s the way the Tesla interprets the command ‘take me by the quickest route possible,’” says Pinker.”

Sorry. Maybe I should stop my sneering.

hemidactylus, excellent, you at least try.

You have to start before you stop. :)

Heh. Yeah, it is, regardless of how it sounds to you.

—

I do like how you try to divert onto the simulation hypothesis thingy, too.

(What next, Omega Point? Roko’s Basilisk?)

But sure, you are impressed with Pinker’s wanking about how what is not isn’t dangerous, except when it is.

@33- John

How can Pinker be pulling foom out of his ass when he references Hanson & Yudkowsky 2008?

And…ahem…Torres introduced the serious argument of the simulated universe in his critique of Pinker. As with Pinker’s mischaracterization of Russell in a larger section on AI, it was fair game. Pinker’s joke about Teslas taking commands literally is apt too (contra guru Musk).

hemidactylus:

How? He put it there first, then pulled it out, all stinky.

I’m not Torres, and my critique is that GAI (“true” AI) ain’t a thing.

(I’m actually commenting on the subject at hand, not doing lit crit)

Look, there are things called AIs right now, but they don’t actually think, they are algorithms. Software governors, essentially. Not intelligences, as we know them.

You imagine nav systems are actual intelligences? Chess engines, maybe?

(Because if you do not, it is anything but apt, and if you do, you’re just ignorant)

Pulling things out of another’s ass is not really so different from pulling them out of one’s own. Or so I’ve been told, by … sources.

I hope I shouldn’t think “serious argument” is supposed to mean anything like “good argument.” To me, it means the person giving it is being sincere and so forth. They’re not saying it in jest, not saying it to trick/distract people, not while trying to shut down a bratty kid when they won’t stop asking “why?”, or things like that. All of that may be true about the person, while the thing is still garbage.

hemidactylus, you’ve gone so quiet!

(Any other ways Pinker impresses you?)

Pinker might have done shoddy scholarship with respect to the Russell guy, but it does not change the fact that Phil Torres’s arguments are full of shit, much more than Pinker’s.

@KG:

Let’s start with the linked article, and remember that the main disagreement is scholarly and about the dangers of AI.

Okay, at the beginning he sets up the charges of mischaracterization and quoting things out of context. That’s fine. Then he lists a lot of irrelevant stuff before getting to Eric Zencey. I agree Pinker pulls the quote out of its context but it’s really minor. Pinker’s explanation is this:

So Pinker is using Eric Zencey’s quotation to disagree with Eric Zencey. Eric Zencey thinks this is outrageous but it really is not. You can use someone’s quotation against them.

Then he gets to Stuart Russell. Once again, I give some credit to the criticism of Pinker but then again, Torres does appeal to authority. Nowhere in his writing does he actually address the main topic. He always cites researchers and authorities: so and so says AI is dangerous! I’m sorry but I call bullshit on all those arguments, especially since the people who most spout bullshit hype are the AI researchers themselves. The notion of a “paperclip making AI who kills everyone and everything to make more paperclips” is absurd by any measure. No amount of “appeal to authority” is going to make that claim credible.

TL;DR. Torres brings some valid criticism but what he mainly does is appeal to authority. He only quotes people saying stuff, saying their opinions. Nowhere does he give any substance related to the actual point of contention.

@37- John

I was only awake for a brief while and decided to fall back asleep. Sorry to keep you in suspense while I dreamed. Given that you don’t even believe in AGI, I wonder what your issue with Pinker and his derailment of the disastrous foom moment could be. Source fallacy, because he’s Pinker?

As for more mundane scenarios what about the self-driving car, “intelligent” enough to trust on autopilot. Would it take the “quickest route” by a literal beeline over shrubs and bystanders? Or would the engineers put in a little semantic commonsense? I would think trolley problems harder, like decisions whether to sacrifice the passenger (or bystander) for a sudden appearance of a group of kids. And who would bear ultimate responsibility? The owner? Manufacturer? Or in another domain would there ever be a paper clip problem?

Pinker explores disanalogies between AI and humans, whose modular minds evolved with goal-oriented intelligence and emotions bundled together. We are exploitative, exterminating monsters, but why would special-purpose machines that surpass us on given tasks become that way? Sure, as an unfortunate consequence they supersede us through automation, causing unemployment, which is a more prominent concern than runaway AGI. We lose taxi and bus drivers.

Pinker downplays that with: “Until the day when battalions of robots are inoculating children and building schools in the developing world, or for that matter building infrastructure and caring for the aged in ours, there will be plenty of work to be done.” He leads into that previously with a NASA report dated to 1965 that: “Man is the lowest-cost, 150-pound, nonlinear, all-purpose computer system which can be mass-produced by unskilled labor.”

I mean seriously, this is the kind of drivel that Torres writes:

He takes a survey of opinions and blows it up to the level of facts. I’m sorry but even if 100% of all AI experts think by 2075 we will have 99.999999999% chance of having a HLMI, it doesn’t mean shit, it doesn’t mean squat, it’s not an argument. It’s bullshit hype.

Here’s more nonsense by Phil Torres, the supposed expert:

Right, they sound like very reliable people. Fucking bullshit merchants.

Here’s more drivel by Torres, as previously quoted:

Right. How does logic work again? So if I make a claim, it’s your job to refute it to my satisfaction, eh? Seriously?

Right, Phil Torres, the inventor of “science through opinion surveys”.

Other random bullshit quotations from the bullshit merchant Phil Torres:

And here’s the typical “a silicon brain is 1000000 times faster” bullshit:

This is your misunderstanding. The paperclip-making AI is a funny shorthand, used to describe a wide range of non-absurd cases that can happen, if people are not extremely cautious about the risks of AI.

In general, when programming and trying to avoid buggy behavior, you often have to think very far ahead and worry about strange, degenerate, unlikely cases, which may not be obvious at all initially, even when trying to do what may seem like a very simple task. It’s a very common issue, not limited to AI, and it should not be hard to understand. So, there are questions: what if it’s not so simple, what if we use it in ways that won’t just mean a minor inconvenience to a few people, and what if designers happen not to think far enough ahead (as they’ve done countless times in the past)?

Back to that shorthand, which is only meant to evoke many other cases…. You cannot dispense with all of those, by noting how silly it would be to design a paperclip-making AI that has disastrous consequences. They use a stupid shorthand like that, so that people (honest and sensible ones, who at least know something of the subject, aren’t trying to mischaracterize them, etc.) will have no trouble getting the fucking point that this is obviously a problem, maybe a very big one in the right circumstances.

From there, once this point is clear, all you need to realize is that nothing whatsoever requires that all such cases would be so fucking stupid, such that you’d have difficulty believing that specific this prediction (concerning the funny shorthand, which is obviously not all cases) will actually come true or is likely to come true.

To put it differently, nobody out there thinks “one day people will design paperclip-making AIs, so watch out, that means a shitload of risk!” So it’s irrelevant, if you (or Pinker) believe a prediction like that isn’t credible, because you think people collectively are not quite stupid enough to do that specific thing.

Instead, some do actually think people may in the future design other types of AIs, for other less-absurd purposes, which may be exceedingly complicated and impossible to describe in detail now (since we have no such designs now, while it’s not at all hard to picture something making paperclips).

There is plenty of reason to think there are analogous risks associated with that project. Frankly, if you’ve never heard of a software glitch/bug (which are every-fucking-where) or you just didn’t think to connect those dots, then I don’t think you’re going to offer anything useful to a discussion like this.

Please realize that it really doesn’t take anything like “authority” or “expertise” to get this, so I’m not suggesting that…. Just some very basic knowledge of the topic and a little simple reasoning should suffice.

Well, you mainly tried to brush off the criticisms of him misrepresenting people. You didn’t actually specify anything in particular that’s supposed to be an appeal to authority; you’ve only offered vague assertions to that effect.

Note that saying “you’re mistaken, Pinker: that’s not what the person that you yourself cited as an expert actually said” is not an appeal to authority or any kind of fallacy. But Pinker citing them as such in the first place may be an example, depending on other claims that may go along with that.

switched some words:

“difficulty believing that this specific prediction”

lotharloo

I think Torres’ critique of Pinker has some value as does Pinker’s book in places. The section on AI is a point where I would be inclined to say: “Give em hell, Steve.” I did find Torres’ invoking simulation a funny aside as it makes Musk relevant contrary to: “First, it’s completely irrelevant that Musk’s company and Hawking’s synthesizer uses AI. This strikes me as similar to someone saying, with an annoying dose of sardonic snark, that “it’s really ironic that Joe McNormal works at the nuclear power plant yet also claims that nuclear missiles are dangerous. Pfft!”” And in Pinker v Musk I have a difficult time taking the latter seriously.

But in a more meta sense we are witnessing the conflict of biases. Pinker is an optimist. Torres is more a pessimist. Lacking a crystal ball who can know? The dialectic point-counterpoint at least on AI works for me. But Torres does point to some flaws in how Pinker uses sources. And my enthusiasm for how Pinker punctures AI bubbles doesn’t carry over to his absence of coverage for the human experimentation that happened in Guatemala which went further than Tuskegee. Or other parts of the book.

Oh goody goody! I unearthed a priceless gem of Dork Web conversation that should induce a severe bout of source based dissonance among the commentariat:

https://youtu.be/ByGC3Vwaio0

On this IDW video I take issue with both on problems of scientism and their reflexive Nietzsche bashing.

Watch Harris v Pinker on risk starting 47:00 through 1:20:00. Evil grin.

If you’re against Pinker you stand with Harris. Dissonance inducing source reflection from the Dork Web. Harris contemplates emergence of misaligned AI and Pinker says that’s a huge step made. Harris defends Bostrom against Pinker. Pinker attacks the foom (from his butt?) and our implicit projection of human motivations. He dismantles AI fearmongering by contrasting its pathway to existence with how human minds evolved in a competitive landscape. Why would fooming AI seek to dominate? And he punctures the scala naturae argument by going to knowledge built by Popperian conjecture-refutation cycles, which machines could do faster, a difference of degree not kind. Pinker dismisses AGI (hi John). He goes to Swiss Army knife fragmentation of human capacity into modules.

At 1:11:27 Stuart Russell becomes a topic. Harris counters Pinker’s quotation of Russell wrt building bridges. Touché, Sam!

So is Harris right about AI as a threat?