The novel Snow Crash analogized human minds to computer operating systems and suggested that they could be just as susceptible to bad code, like a mind virus. There’s a lot to like about the idea, but the book takes it very literally and has people’s brains being wiped and taken over by a mere brief exposure to a potent meme…which is ridiculous, isn’t it?

Maybe it would take repeated exposures to do that.

We’re doing the experiment right now. Facebook has these “content moderators”, a job farmed out offsite to groups of people who are required to view hours of atrocious content on a tightly regimented schedule built on the call center model. They don’t get to escape. Someone posts a video of someone being murdered, or of a naked breast, and they have to watch it and make a call on whether it is acceptable or not, no breaks allowed. No, that’s not quite right: they get 9 minutes of “wellness” time — they have to clock in and clock out — in which they can go vomit in a trash can or weep. It sounds like a terrible job for $29,000/year. And it’s having lasting effects: PTSD and weird psychological shifts.

The moderators told me it’s a place where the conspiracy videos and memes that they see each day gradually lead them to embrace fringe views. One auditor walks the floor promoting the idea that the Earth is flat. A former employee told me he has begun to question certain aspects of the Holocaust. Another former employee, who told me he has mapped every escape route out of his house and sleeps with a gun at his side, said: “I no longer believe 9/11 was a terrorist attack.”

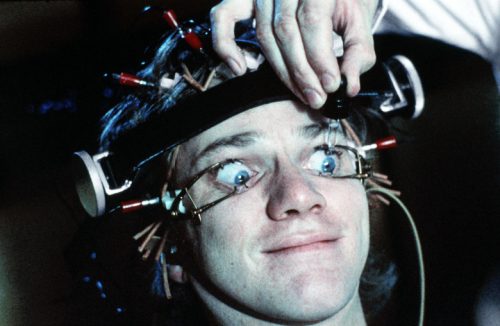

Maybe Clockwork Orange was also a documentary.

It’s not all horrifying. Most of the work involves petty and mundane complaints from people who just don’t like what other people are saying, a domain where the principle of free speech applies. The company, other than the routine fact that it’s run by micromanaging assholes, is above average in how it treats its workers (which tells you what kinds of horrors are thriving under capitalism everywhere else, of course).

Everyone I meet at the site expresses great care for the employees, and appears to be doing their best for them, within the context of the system they have all been plugged into. Facebook takes pride in the fact that it pays contractors at least 20 percent above minimum wage at all of its content review sites, provides full healthcare benefits, and offers mental health resources that far exceed that of the larger call center industry.

And yet the more moderators I spoke with, the more I came to doubt the use of the call center model for content moderation. This model has long been standard across big tech companies — it’s also used by Twitter and Google, and therefore YouTube. Beyond cost savings, the benefit of outsourcing is that it allows tech companies to rapidly expand their services into new markets and languages. But it also entrusts essential questions of speech and safety to people who are paid as if they were handling customer service calls for Best Buy.

I think part of the problem is that we treat every incident as just another trivial conversational transaction, yet that is the least worrisome aspect of social media. There are obsessives who engage in constant harassment, and this approach just looks at it instance by instance, which means the obsessive simply has to escalate to try and get through. It ignores the possibility of planned maliciousness, where organizations use the tools of propaganda and psychological manipulation to spread damaging ideas. You check one of their memes, they simply reroute around that one and try other probes with exactly the same intent. No one can stop and say, “Hey, this is coming from a bot farm, shut it down at the source” or “This guy is getting increasingly vicious toward this girl: kill his account, and make sure he doesn’t get another one”. It’s all about popping zits rather than treating the condition.

As long as Facebook and Twitter and Google persist in pretending this is a superficial symptom rather than a serious intrinsic problem with their model of “community”, this is a problem that will not go away.

Yes, you are right. They do think it is a superficial problem, because to them it is just a P.R. problem and the abuses do not negatively affect the bottom line much. It might be that they see controversy promoting more views and more traffic, which is the product they sell, so it affects them not at all in the short run.

They may tell themselves and us that they sell community, and that may even have been what they were trying to do when they started out. But the millions of dollars a day they get come from selling access to their users’ views and “meta data”, which is not selling community, unless by that it is meant selling the community out.

uncle frogy

It’s out of control. Last week, YouTube suspended over 400 accounts after pædophiles were found openly hanging out in the comments on videos of children, making lewd comments and swapping links to actual child porn; this week, they’ve had to pull a bunch of videos targeted at children because they contained fucking instructions on how to commit suicide. WTF is wrong with people?!

(Rhetorical question: a heady combination of the Greater Internet Fuckwad Theory and FREEZE PEACH fetishism, as evidenced by Mano’s recent post on the phenomenon of “First Amendment auditors”, is what’s wrong, IMO)

Gotta say, I’m disappointed that some of these “regular people” (the moderators) can be swayed by some of the crap that gets posted, like Holocaust denials and pretty much anything the religious right posts. I was somehow raised to be a cynic, and I highly recommend that cynicism be taught as a basic skill in schools. I find it’s one of the few traits that’s necessary to my survival.

markkernes, it amuses me that you consider yourself to be a cynic, yet admit to that disappointment.

(You seriously expected better?)

Just read the article. This seems to me to be a suitable job for a psychopath; they’d not be so vulnerable to PTSD, and the metrics would keep them in line.

Implied but otherwise passed over is the fact that to Facebook, a breast and a human murder are equally offensive.