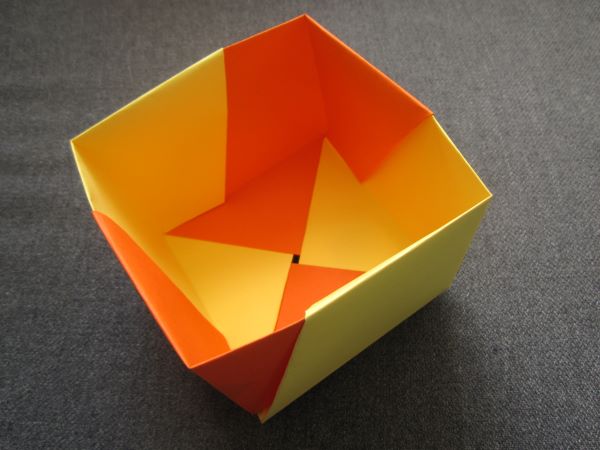

Striped Box, designed by me

Once a year, I run a little origami class for kids on behalf of someone I know. As a self-imposed constraint, I always teach modular origami. It's hard to find modular origami models simple enough for kids to finish in a reasonable amount of time!

I'd been wanting to make a modular origami box, and a big one, so that it could hold other origami inside. So I bought some colored A4 paper and looked around for a simple box design. None of the ones I found were quite to my liking, so I made my own. There's no lid for this box, because we're keeping it simple. I have folding diagrams if you'd like to try it.